Section 9 Statistical Testing of Method Settings

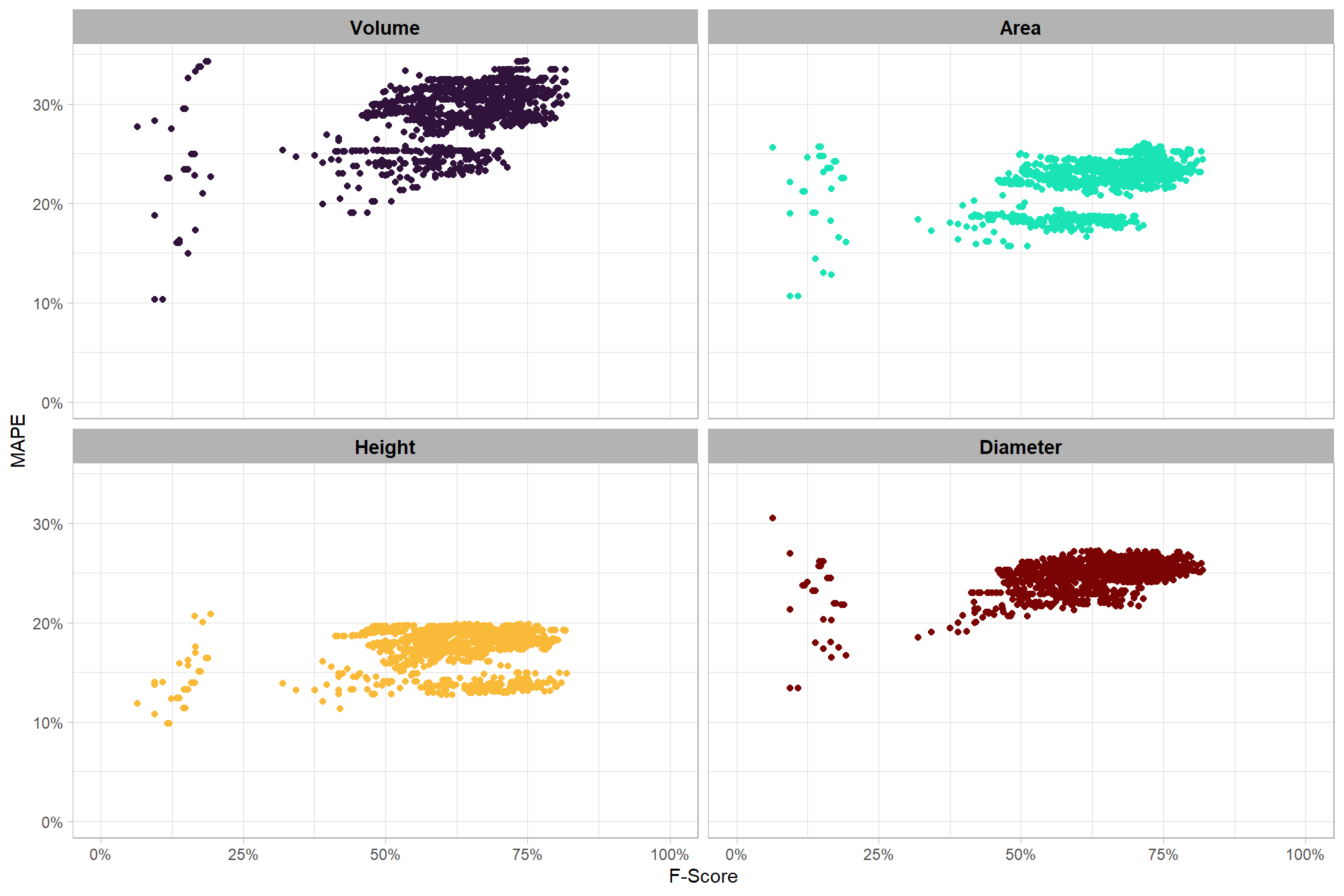

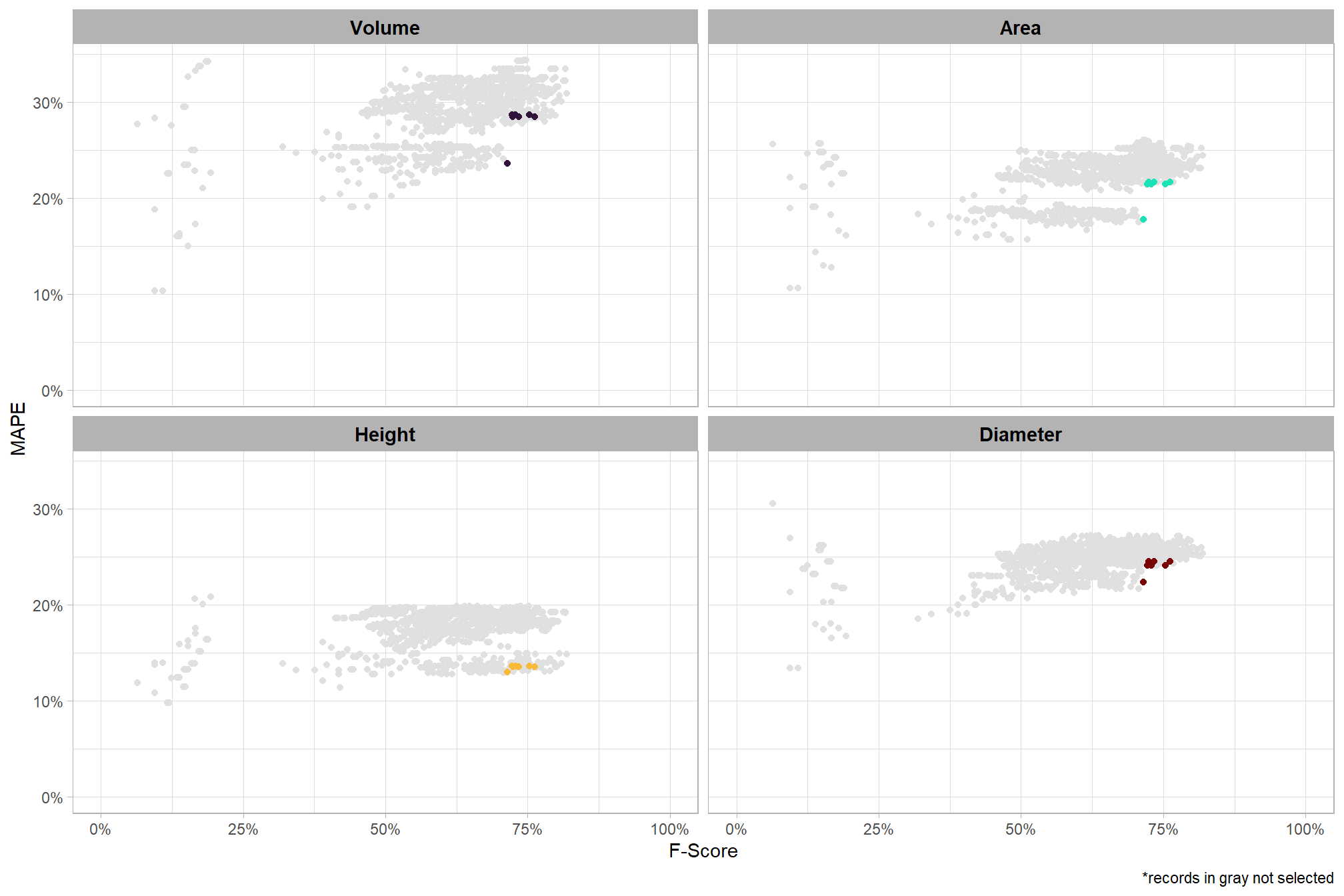

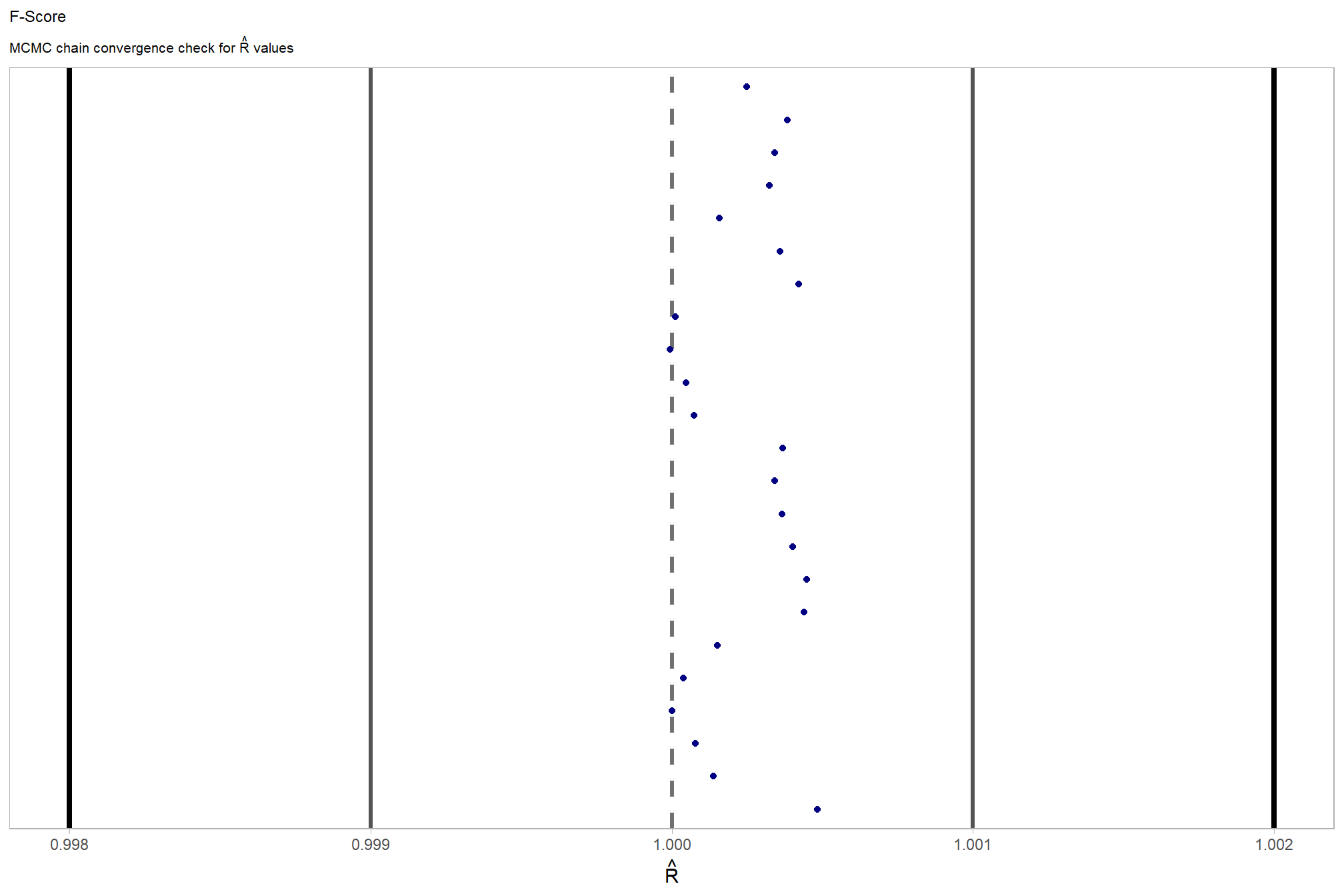

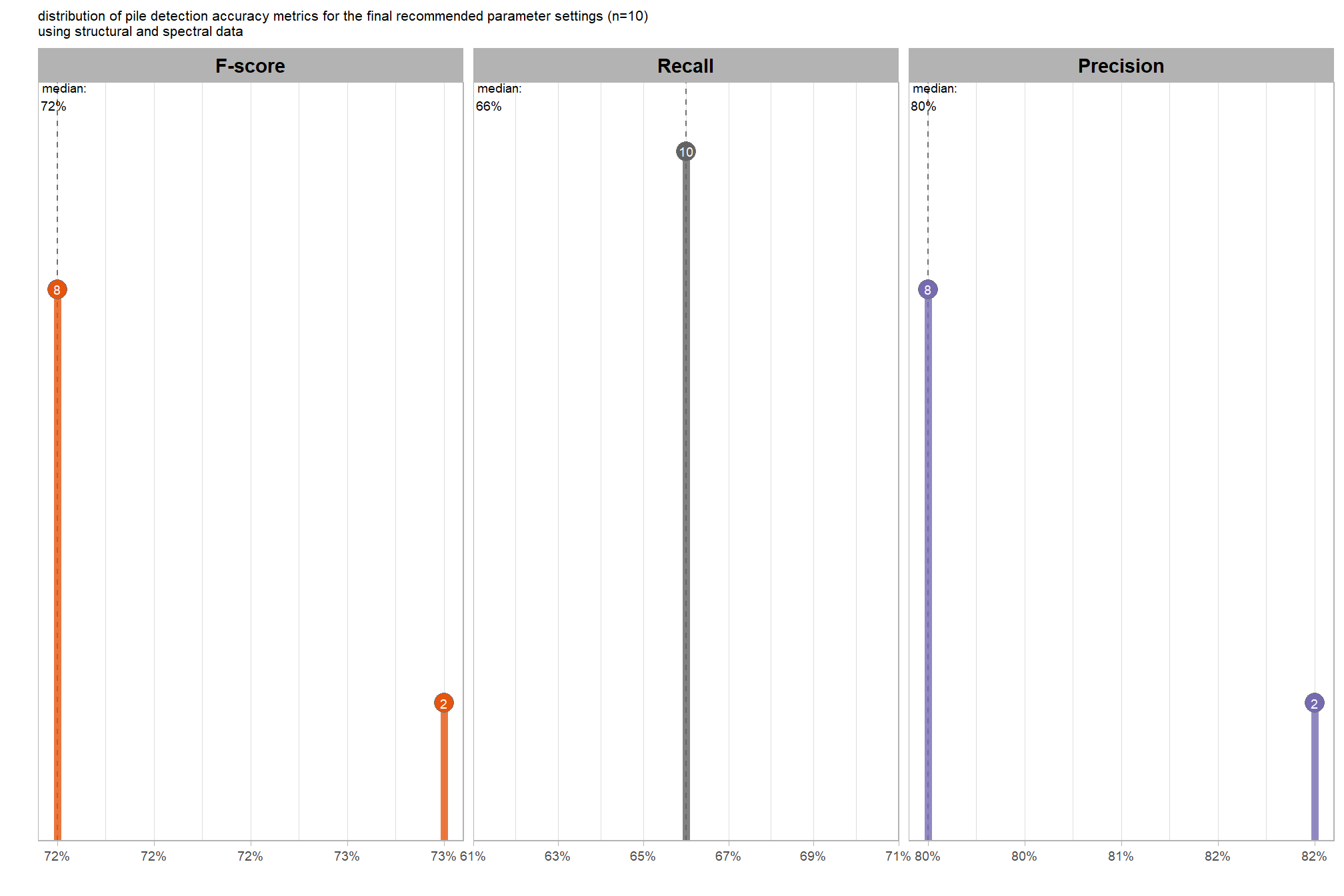

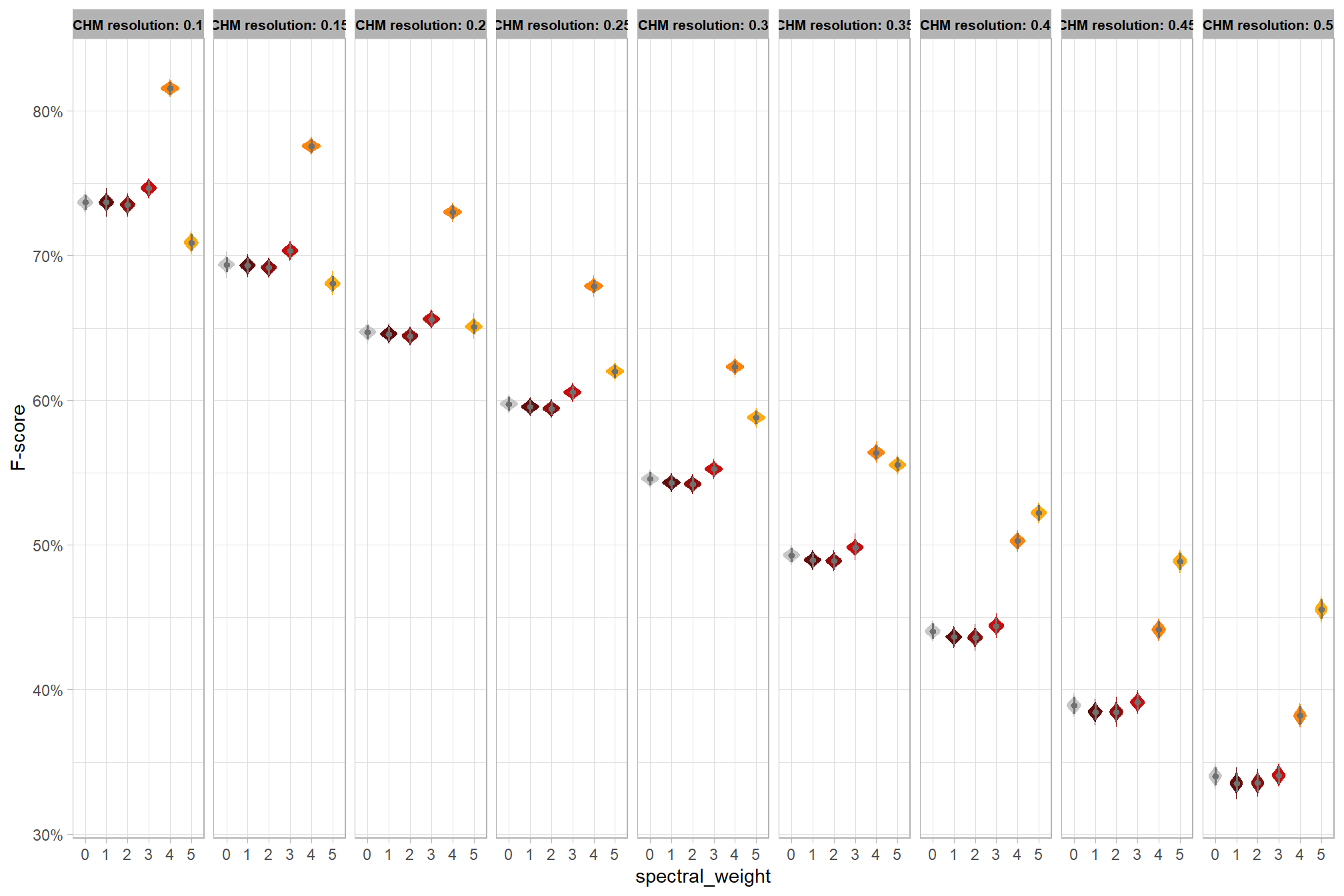

In this prior section we performed sensitivity testing of our slash pile detection method using multiple CHM raster resolutions and ended up testing tens of thousands of possible parameterizations and input data combinations. using the tested parameter and data input combinations, we’re going build statistical models to quantify the influence of these parameters and input data on pile detection and quantification accuracy. we’ll utilize a Bayesian modelling framework which will allow us to probabilistically quantify parameter influence while accounting for uncertainty.

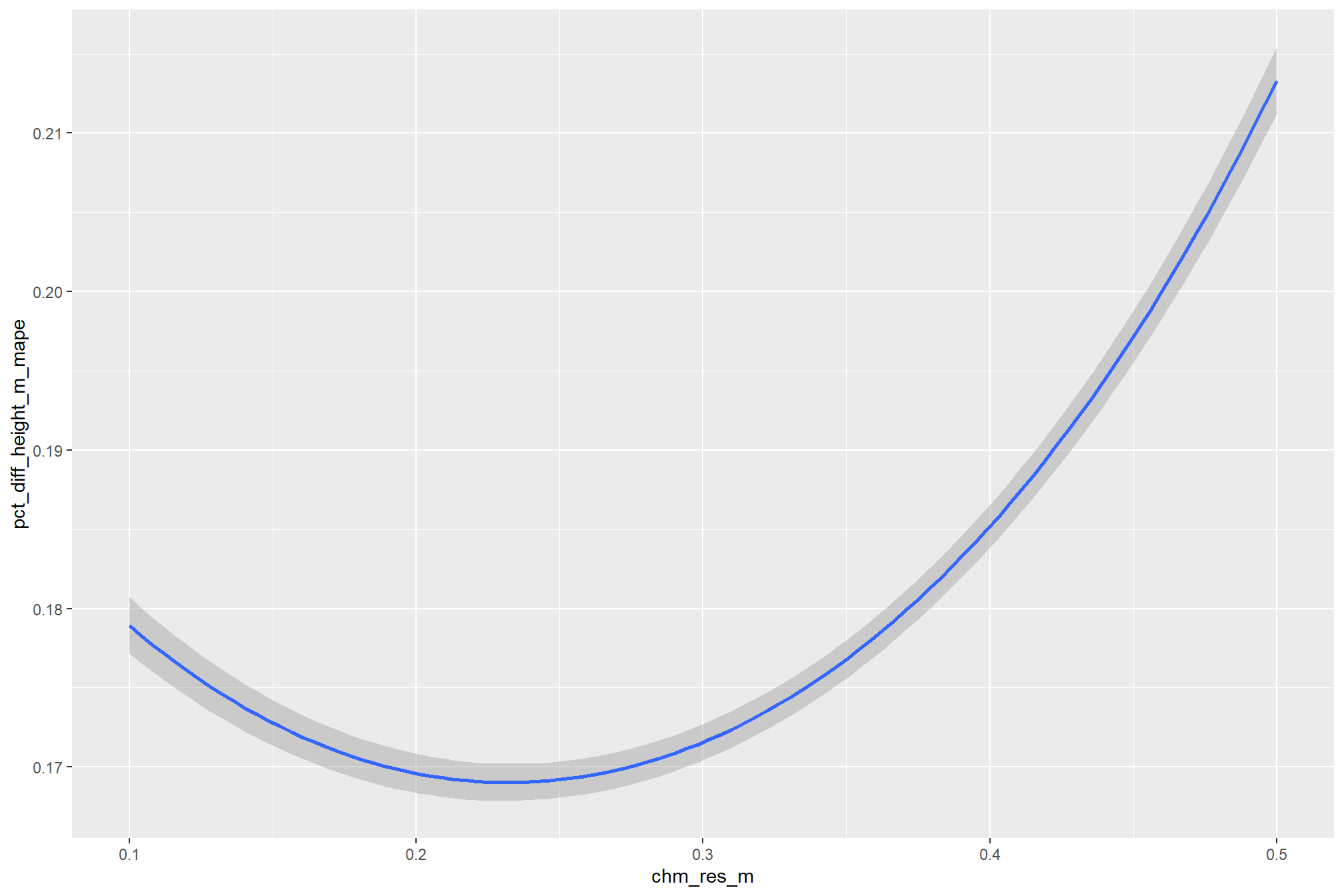

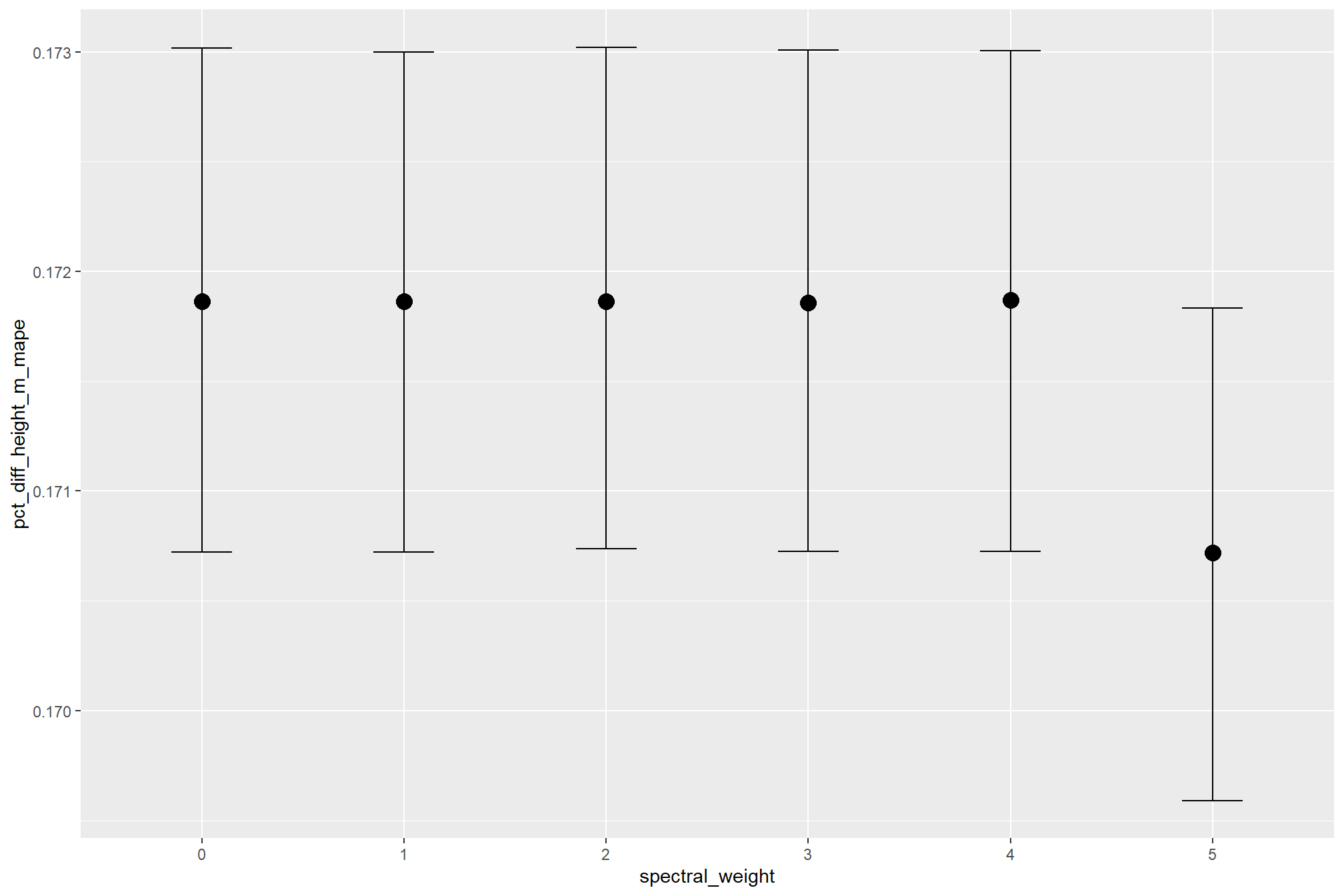

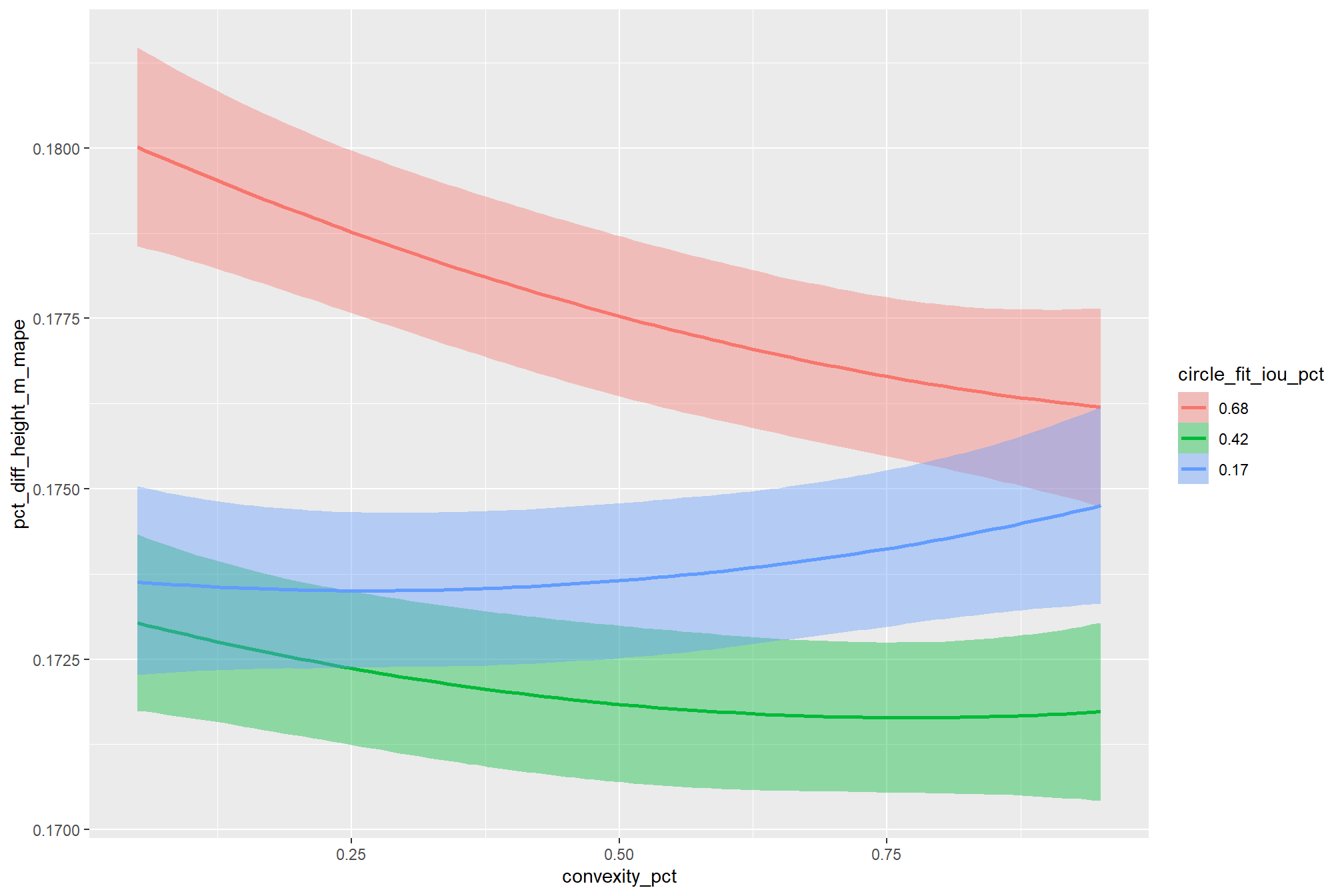

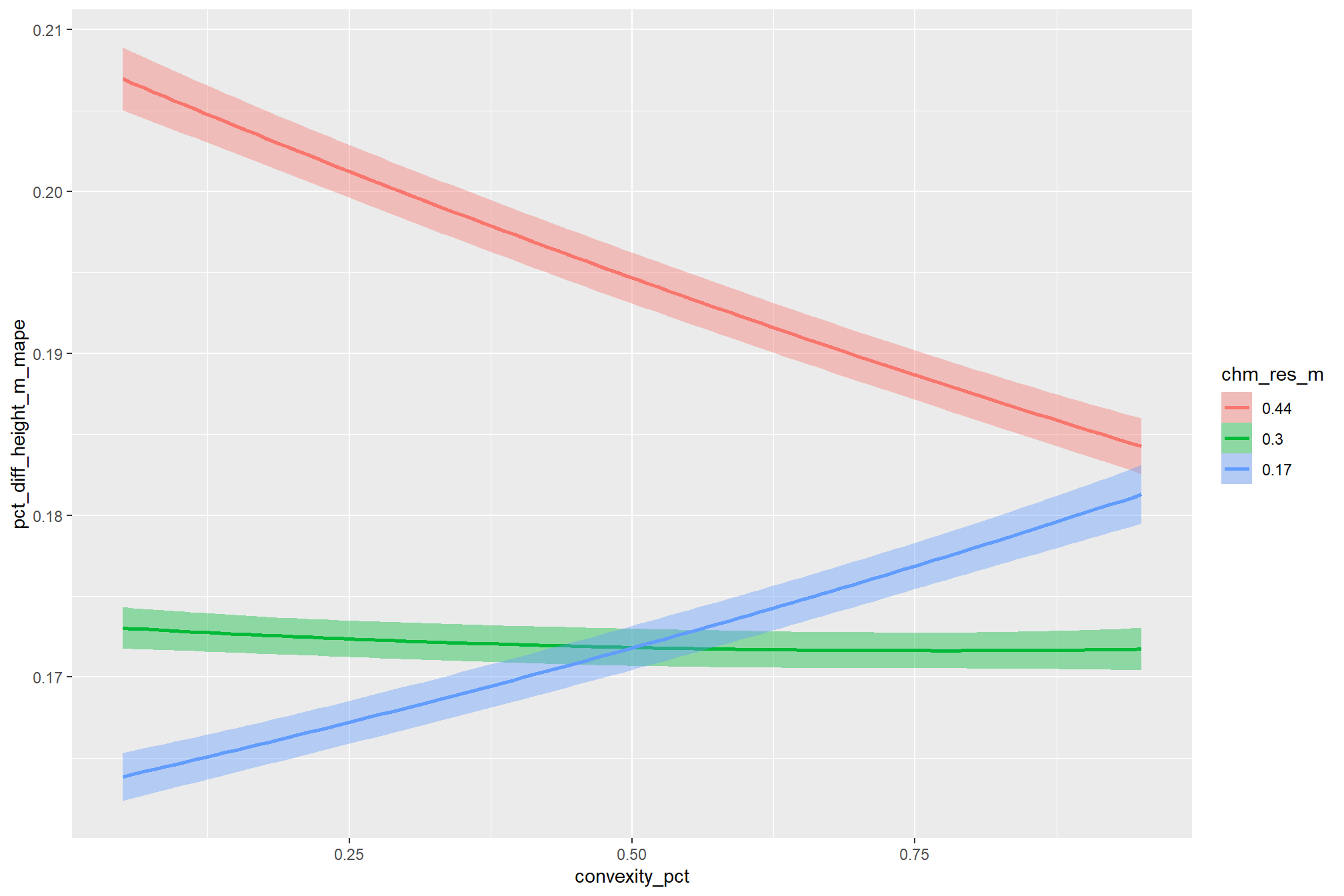

here are some of the hypotheses about the slash pile detection methodology that we’ll be exploring:

- does CHM resolution influences detection and quantification accuracy?

- does the effect of CHM resolution change based on the inclusion of spectral data versus using only structural data?

- does the use of spectral data have a meaningful impact on detection and quantification accuracy

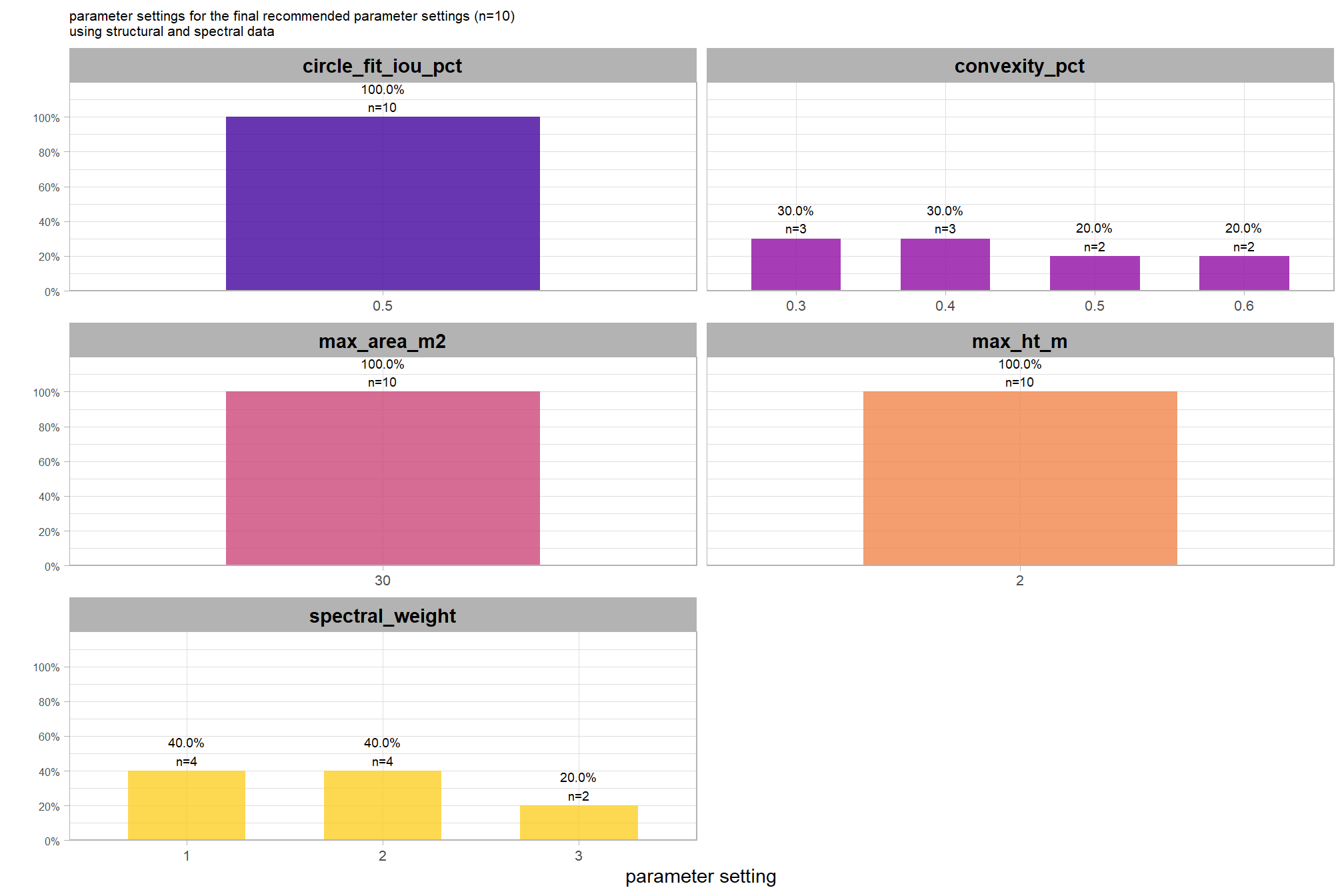

our analysis data set will be the param_combos_spectral_ranked data which includes accuracy measurements at the parameter combination level using both structural data only as well as structural and spectral data in our data fusion approach. in this data, a row is unique by the full set of parameters and input data tested: max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m, spectral_weight. there are four structural parameters: max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct which are used to determine candidate slash piles from the CHM data alone, and the chm_res_m and spectral_weight parameters represent the input data with spectral_weight classifying if spectral data was not used (i.e. spectral_weight = 0), or if spectral data was used, what the weighting of that spectral data was on a 1-5 scale where the number represents the number of individual spectral index thresholds that must be met for a candidate pile detected from the structural data to be kept. for example, a value of “5” requires that all spectral criteria be met and will result in more candidate piles being filtered out than a value of “3”. See this section for full details on the data fusion approach.

we’ll read in the sensitivity test result data which includes point estimates of detection and form quantification accuracy if it’s not already in memory

if( length(ls()[grep("param_combos_ranked",ls())])!=1 ){

param_combos_ranked <- readr::read_csv(file.path("../data", "param_combos_ranked.csv"), progress = F, show_col_types = F)

}

if( length(ls()[grep("param_combos_spectral_ranked",ls())])!=1 ){

param_combos_spectral_ranked <- readr::read_csv(file.path("../data", "param_combos_spectral_ranked.csv"), progress = F, show_col_types = F)

}

# convert spectral weight to factor for modelling

param_combos_spectral_ranked <- param_combos_spectral_ranked %>%

dplyr::mutate(

spectral_weight = factor(spectral_weight)

# replace 0 F-score with very small positive to run GLM models

, f_score = ifelse(f_score==0,1e-4,f_score)

)

# check out this data

param_combos_spectral_ranked %>%

dplyr::select(

max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m

, spectral_weight, spectral_weight_fact

, f_score, precision, recall

, tidyselect::ends_with("_mape")

) %>%

dplyr::glimpse()## Rows: 35,280

## Columns: 13

## $ max_ht_m <dbl> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 4, 4, …

## $ max_area_m2 <dbl> 40, 50, 50, 50, 50, 50, 60, 60, 60, 60, 60, 5…

## $ convexity_pct <dbl> 0.80, 0.05, 0.20, 0.35, 0.50, 0.65, 0.05, 0.2…

## $ circle_fit_iou_pct <dbl> 0.50, 0.65, 0.65, 0.65, 0.65, 0.65, 0.65, 0.6…

## $ chm_res_m <dbl> 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, …

## $ spectral_weight <fct> 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, …

## $ spectral_weight_fact <fct> structural+spectral, structural+spectral, str…

## $ f_score <dbl> 0.8740157, 0.8870968, 0.8870968, 0.8870968, 0…

## $ precision <dbl> 0.8345865, 0.8661417, 0.8661417, 0.8661417, 0…

## $ recall <dbl> 0.9173554, 0.9090909, 0.9090909, 0.9090909, 0…

## $ pct_diff_area_m2_mape <dbl> 0.1063683, 0.1013882, 0.1013882, 0.1013882, 0…

## $ pct_diff_diameter_m_mape <dbl> 0.10231758, 0.09905328, 0.09905328, 0.0990532…

## $ pct_diff_height_m_mape <dbl> 0.1851636, 0.1822841, 0.1822841, 0.1822841, 0…a row is unique by the full set of parameters tested: max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m, spectral_weight

# a row is unique by max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m, and spectral_weight

identical(

param_combos_spectral_ranked %>% dplyr::distinct(max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m, spectral_weight) %>% nrow()

, param_combos_spectral_ranked %>% nrow()

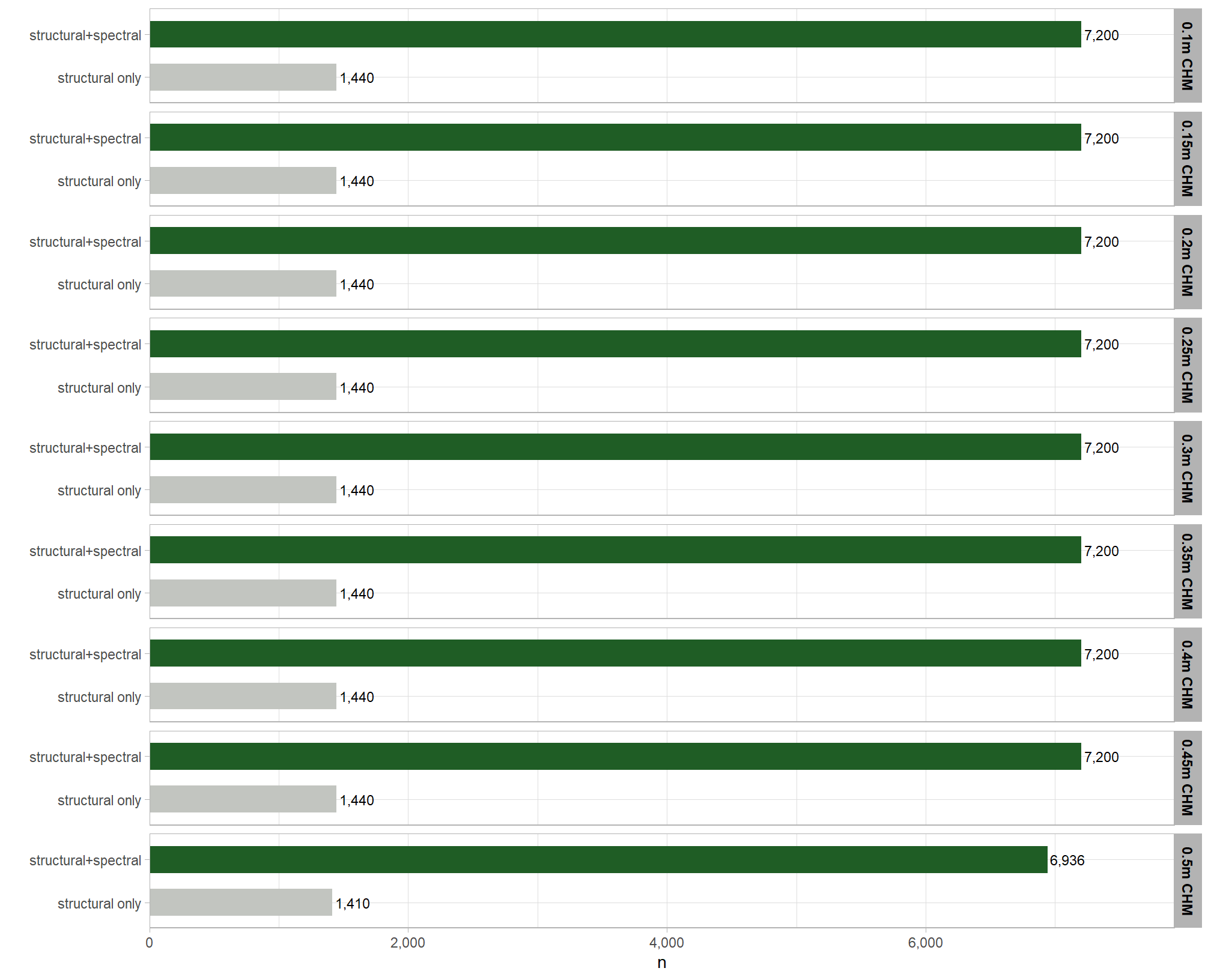

)## [1] TRUEhere are the number of records which returned valid predicted slash pile polygons by CHM resolution and data input setting (i.e. structural only versus data fusion). the number of records for the data fusion approach (“structural+spectral”) should be roughly five times the number of records as the structural only approach because we tested five different settings of the structural_weight parameter from the lowest weighting of the spectral data of “1” (only one spectral index threshold must be met) to the highest weighting of spectral data “5” (all spectral index thresholds must be met)

param_combos_spectral_ranked %>%

dplyr::count(chm_res_m_desc,spectral_weight_fact) %>%

ggplot2::ggplot(mapping = ggplot2::aes(x=n,y=spectral_weight_fact, color = spectral_weight_fact, fill = spectral_weight_fact)) +

ggplot2::geom_col(width = 0.6) +

ggplot2::geom_text(

mapping = ggplot2::aes(label=scales::comma(n))

, color = "black", size = 3

, hjust = -0.1

) +

ggplot2::facet_grid(rows = dplyr::vars(chm_res_m_desc)) +

harrypotter::scale_fill_hp_d(option = "slytherin") +

harrypotter::scale_color_hp_d(option = "slytherin") +

ggplot2::scale_x_continuous(labels = scales::comma, expand = ggplot2::expansion(mult = c(0,.1))) +

ggplot2::labs(y="") +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "none"

, strip.text.y = ggplot2::element_text(size = 9, color = "black", face = "bold")

)

9.1 Bayesian GLM - F-score

given that our data contains only one observation per parameter combination, we’re going to use a Bayesian Beta generalized linear model (GLM) to ensure a statistically sound approach and interpretable relationships between each parameter and the dependent variable (e.g. F-score). our model will treat the parameters as a mix of continuous and nominal variables, preventing model saturation (where the model has as many parameters to estimate as data points, so the data perfectly explains the model). A Bayesian hierarchical model would not be appropriate for this structure, since it is designed for datasets with nested or grouped observations (e.g. if we had evaluated the method across different plots or study sites).

Our Bayesian Beta regression models the F-score with a Beta distribution because it is a proportion between 0 and 1, which ensures that the predictions and uncertainty estimates are always within the valid range. We’re treating the four structural parameters (e.g. max_ht_m and circle_fit_iou_pct) and the CHM resolution (chm_res_m) as metric (i.e., continuous) variables, as this is statistically sound for our data and allows for a continuous interpretation where the model coefficient will represent the change in F-score for a one-unit change in the parameter value. The spectral_weight parameter, however, will be treated as nominal to capture its discrete effects without assuming a linear relationship.

9.1.1 Model selection

we’re going to use a sub-sample of the data to perform model testing. our objective is to construct the model such that it faithfully represents the data.

we reviewed the main effect parameter trends against F-score here and used these to guide our model design. we’ll follow Kurz 2025 and compare our models with the LOO information criterion

Like other information criteria, the LOO values aren’t of interest in and of themselves. However, the values of one model’s LOO relative to that of another is of great interest. We generally prefer models with lower estimates.

# subsample data

set.seed(222)

ms_df_temp <- param_combos_spectral_ranked %>% dplyr::slice_sample(prop = 0.11)

# mcmc setup

iter_temp <- 2444

warmup_temp <- 1222

chains_temp <- 4

####################################################################

# base model with form selected based on main effect trends

####################################################################

fscore_mod1_temp <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 + convexity_pct +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = ms_df_temp

, family = Beta(link = "logit")

# mcmc

, iter = iter_temp, warmup = warmup_temp

, chains = chains_temp

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "fscore_mod1_temp")

)

fscore_mod1_temp <- brms::add_criterion(fscore_mod1_temp, criterion = "loo")

####################################################################

# allows slope and curvature of circle_fit_iou_pct to vary by chm_res_m and vice-versa

####################################################################

fscore_mod2_temp <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 + convexity_pct +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

circle_fit_iou_pct:chm_res_m + # changed from base model

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = ms_df_temp

, family = Beta(link = "logit")

# mcmc

, iter = iter_temp, warmup = warmup_temp

, chains = chains_temp

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "fscore_mod2_temp")

)

fscore_mod2_temp <- brms::add_criterion(fscore_mod2_temp, criterion = "loo")

####################################################################

# allows slope and curvature of circle_fit_iou_pct to vary by convexity_pct and vice-versa

####################################################################

fscore_mod3_temp <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 + convexity_pct +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

circle_fit_iou_pct:convexity_pct + # changed from base model

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = ms_df_temp

, family = Beta(link = "logit")

# mcmc

, iter = iter_temp, warmup = warmup_temp

, chains = chains_temp

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "fscore_mod3_temp")

)

fscore_mod3_temp <- brms::add_criterion(fscore_mod3_temp, criterion = "loo")

####################################################################

# a three-way interaction of circle_fit_iou_pct, convexity_pct and chm_res_m

####################################################################

fscore_mod4_temp <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 + convexity_pct +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

circle_fit_iou_pct:convexity_pct + # changed from base model

circle_fit_iou_pct:chm_res_m + # changed from base model

convexity_pct:chm_res_m + # changed from base model

circle_fit_iou_pct:chm_res_m:convexity_pct + # changed from base model

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = ms_df_temp

, family = Beta(link = "logit")

# mcmc

, iter = iter_temp, warmup = warmup_temp

, chains = chains_temp

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "fscore_mod4_temp")

)

fscore_mod4_temp <- brms::add_criterion(fscore_mod4_temp, criterion = "loo")

####################################################################

# a three-way interaction of circle_fit_iou_pct, convexity_pct and chm_res_m. quadratic convexity_pct

####################################################################

fscore_mod5_temp <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

convexity_pct + I(convexity_pct^2) + # changed from base model

circle_fit_iou_pct:convexity_pct + # changed from base model

circle_fit_iou_pct:chm_res_m + # changed from base model

convexity_pct:chm_res_m + # changed from base model

circle_fit_iou_pct:chm_res_m:convexity_pct + # changed from base model

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = ms_df_temp

, family = Beta(link = "logit")

# mcmc

, iter = iter_temp, warmup = warmup_temp

, chains = chains_temp

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "fscore_mod5_temp")

)

fscore_mod5_temp <- brms::add_criterion(fscore_mod4_temp, criterion = "loo")compare our models with the LOO information criterion. with the brms::loo_compare() function, we can compute a formal difference score between models with the output rank ordering the models such that the best fitting model appears on top. all models also receive a difference score relative to the best model and a standard error of the difference score

brms::loo_compare(fscore_mod1_temp, fscore_mod2_temp, fscore_mod3_temp, fscore_mod4_temp, fscore_mod5_temp) %>%

kableExtra::kbl(caption = "F-score model selection with LOO information criterion") %>%

kableExtra::kable_styling()| elpd_diff | se_diff | elpd_loo | se_elpd_loo | p_loo | se_p_loo | looic | se_looic | |

|---|---|---|---|---|---|---|---|---|

| fscore_mod4_temp | 0.0000 | 0.00000 | 4254.854 | 139.8614 | 26.42988 | 1.441628 | -8509.709 | 279.7227 |

| fscore_mod5_temp | 0.0000 | 0.00000 | 4254.854 | 139.8614 | 26.42988 | 1.441628 | -8509.709 | 279.7227 |

| fscore_mod3_temp | -216.3790 | 26.76322 | 4038.475 | 139.5926 | 27.71362 | 1.931427 | -8076.951 | 279.1851 |

| fscore_mod2_temp | -229.8413 | 28.35355 | 4025.013 | 138.5435 | 27.54291 | 1.893650 | -8050.026 | 277.0871 |

| fscore_mod1_temp | -234.9112 | 30.47721 | 4019.943 | 139.0671 | 27.27559 | 1.942107 | -8039.886 | 278.1342 |

we can also look at the AIC-type model weights

brms::model_weights(fscore_mod1_temp, fscore_mod2_temp, fscore_mod3_temp, fscore_mod4_temp, fscore_mod5_temp) %>%

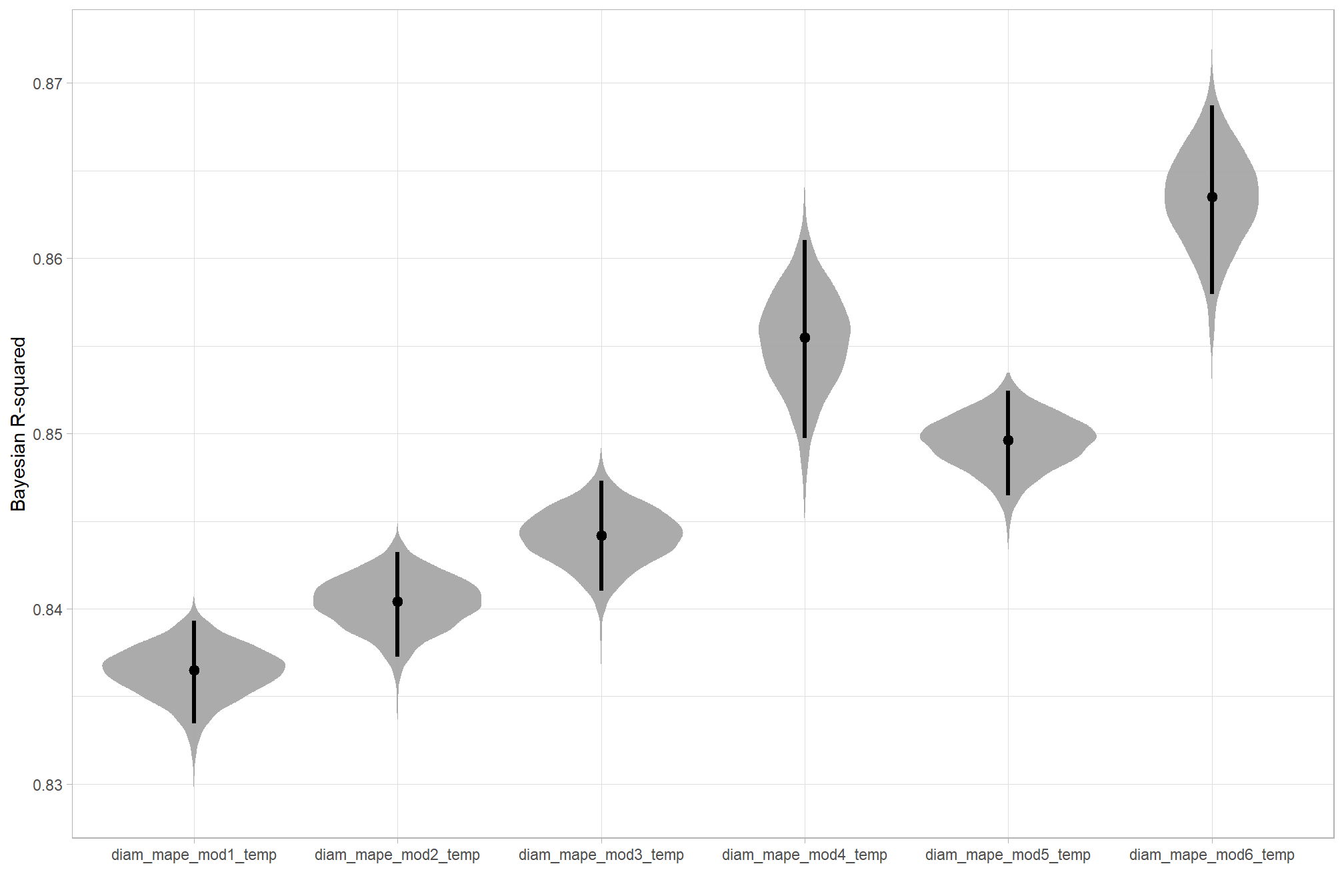

round(digits = 4)we can also quickly look at the Bayeisan \(R^2\) returned from the brms::bayes_R2() function

dplyr::bind_rows(

brms::bayes_R2(fscore_mod1_temp,summary=F) %>% dplyr::as_tibble() %>% dplyr::mutate(mod = "fscore_mod1_temp")

, brms::bayes_R2(fscore_mod2_temp,summary=F) %>% dplyr::as_tibble() %>% dplyr::mutate(mod = "fscore_mod2_temp")

, brms::bayes_R2(fscore_mod3_temp,summary=F) %>% dplyr::as_tibble() %>% dplyr::mutate(mod = "fscore_mod3_temp")

, brms::bayes_R2(fscore_mod4_temp,summary=F) %>% dplyr::as_tibble() %>% dplyr::mutate(mod = "fscore_mod4_temp")

, brms::bayes_R2(fscore_mod5_temp,summary=F) %>% dplyr::as_tibble() %>% dplyr::mutate(mod = "fscore_mod5_temp")

) %>%

dplyr::mutate(mod = factor(mod)) %>%

ggplot2::ggplot(mapping=ggplot2::aes(y=R2, x = mod)) +

tidybayes::stat_eye(

point_interval = median_hdi, .width = .95

, slab_alpha = 0.95

) +

# ggplot2::facet_grid(cols = dplyr::vars(spectral_weight)) +

# ggplot2::scale_fill_manual(values = pal_chm_res_m) +

ggplot2::labs(x = "", y = "Bayesian R-squared") +

ggplot2::theme_light()

the more complex models were selected as the best. while our model evaluation indicated that the more parsimonious model with fewer parameters was comparable to the most complex model tested, we’ll the more complex model (fscore_mod5_temp) model for our based on the AIC-type model weights. because the selected model includes a quadratic term and multiple interactions parameter interpretation will be a challenge, so we will have to rely on plotting the modeled relationships rather than trying to interpret the coefficients.

9.1.2 Modeling

the four structural parameters (e.g. max_ht_m and circle_fit_iou_pct) and the CHM resolution (chm_res_m) as metric (i.e., continuous) variables. we include an interaction between chm_res_m and spectral_weight to directly compare the effect of CHM resolution with and without the use of spectral data.

we’ll generally follow Kurz (2023a; 2023b; 2025 for multiple linear regression model building using the brms Bayesian model framework based on McElreath (2015, Ch. 5,7) and Kruschke (2015, Ch. 18)

the fully factored Bayesian statistical model that details the likelihood, linear model, and priors used is:

\[\begin{align*} \text{F-score}_i \sim & \operatorname{Beta}(\mu_{i}, \phi) \\ \operatorname{logit}(\mu_i) = & (\beta_1 \cdot \text{max_ht_m}_i) + (\beta_2 \cdot \text{max_area_m2}_i) \\ & + (\beta_3 \cdot \text{circle_fit_iou_pct}_i) + (\beta_4 \cdot (\text{circle_fit_iou_pct}_i)^2) \\ & + (\beta_5 \cdot \text{convexity_pct}_i) + (\beta_6 \cdot (\text{convexity_pct}_i)^2) \\ & + (\beta_7 \cdot \text{chm_res_m}_i) \\ & + \sum_{j=0}^{5} \left( \beta_{8, j} \cdot \mathbf{I}(\text{spectral_weight}_i = j) \right) \\ & + (\beta_9 \cdot \text{circle_fit_iou_pct}_i \cdot \text{convexity_pct}_i) \\ & + (\beta_{10} \cdot \text{circle_fit_iou_pct}_i \cdot \text{chm_res_m}_i) \\ & + (\beta_{11} \cdot \text{convexity_pct}_i \cdot \text{chm_res_m}_i) \\ & + \sum_{j=0}^{5} \left( \beta_{12, j} \cdot \text{chm_res_m}_i \cdot \mathbf{I}(\text{spectral_weight}_i = j) \right) \\ & + (\beta_{13} \cdot \text{circle_fit_iou_pct}_i \cdot \text{chm_res_m}_i \cdot \text{convexity_pct}_i) \\ \beta_k \sim & \operatorname{Normal}(0, \sigma_k) \quad \text{for } k = 0, \dots, 13 \\ \sigma_k \sim & \operatorname{Student T}(3,0,2.5) \quad \text{for } k = 0, \dots, 13 \\ \phi \sim & \operatorname{Gamma}(0.01,0.01) \\ \end{align*}\]

where, \(i\) represents a single observation in the dataset which corresponds to a specific combination of the six parameters (max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct, chm_res_m, and spectral_weight) and its resulting F-score. Where k is used to index the different beta coefficients, which correspond to the intercept and the effects of each of the independent variables and their interactions and j denotes the specific level of the nominal (i.e. categorical) predictor spectral_weight

let’s fit the model using the brms framework to fit Bayesian regression models using the Stan probabilistic programming language. if we want to set the prior for \(\beta_0\) given a non-centered predictors, then we need to use the 0 + Intercept syntax to fit the model (see Kurz 2025 for full discussion), but we’ll just fit the model with the brsm::brm() default settings which automatically mean centers the predictors and also set the intercept to 0 so that we get explicit coefficient estimates for each level of our spectral_weight nominal variable which determines the intercept in this model

The table below details the terms used in our Bayesian GLM model defined in the brms::brm() call:

| Term in Formula | Type of Effect | Description of Relationship Tested |

|---|---|---|

0 + |

Zero Intercept | Specifies that the model is fit without a global intercept (baseline is determined by the combination of all factor levels). |

max_ht_m |

Main Effect (Linear) | Tests the direct, isolated linear influence of the maximum pile height threshold on the F-score. |

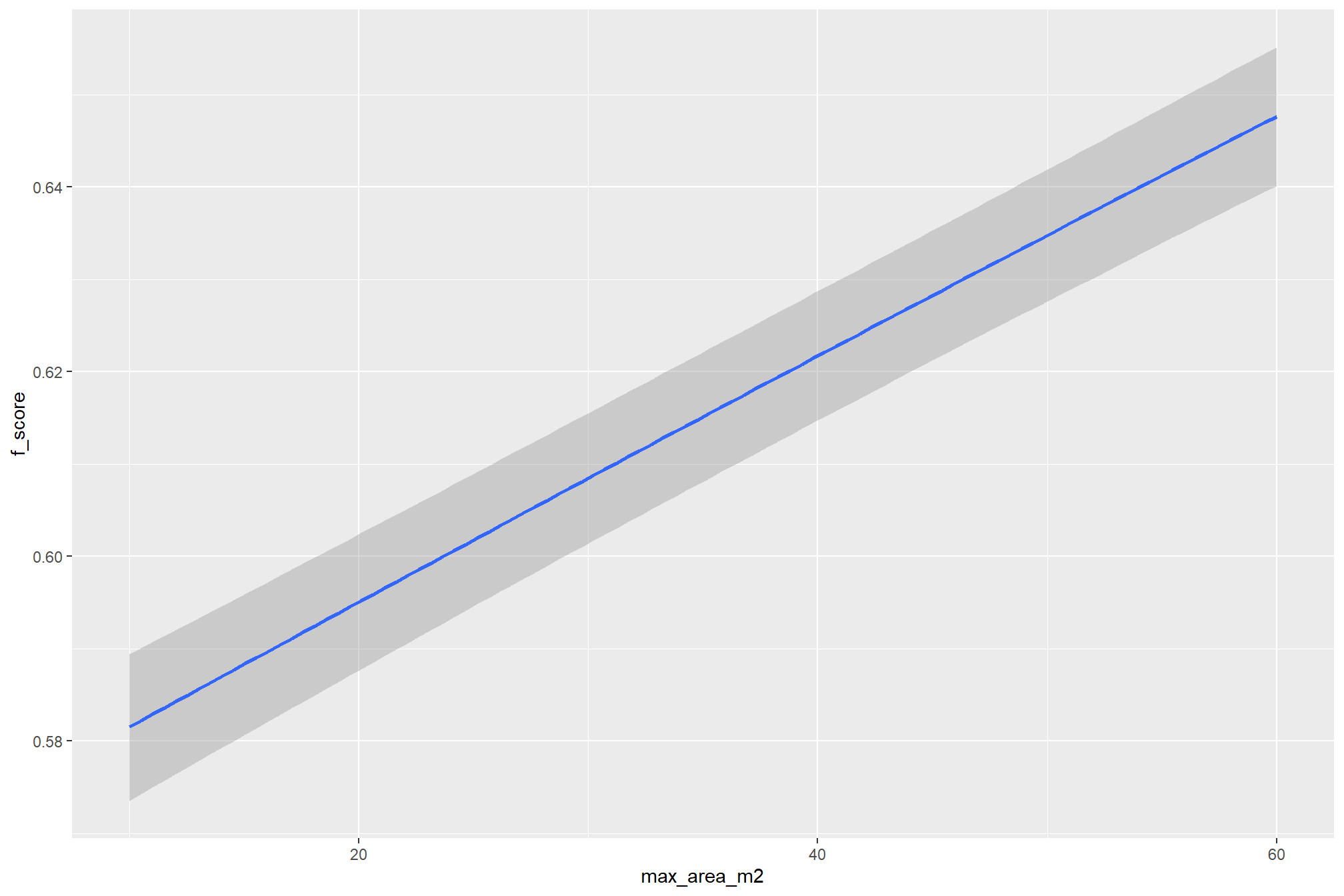

max_area_m2 |

Main Effect (Linear) | Tests the direct, isolated linear influence of the maximum pile area threshold on the F-score. |

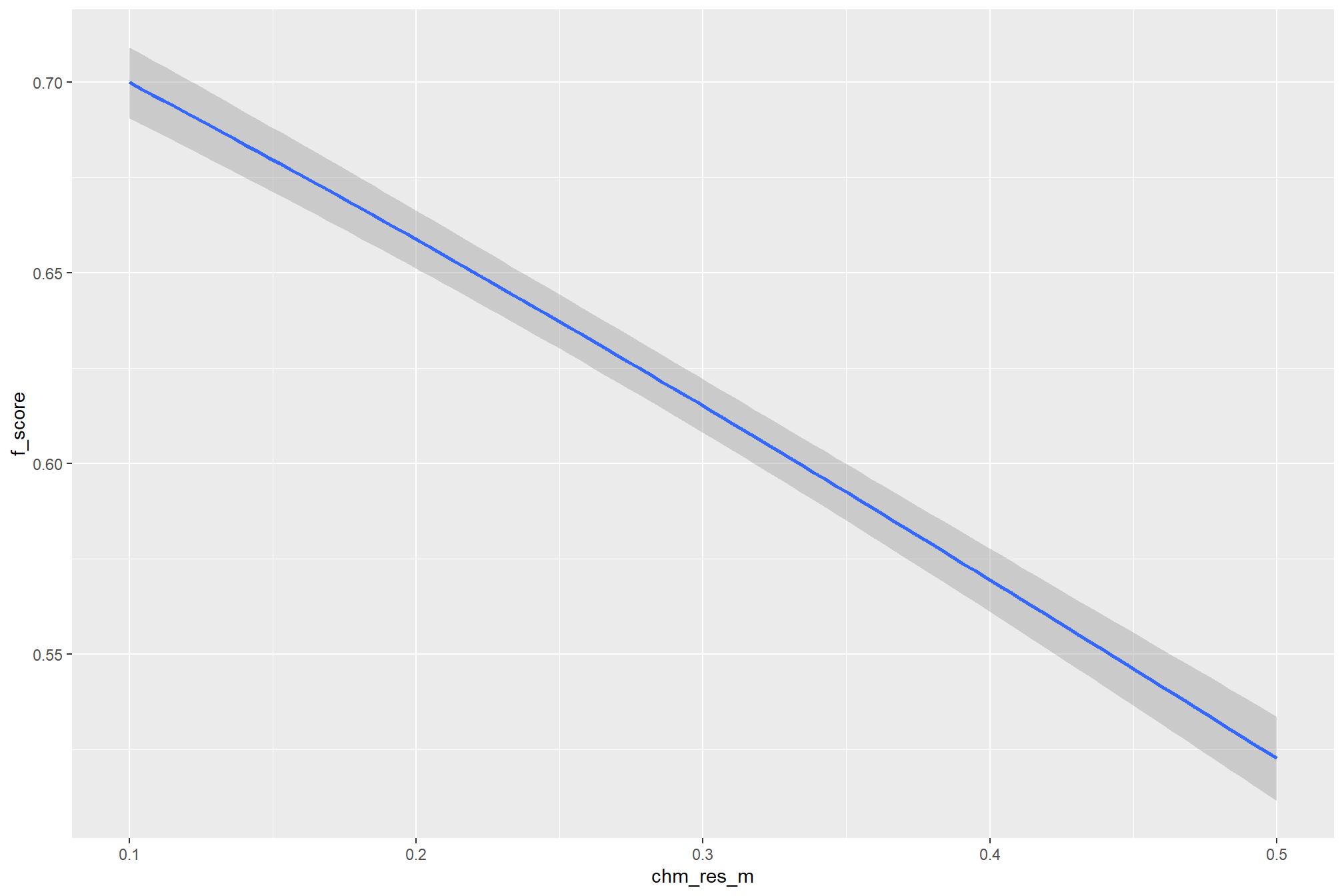

chm_res_m |

Main Effect (Linear) | Tests the direct, isolated linear influence of the input Canopy Height Model (CHM) resolution on the F-score. |

spectral_weight |

Main Effect (Factor) | The model estimates a separate coefficient for each of the six spectral weight levels (0 through 5). This coefficient represents the estimated mean F-score for that specific spectral weight level when all continuous variables are zero. |

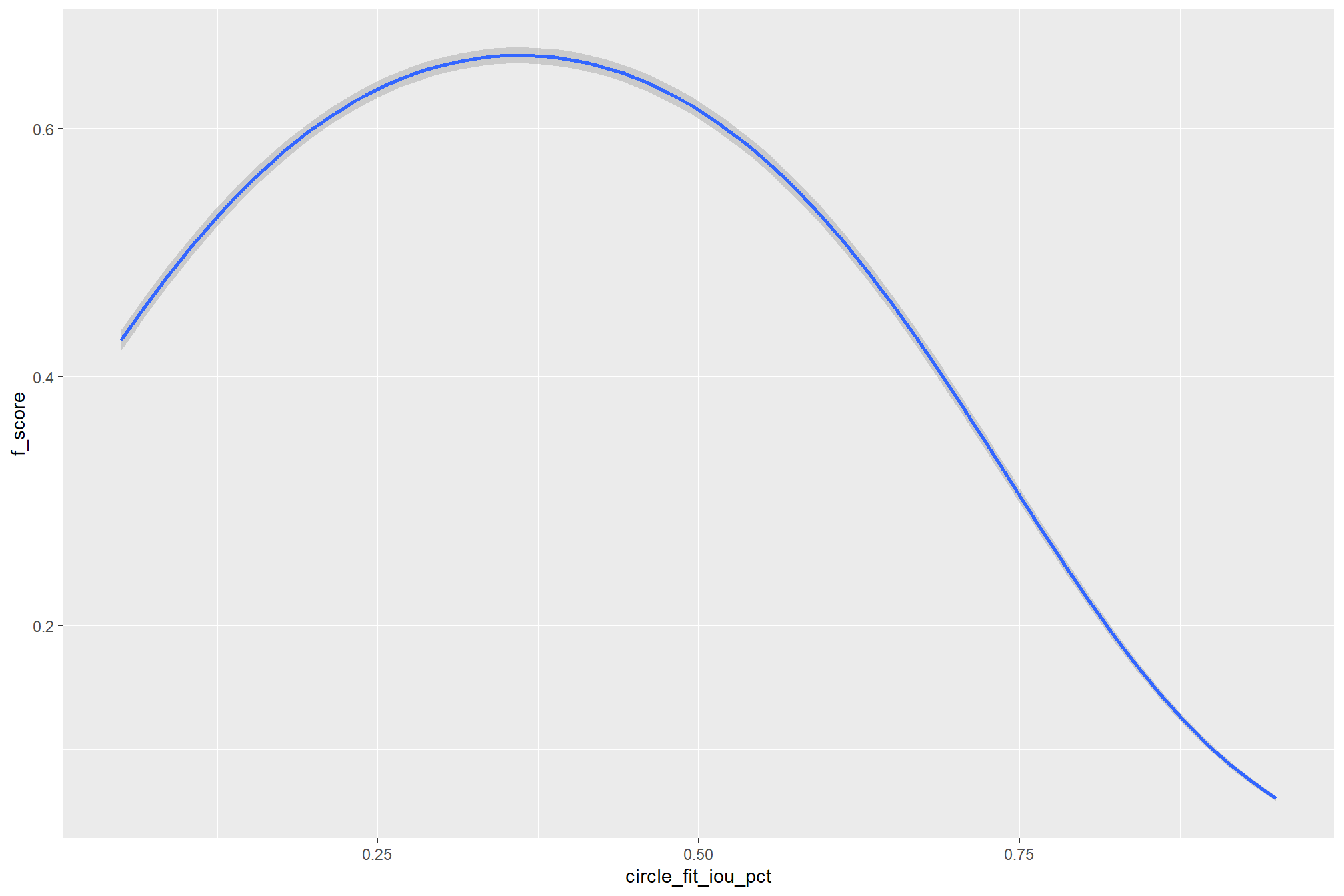

circle_fit_iou_pct |

Main Effect (Linear) | Tests the direct linear influence of the pile’s circular conformity threshold on the F-score. |

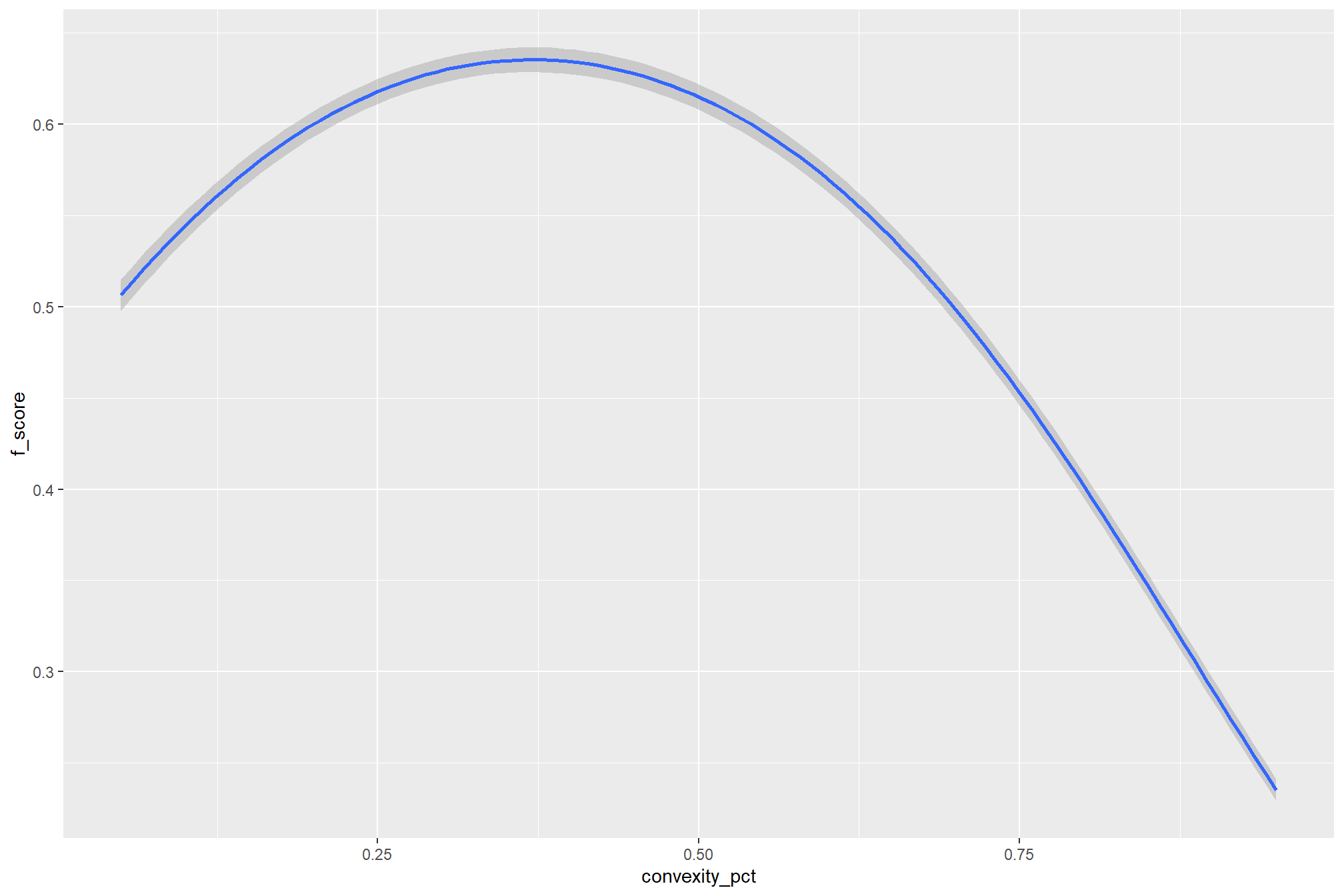

convexity_pct |

Main Effect (Linear) | Tests the direct linear influence of the pile’s boundary smoothness (convexity) threshold on the F-score. |

I(circle_fit_iou_pct^2) |

Nonlinear (Quadratic) | Models a curved relationship where the F-score may peak or bottom out at an intermediate threshold for pile circularity. |

I(convexity_pct^2) |

Nonlinear (Quadratic) | Models a curved relationship where the F-score may peak or bottom out at an intermediate threshold for pile boundary smoothness. |

circle_fit_iou_pct:convexity_pct |

Two-Way Interaction | Captures how the optimal balance between pile circular conformity and boundary smoothness changes for the F-score. |

chm_res_m:spectral_weight |

Two-Way Interaction (Factor) | Captures how the effect of CHM resolution on the F-score changes across each of the six spectral weighting levels. |

circle_fit_iou_pct:chm_res_m |

Two-Way Interaction | Captures how the importance of the pile’s circular conformity threshold changes as the input data resolution changes. |

convexity_pct:chm_res_m |

Two-Way Interaction | Captures how the sensitivity to the pile boundary smoothness threshold changes with the input data resolution. |

circle_fit_iou_pct:chm_res_m:convexity_pct |

Three-Way Interaction | The most complex term, showing how the combined effects of the circularity and convexity thresholds change simultaneously across different input CHM resolutions. |

brms_f_score_mod <- brms::brm(

formula = f_score ~

0 + # no intercept to allow all values of spectral_weight to be shown instead of set as the baseline

max_ht_m + max_area_m2 +

circle_fit_iou_pct + I(circle_fit_iou_pct^2) +

convexity_pct + I(convexity_pct^2) + # changed from base model

circle_fit_iou_pct:convexity_pct + # changed from base model

circle_fit_iou_pct:chm_res_m + # changed from base model

convexity_pct:chm_res_m + # changed from base model

circle_fit_iou_pct:chm_res_m:convexity_pct + # changed from base model

chm_res_m + spectral_weight + chm_res_m:spectral_weight

, data = param_combos_spectral_ranked # %>% dplyr::slice_sample(prop = 0.33)

, family = Beta(link = "logit")

# , prior = c(

# brms::prior(student_t(3, 0, 5), class = "b")

# , brms::prior(gamma(0.01, 0.01), class = "phi")

# )

# mcmc

, iter = 14000, warmup = 7000

, chains = 4

# , control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = lasR::half_cores()

, file = paste0("../data/", "brms_f_score_mod")

)

# brms::make_stancode(brms_f_score_mod)

# brms::prior_summary(brms_f_score_mod)

# print(brms_f_score_mod)

# brms::neff_ratio(brms_f_score_mod)

# brms::rhat(brms_f_score_mod)

# brms::nuts_params(brms_f_score_mod)The brms::brm model summary

brms_f_score_mod %>%

brms::posterior_summary() %>%

as.data.frame() %>%

tibble::rownames_to_column(var = "parameter") %>%

dplyr::rename_with(tolower) %>%

dplyr::filter(

stringr::str_starts(parameter, "b_")

| parameter == "phi"

) %>%

# dplyr::mutate(

# dplyr::across(

# dplyr::where(is.numeric)

# , ~ dplyr::case_when(

# stringr::str_ends(parameter,"_pct") ~ .x*0.01 # convert to percentage point change

# , T ~ .x

# )

# )

# ) %>%

kableExtra::kbl(digits = 3, caption = "Bayesian model for F-score") %>%

kableExtra::kable_styling()| parameter | estimate | est.error | q2.5 | q97.5 |

|---|---|---|---|---|

| b_max_ht_m | -0.033 | 0.004 | -0.042 | -0.025 |

| b_max_area_m2 | 0.006 | 0.000 | 0.005 | 0.006 |

| b_circle_fit_iou_pct | 5.893 | 0.100 | 5.696 | 6.091 |

| b_Icircle_fit_iou_pctE2 | -9.792 | 0.066 | -9.923 | -9.662 |

| b_convexity_pct | 0.297 | 0.103 | 0.094 | 0.498 |

| b_Iconvexity_pctE2 | -5.165 | 0.065 | -5.291 | -5.037 |

| b_chm_res_m | -6.767 | 0.159 | -7.081 | -6.456 |

| b_spectral_weight0 | 0.897 | 0.057 | 0.786 | 1.010 |

| b_spectral_weight1 | 0.896 | 0.057 | 0.785 | 1.008 |

| b_spectral_weight2 | 0.896 | 0.057 | 0.786 | 1.009 |

| b_spectral_weight3 | 0.925 | 0.057 | 0.814 | 1.038 |

| b_spectral_weight4 | 1.209 | 0.056 | 1.099 | 1.320 |

| b_spectral_weight5 | 1.083 | 0.056 | 0.973 | 1.195 |

| b_circle_fit_iou_pct:convexity_pct | 2.815 | 0.127 | 2.569 | 3.065 |

| b_circle_fit_iou_pct:chm_res_m | 2.690 | 0.230 | 2.240 | 3.141 |

| b_convexity_pct:chm_res_m | 10.563 | 0.232 | 10.105 | 11.020 |

| b_chm_res_m:spectral_weight1 | 0.001 | 0.120 | -0.234 | 0.237 |

| b_chm_res_m:spectral_weight2 | 0.001 | 0.121 | -0.236 | 0.238 |

| b_chm_res_m:spectral_weight3 | 0.000 | 0.120 | -0.235 | 0.236 |

| b_chm_res_m:spectral_weight4 | -0.263 | 0.117 | -0.491 | -0.033 |

| b_chm_res_m:spectral_weight5 | 0.628 | 0.115 | 0.403 | 0.853 |

| b_circle_fit_iou_pct:convexity_pct:chm_res_m | -6.991 | 0.407 | -7.789 | -6.188 |

| phi | 4.170 | 0.031 | 4.110 | 4.230 |

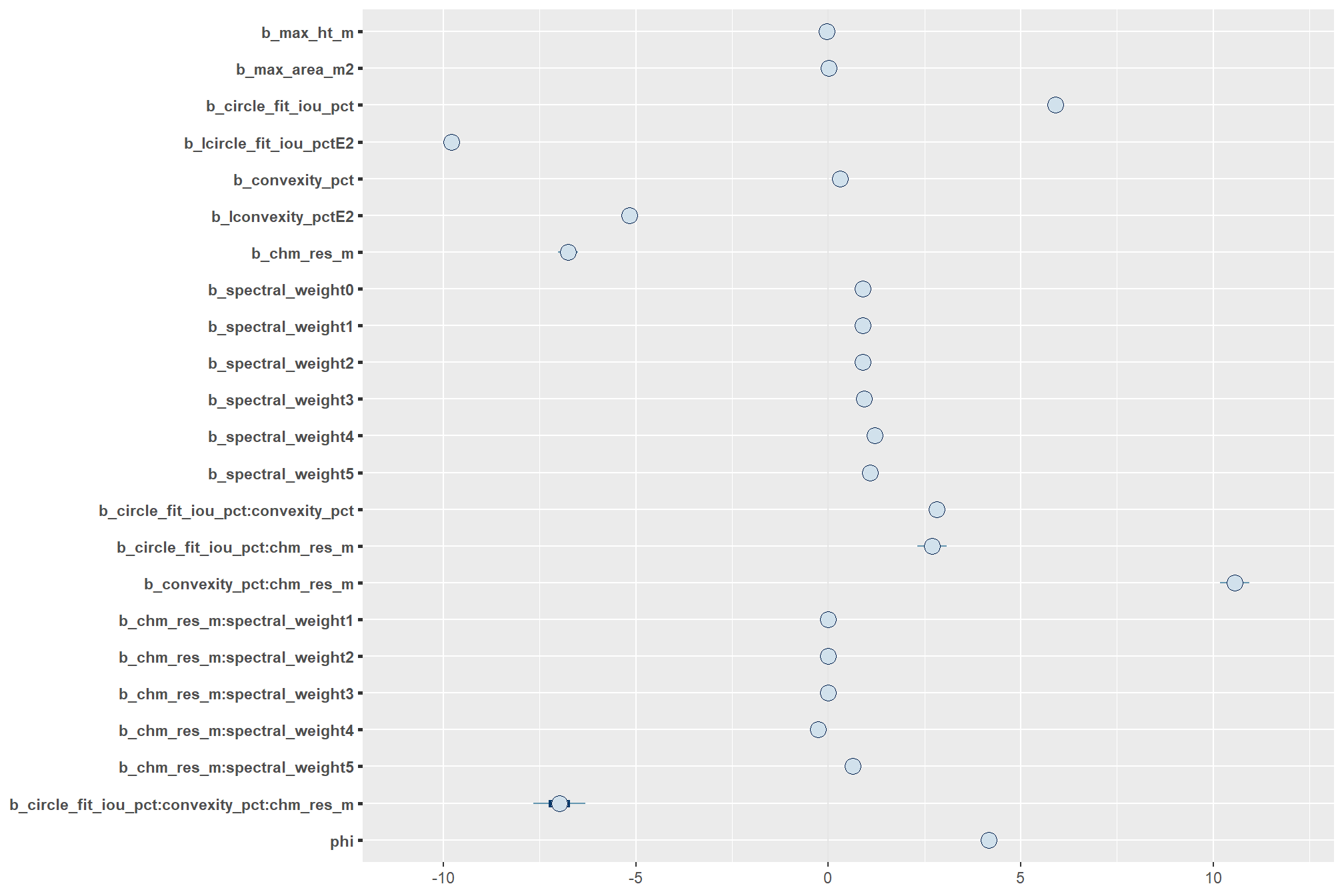

note the quadratic coefficients ending in E2, Kruschke (2015) provides some insight on how to interpret:

A quadratic has the form \(y = \beta_{0} + \beta_{1}x + \beta_{2}x^{2}\). When \(\beta_{2}\) is zero, the form reduces to a line. Therefore, this extended model can produce any fit that the linear model can. When \(\beta_{2}\) is positive, a plot of the curve is a parabola that opens upward. When \(\beta_{2}\) is negative, the curve is a parabola that opens downward. We have no reason to think that the curvature in the family-income data is exactly a parabola, but the quadratic trend might describe the data much better than a line alone. (p. 496)

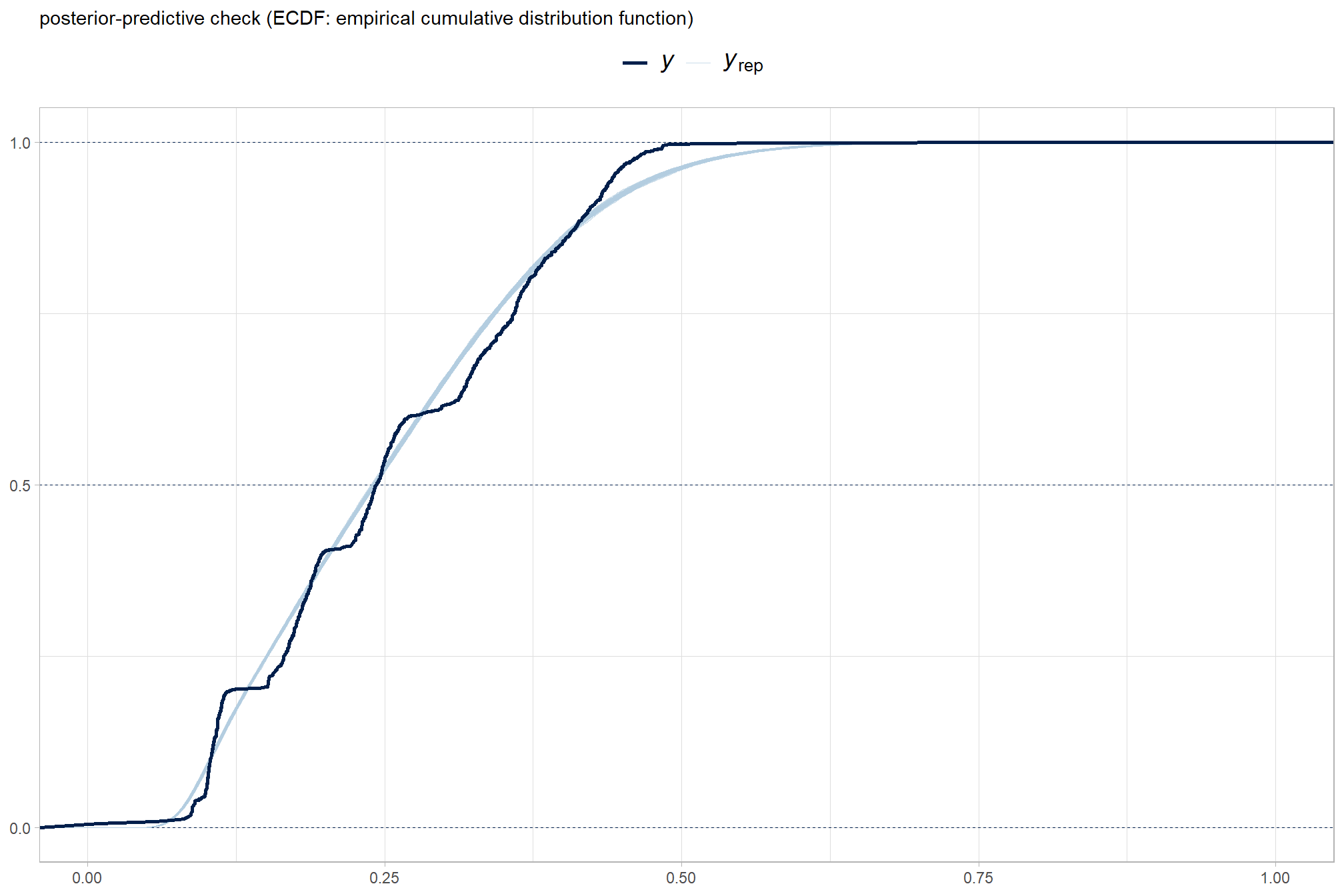

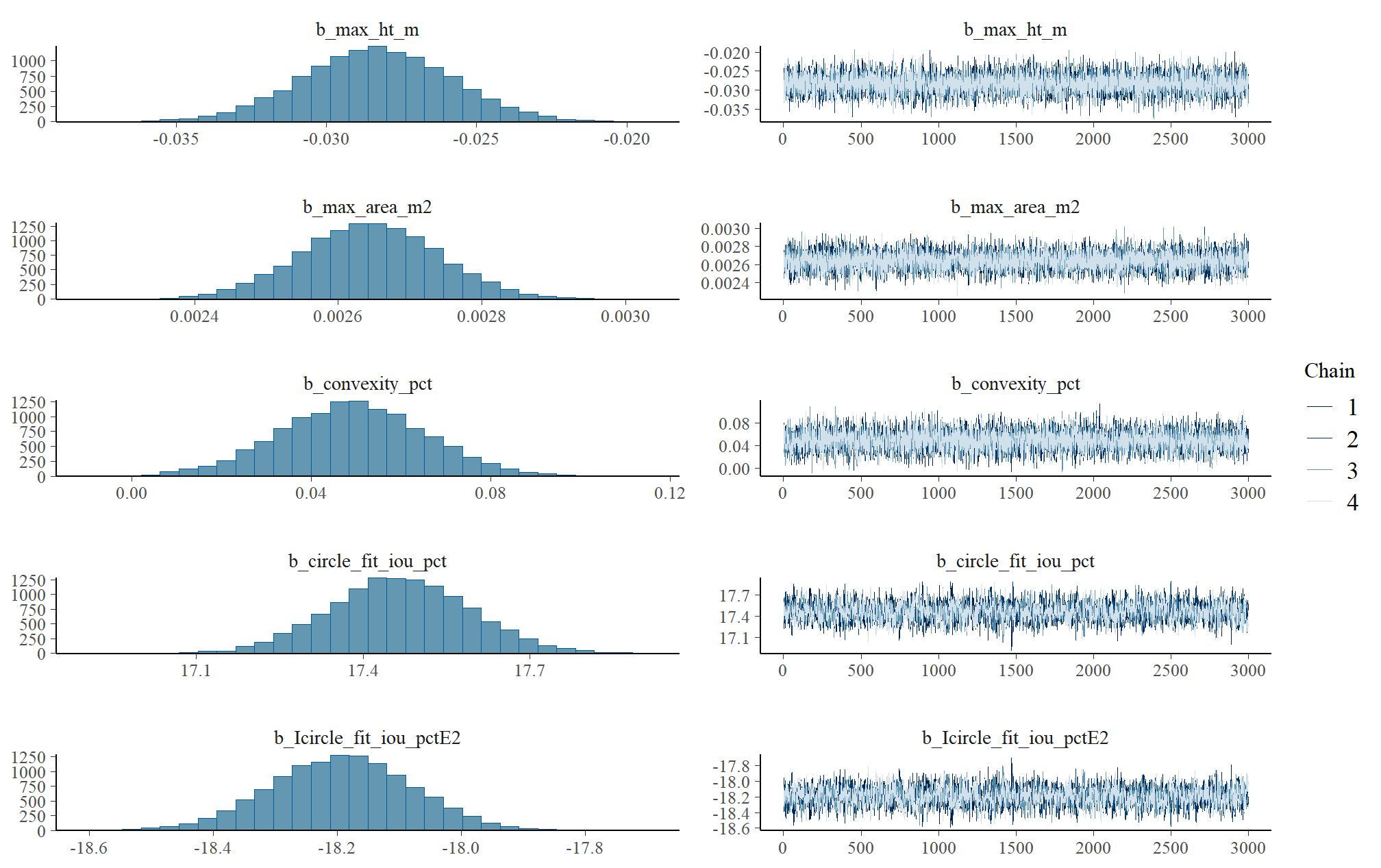

9.1.3 Posterior Predictive Checks

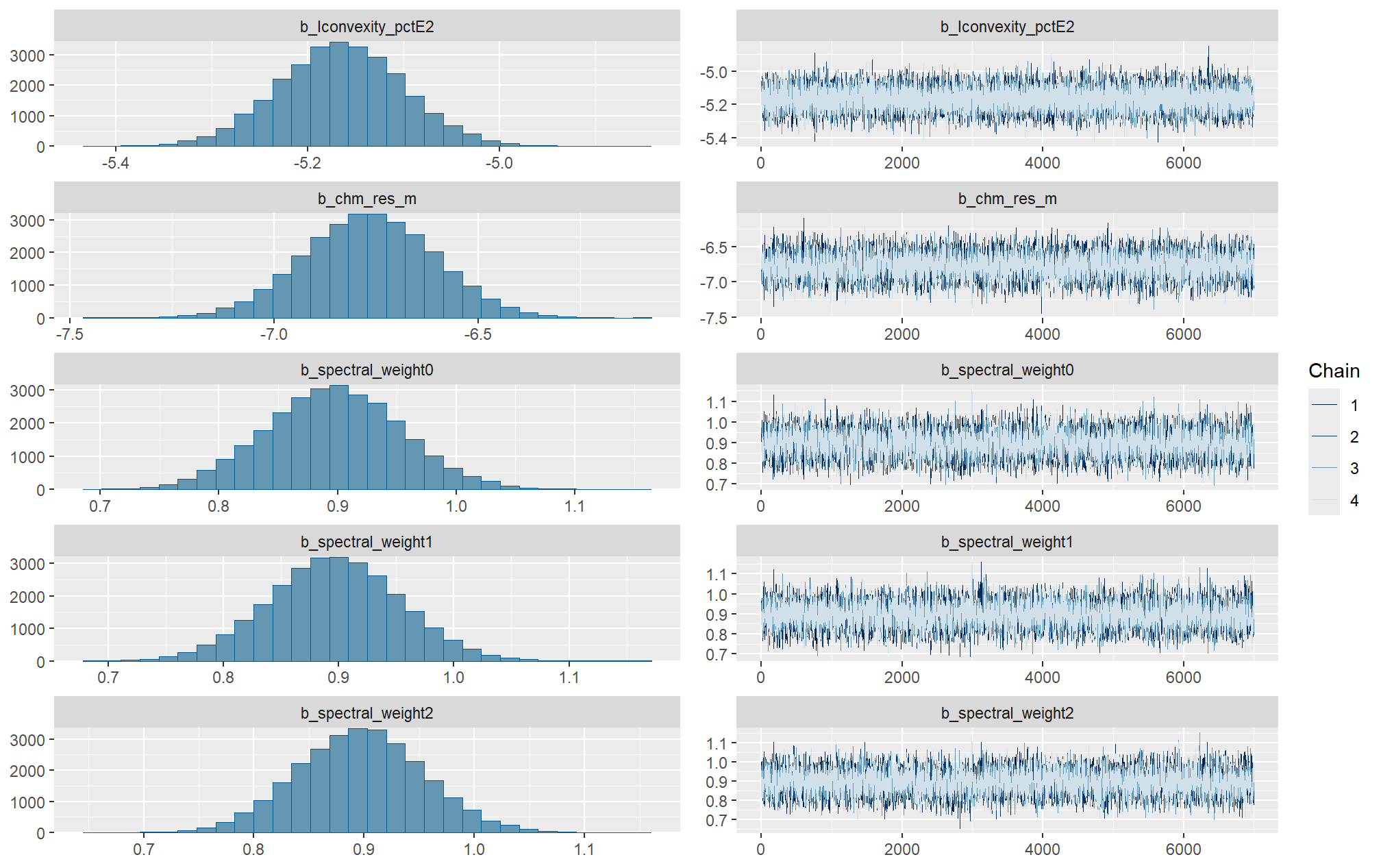

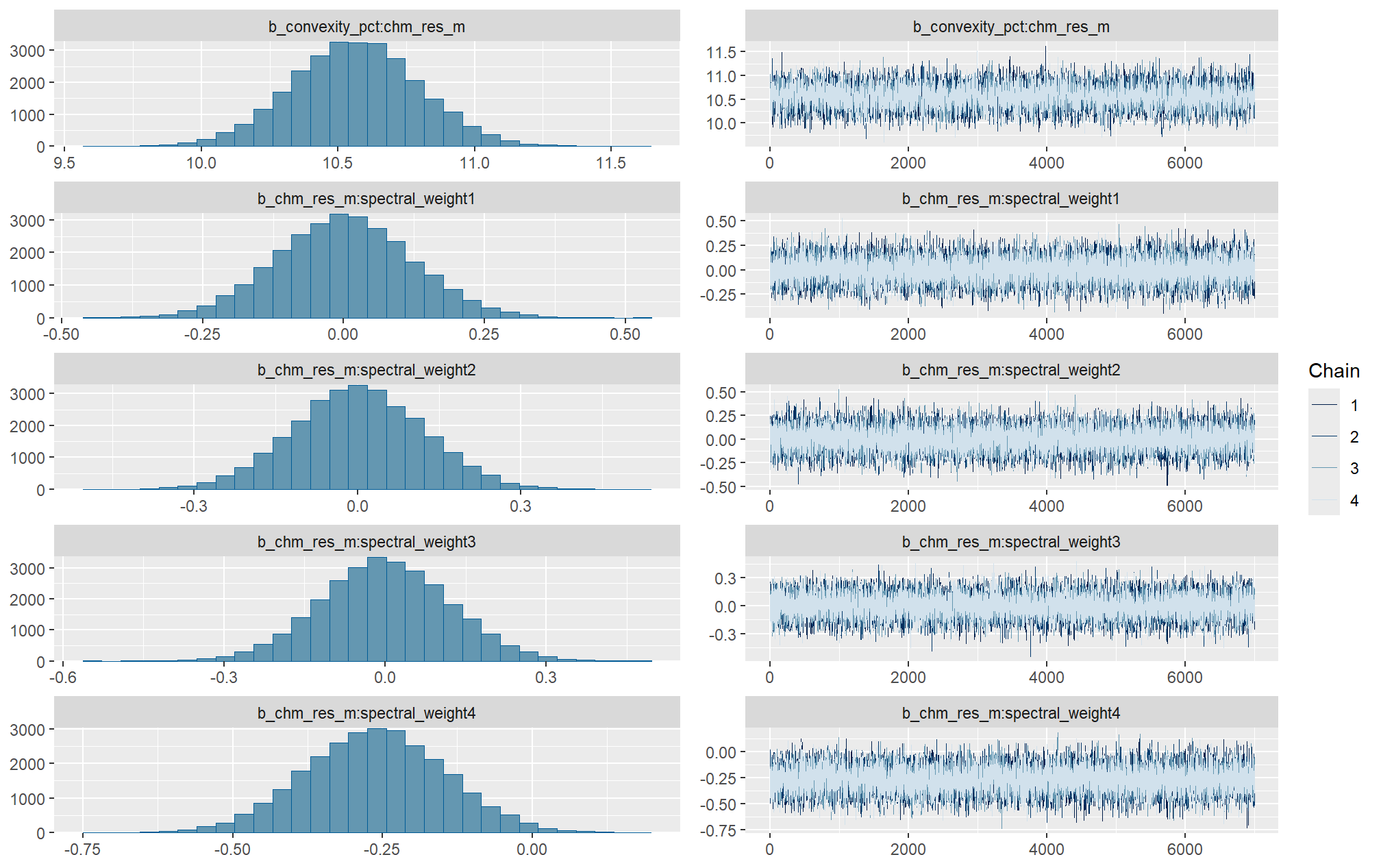

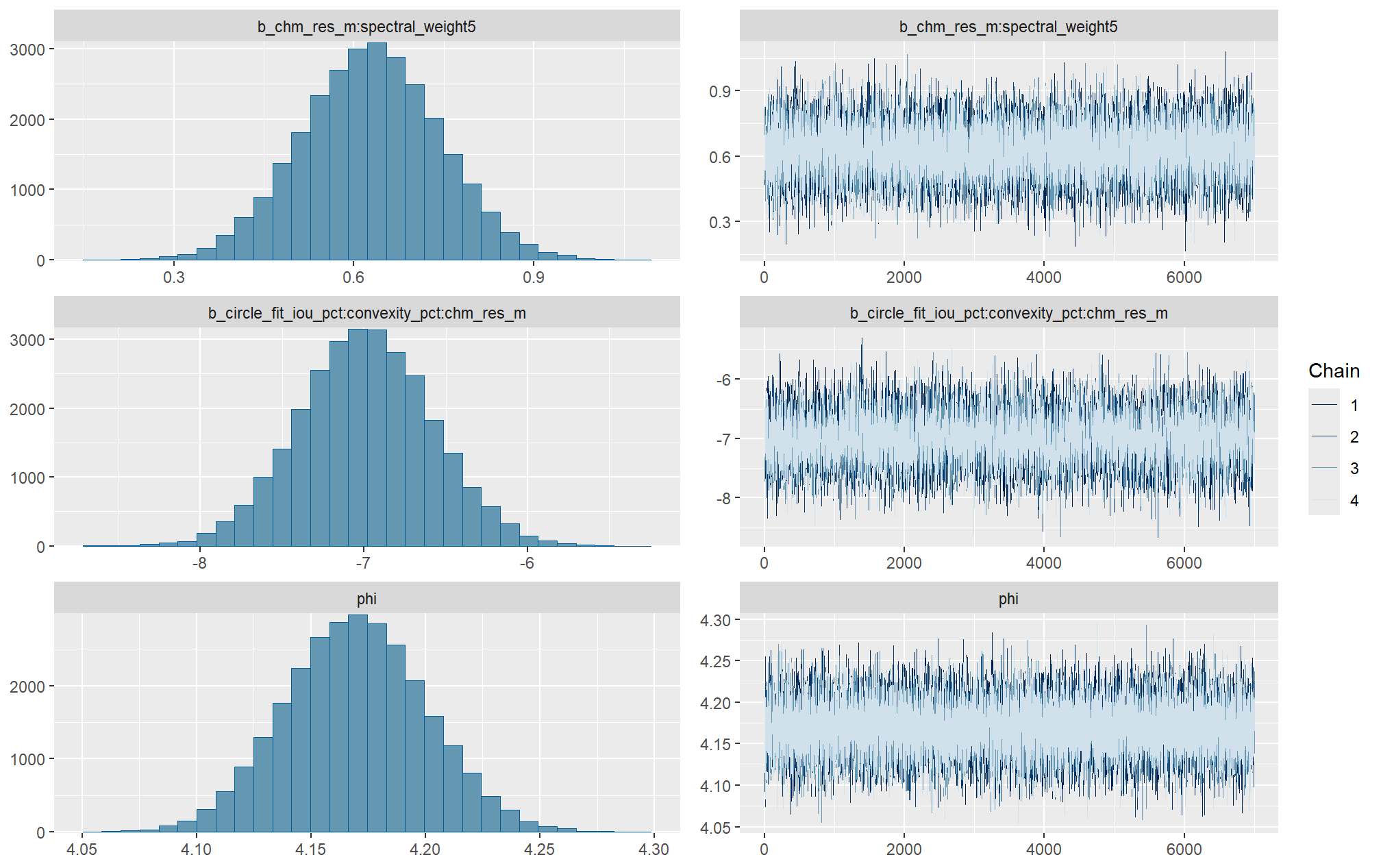

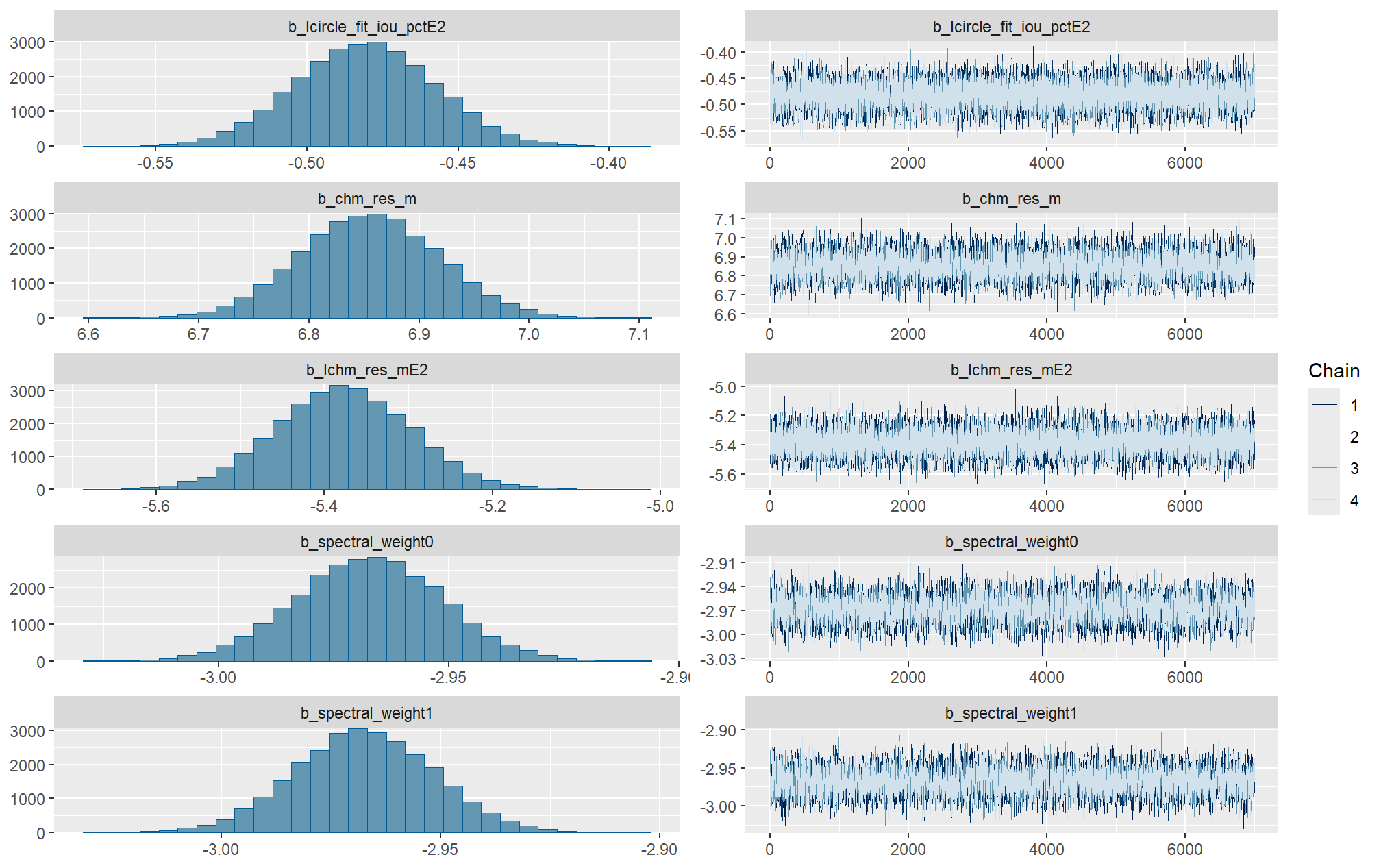

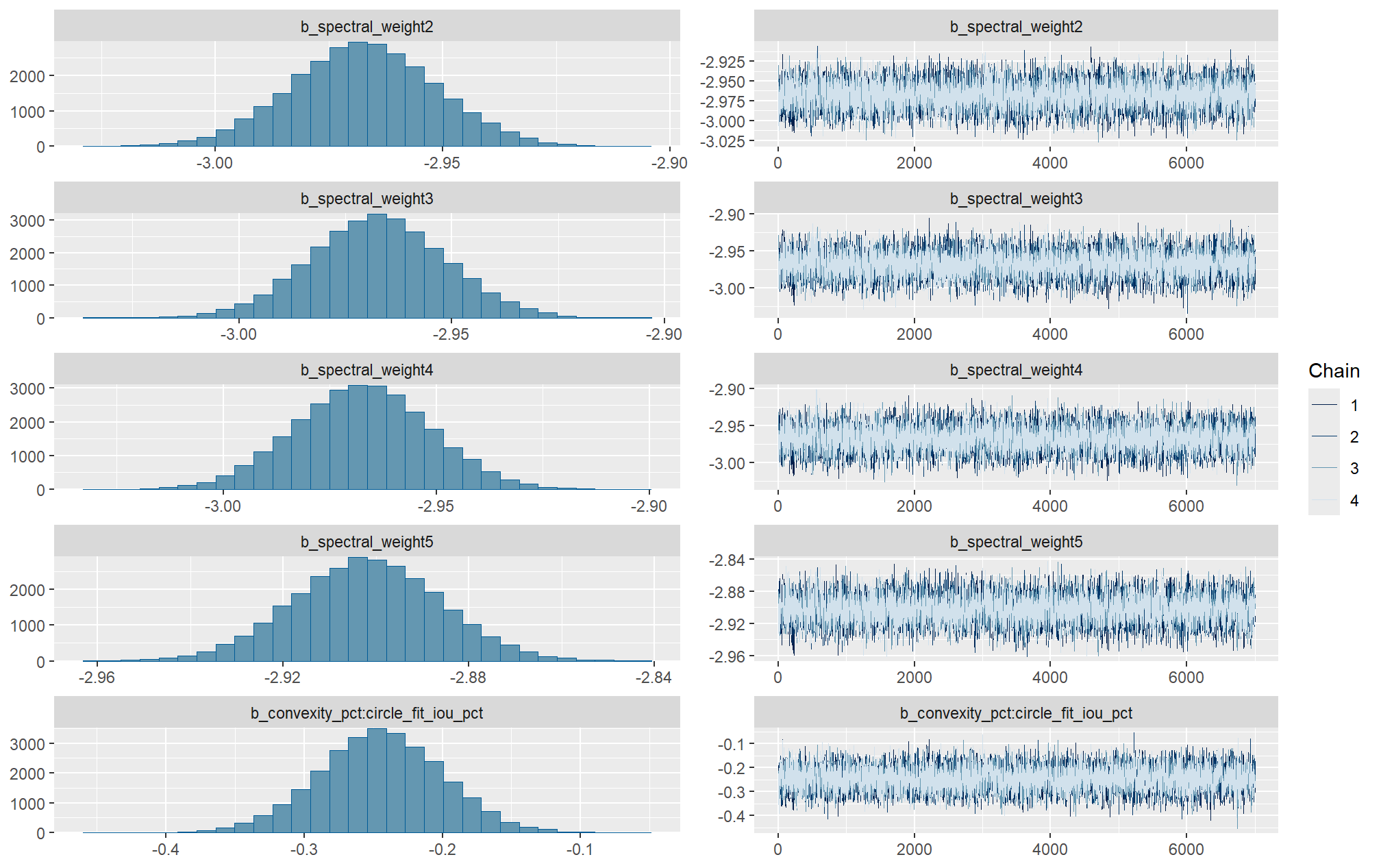

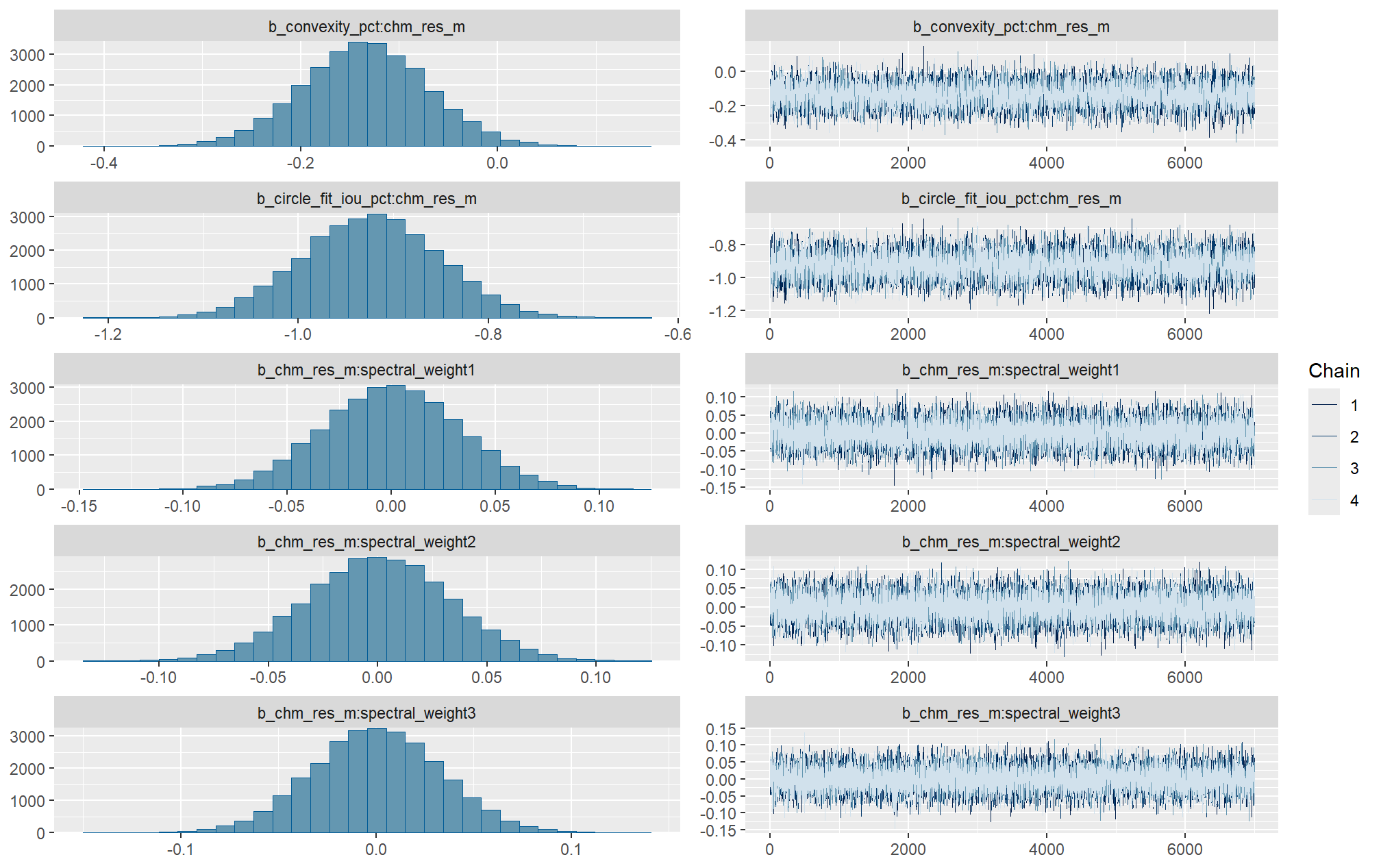

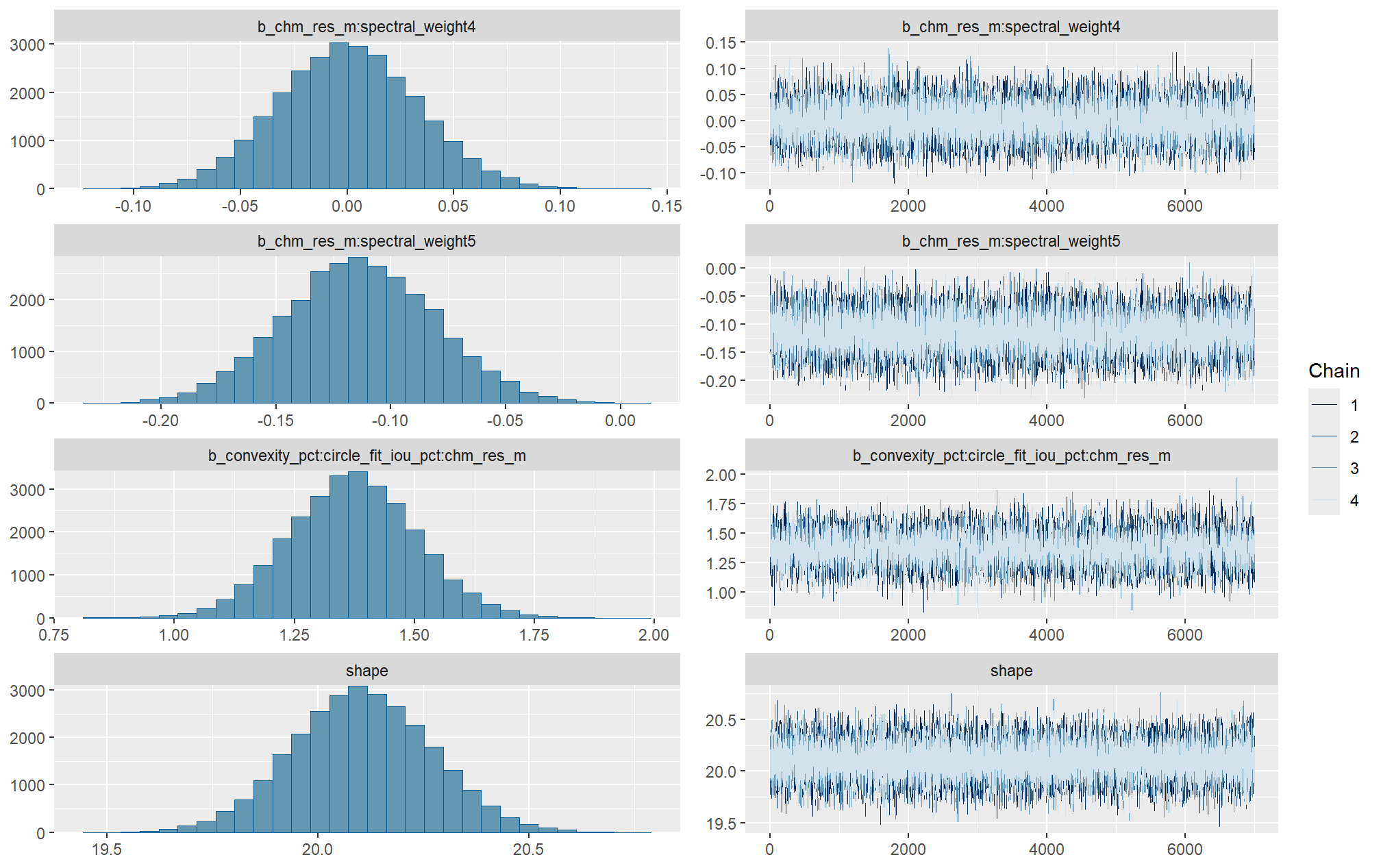

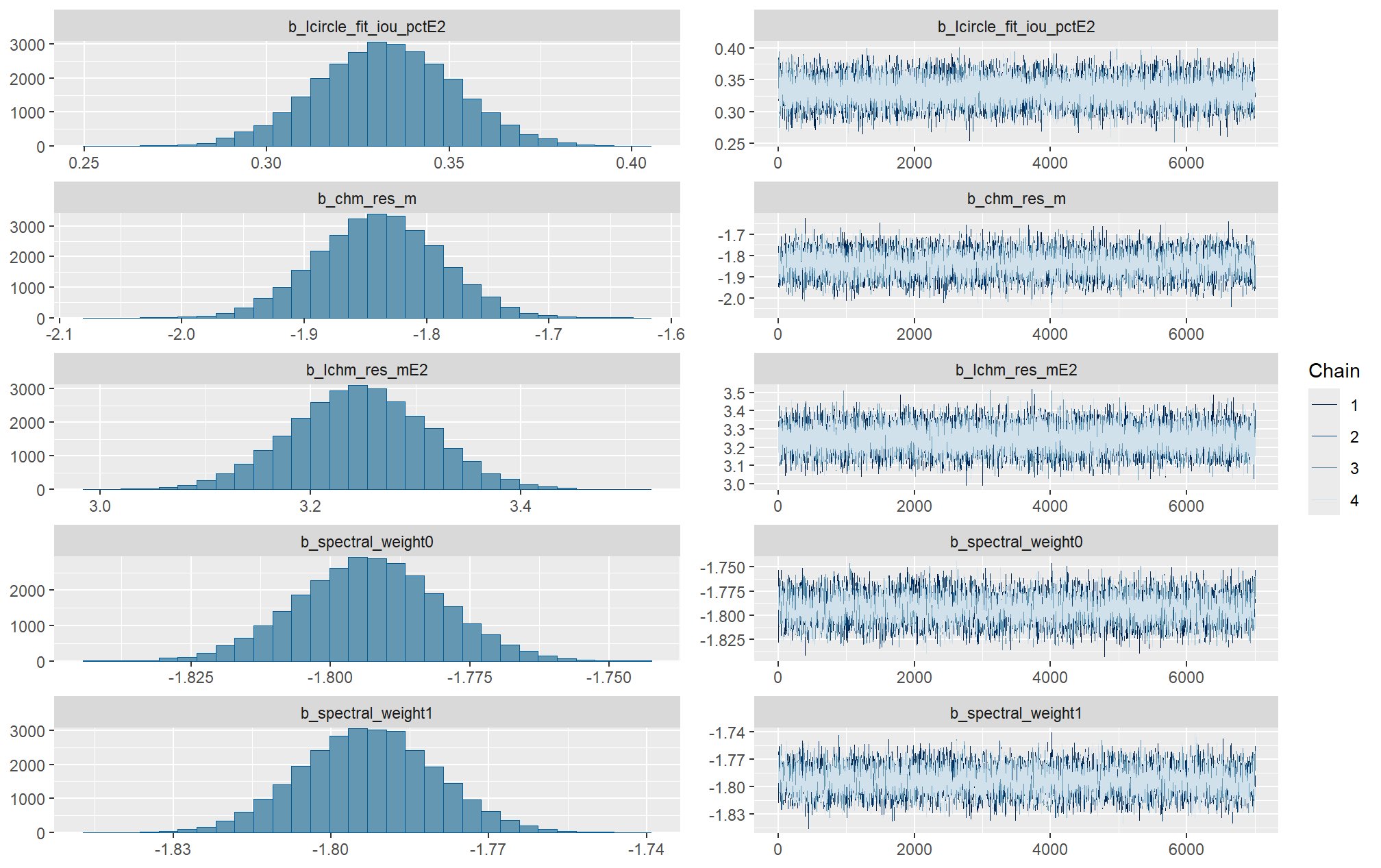

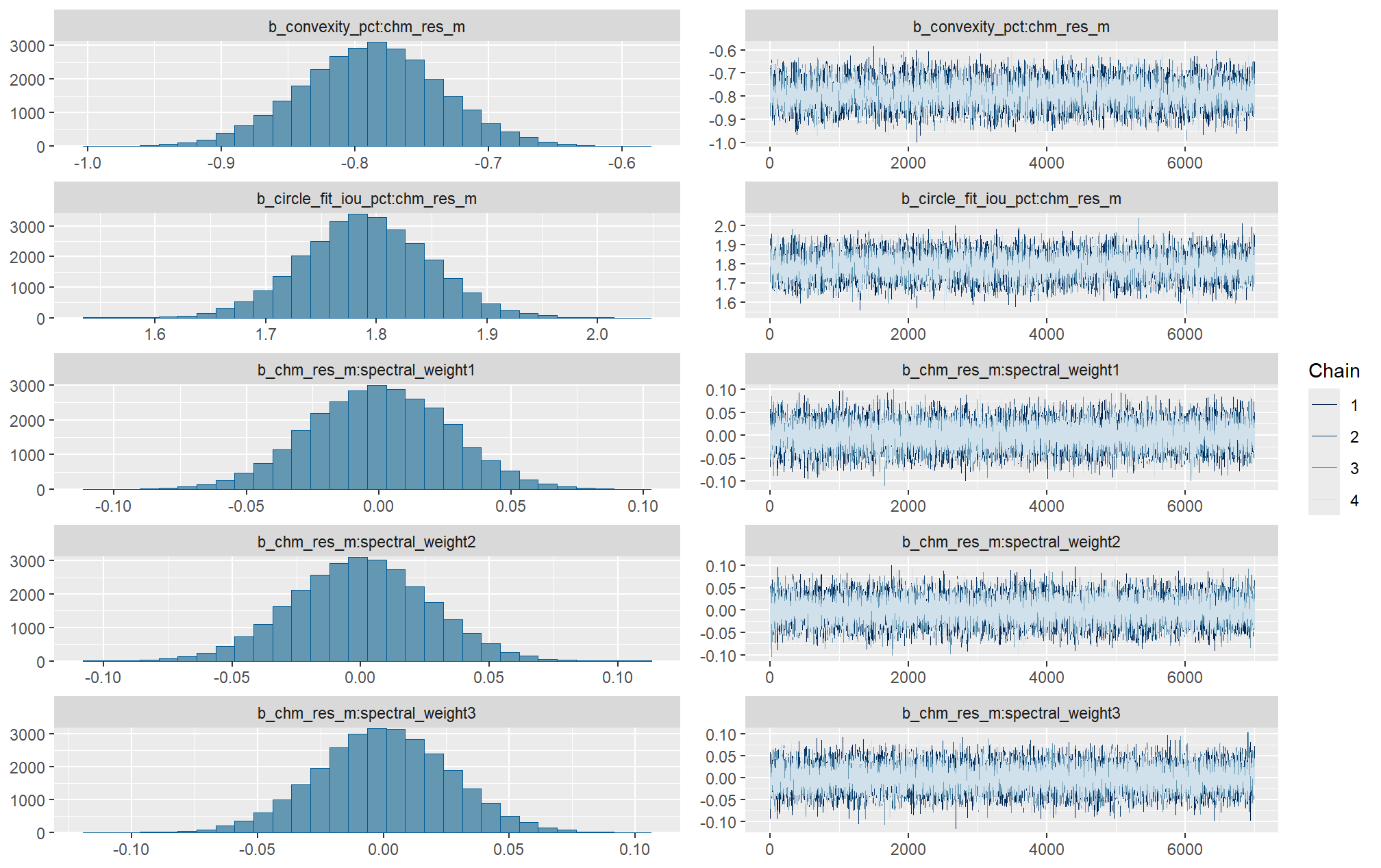

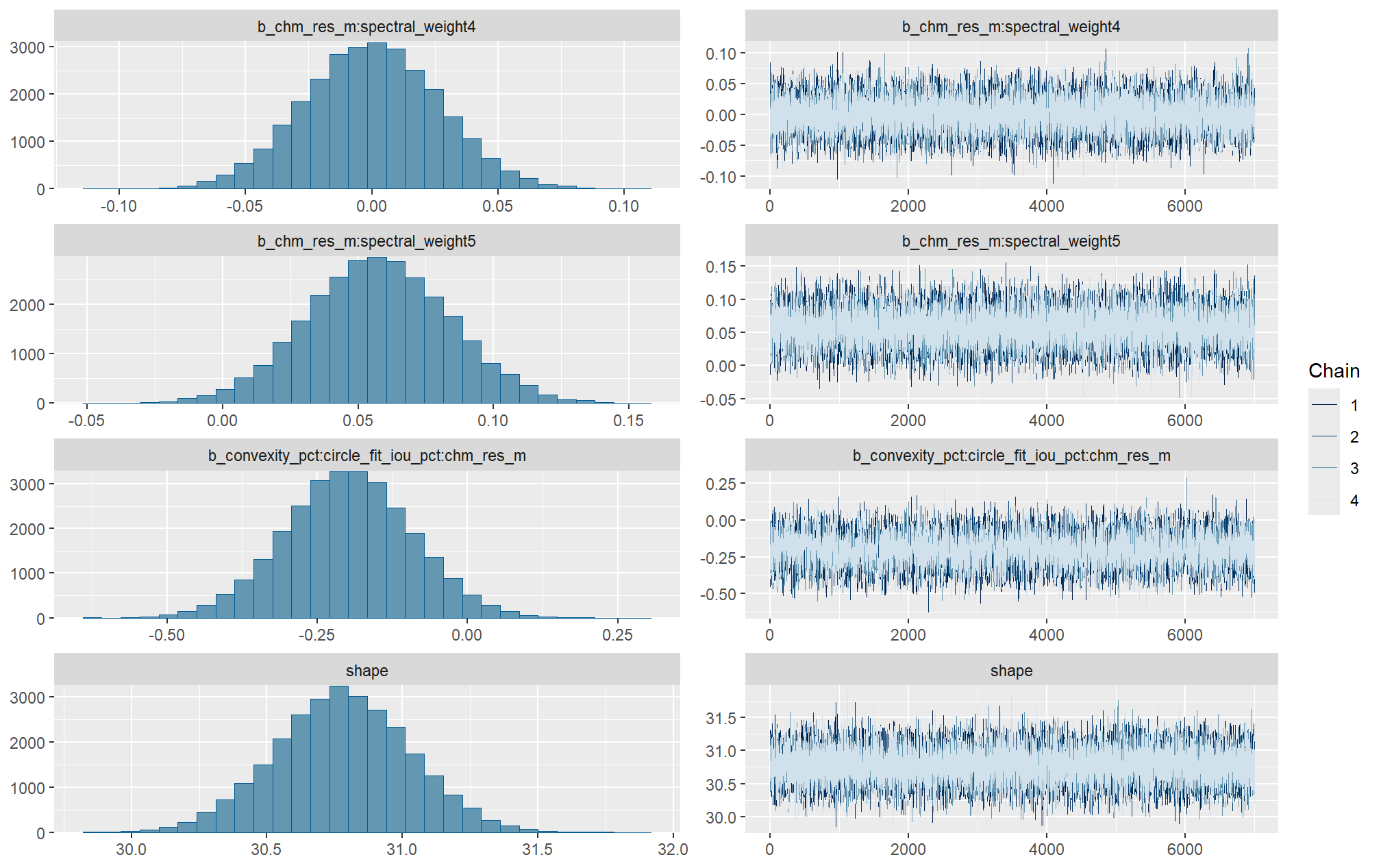

Markov chain Monte Carlo (MCMC) simulations were conducted using the brms package (Bürkner 2017) to estimate posterior predictive distributions of the parameters of interest. We ran 4 chains of 14,000 iterations with the first 7,000 discarded as burn-in. Trace-plots were utilized to visually assess model convergence.

check the trace plots for problems with convergence of the Markov chains

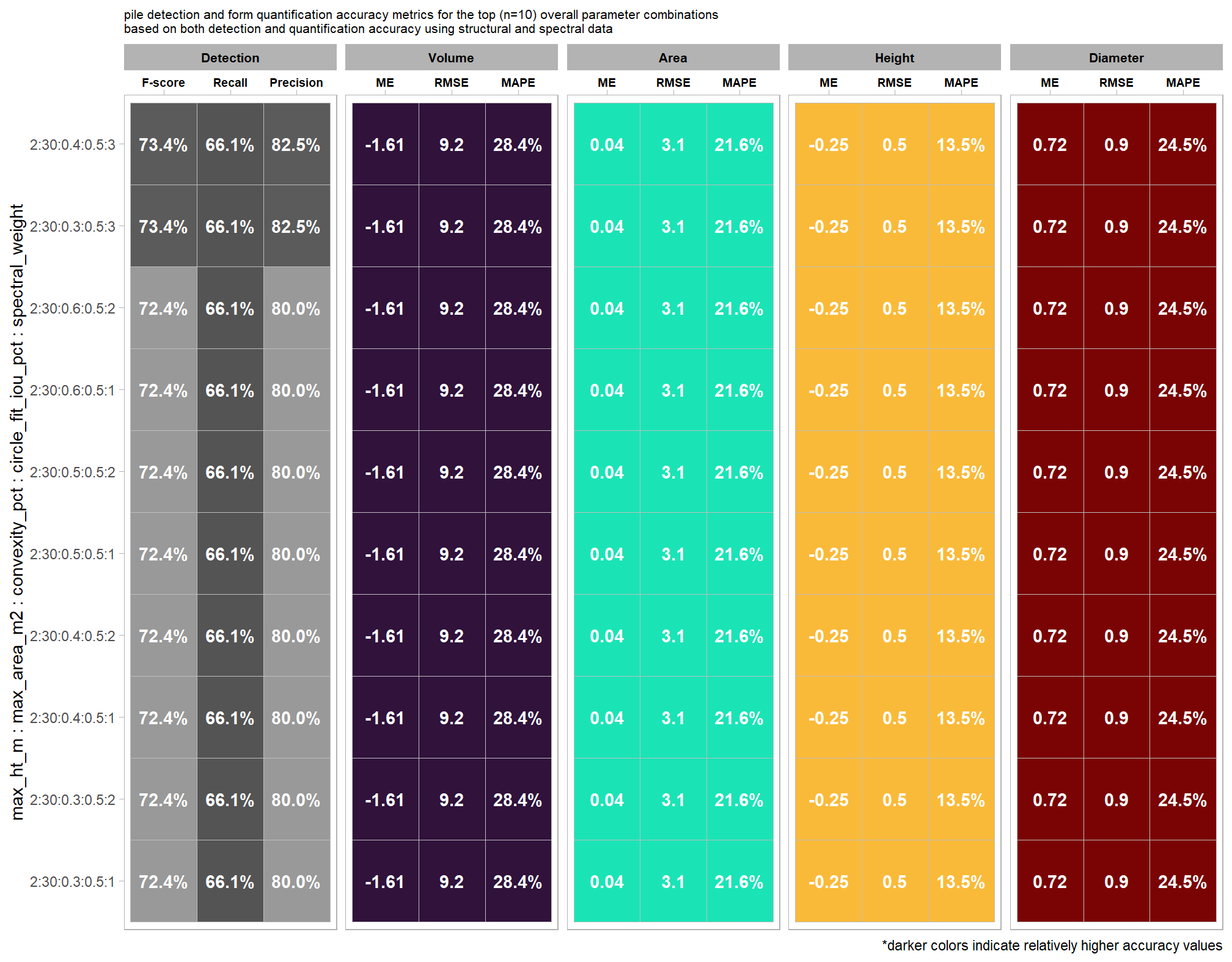

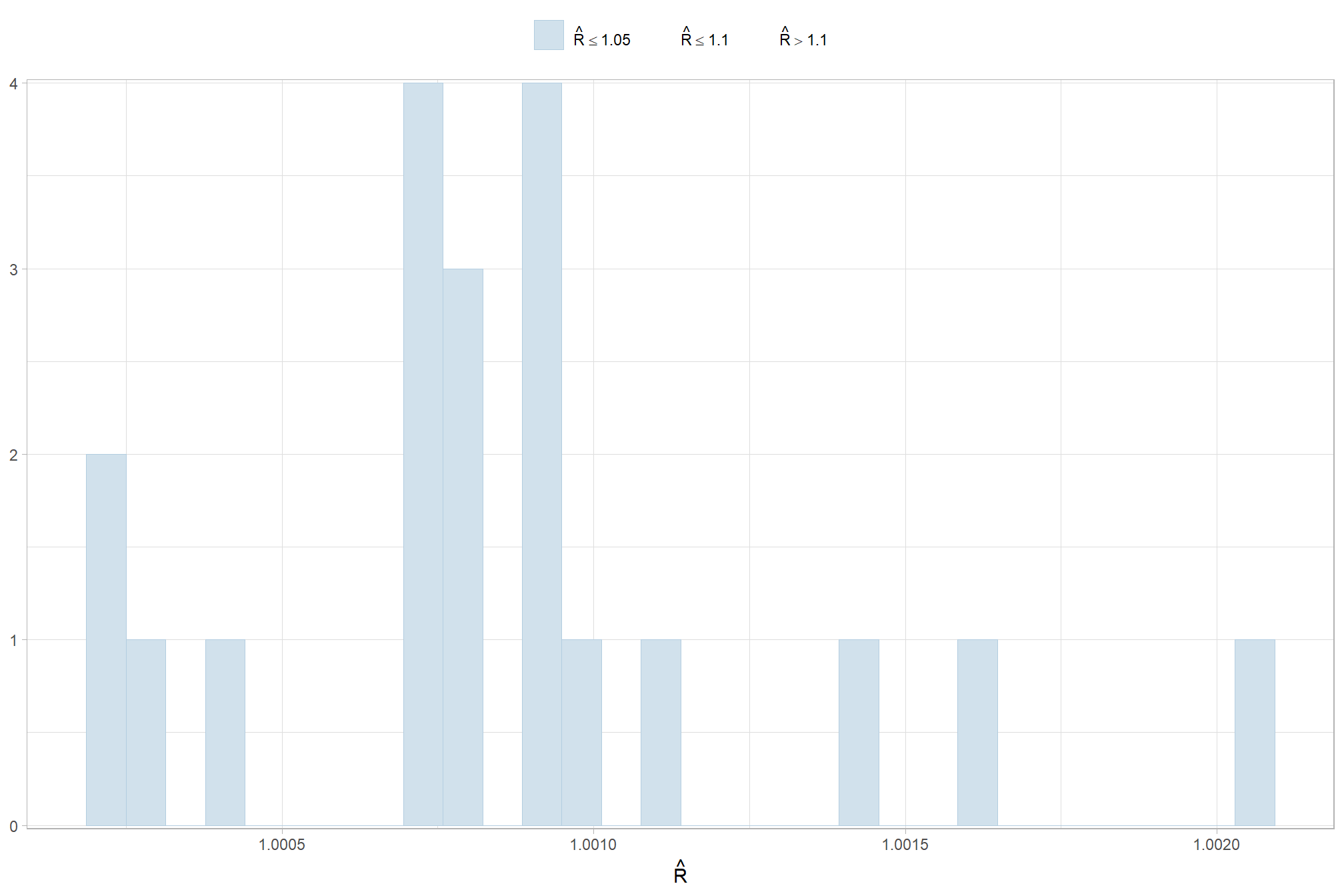

Sufficient convergence was checked with \(\hat{R}\) values near 1 (Brooks & Gelman, 1998).

in the plot below, \(\hat{R}\) values are colored using different shades (lighter is better). The chosen thresholds are somewhat arbitrary, but can be useful guidelines in practice (Gabry and Mahr 2025):

- light: below 1.05 (good)

- mid: between 1.05 and 1.1 (ok)

- dark: above 1.1 (too high)

check our \(\hat{R}\) values

brms::mcmc_plot(brms_f_score_mod, type = "rhat_hist") +

ggplot2::scale_x_continuous(breaks = scales::breaks_extended(n = 6)) +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top", legend.direction = "horizontal"

)

and another check of our \(\hat{R}\) values

# and another check of our $\hat{R}$ values

brms_f_score_mod %>%

brms::rhat() %>%

as.data.frame() %>%

tibble::rownames_to_column(var = "parameter") %>%

dplyr::rename_with(tolower) %>%

dplyr::rename(rhat = 2) %>%

dplyr::filter(

stringr::str_starts(parameter, "b_")

| stringr::str_starts(parameter, "r_")

| stringr::str_starts(parameter, "sd_")

| parameter == "phi"

) %>%

dplyr::mutate(

chk = (rhat <= 1*0.998 | rhat >= 1*1.002)

) %>%

ggplot(aes(x = rhat, y = parameter, color = chk, fill = chk)) +

geom_vline(xintercept = 1, linetype = "dashed", color = "gray44", lwd = 1.2) +

geom_vline(xintercept = 1*0.998, lwd = 1.5) +

geom_vline(xintercept = 1*1.002, lwd = 1.5) +

geom_vline(xintercept = 1*0.999, lwd = 1.2, color = "gray33") +

geom_vline(xintercept = 1*1.001, lwd = 1.2, color = "gray33") +

geom_point() +

scale_fill_manual(values = c("navy", "firebrick")) +

scale_color_manual(values = c("navy", "firebrick")) +

scale_y_discrete(NULL, breaks = NULL) +

labs(

x = latex2exp::TeX("$\\hat{R}$")

, subtitle = latex2exp::TeX("MCMC chain convergence check for $\\hat{R}$ values")

, title = "F-Score"

) +

theme_light() +

theme(

legend.position = "none"

, axis.text.y = element_text(size = 4)

, panel.grid.major.x = element_blank()

, panel.grid.minor.x = element_blank()

, plot.subtitle = element_text(size = 8)

, plot.title = element_text(size = 9)

)

The effective length of an MCMC chain is indicated by the effective sample size (ESS), which refers to the sample size of the MCMC chain not to the sample size of the data where acceptable values allow “for reasonably accurate and stable estimates of the limits of the 95% HDI…If accuracy of the HDI limits is not crucial for your application, then a smaller ESS may be sufficient” (Kruschke 2015, p. 184)

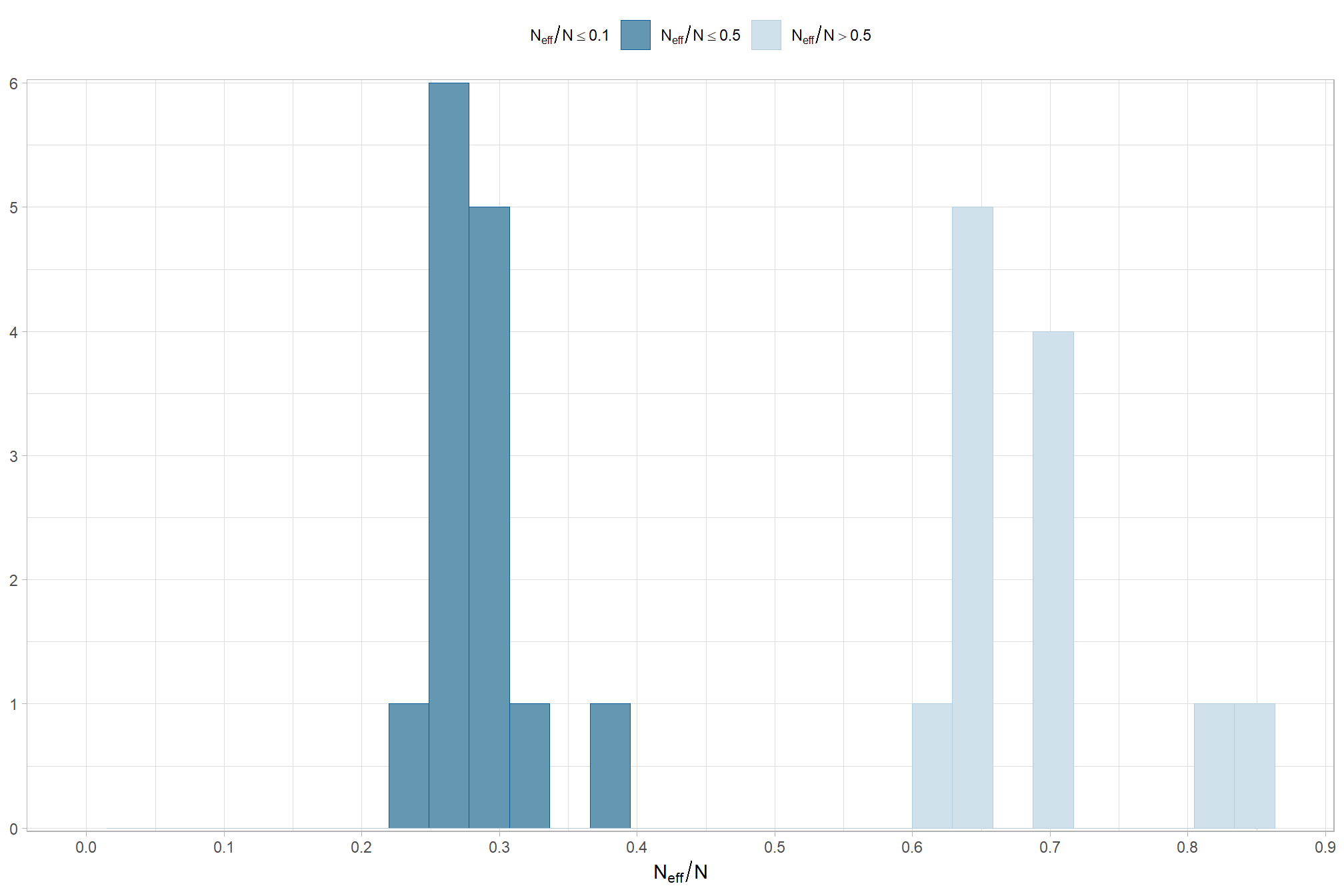

Ratios of effective sample size (ESS) to total sample size with values are colored using different shades (lighter is better). A ratio close to “1” (no autocorrelation) is ideal, while a low ratio suggests the need for more samples or model re-parameterization. Efficiently mixing MCMC chains are important because they guarantee the resulting posterior samples accurately represent the true distribution of model parameters, which is necessary for reliable and precise estimation of parameter values and their associated uncertainties (credible intervals). The chosen thresholds are somewhat arbitrary, but can be useful guidelines in practice (Gabry and Mahr 2025):

- light: between 0.5 and 1 (high)

- mid: between 0.1 and 0.5 (good)

- dark: below 0.1 (low)

# and another effective sample size check

brms::mcmc_plot(brms_f_score_mod, type = "neff_hist") +

# brms::mcmc_plot(brms_f_score_mod, type = "neff") +

ggplot2::scale_x_continuous(limits = c(0,NA), breaks = scales::breaks_extended(n = 9)) +

# ggplot2::scale_color_discrete(drop = F) +

# ggplot2::scale_fill_discrete(drop = F) +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top", legend.direction = "horizontal"

)

our observed range of ESS to Total Sample Size ratios (~0.2 to ~0.8) are generally considered good to excellent, indicating the MCMC chains are performing well and mixing efficiently

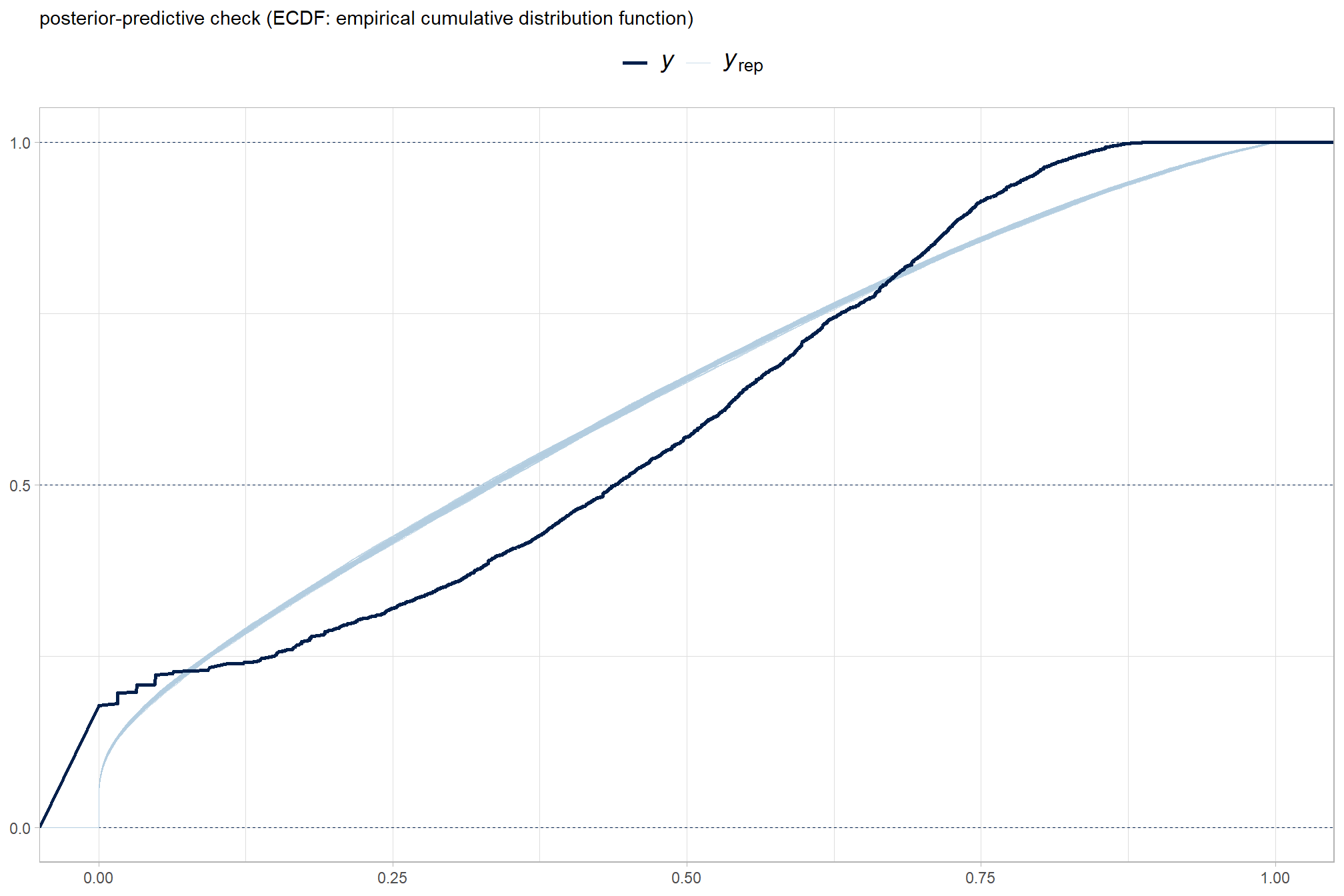

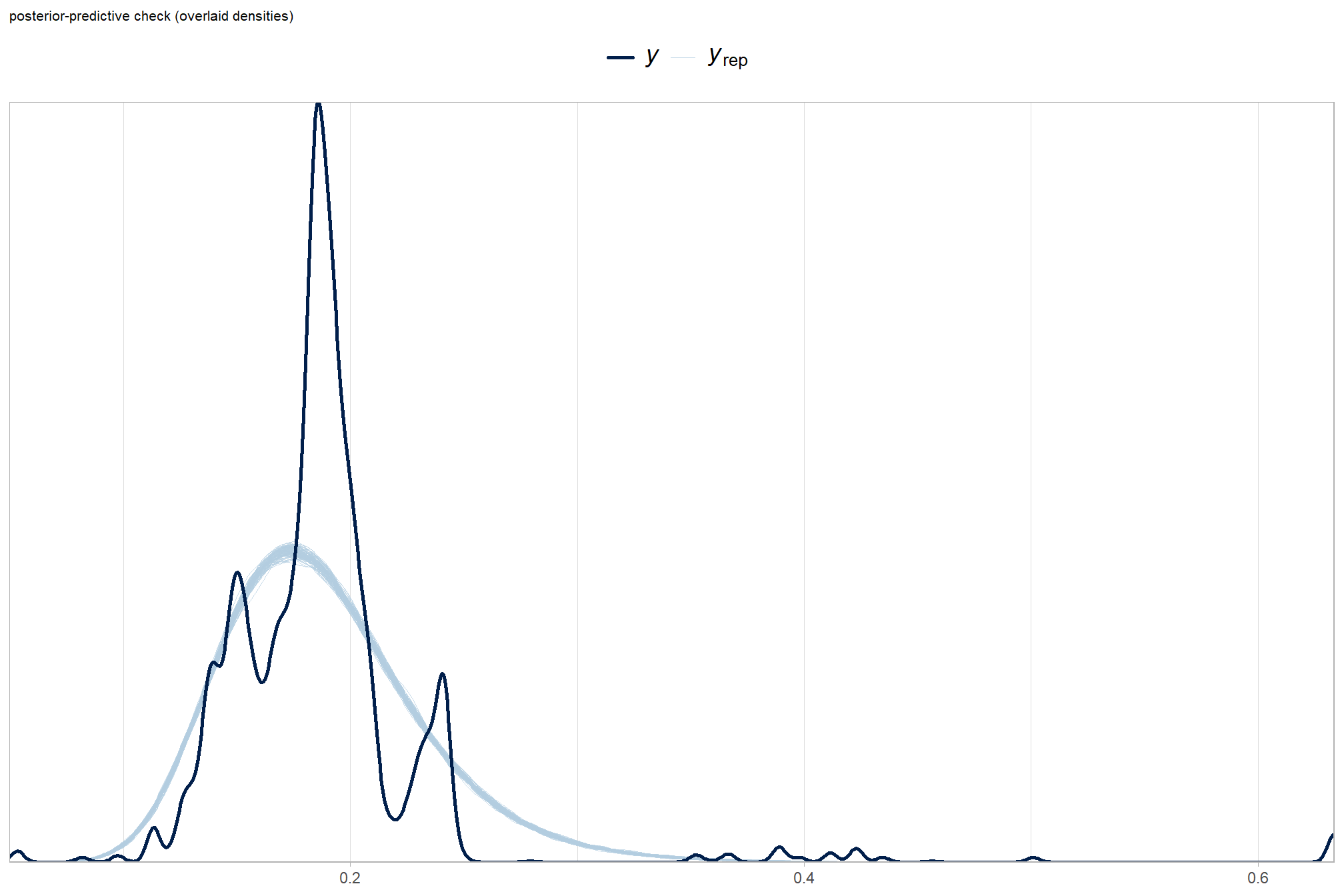

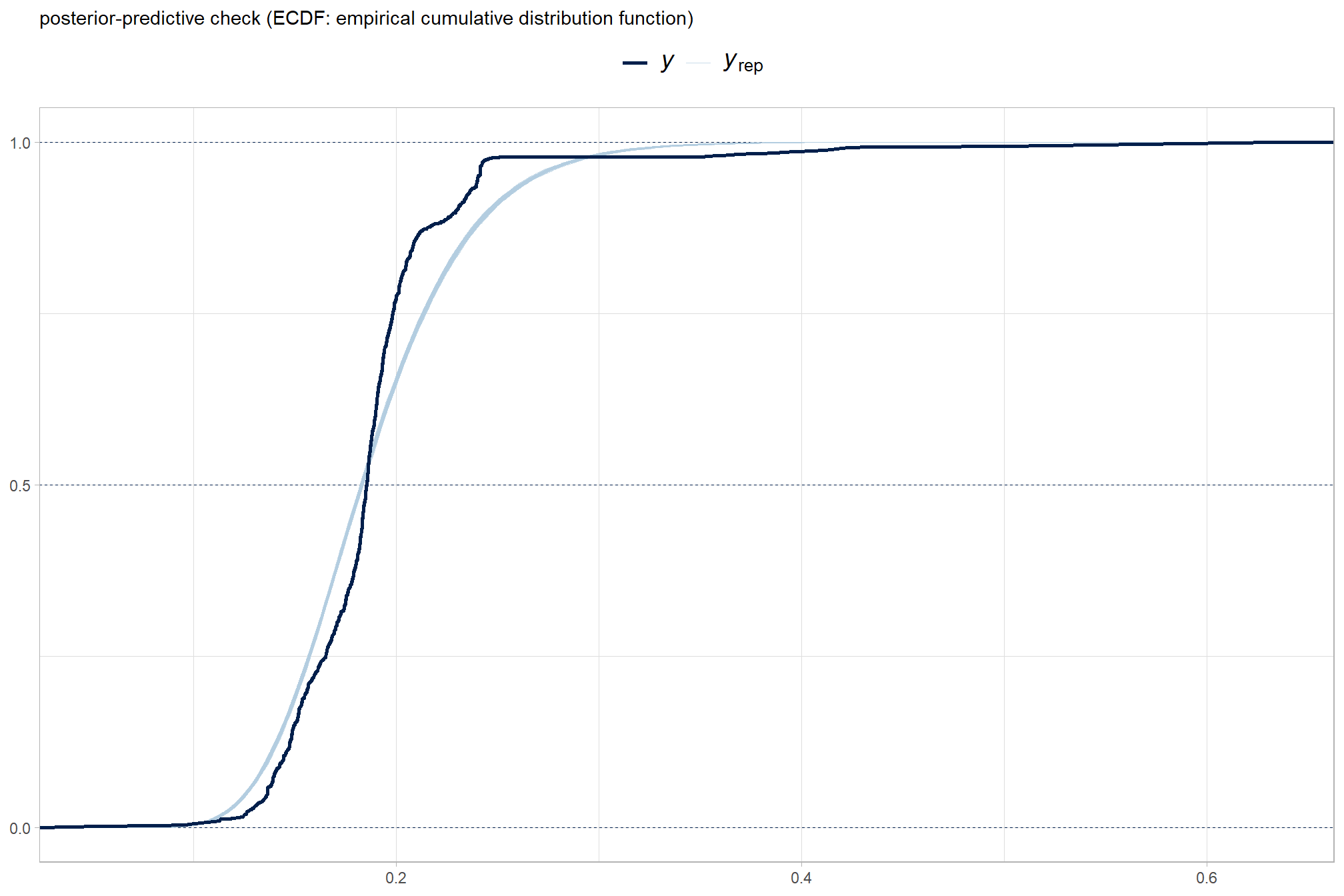

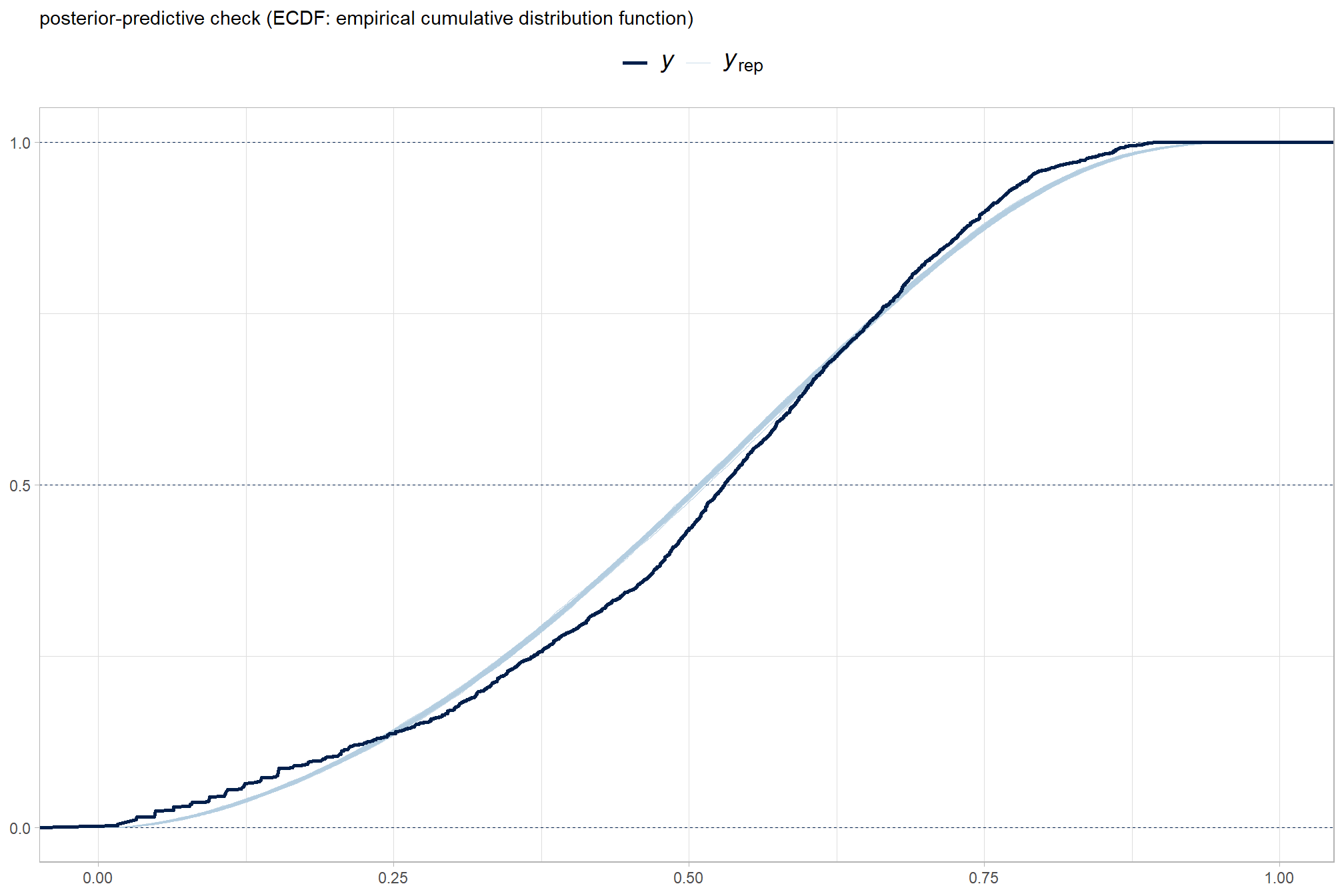

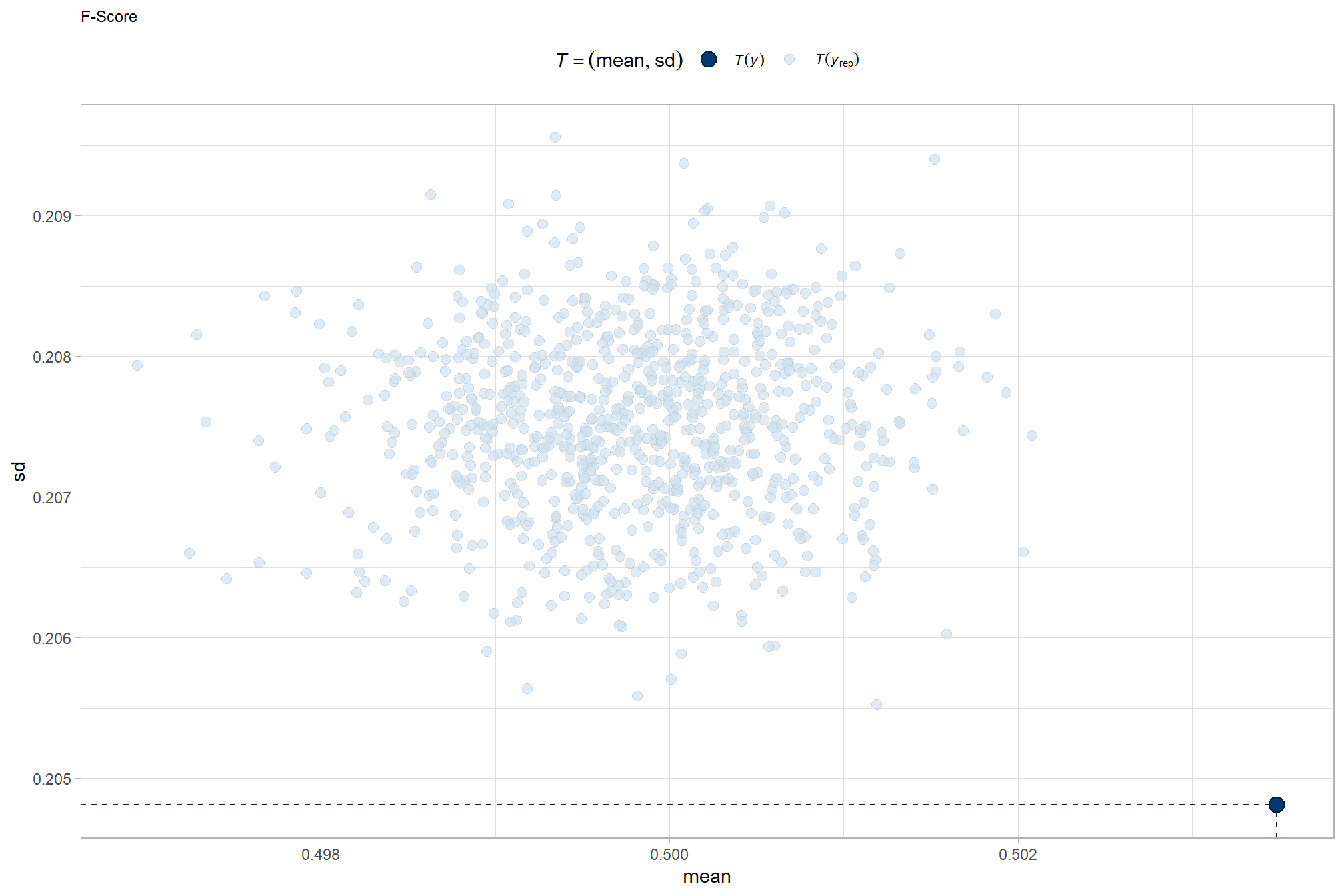

Posterior predictive checks were used to evaluate model goodness-of-fit by comparing data simulated from the model with the observed data used to estimate the model parameters (Hobbs & Hooten, 2015). Calculating the proportion of MCMC iterations in which the test statistic (i.e., mean and sum of squares) from the simulated data and observed data are more extreme than one another provides the Bayesian p-value. Lack of fit is indicated by a value close to 0 or 1 while a value of 0.5 indicates perfect fit (Hobbs & Hooten, 2015).

To learn more about this approach to posterior predictive checks, check out Gabry’s (2025) vignette, Graphical posterior predictive checks using the bayesplot package.

posterior-predictive check to make sure the model does an okay job simulating data that resemble the sample data. our objective is to construct the model such that it faithfully represents the data.

# posterior predictive check

brms::pp_check(

brms_f_score_mod

, type = "dens_overlay"

, ndraws = 100

) +

ggplot2::labs(subtitle = "posterior-predictive check (overlaid densities)") +

ggplot2::theme_light() +

ggplot2::scale_y_continuous(NULL, breaks = NULL) +

ggplot2::theme(

legend.position = "top", legend.direction = "horizontal"

, legend.text = ggplot2::element_text(size = 14)

, plot.subtitle = ggplot2::element_text(size = 8)

, plot.title = ggplot2::element_text(size = 9)

)

another way

brms::pp_check(brms_f_score_mod, type = "ecdf_overlay", ndraws = 100) +

ggplot2::labs(subtitle = "posterior-predictive check (ECDF: empirical cumulative distribution function)") +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top", legend.direction = "horizontal"

, legend.text = ggplot2::element_text(size = 14)

)

9.1.4 Conditional Effects

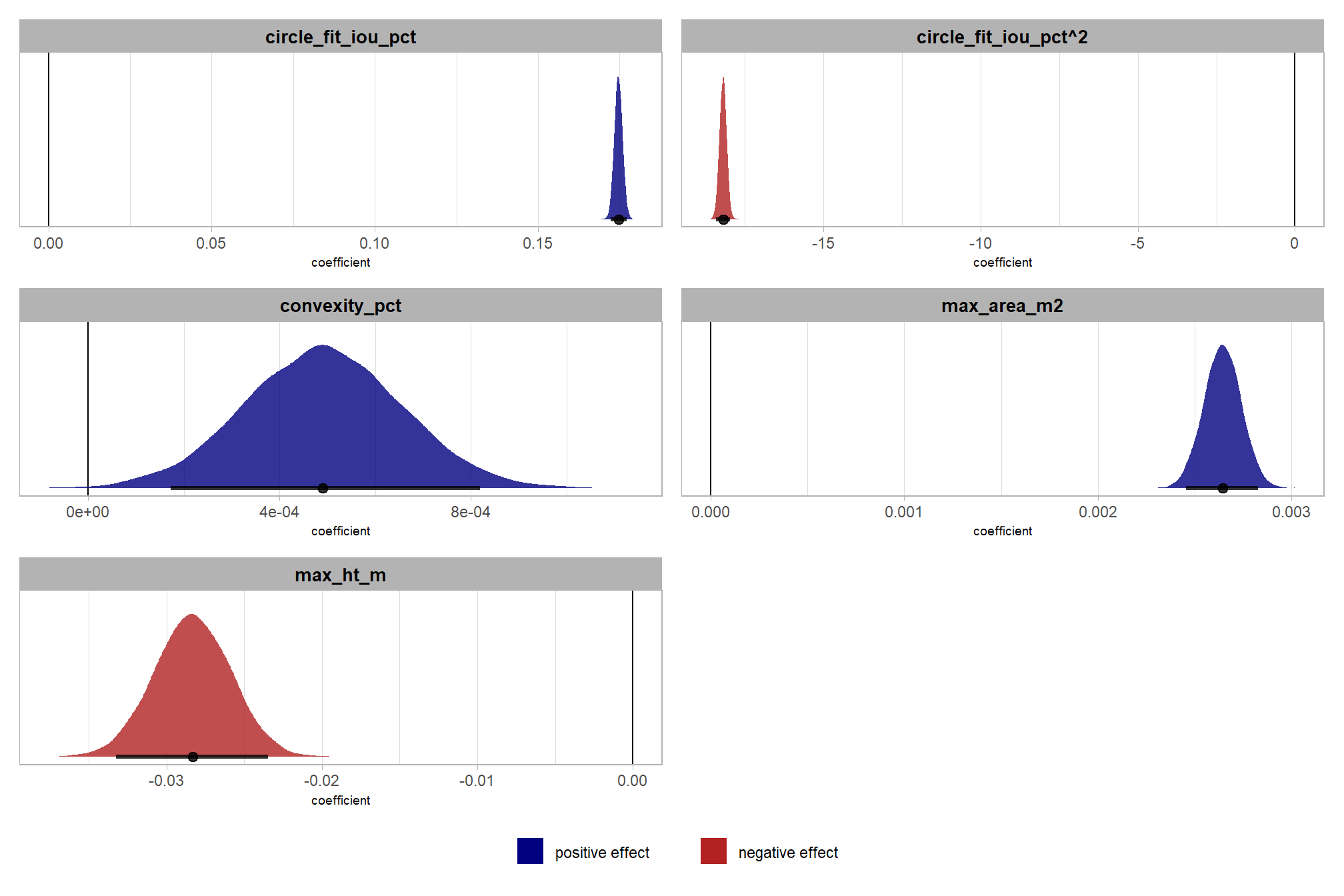

first, lets look at densities of the posterior samples per parameter

brms::mcmc_plot(brms_f_score_mod, type = "dens") +

# ggplot2::theme_light() +

ggplot2::theme(

strip.text = ggplot2::element_text(size = 7.5, face = "bold", color = "black")

)

and we can look at the default coefficient plot that is commonly used in reporting coefficient “significance” in frequentist analysis

Regarding interactions and polynomial models like this, McElreath (2015) notes:

parameters are the linear and square components of the curve, respectively. But that doesn’t make them transparent. You have to plot these model fits to understand what they are saying. (p. 112-113)

all of the interactions and the quadradic trend of this model combine to make these coefficients by themselves uninterpretable as the coefficients are only meaningful in the context of the other terms in the interaction or by adding the quadratic component

we can do this by checking for the main effects of the individual variables on F-score (averages across all other effects)

### ggplot version

# brms::conditional_effects(brms_f_score_mod) %>%

# purrr::pluck("max_ht_m") %>%

# ggplot(aes(x = max_ht_m)) +

# geom_ribbon(aes(ymin = lower__, ymax = upper__), fill = "blue", alpha = 0.2) +

# geom_line(aes(y = estimate__), color = "blue") +

# labs(

# x = "max_ht_m",

# y = "F-score",

# title = "Conditional Effects"

# )9.1.5 Posterior Predictive Expectation

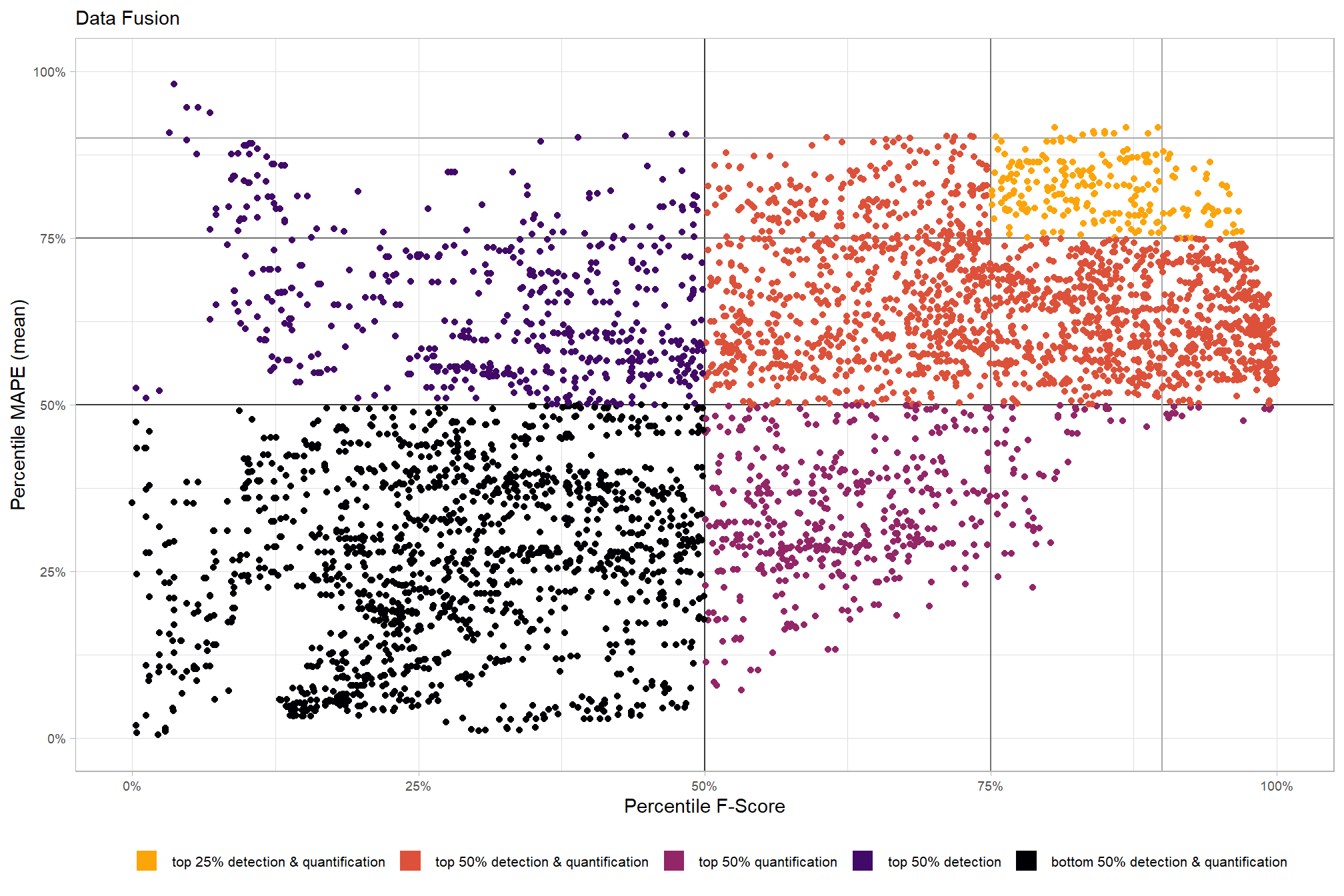

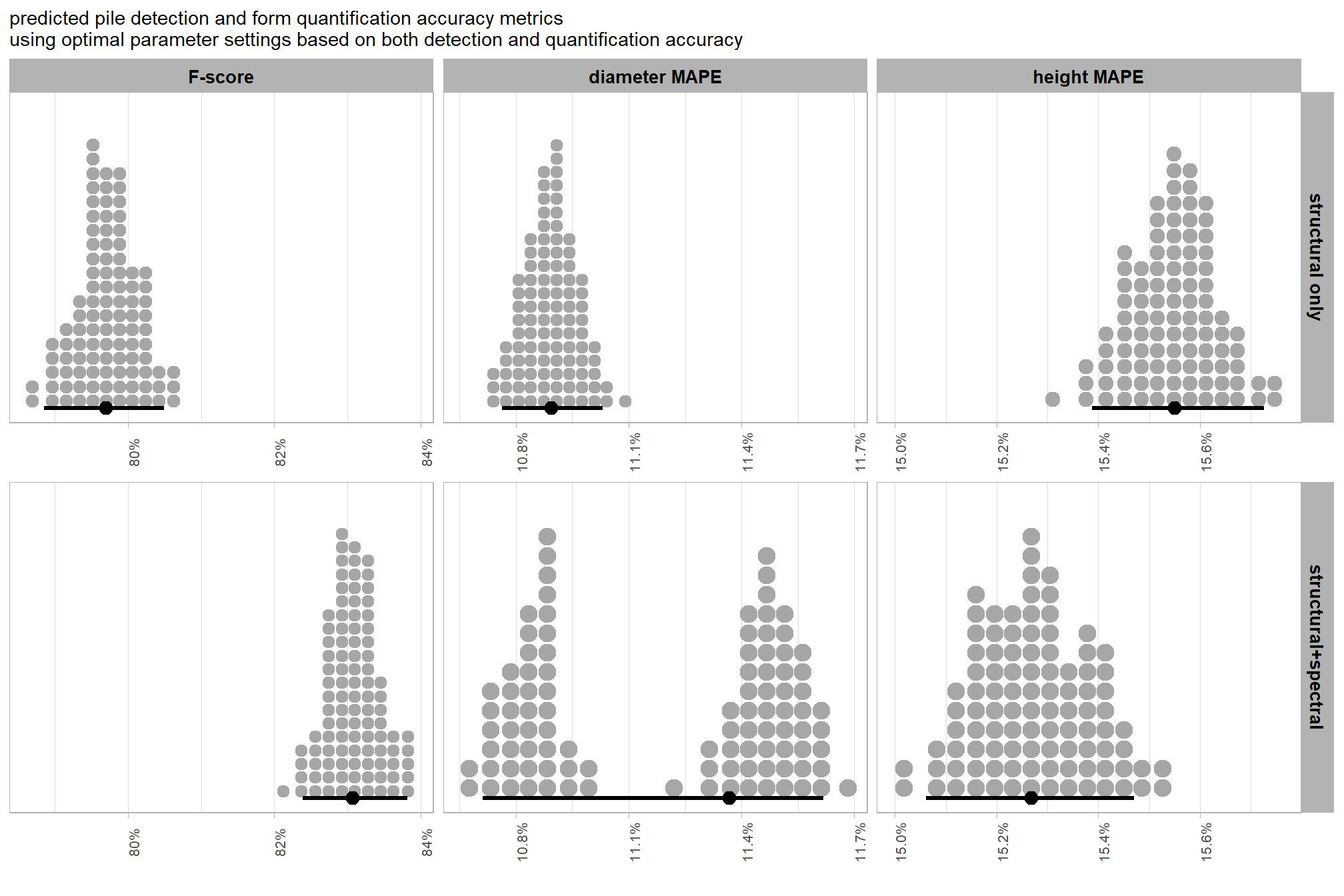

we will test our hypotheses using the posterior distributions of the expected values (i.e., the posterior predictions of the mean) obtained via tidybayes::add_epred_draws(). our analysis will include two stages using parameter levels of the four structural parameters: max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct. in practice, these values should be informed by the treatment and slash pile construction prescription implemented on the ground.

In the first stage, we will fix the max_ht_m and max_area_m2 parameters at levels expected based on the treatment and slash pile construction prescription implemented on the ground. We will then explore the influence of the two geometric shape filtering parameters (circle_fit_iou_pct and convexity_pct) over different levels of the spectral_weight parameter and CHM resolution data.

In the second stage, we will fix all four structural parameters (max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct) to explore the influence of the input data, which includes the presence or absence of spectral data and its weighting (i.e. spectral_weight) as well as the CHM resolution (chm_res_m). As in the first stage, the max_ht_m, max_area_m2 parameters will be fixed at expected levels based on the slash pile construction prescription while the convexity_pct, circle_fit_iou_pct will be fixed at the optimal levels determined based on the Bayesian posterior predictive distribution of detection accuracy

# let's fix the structural parameters based on expectations from the prescription

structural_params_settings <-

dplyr::tibble(

max_ht_m = 2.3

, max_area_m2 = 46

)

# dplyr::bind_rows(

# # structural only

# param_combos_ranked %>% dplyr::filter(is_top_overall) %>% dplyr::select(max_ht_m,max_area_m2,convexity_pct,circle_fit_iou_pct)

# # fusion

# , param_combos_spectral_ranked %>%

# dplyr::ungroup() %>%

# dplyr::filter(is_top_overall & spectral_weight!=0) %>%

# dplyr::arrange(ovrall_balanced_rank) %>% # same number of records as structural only

# dplyr::filter(dplyr::row_number()<=sum(param_combos_ranked$is_top_overall)) %>%

# dplyr::select(max_ht_m,max_area_m2,convexity_pct,circle_fit_iou_pct)

# ) %>%

# tidyr::pivot_longer(

# cols = dplyr::everything()

# , names_to = "metric"

# , values_to = "value"

# ) %>%

# dplyr::count(metric, value) %>%

# dplyr::group_by(metric) %>%

# dplyr::arrange(metric,desc(n),value) %>%

# dplyr::slice(1) %>%

# dplyr::select(-n) %>%

# tidyr::pivot_wider(names_from = metric, values_from = value) %>%

# dplyr::mutate(is_top_overall = T) %>%

# # we just need `max_ht_m`, `max_area_m2`

# dplyr::select(max_ht_m, max_area_m2)

# huh?

structural_params_settings %>% dplyr::glimpse()## Rows: 1

## Columns: 2

## $ max_ht_m <dbl> 2.3

## $ max_area_m2 <dbl> 46now we’ll get the posterior predictive draws but over a range of circle_fit_iou_pct and convexity_pct including the best setting

seq_temp <- seq(from = 0.05, to = 1.0, by = 0.1)

seq2_temp <- seq_temp[seq(1, length(seq_temp), by = 2)] # get every other element

# draws

draws_temp <-

# get the draws for levels of

# spectral_weight circle_fit_iou_pct convexity_pct

tidyr::crossing(

param_combos_spectral_ranked %>% dplyr::distinct(spectral_weight)

, circle_fit_iou_pct = seq_temp

, convexity_pct = seq_temp

, chm_res_m = seq(from = 0.1, to = 1.0, by = 0.1)

, max_ht_m = structural_params_settings$max_ht_m

, max_area_m2 = structural_params_settings$max_area_m2

) %>%

# dplyr::glimpse()

tidybayes::add_epred_draws(brms_f_score_mod, ndraws = 1111) %>%

dplyr::rename(value = .epred) %>%

dplyr::mutate(

is_seq = (convexity_pct %in% seq_temp) & (circle_fit_iou_pct %in% seq_temp)

)

# # huh?

draws_temp %>% dplyr::glimpse()## Rows: 6,666,000

## Columns: 12

## Groups: spectral_weight, circle_fit_iou_pct, convexity_pct, chm_res_m, max_ht_m, max_area_m2, .row [6,000]

## $ spectral_weight <fct> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ circle_fit_iou_pct <dbl> 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.0…

## $ convexity_pct <dbl> 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.0…

## $ chm_res_m <dbl> 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0…

## $ max_ht_m <dbl> 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2…

## $ max_area_m2 <dbl> 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 46,…

## $ .row <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ .chain <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA,…

## $ .iteration <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA,…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, …

## $ value <dbl> 0.6778712, 0.6924090, 0.6793626, 0.6784690, 0.69338…

## $ is_seq <lgl> TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRU…9.1.5.1 Geometric shape regularity

let’s look at the influence of the parameters that control the geometric shape regularity filtering: circle_fit_iou_pct and convexity_pct. to do this, we will fix the max_ht_m and max_area_m2 parameters at levels expected based on the treatment and slash pile construction prescription implemented on the ground.

In the first stage, we will fix the max_ht_m and max_area_m2 parameters at levels expected based on the treatment and slash pile construction prescription implemented on the ground. We will then explore the influence of the two geometric shape filtering parameters (circle_fit_iou_pct and convexity_pct) over different levels of the spectral_weight parameter and CHM resolution data.

In the second stage, we will fix all four structural parameters (max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct) to explore the influence of the input data, which includes the presence or absence of spectral data and its weighting (i.e. spectral_weight) as well as the CHM resolution (chm_res_m). As in the first stage, the max_ht_m, max_area_m2 parameters will be fixed at expected levels based on the slash pile construction prescription while the convexity_pct, circle_fit_iou_pct will be fixed at the optimal levels determined based on the Bayesian posterior predictive distribution of detection accuracy

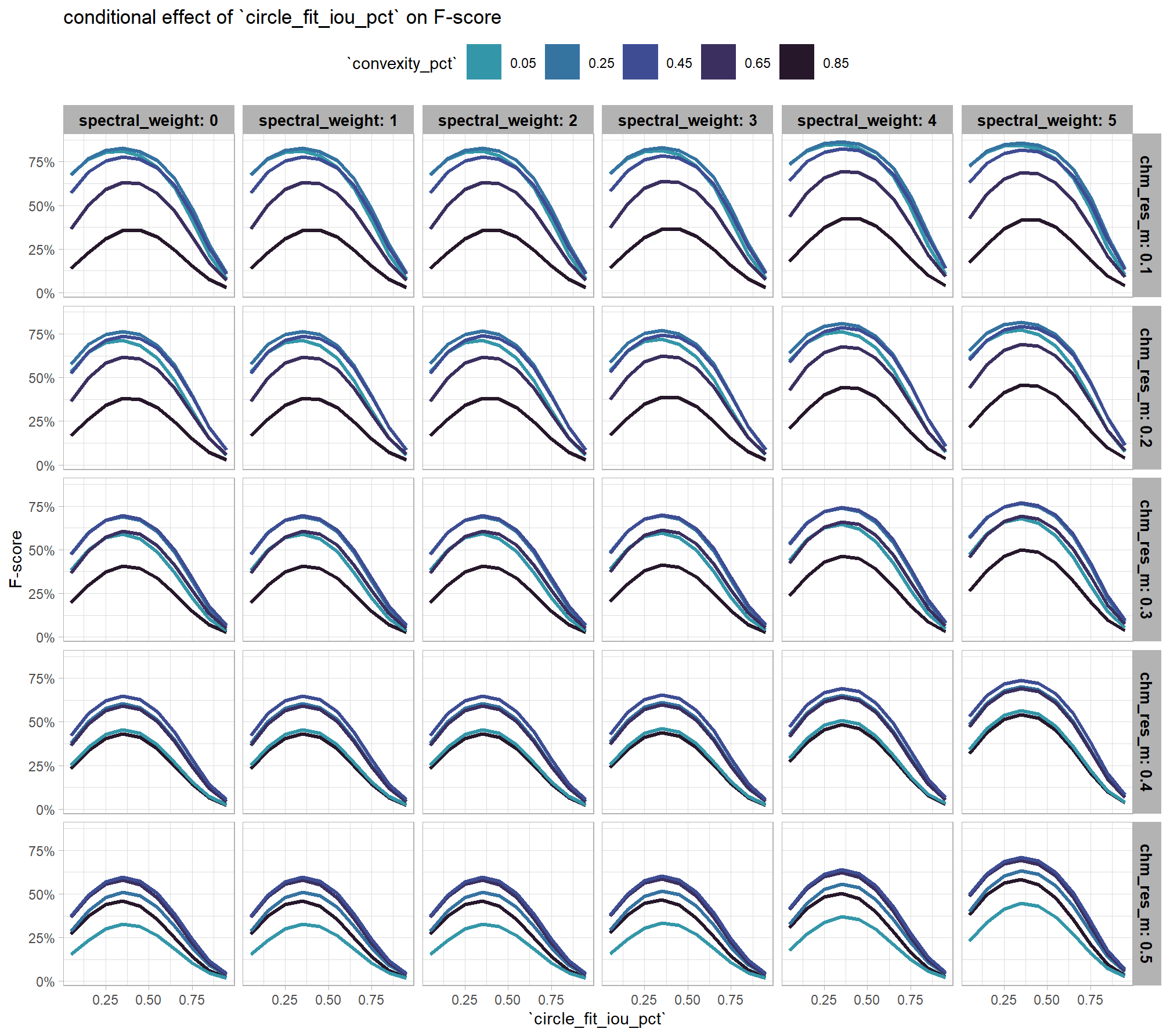

9.1.5.1.1 circle_fit_iou_pct

we need to look at the influence of circle_fit_iou_pct in the context of the other terms in the interaction

draws_temp %>%

dplyr::ungroup() %>%

dplyr::filter(

is_seq

, chm_res_m %in% seq(0.1,0.5,by=0.1)

, convexity_pct %in% seq2_temp

) %>%

dplyr::mutate(convexity_pct = factor(convexity_pct, ordered = T)) %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = circle_fit_iou_pct, y = value, color = convexity_pct)) +

tidybayes::stat_lineribbon(

point_interval = "median_hdi", .width = c(0.95)

, lwd = 1.1, fill = NA

) +

ggplot2::facet_grid(cols = dplyr::vars(spectral_weight), rows = dplyr::vars(chm_res_m), labeller = "label_both") +

ggplot2::scale_fill_brewer(palette = "Greys") +

ggplot2::scale_color_viridis_d(option = "mako", begin = 0.6, end = 0.1) +

ggplot2::scale_y_continuous(labels = scales::percent) +

ggplot2::labs(

title = "conditional effect of `circle_fit_iou_pct` on F-score"

# , subtitle = "Faceted by spectral_weight and chm_res_m"

, x = "`circle_fit_iou_pct`"

, y = "F-score"

, color = "`convexity_pct`"

) +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top"

, strip.text.x = ggplot2::element_text(size = 10, color = "black", face = "bold")

, strip.text.y = ggplot2::element_text(size = 10, color = "black", face = "bold")

) +

ggplot2::guides(

color = ggplot2::guide_legend(override.aes = list(shape = 15, lwd = 8, fill = NA))

, fill = "none"

)

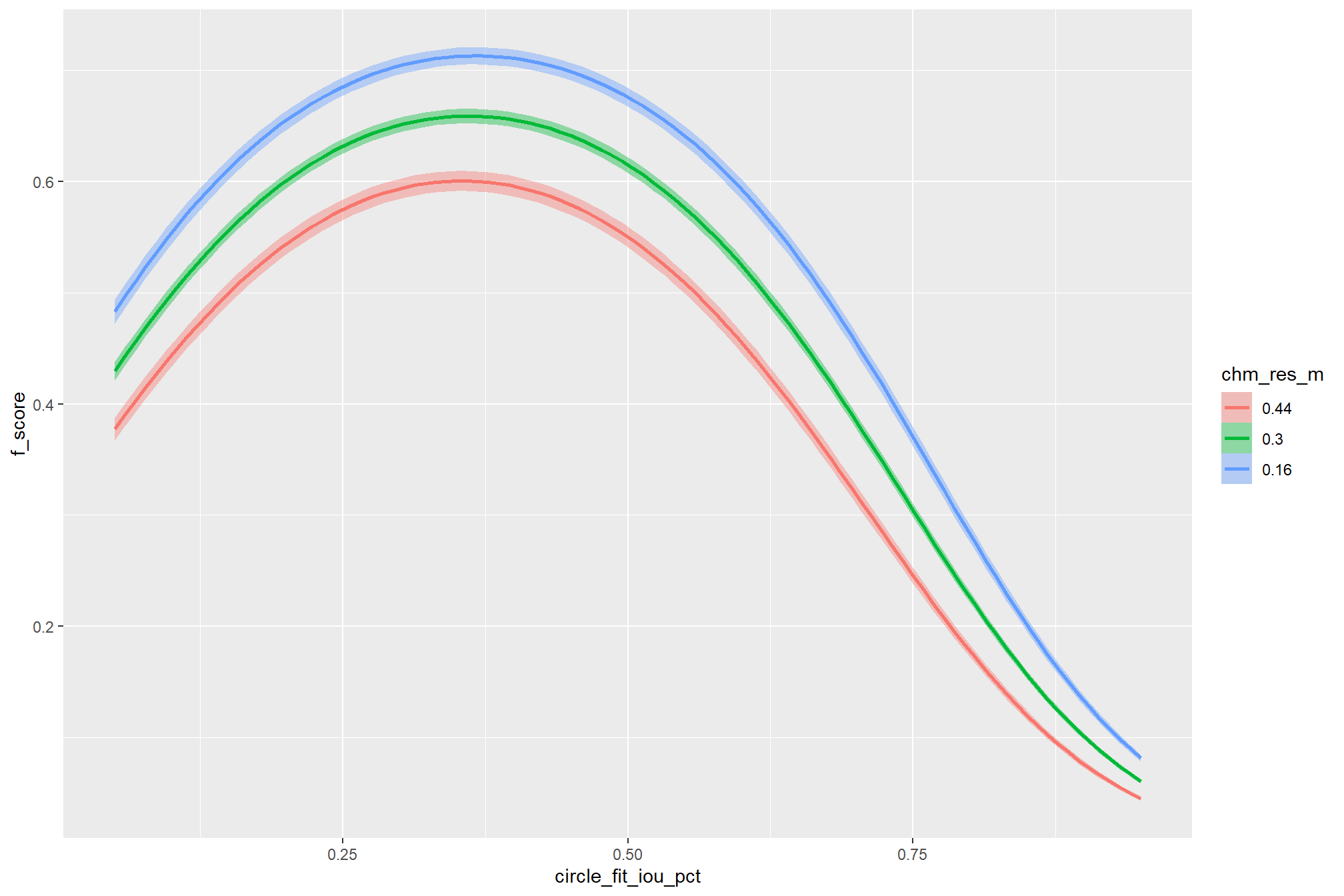

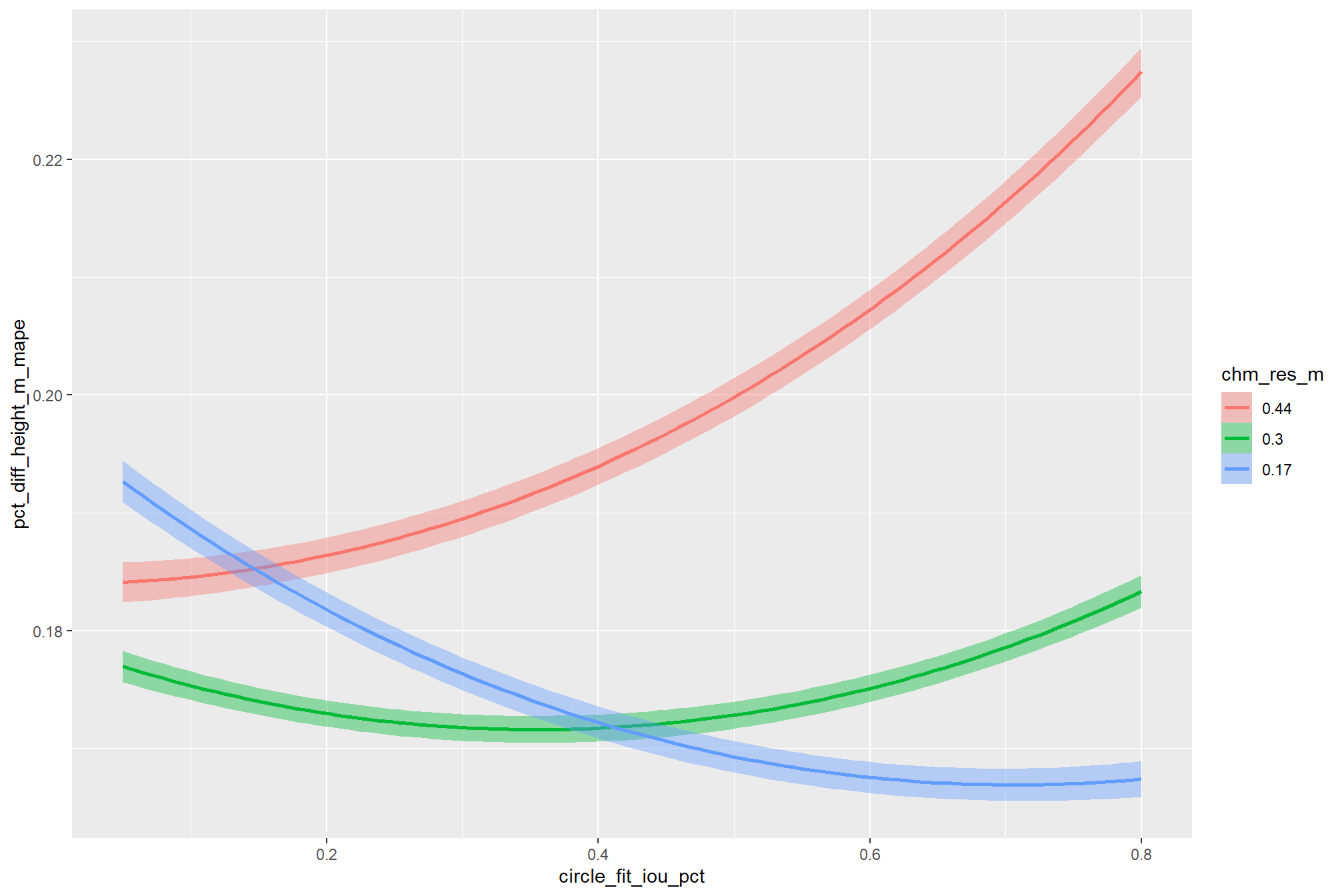

the non-linear relationship between the circle_fit_iou_pct parameter and the F-score is prominent, confirmed by the model’s quadratic coefficient. this trend is consistent across all levels of spectral_weight and CHM resolution. this figure also demonstrates how, within the optimal range of circle_fit_iou_pct, the intermediate values of the convexity_pct parameter seem to result in the best detection accuracy across CHM resolution levels. Across all CHM resolutions and spectral weighting levels, setting circle_fit_iou_pct too high (e.g > 0.7) results in steep declines in detection accuracy.

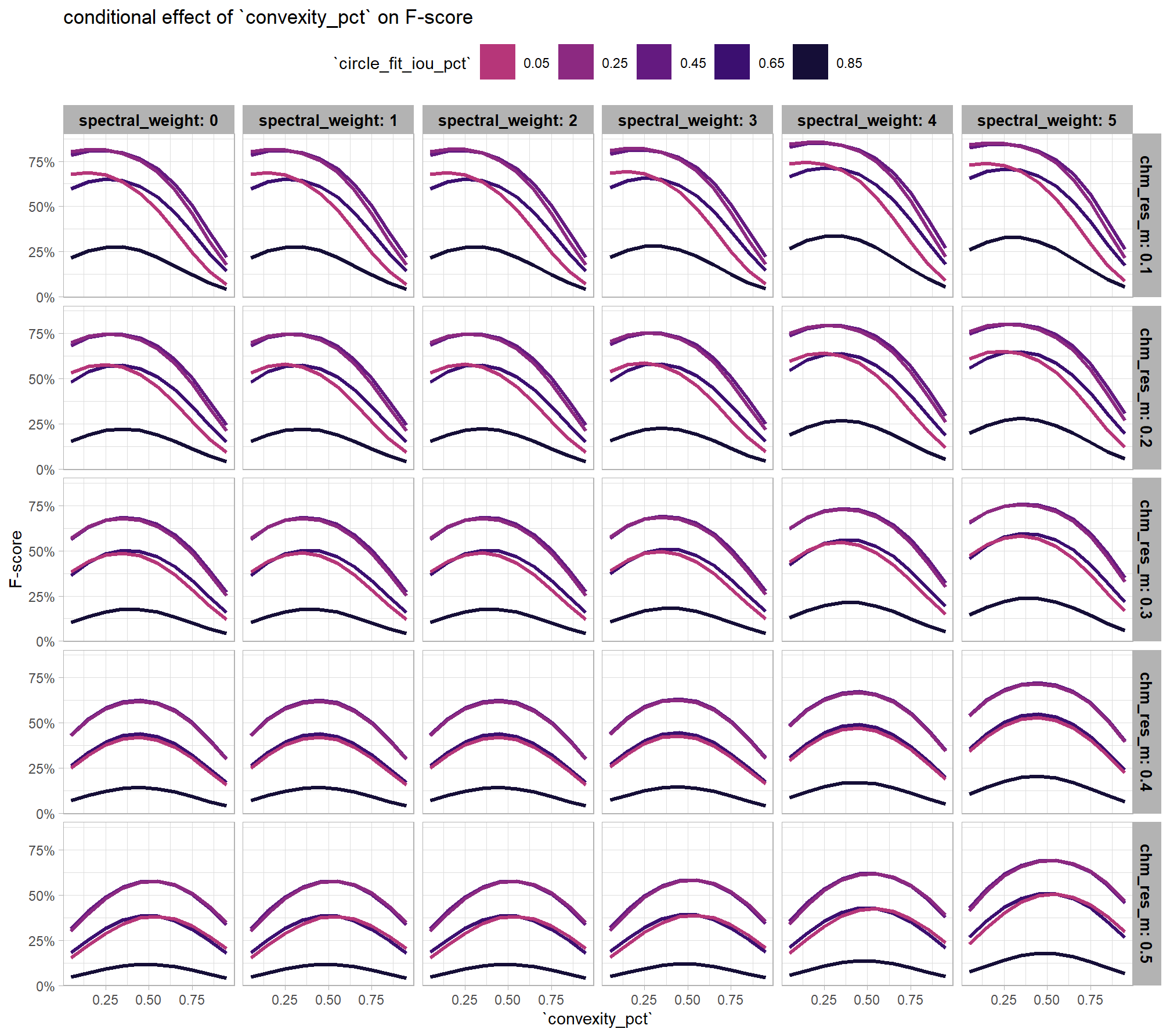

9.1.5.1.2 convexity_pct

we need to look at the influence of convexity_pct in the context of the other terms in the interaction

draws_temp %>%

dplyr::ungroup() %>%

dplyr::filter(

is_seq

, chm_res_m %in% seq(0.1,0.5,by=0.1)

, circle_fit_iou_pct %in% seq2_temp

) %>%

dplyr::mutate(circle_fit_iou_pct = factor(circle_fit_iou_pct, ordered = T)) %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = convexity_pct, y = value, color = circle_fit_iou_pct)) +

tidybayes::stat_lineribbon(

point_interval = "median_hdi", .width = c(0.5,0.95)

, lwd = 1.1

, fill = NA

) +

ggplot2::facet_grid(cols = dplyr::vars(spectral_weight), rows = dplyr::vars(chm_res_m), labeller = "label_both") +

ggplot2::scale_fill_brewer(palette = "Greys") +

ggplot2::scale_color_viridis_d(option = "magma", begin = 0.5, end = 0.1) +

ggplot2::scale_y_continuous(labels = scales::percent) +

ggplot2::labs(

title = "conditional effect of `convexity_pct` on F-score"

# , subtitle = "Faceted by spectral_weight and chm_res_m"

, x = "`convexity_pct`"

, y = "F-score"

, color = "`circle_fit_iou_pct`"

) +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top"

, strip.text.x = ggplot2::element_text(size = 10, color = "black", face = "bold")

, strip.text.y = ggplot2::element_text(size = 10, color = "black", face = "bold")

) +

ggplot2::guides(

color = ggplot2::guide_legend(override.aes = list(shape = 15, lwd = 8, fill = NA))

, fill = "none"

)

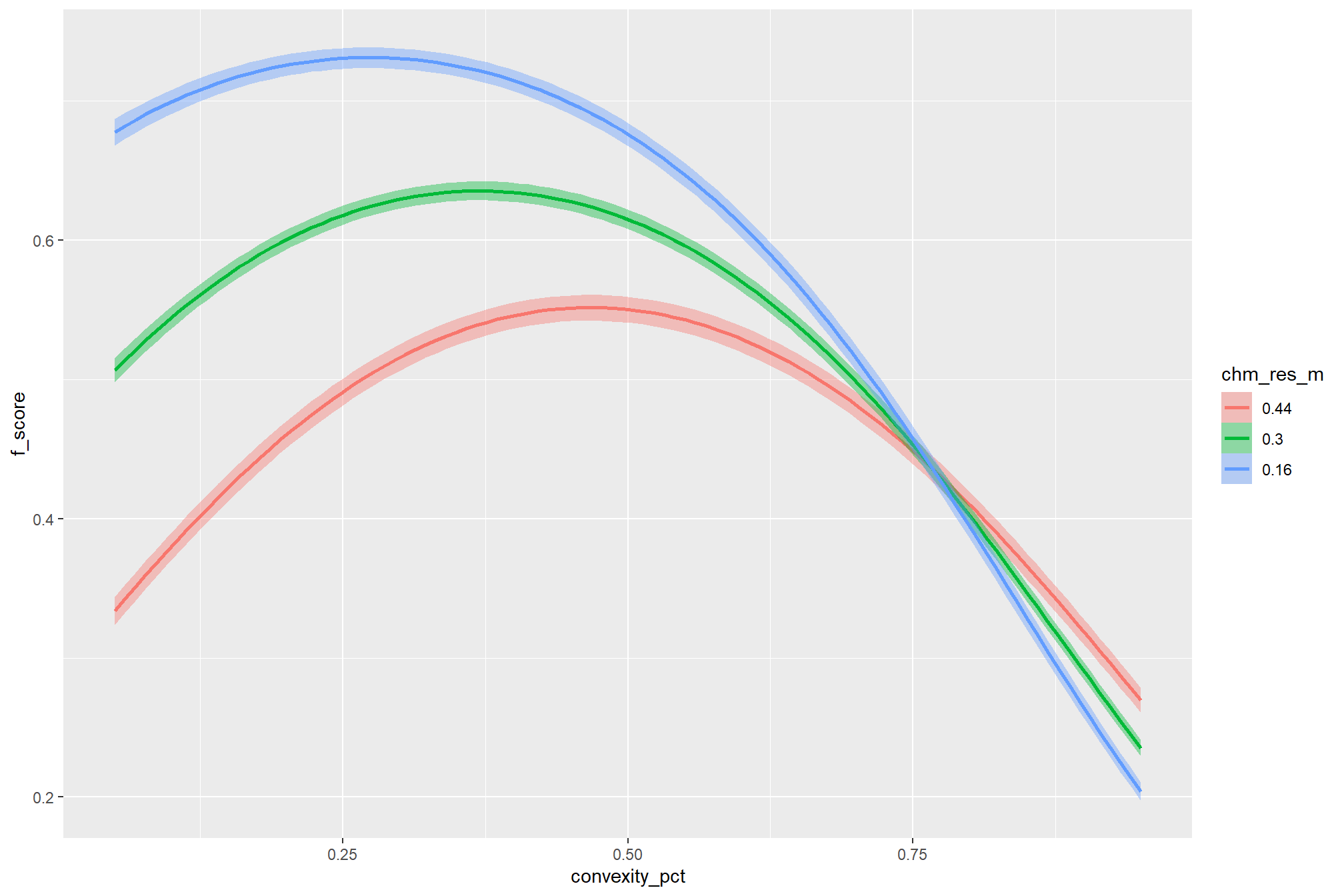

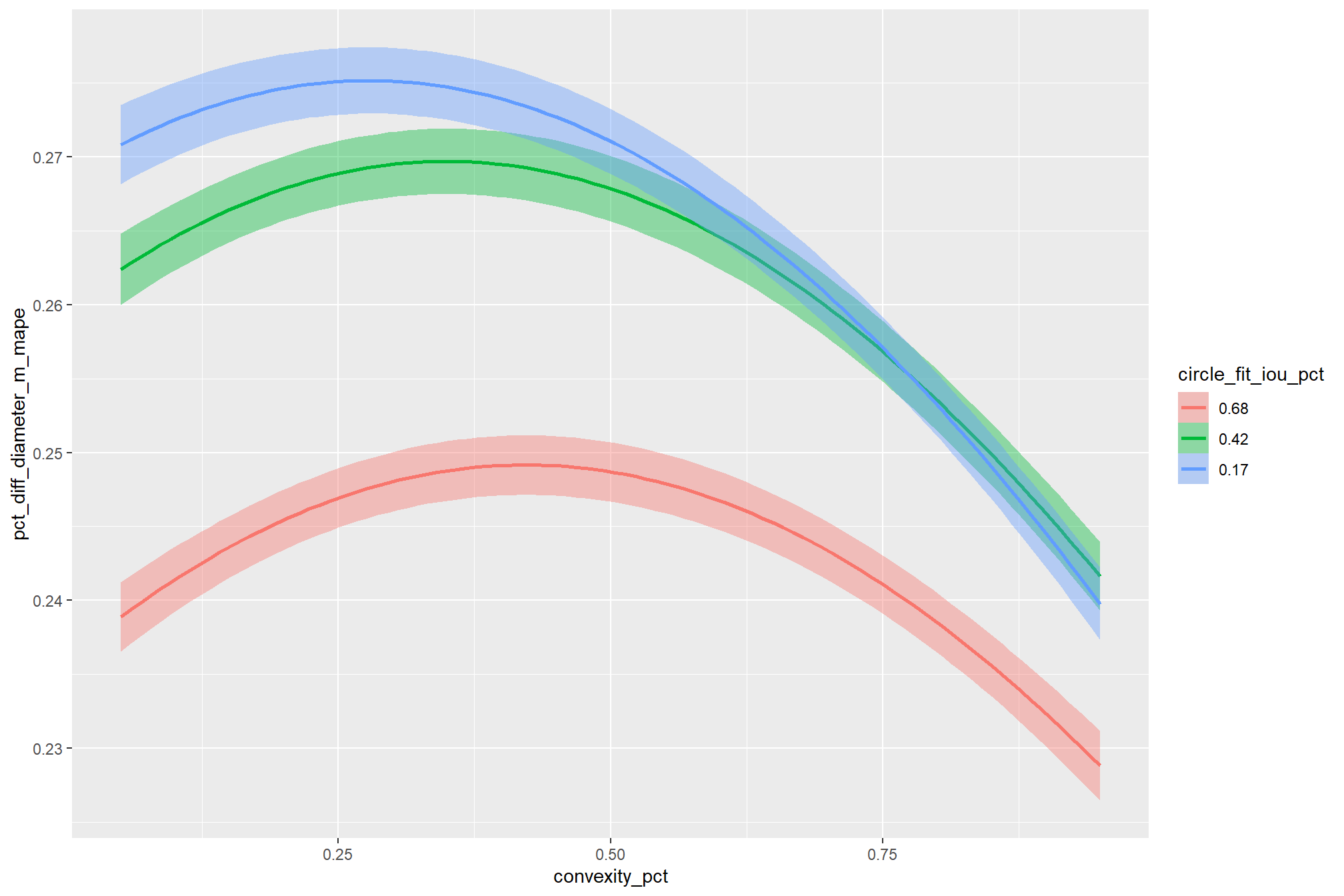

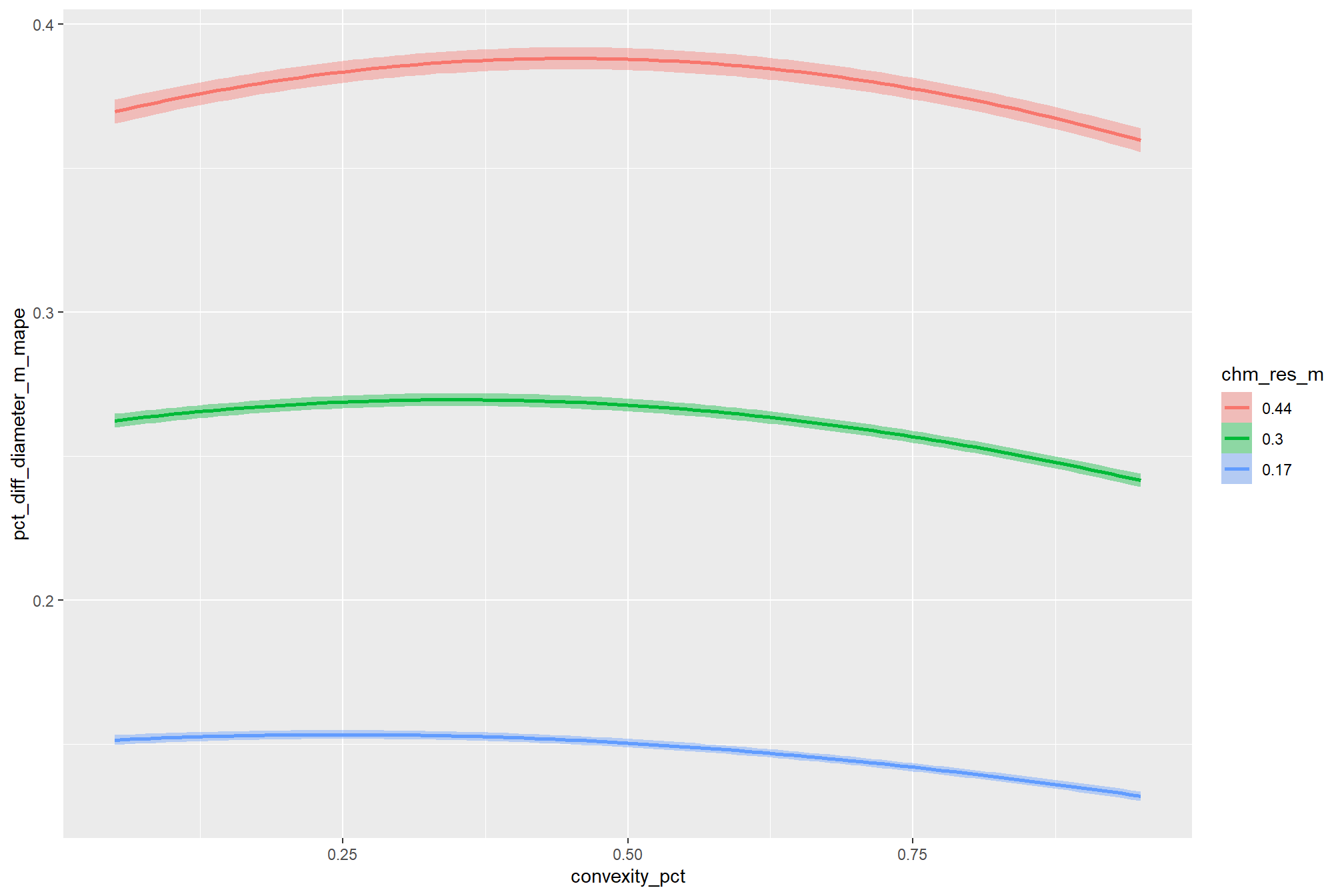

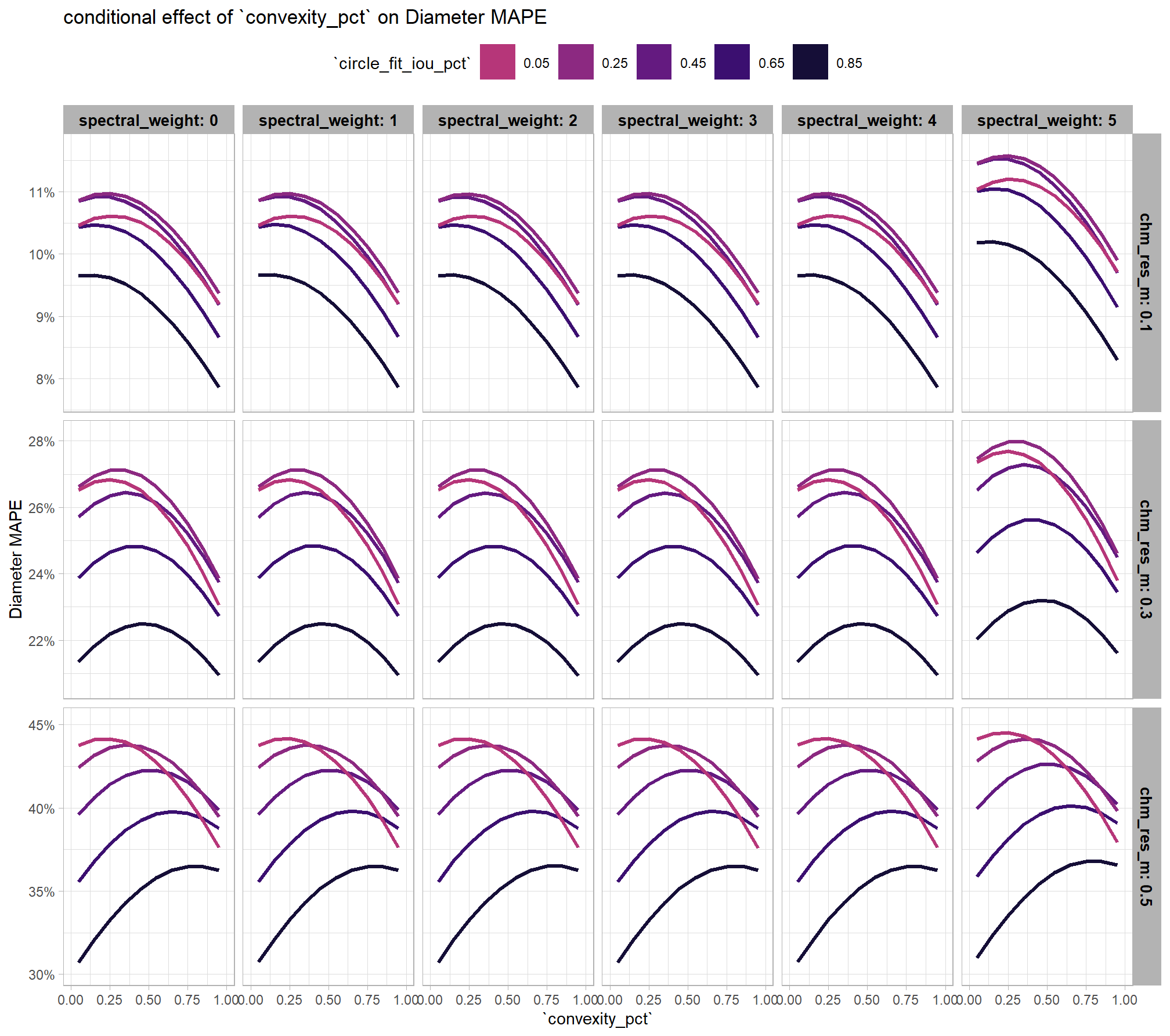

As expected, the model shows that the influence of the convexity_pct parameter on the F-score is conditional on the circle_fit_iou_pct setting. When circle_fit_iou_pct is set too high (requiring nearly perfectly circular pile perimeters), convexity_pct has a minimal impact on the F-score. When circle_fit_iou_pct is set to an optimal value near its vertex (e.g., ~0.25-0.55), the range of convexity_pct values below 0.5 result in the best pile detection for finer resolution CHM data (e.g. < 0.3 m) but for coarser resolution CHM data the optimal convexity_pct setting increases to ~0.37-0.62. Across all CHM resolutions and spectral weighting levels, setting convexity_pct too high (e.g > 0.7) results in steep declines in detection accuracy.

9.1.5.1.3 Optimizing geometric filtering

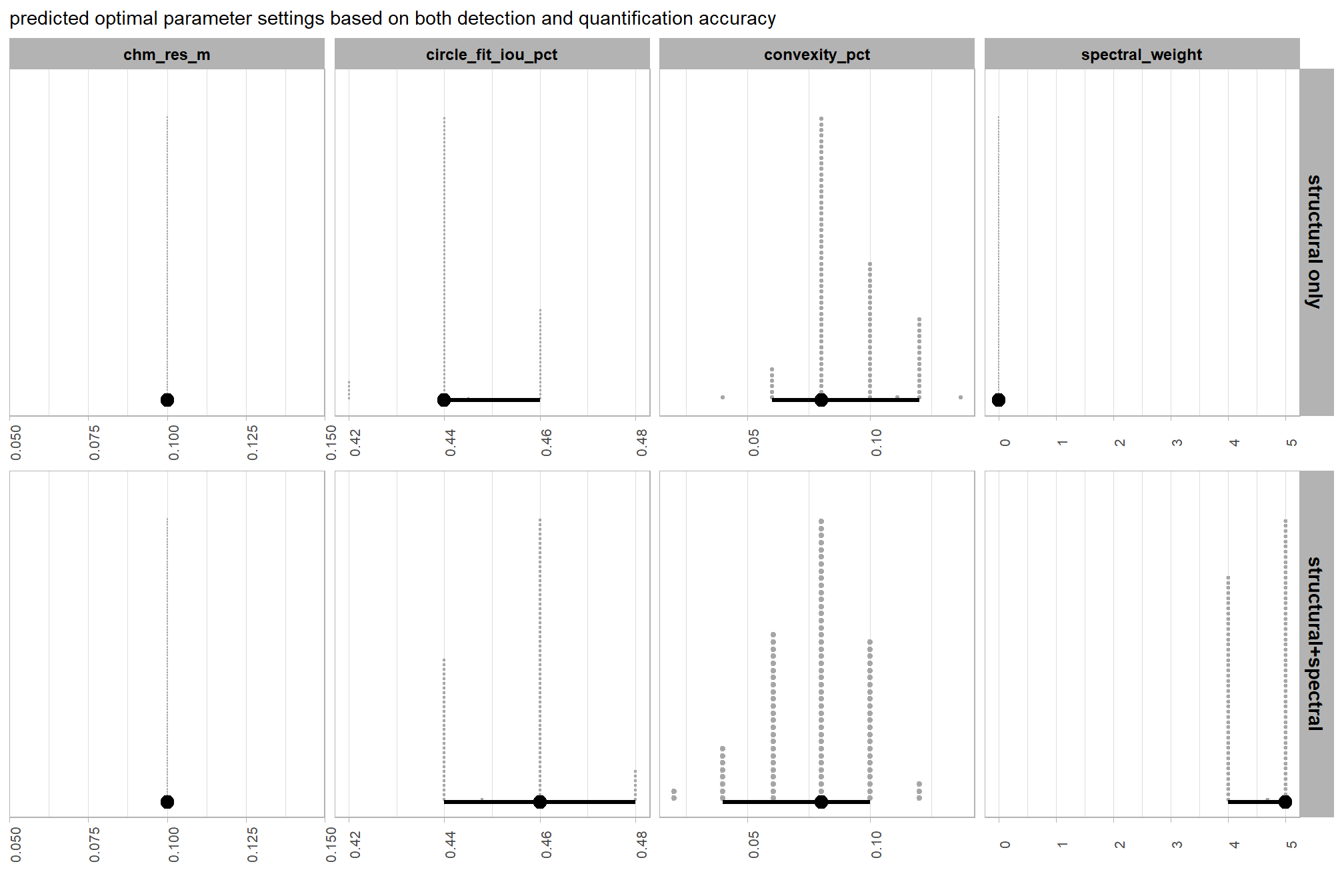

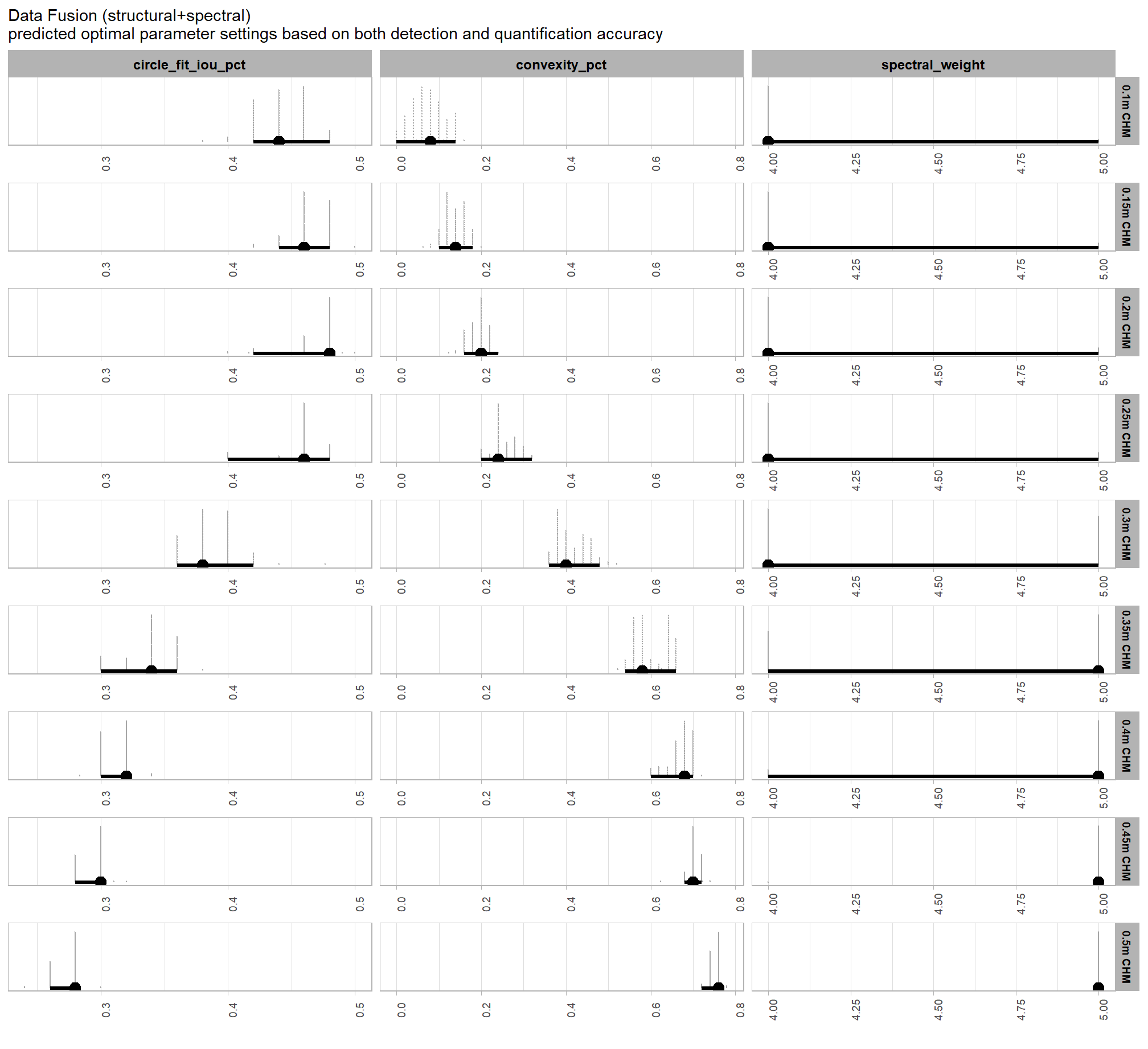

Given the complexity of our model, which includes a non-linear link function and parameter interactions, calculating the optimal parameter values by solving for them algebraically from the model’s coefficients would be prone to error. instead, we can use a robust Bayesian approach that leverages the model’s posterior predictive distribution. This method is powerful because it inherently accounts for all sources of model uncertainty.

first, we’ll generate a large number of predictions across a fine grid of parameter values (e.g. in steps of 0.01) for each posterior draw of the model coefficients. we’ll generate a large number (e.g. 1000+) of posterior predictive draws for each combination of parameter values. for each posterior predictive draw, we’ll then identify the parameter combination that maximizes the F-score and we’ll be left with a posterior distribution of optimal parameter combinations.

this approach demonstrates a key advantage of the Bayesian framework, allowing us to ask complex questions and find the most probable optimal parameter combination while fully accounting for uncertainty.

# let's get the draws at a very granular level

vertex_draws_temp <-

tidyr::crossing(

param_combos_spectral_ranked %>% dplyr::distinct(spectral_weight, spectral_weight_fact)

, circle_fit_iou_pct = seq(from = 0.0, to = 1, by = 0.01) # very granular to identify vertex

, convexity_pct = seq(from = 0.0, to = 1, by = 0.01) # very granular to identify vertex

, chm_res_m = seq(0.1,0.5,by=0.1)

, max_ht_m = structural_params_settings$max_ht_m

, max_area_m2 = structural_params_settings$max_area_m2

) %>%

tidybayes::add_epred_draws(brms_f_score_mod, ndraws = 1000, value = "value") %>%

dplyr::ungroup() %>%

# for each draw, get the highest f-score by chm_res_m, spectral_weight

# which we'll use to identify the optimal circle_fit_iou_pct,convexity_pct settings

# these are essentially "votes" based on likelihood

dplyr::group_by(

.draw

, chm_res_m, spectral_weight

) %>%

dplyr::arrange(desc(value),circle_fit_iou_pct,convexity_pct) %>%

dplyr::slice(1)

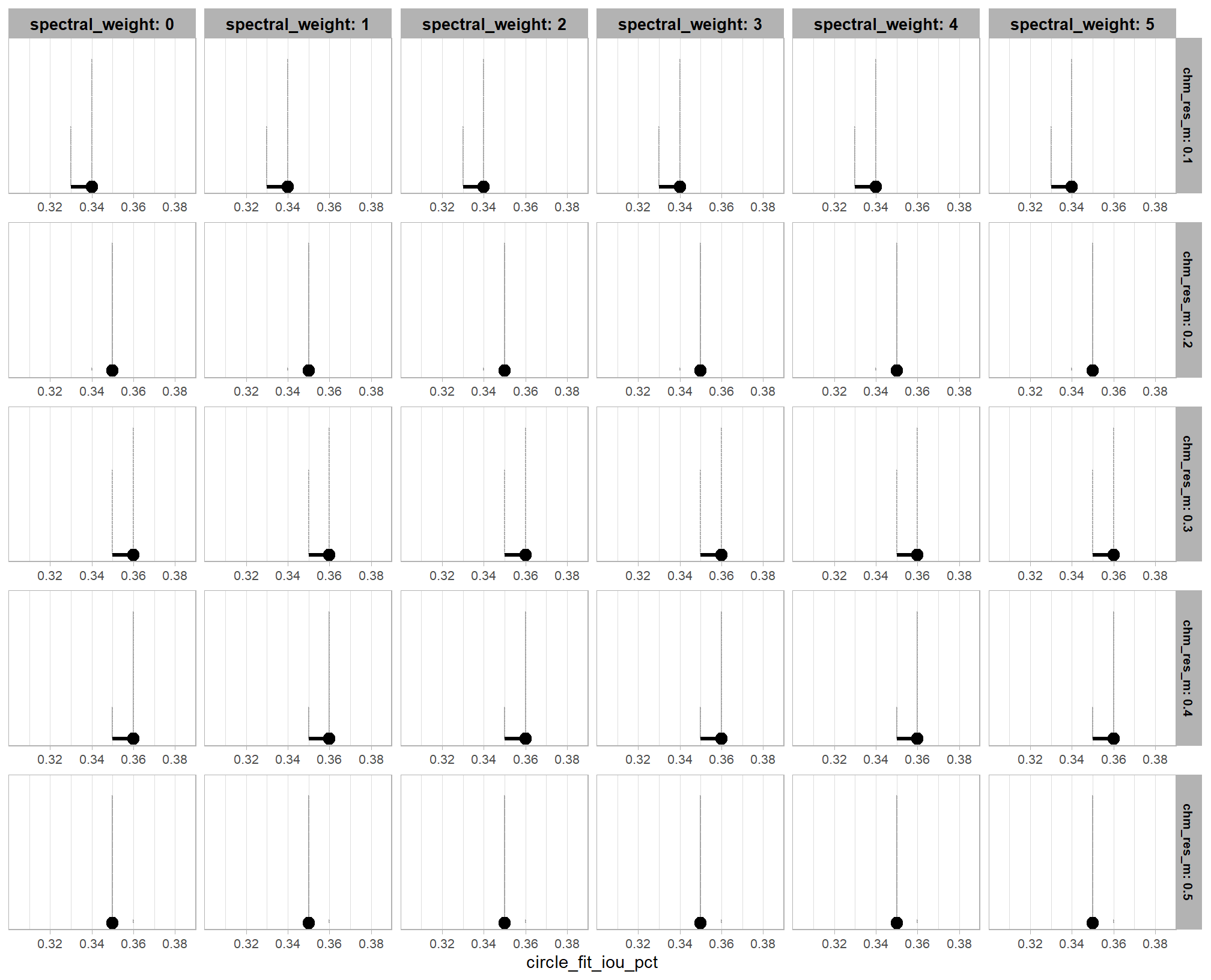

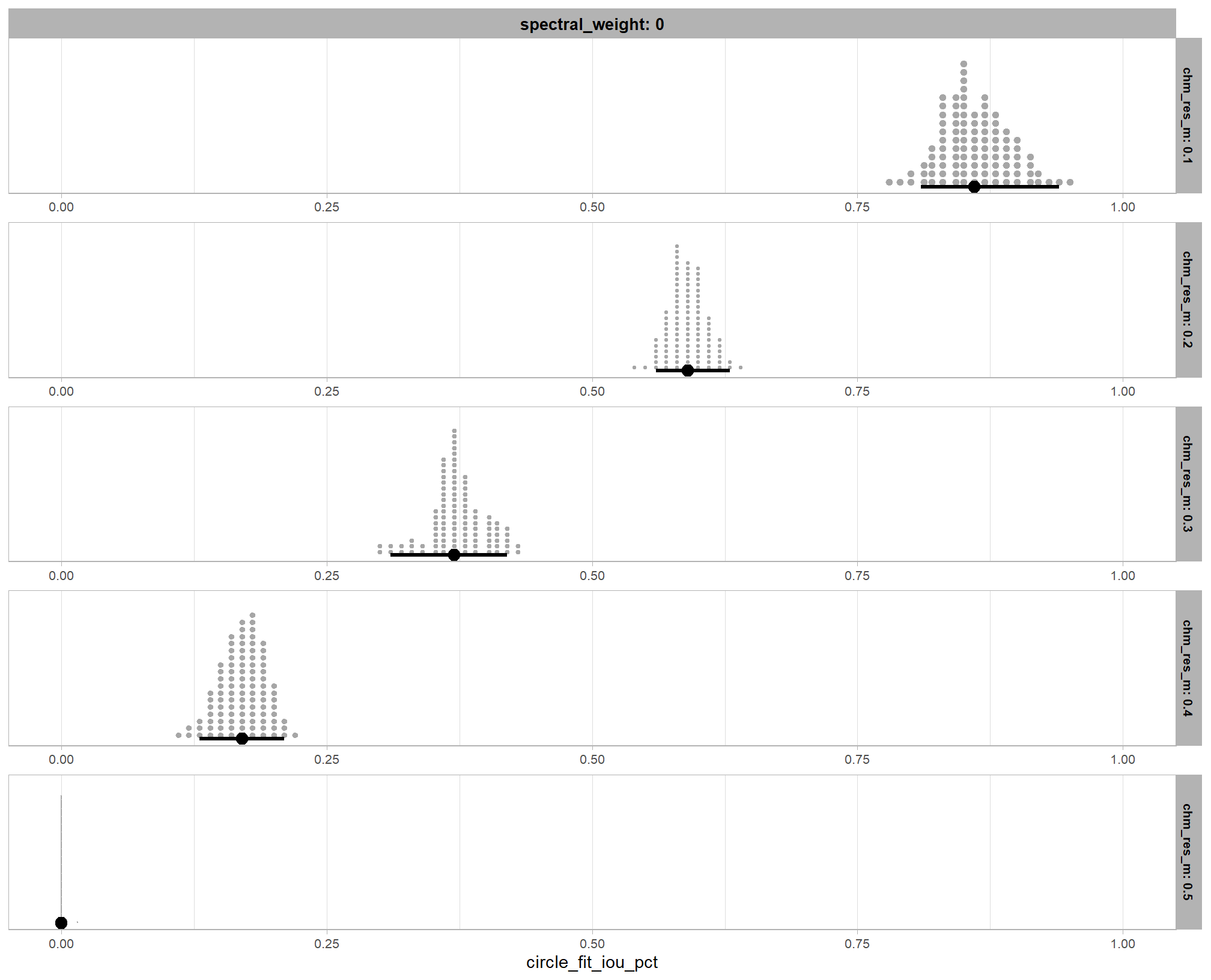

# vertex_draws_temp %>% dplyr::glimpse() # this thing is hugeplot the posterior distribution of optimal parameter setting for circle_fit_iou_pct

vertex_draws_temp %>%

# dplyr::filter(chm_res_m==0.4) %>%

dplyr::ungroup() %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = circle_fit_iou_pct)) +

tidybayes::stat_dotsinterval(

point_interval = "median_hdci", .width = c(0.95)

, quantiles = 100

, point_size = 3

) +

ggplot2::facet_grid(

cols = dplyr::vars(spectral_weight)

, rows = dplyr::vars(chm_res_m)

, scales = "free_y"

, labeller = "label_both"

, axes = "all_x"

) +

# ggplot2::scale_x_continuous(limits = c(0,1)) +

ggplot2::scale_x_continuous(expand = ggplot2::expansion(mult = 1), breaks = scales::breaks_extended(6)) +

ggplot2::scale_y_continuous(NULL, breaks = NULL) +

ggplot2::theme_light() +

ggplot2::theme(

strip.text.x = ggplot2::element_text(size = 10, color = "black", face = "bold")

, strip.text.y = ggplot2::element_text(size = 8, color = "black", face = "bold")

, axis.text.x = ggplot2::element_text(size = 8)

)

our model is very confident that the optimal circle_fit_iou_pct for maximizing detection accuracy is in a narrow range, just look at that x-axis scale

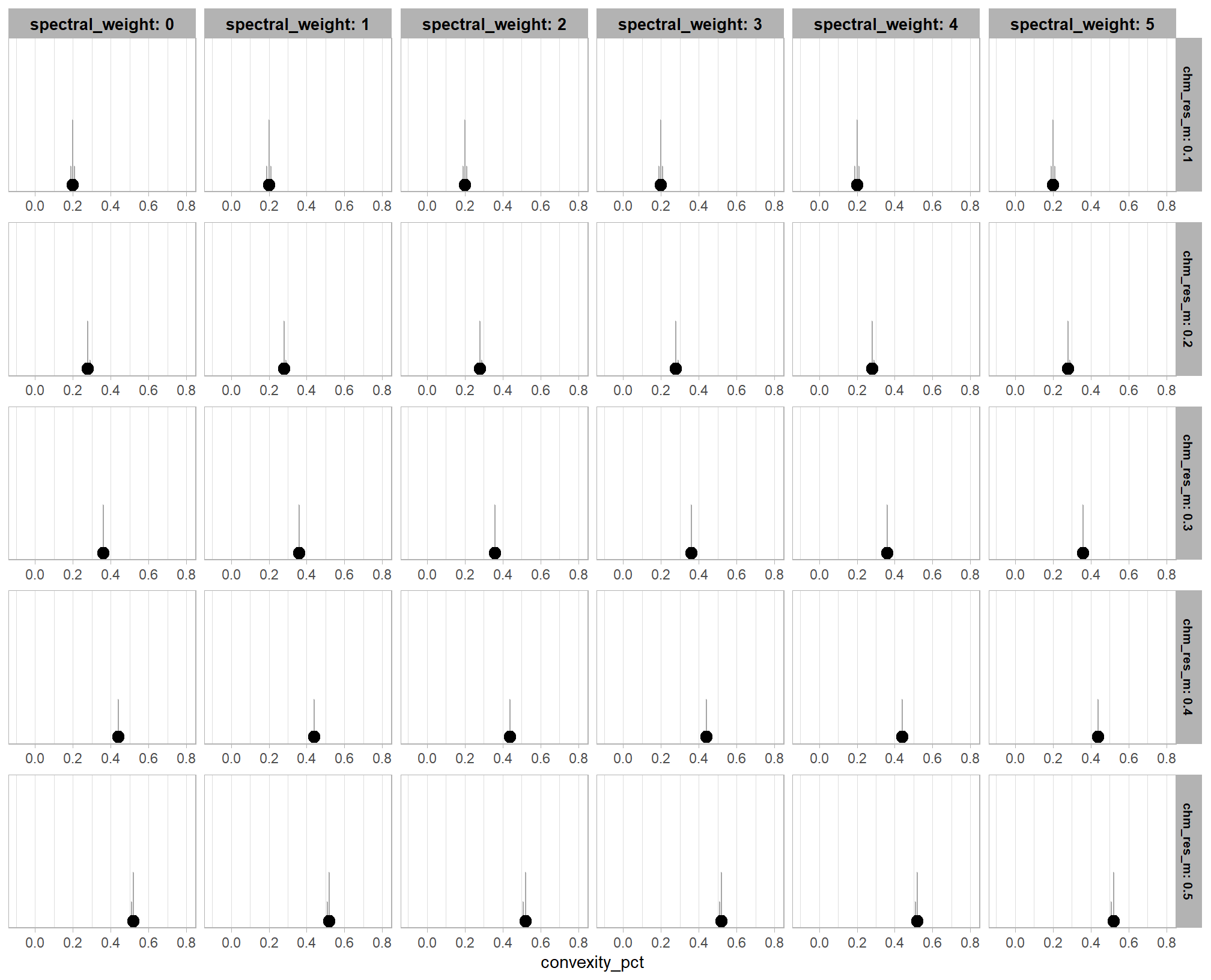

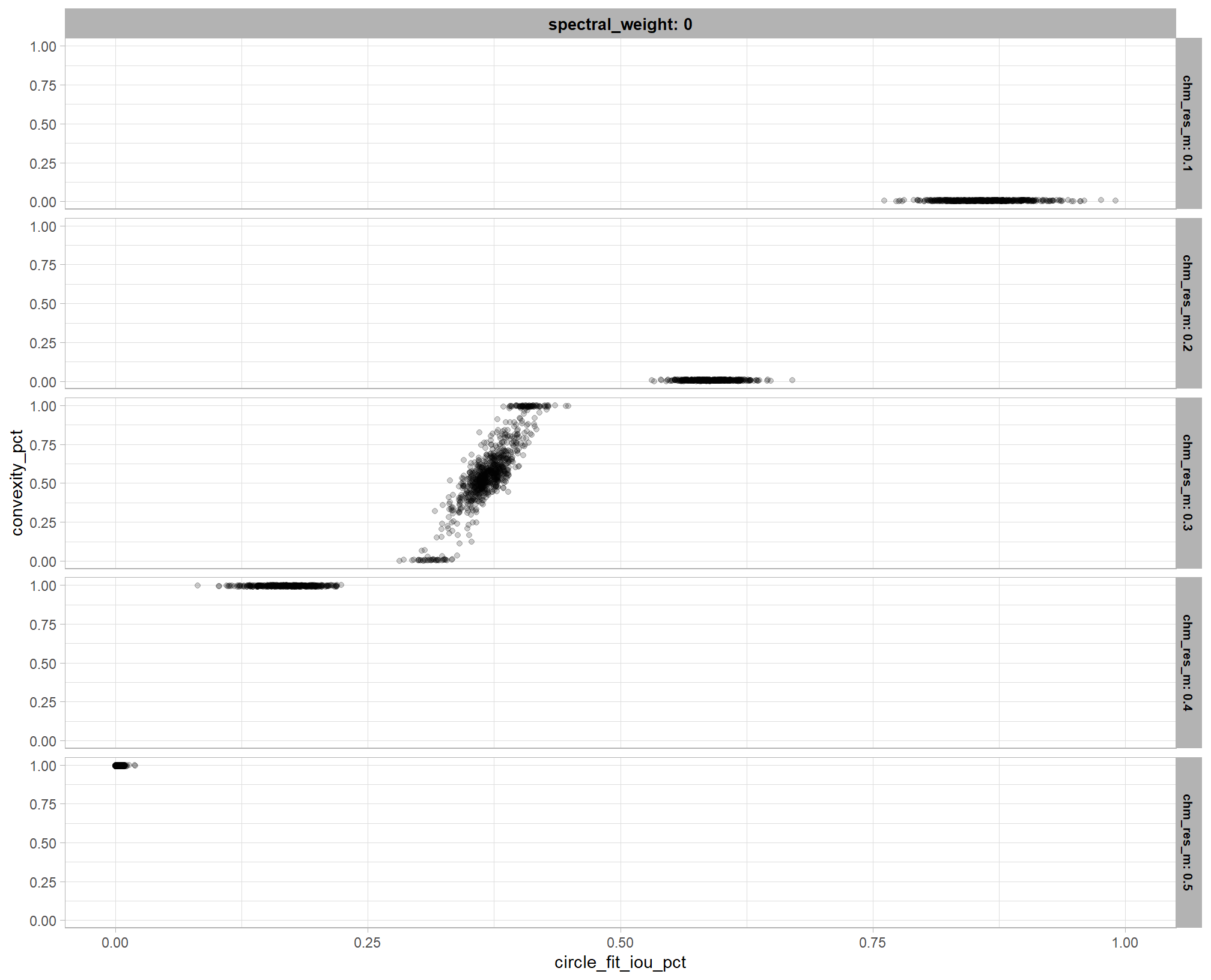

plot the posterior distribution of optimal parameter setting for convexity_pct

vertex_draws_temp %>%

dplyr::ungroup() %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = convexity_pct)) +

tidybayes::stat_dotsinterval(

point_interval = "median_hdci", .width = c(0.95)

, quantiles = 100

, point_size = 3

) +

ggplot2::facet_grid(

cols = dplyr::vars(spectral_weight)

, rows = dplyr::vars(chm_res_m)

, scales = "free_y"

, labeller = "label_both"

, axes = "all_x"

) +

# ggplot2::scale_x_continuous(limits = c(0,1)) +

ggplot2::scale_x_continuous(expand = ggplot2::expansion(mult = 1), breaks = scales::breaks_extended(6)) +

ggplot2::scale_y_continuous(NULL, breaks = NULL) +

ggplot2::theme_light() +

ggplot2::theme(

strip.text.x = ggplot2::element_text(size = 10, color = "black", face = "bold")

, strip.text.y = ggplot2::element_text(size = 8, color = "black", face = "bold")

)

when the circle_fit_iou_pct parameter is optimized, the influence of convexity_pct on detection accuracy is dependent on the CHM resolution. for coarser CHM data (>0.3 m), the model’s predictions indicate with high certainty that the optimal convexity_pct is higher (i.e. requiring more smooth boundaries) than the optimal convexity_pct setting (i.e. requiring less smooth boundaries) for finer resoultion CHM data. this finding is consistent with our previous results and provides additional evidence that the smoothing effect of coarser resolution CHM data makes convexity_pct most effective at filtering irregular objects only when it is set to an intermediate level (e.g. ~0.5).

we can look at this another way, check it

vertex_draws_temp %>%

dplyr::ungroup() %>%

ggplot2::ggplot(mapping = ggplot2::aes(y = convexity_pct, x = circle_fit_iou_pct)) +

# geom_point(alpha=0.2) +

ggplot2::geom_jitter(alpha=0.2, height = .01, width = .01) +

ggplot2::facet_grid(

cols = dplyr::vars(spectral_weight)

, rows = dplyr::vars(chm_res_m)

# , scales = "free_y"

, labeller = "label_both"

, axes = "all_x"

) +

# ggplot2::scale_x_continuous(limits = c(0,1)) +

ggplot2::scale_x_continuous(expand = ggplot2::expansion(mult = 1), breaks = scales::breaks_extended(6)) +

# ggplot2::scale_y_continuous(NULL, breaks = NULL) +

ggplot2::theme_light() +

ggplot2::theme(

strip.text.x = ggplot2::element_text(size = 10, color = "black", face = "bold")

, strip.text.y = ggplot2::element_text(size = 8, color = "black", face = "bold")

, axis.text = ggplot2::element_text(size = 8)

)

note, in the plot above, we slightly “jitter” the points so that they are visible where they would otherwise be stacked on top of each other and only look like a few points instead of the 1000 draws from the posterior we used

let’s table the HDI of the optimal values

# summarize it

table_vertex_draws_temp <- vertex_draws_temp %>%

dplyr::group_by(

chm_res_m, spectral_weight

) %>%

dplyr::summarise(

# get median_hdi

median_hdi_est_circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$y

, median_hdi_lower_circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$ymin

, median_hdi_upper_circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$ymax

# get median_hdi

, median_hdi_est_convexity_pct = tidybayes::median_hdci(convexity_pct)$y

, median_hdi_lower_convexity_pct = tidybayes::median_hdci(convexity_pct)$ymin

, median_hdi_upper_convexity_pct = tidybayes::median_hdci(convexity_pct)$ymax

) %>%

dplyr::ungroup()

# table it

table_vertex_draws_temp %>%

kableExtra::kbl(

digits = 2

, caption = ""

, col.names = c(

"CHM resolution", "spectral_weight"

, rep(c("median", "HDI low", "HDI high"),2)

)

, escape = F

) %>%

kableExtra::kable_styling() %>%

kableExtra::collapse_rows(columns = 1, valign = "top") %>%

kableExtra::add_header_above(c(

" "=2

, "circle_fit_iou_pct" = 3

, "convexity_pct" = 3

)) %>%

kableExtra::scroll_box(height = "8in")| CHM resolution | spectral_weight | median | HDI low | HDI high | median | HDI low | HDI high |

|---|---|---|---|---|---|---|---|

| 0.1 | 0 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 |

| 1 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 | |

| 2 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 | |

| 3 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 | |

| 4 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 | |

| 5 | 0.34 | 0.33 | 0.34 | 0.20 | 0.19 | 0.21 | |

| 0.2 | 0 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 |

| 1 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 | |

| 2 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 | |

| 3 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 | |

| 4 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 | |

| 5 | 0.35 | 0.35 | 0.35 | 0.28 | 0.28 | 0.29 | |

| 0.3 | 0 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 |

| 1 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 | |

| 2 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 | |

| 3 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 | |

| 4 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 | |

| 5 | 0.36 | 0.35 | 0.36 | 0.36 | 0.36 | 0.37 | |

| 0.4 | 0 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 |

| 1 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 | |

| 2 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 | |

| 3 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 | |

| 4 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 | |

| 5 | 0.36 | 0.35 | 0.36 | 0.44 | 0.43 | 0.44 | |

| 0.5 | 0 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 |

| 1 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 | |

| 2 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 | |

| 3 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 | |

| 4 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 | |

| 5 | 0.35 | 0.35 | 0.35 | 0.52 | 0.51 | 0.52 |

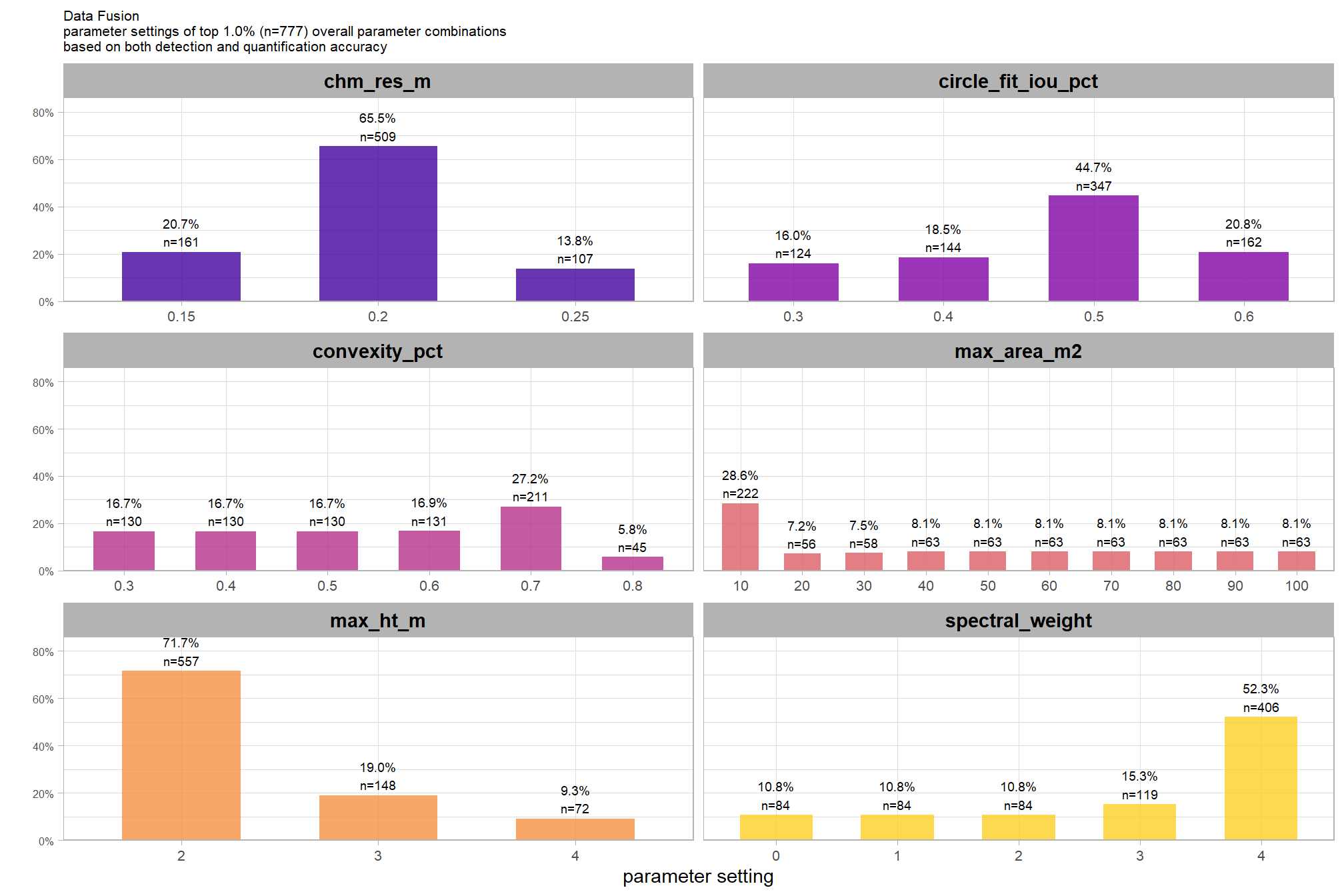

to fix our structural parameter levels so that we can continue to explore the influence of the input data, we’ll select the median of the optimal setting of circle_fit_iou_pct and convexity_pct by CHM resolution levels and spectral weighting. This will result in no one CHM resolution and spectral weighting combination using it’s optimal circle_fit_iou_pct and convexity_pct setting but will provide us with values in the plausible range of optimal settings that work across the CHM resolutions tested with empirical data.

structural_params_settings <-

vertex_draws_temp %>%

dplyr::ungroup() %>%

dplyr::summarise(

# get median_hdi

circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$y

# , median_hdi_lower_circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$ymin

# , median_hdi_upper_circle_fit_iou_pct = tidybayes::median_hdci(circle_fit_iou_pct)$ymax

# get median_hdi

, convexity_pct = tidybayes::median_hdci(convexity_pct)$y

# , median_hdi_lower_convexity_pct = tidybayes::median_hdci(convexity_pct)$ymin

# , median_hdi_upper_convexity_pct = tidybayes::median_hdci(convexity_pct)$ymax

) %>%

dplyr::bind_cols(

structural_params_settings

)

# what?

structural_params_settings %>% dplyr::glimpse()## Rows: 1

## Columns: 4

## $ circle_fit_iou_pct <dbl> 0.35

## $ convexity_pct <dbl> 0.36

## $ max_ht_m <dbl> 2.3

## $ max_area_m2 <dbl> 469.1.5.2 Input data

to explore the influence of the input data, which includes the presence or absence of spectral data and its weighting (i.e. spectral_weight) as well as the CHM resolution (chm_res_m), we will fix all four structural parameters (max_ht_m, max_area_m2, convexity_pct, circle_fit_iou_pct). the max_ht_m, max_area_m2 parameters will be fixed at expected levels based on the slash pile construction prescription while the convexity_pct, circle_fit_iou_pct will be fixed at the optimal levels determined based on the Bayesian posterior predictive distribution of detection accuracy

we’ll make contrasts of the posterior predictions to probabilistically quantify the influence of the input data (e.g. inclusion of spectral data and it’s weighting and CHM resolution). before we do this we’re going to borrow code from (Tinkham and Woolsey 2024) to make and plot the Bayesian contrasts

############################################

# make the variables for the contrast

############################################

make_contrast_vars <- function(my_data){

my_data %>%

dplyr::mutate(

# get median_hdi

median_hdi_est = tidybayes::median_hdci(value)$y

, median_hdi_lower = tidybayes::median_hdci(value)$ymin

, median_hdi_upper = tidybayes::median_hdci(value)$ymax

# check probability of contrast

, pr_gt_zero = mean(value > 0) %>%

scales::percent(accuracy = 1)

, pr_lt_zero = mean(value < 0) %>%

scales::percent(accuracy = 1)

# check probability that this direction is true

, is_diff_dir = dplyr::case_when(

median_hdi_est >= 0 ~ value > 0

, median_hdi_est < 0 ~ value < 0

)

, pr_diff = mean(is_diff_dir)

# make a label

, pr_diff_lab = dplyr::case_when(

median_hdi_est > 0 ~ paste0(

"Pr("

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr("

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

)

# make a SMALLER label

, pr_diff_lab_sm = dplyr::case_when(

median_hdi_est >= 0 ~ paste0(

"Pr(>0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr(<0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

)

, pr_diff_lab_pos = dplyr::case_when(

median_hdi_est > 0 ~ median_hdi_upper

, median_hdi_est < 0 ~ median_hdi_lower

) * 1.075

, sig_level = dplyr::case_when(

pr_diff > 0.99 ~ 0

, pr_diff > 0.95 ~ 1

, pr_diff > 0.9 ~ 2

, pr_diff > 0.8 ~ 3

, T ~ 4

) %>%

factor(levels = c(0:4), labels = c(">99%","95%","90%","80%","<80%"), ordered = T)

)

}

############################################

# plot the contrast

############################################

plt_contrast <- function(

my_data

, x = "value"

, y = "contrast"

, fill = "pr_diff"

, label = "pr_diff_lab"

, label_pos = "pr_diff_lab_pos"

, label_size = 3

, x_expand = c(0.1, 0.1)

, facet = NA

, y_axis_title = ""

, x_axis_title = "difference"

, caption_text = "" # form_temp

, annotate_size = 2.2

, annotate_which = "both" # "both", "left", "right"

, include_zero = T

) {

# df for annotation

get_annotation_df <- function(

my_text_list = c(

"Bottom Left (h0,v0)","Top Left (h0,v1)"

,"Bottom Right h1,v0","Top Right h1,v1"

)

, hjust = c(0,0,1,1) # higher values = right, lower values = left

, vjust = c(0,1.3,0,1.3) # higher values = down, lower values = up

){

df = data.frame(

xpos = c(-Inf,-Inf,Inf,Inf)

, ypos = c(-Inf, Inf,-Inf,Inf)

, annotate_text = my_text_list

, hjustvar = hjust

, vjustvar = vjust

)

return(df)

}

if(annotate_which=="left"){

text_list <- c(

"","L.H.S. < R.H.S."

,"",""

)

}else if(annotate_which=="right"){

text_list <- c(

"",""

,"","L.H.S. > R.H.S."

)

}else{

text_list <- c(

"","L.H.S. < R.H.S."

,"","L.H.S. > R.H.S."

)

}

# plot base

plt <-

my_data %>%

ggplot(aes(x = .data[[x]], y = .data[[y]]))

if(include_zero){

plt <- plt + geom_vline(xintercept = 0, linetype = "solid", color = "gray33", lwd = 1.1)

}

# plot meat

plt <- plt +

tidybayes::stat_halfeye(

mapping = aes(fill = .data[[fill]])

, point_interval = median_hdi, .width = c(0.5,0.95)

# , slab_fill = "gray22", slab_alpha = 1

, interval_color = "black", point_color = "black", point_fill = "black"

, point_size = 0.9

, justification = -0.01

) +

geom_text(

data = get_annotation_df(

my_text_list = text_list

)

, mapping = aes(

x = xpos, y = ypos

, hjust = hjustvar, vjust = vjustvar

, label = annotate_text

, fontface = "bold"

)

, size = annotate_size

, color = "gray30" # "#2d2a4d" #"#204445"

) +

# scale_fill_fermenter(

# n.breaks = 5 # 10 use 10 if can go full range 0-1

# , palette = "PuOr" # "RdYlBu"

# , direction = 1

# , limits = c(0.5,1) # use c(0,1) if can go full range 0-1

# , labels = scales::percent

# ) +

scale_fill_stepsn(

n.breaks = 5 # 10 use 10 if can go full range 0-1

, colors = harrypotter::hp(n=5, option="ravenclaw", direction = -1) # RColorBrewer::brewer.pal(11,"PuOr")[c(3,4,8,10,11)]

, limits = c(0.5,1) # use c(0,1) if can go full range 0-1

, labels = scales::percent

) +

scale_x_continuous(expand = expansion(mult = x_expand)) +

labs(

y = y_axis_title

, x = x_axis_title

, fill = "Pr(contrast)"

, subtitle = "posterior predictive distribution of group constrasts with 95% & 50% HDI"

, caption = caption_text

) +

theme_light() +

theme(

legend.text = element_text(size = 7)

, legend.title = element_text(size = 8)

, axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1.05)

, strip.text = element_text(color = "black", face = "bold")

) +

guides(fill = guide_colorbar(theme = theme(

legend.key.width = unit(1, "lines"),

legend.key.height = unit(12, "lines")

)))

# label or not

if(!is.na(label) && !is.na(label_pos) && !is.na(label_size)){

plt <- plt +

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos>=0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

, facet

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = 0, size = label_size

) +

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos<0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

, facet

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = +1, size = label_size

)

}

# return facet or not

if(max(is.na(facet))==0){

return(

plt +

facet_grid(cols = vars(.data[[facet]]))

)

}

else{return(plt)}

}now get the posterior predictive draws

draws_temp <-

tidyr::crossing(

structural_params_settings

, param_combos_spectral_ranked %>% dplyr::distinct(spectral_weight_fact, spectral_weight)

, param_combos_spectral_ranked %>% dplyr::distinct(chm_res_m)

) %>%

tidybayes::add_epred_draws(brms_f_score_mod, ndraws = 1111) %>%

dplyr::rename(value = .epred)

# # huh?

draws_temp %>% dplyr::glimpse()## Rows: 33,330

## Columns: 12

## Groups: circle_fit_iou_pct, convexity_pct, max_ht_m, max_area_m2, spectral_weight_fact, spectral_weight, chm_res_m, .row [30]

## $ circle_fit_iou_pct <dbl> 0.35, 0.35, 0.35, 0.35, 0.35, 0.35, 0.35, 0.35, 0…

## $ convexity_pct <dbl> 0.36, 0.36, 0.36, 0.36, 0.36, 0.36, 0.36, 0.36, 0…

## $ max_ht_m <dbl> 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3, 2.3,…

## $ max_area_m2 <dbl> 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 46, 4…

## $ spectral_weight_fact <fct> structural only, structural only, structural only…

## $ spectral_weight <fct> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0…

## $ chm_res_m <dbl> 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,…

## $ .row <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ .chain <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ .iteration <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15…

## $ value <dbl> 0.8102877, 0.8088862, 0.8099895, 0.8118048, 0.811…9.1.5.2.1 CHM resolution

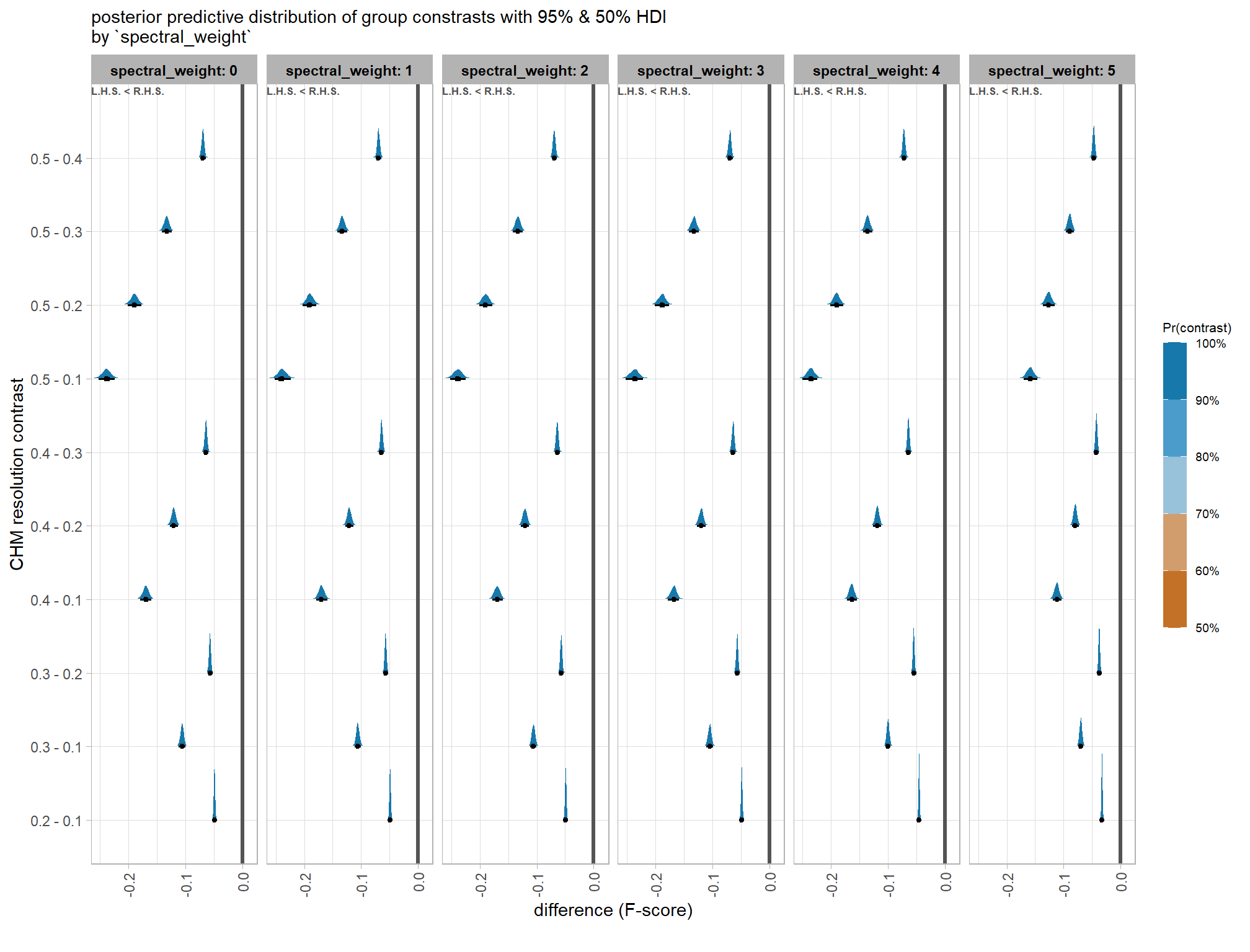

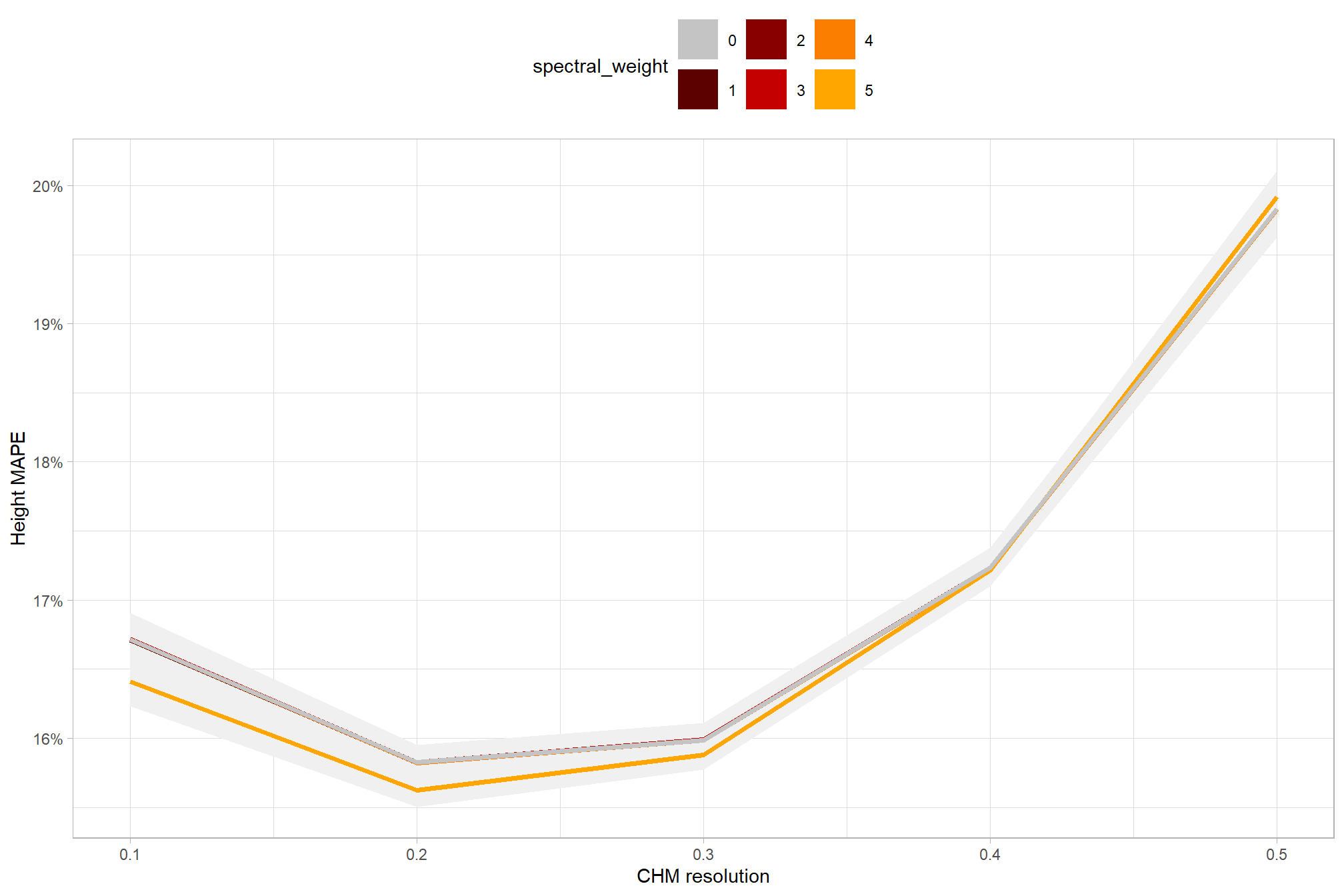

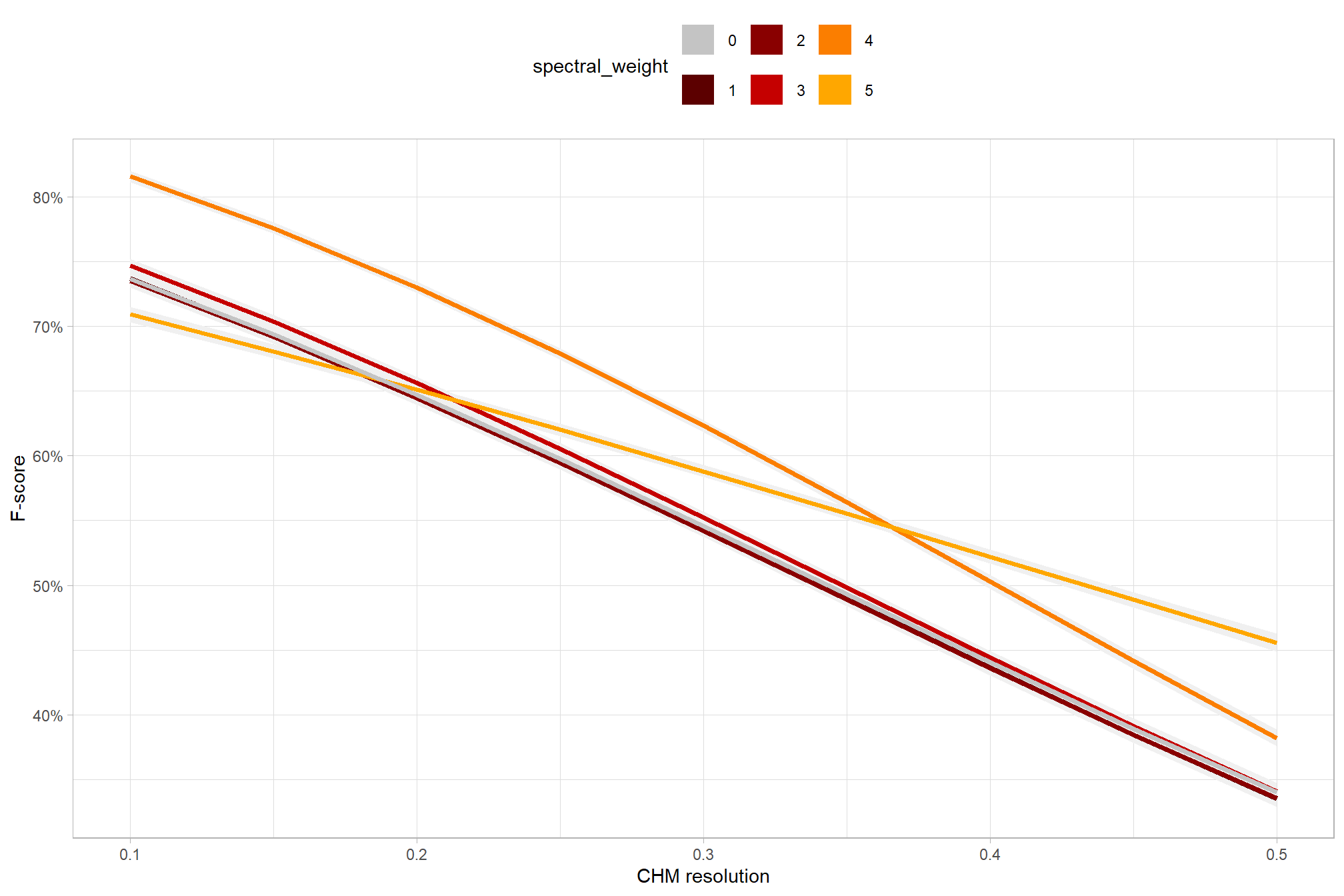

first, we’ll look at the impact of changing CHM resolution by the spectral_weight parameter where a value of “0” indicates no spectral data was used (i.e. structural only), the lowest weighting of the spectral data is “1” (only one spectral index threshold must be met), and the highest weighting of spectral data is “5” (all spectral index thresholds must be met).

our questions regarding CHM resolution were:

- question 1: does CHM resolution influences detection accuracy?

- question 2: does the effect of CHM resolution change based on the inclusion of spectral data versus using only structural data?

we can answer those questions using our model by considering the credible slope of the CHM resolution predictor as a function of the spectral_weight parameter (e.g. Kurz 2025; Kruschke (2015, Ch. 18))

pal_spectral_weight <- c("gray77", harrypotter::hp(n=5, option = "gryffindor", direction = 1)) # %>% scales::show_col()

# brms::posterior_summary(brms_f_score_mod)

draws_temp %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = chm_res_m, y = value, color = spectral_weight)) +

# tidybayes::stat_halfeye() +

tidybayes::stat_lineribbon(point_interval = "median_hdi", .width = c(0.95)) +

# ggplot2::facet_wrap(facets = dplyr::vars(spectral_weight))

ggplot2::scale_fill_brewer(palette = "Greys") +

ggplot2::scale_color_manual(values = pal_spectral_weight) +

ggplot2::scale_y_continuous(labels = scales::percent) +

ggplot2::labs(x = "CHM resolution", y = "F-score") +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top"

) +

ggplot2::guides(

color = ggplot2::guide_legend(override.aes = list(shape = 15, lwd = 8, fill = NA))

, fill = "none"

)

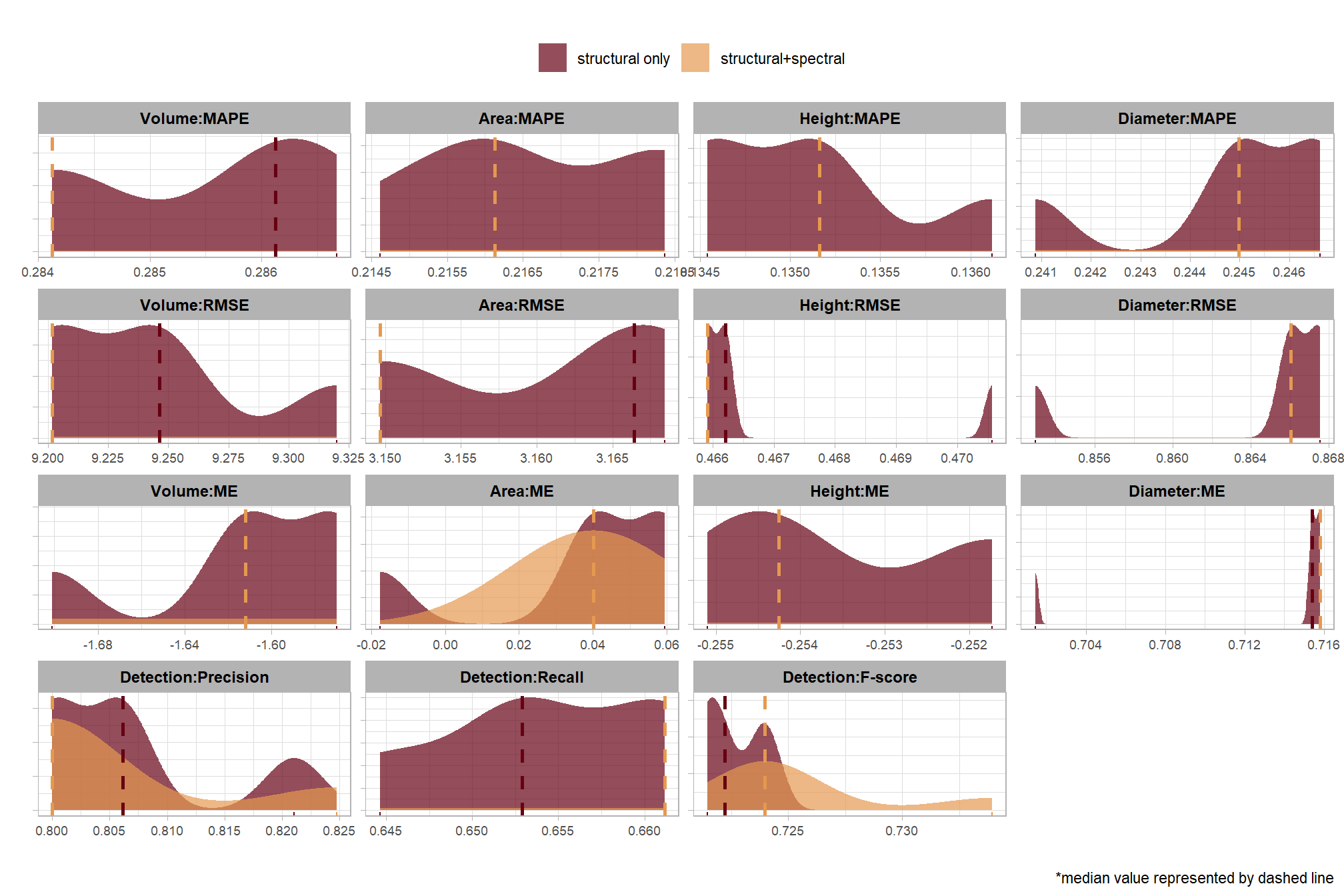

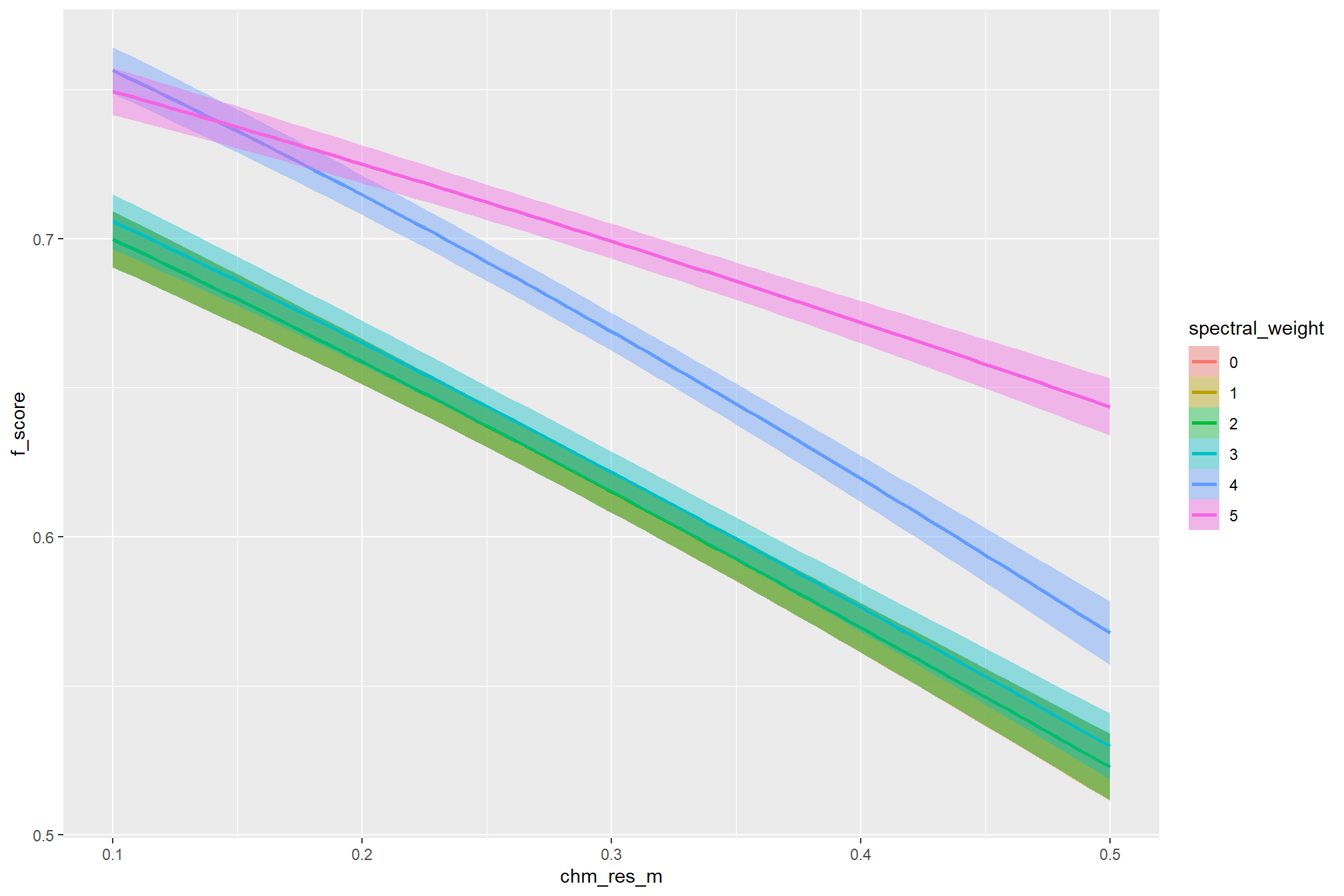

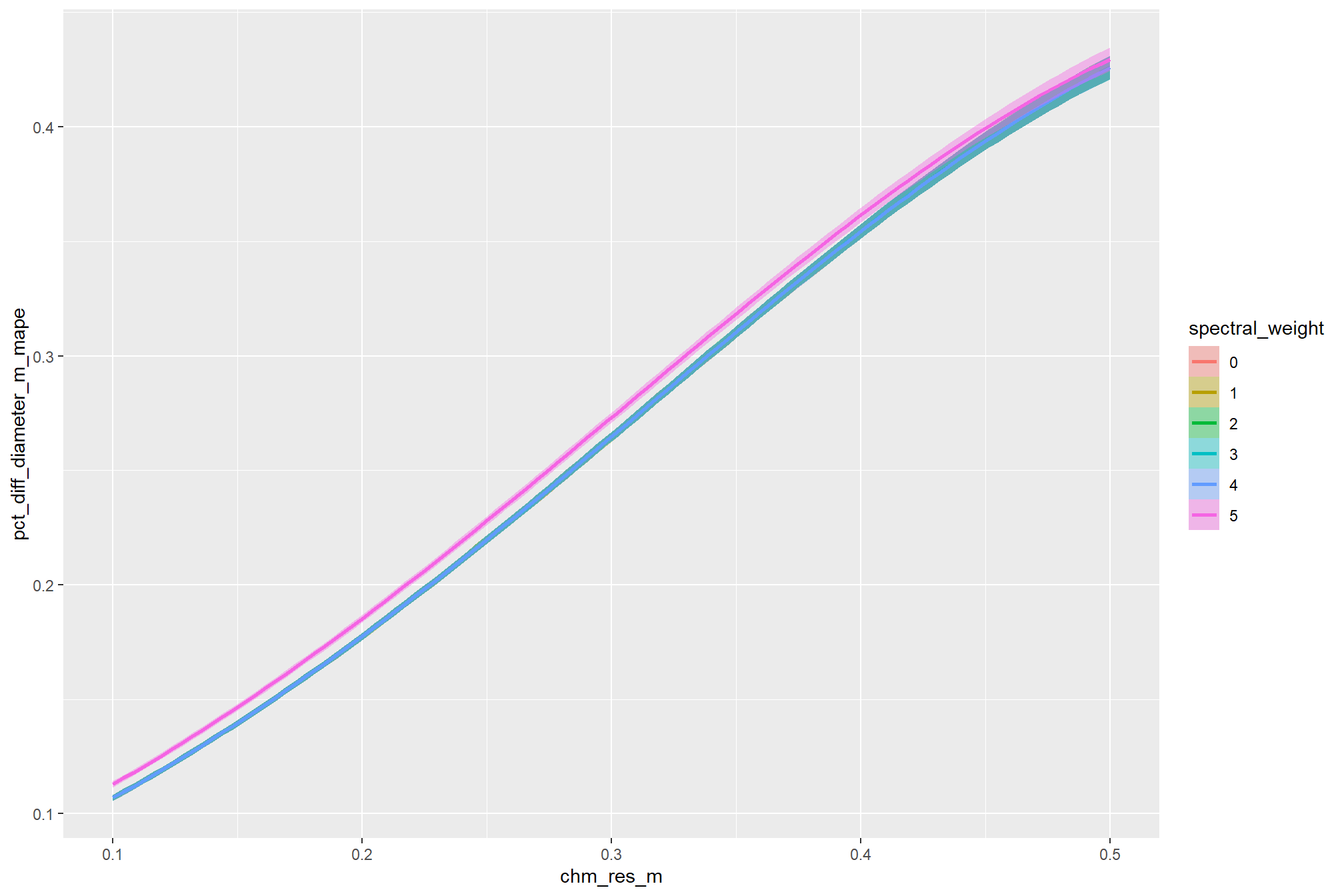

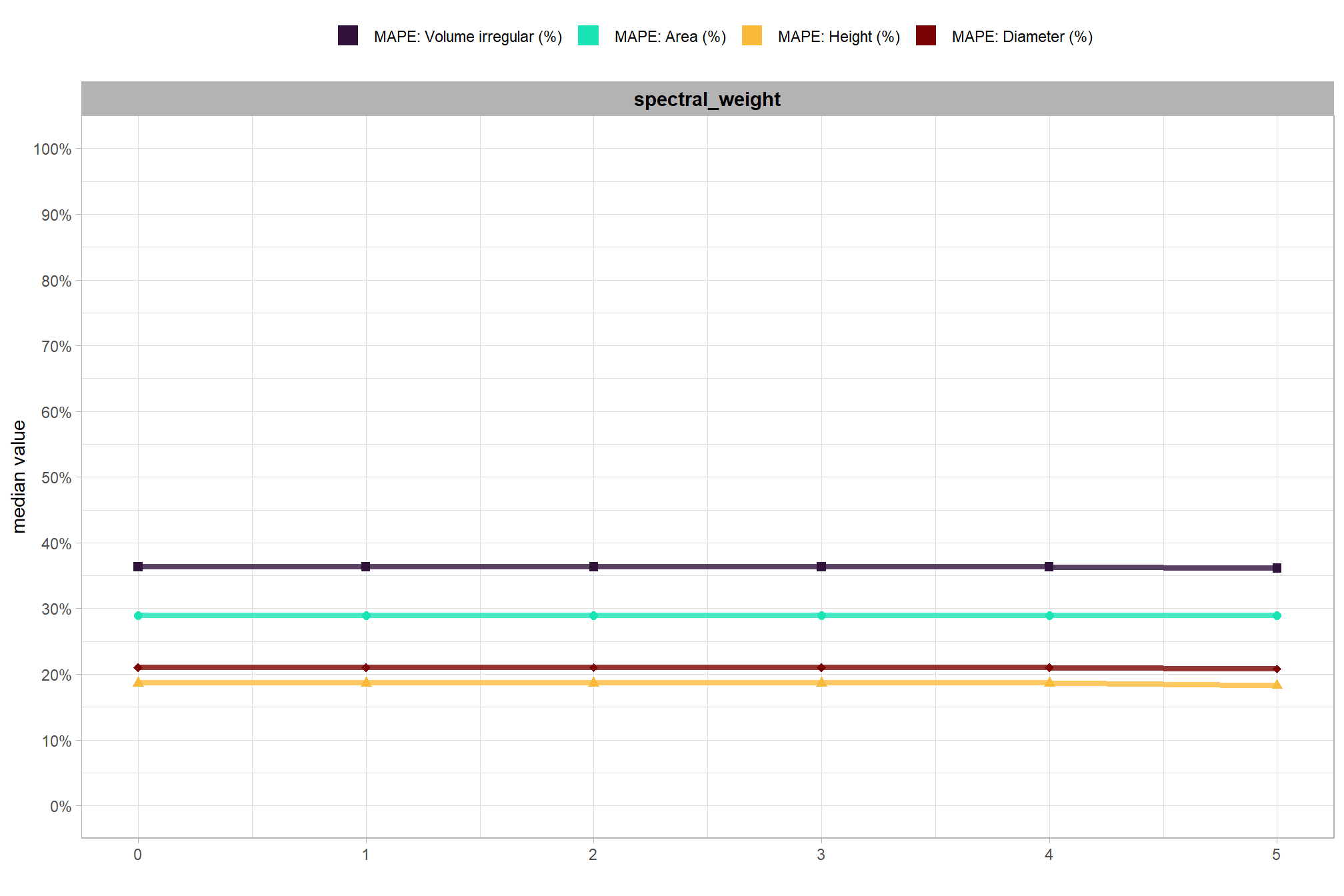

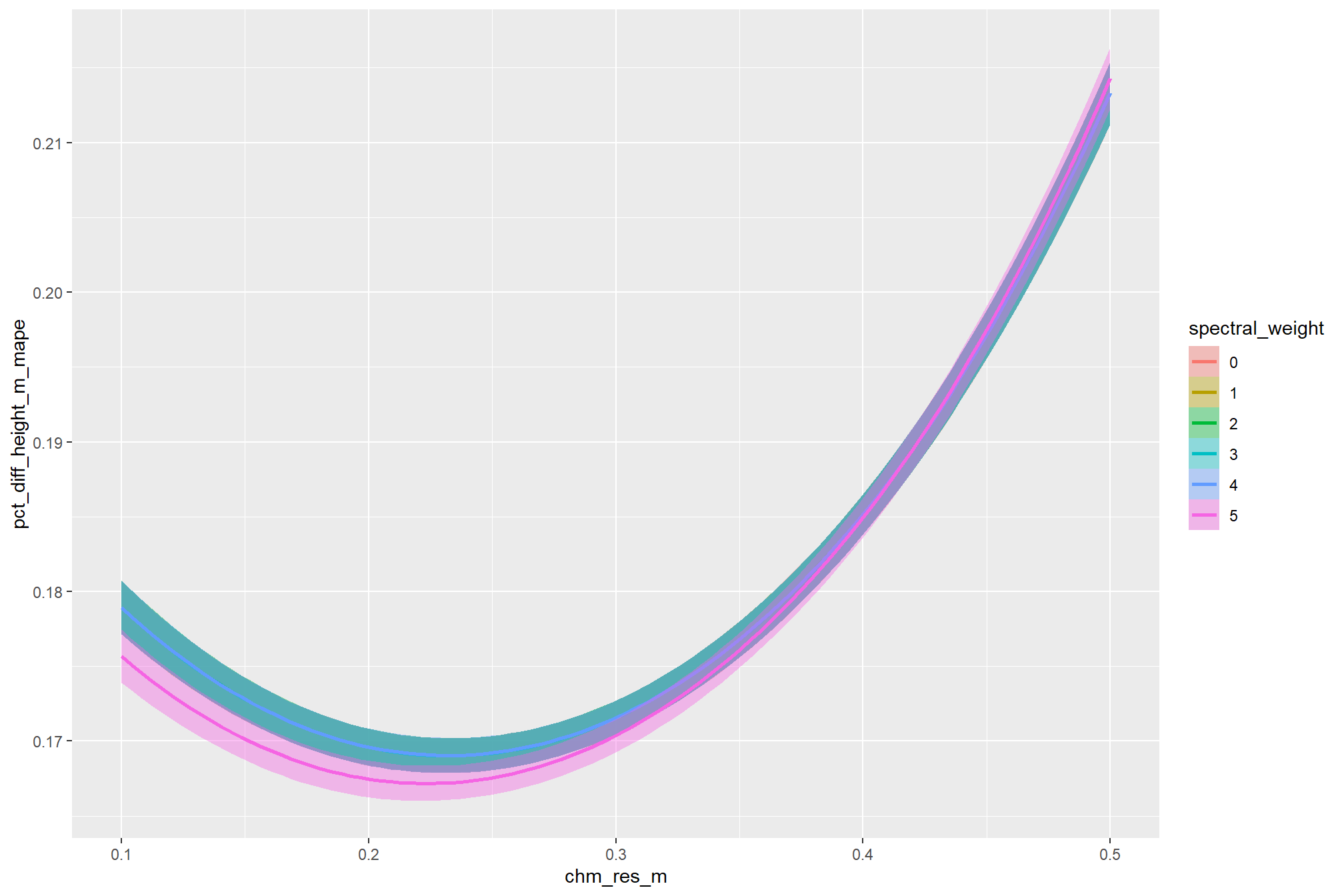

there are a few takeaways from this plot:

- including spectral data and setting the

spectral_weightto “1”, “2”, or “3” appears to be not much different than not including spectral data at all (but we’ll probabilistically test this later on) - including spectral data and setting the

spectral_weightto “4” yields a larger negative impact of decreasing CHM resolution on detection accuracy - including spectral data and setting the

spectral_weightto “5” yields a smaller negative impact of decreasing CHM resolution on detection accuracy and beyond a CHM resolution of ~0.13 m, detection accuracy is maximized when setting thespectral_weightto “5”. this makes intuitive sense because as the structural information about slash piles becomes less fine grain (i.e. more coarse), we should put more weight into the spectral data for detecting slash piles

all of this is to say that the impact of CHM resolution varies based on the spectral_weight setting and vice-versa

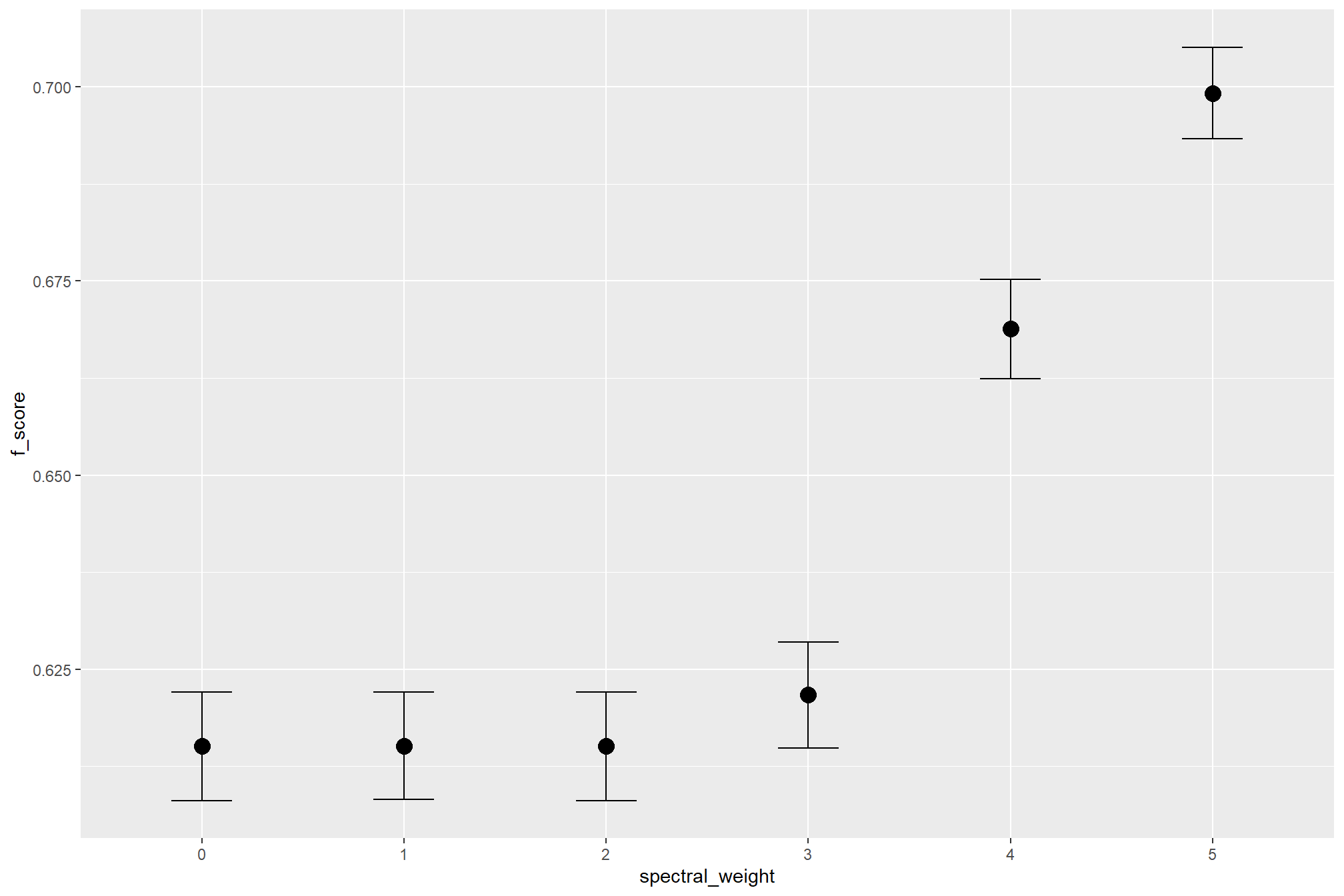

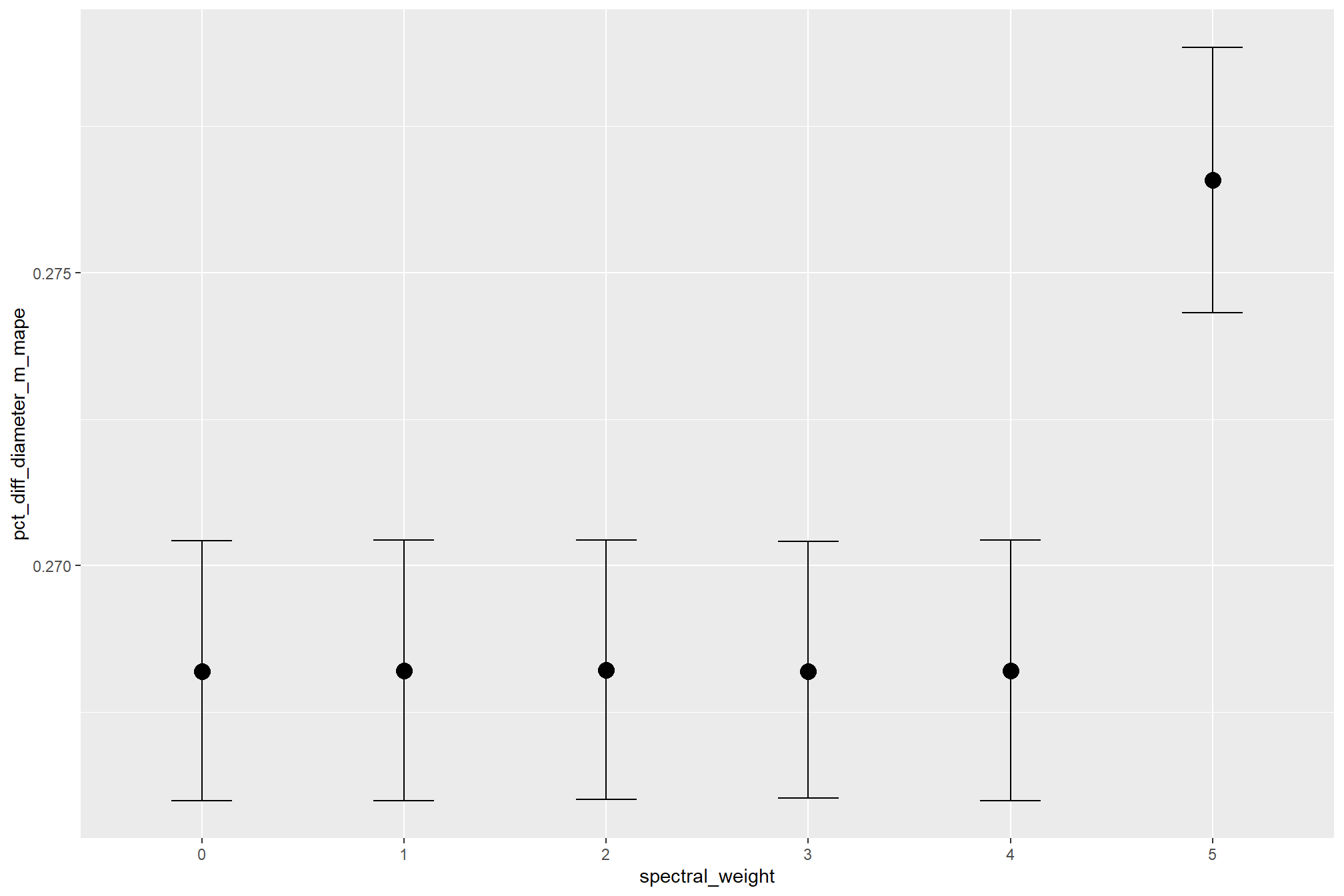

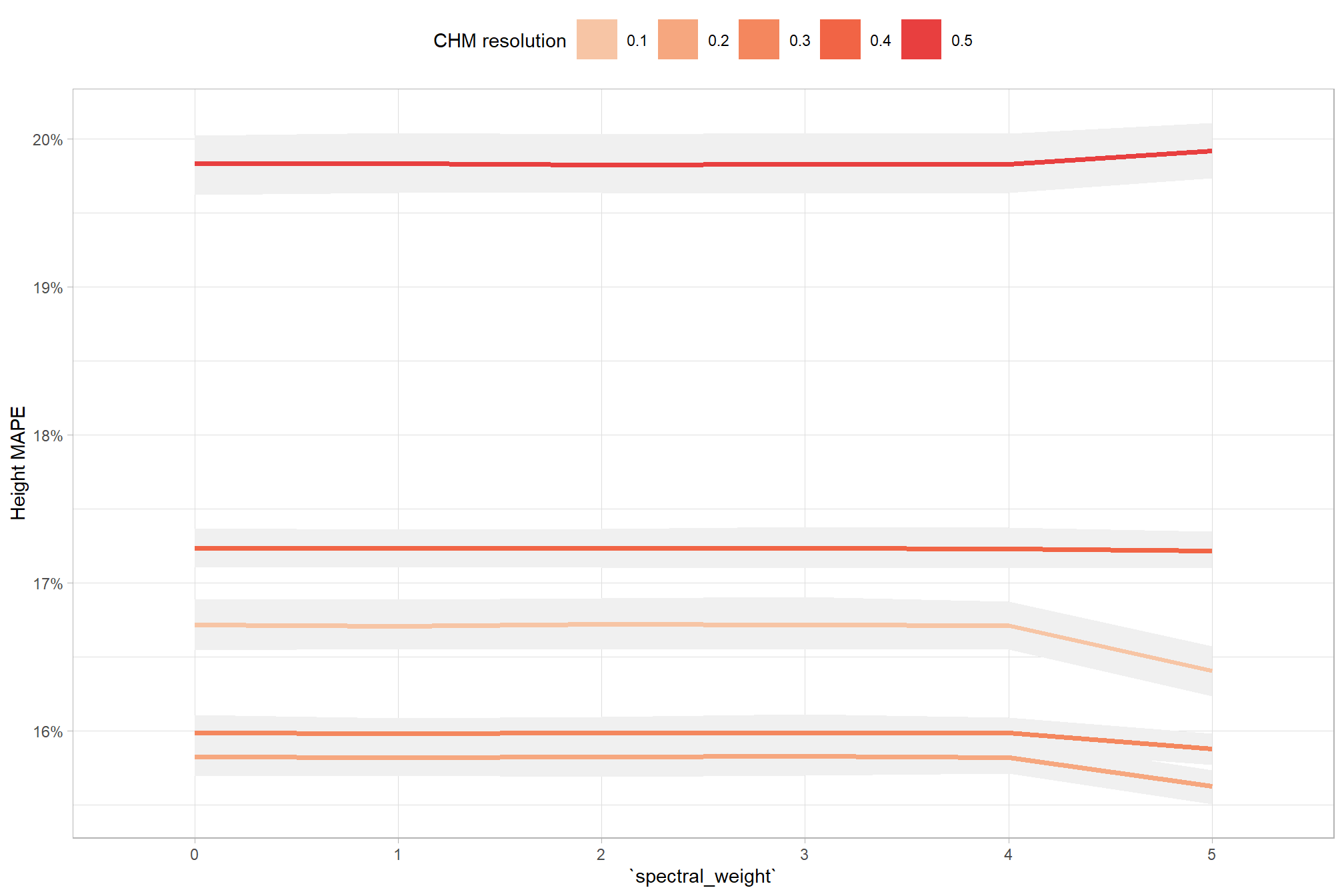

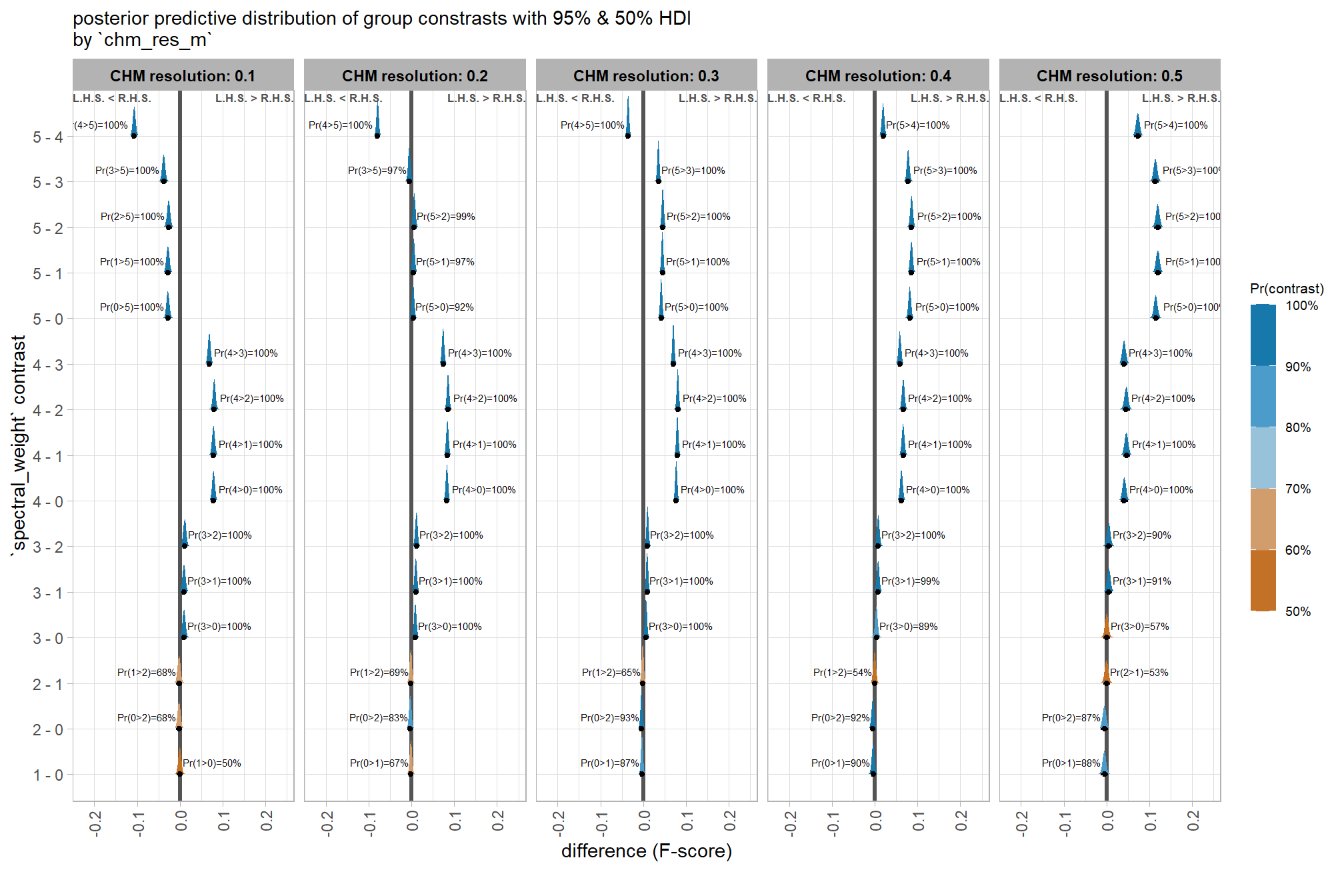

averaging across all other parameters to look at the main effect of including spectral data with the spectral_weight parameter, these results align with what we saw during our data summarization exploration: increasing the spectral_weight (where “5” requires all spectral index thresholds to be met) had minimal impact on metrics until a value of “3”, at which point F-score saw a minimal increase; at a spectral_weight of “4”, the F-score significantly improved, but at a value of “5” F-score was lower than not including the spectral data at all when CHM resolution was fine (e.g. <0.3) but for coarse resolution CHM data the spectral data became more important for detection accuracy.

let’s test including predictions at CHM resolutions outside of the bounds of the data tested (e.g. > 0.5 m resolution)

tidyr::crossing(

structural_params_settings

, param_combos_spectral_ranked %>% dplyr::distinct(spectral_weight_fact, spectral_weight)

, chm_res_m = seq(0.05,1,by = 0.05)

) %>%

tidybayes::add_epred_draws(brms_f_score_mod, ndraws = 1111) %>%

dplyr::rename(value = .epred) %>%

ggplot2::ggplot(mapping = ggplot2::aes(x = chm_res_m, y = value, color = spectral_weight)) +

# tidybayes::stat_halfeye() +

tidybayes::stat_lineribbon(point_interval = "median_hdi", .width = c(0.95)) +

# ggplot2::facet_wrap(facets = dplyr::vars(spectral_weight))

ggplot2::scale_fill_brewer(palette = "Greys") +

ggplot2::scale_color_manual(values = pal_spectral_weight) +

ggplot2::scale_y_continuous(labels = scales::percent) +

ggplot2::scale_x_continuous(breaks = scales::breaks_extended(n=8)) +

ggplot2::labs(x = "CHM resolution", y = "F-score") +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "top"

) +

ggplot2::guides(

color = ggplot2::guide_legend(override.aes = list(shape = 15, lwd = 8, fill = NA))

, fill = "none"

)

even at the coarse CHM resolutions not represented in the data, the model is still confident that coarser resolution CHM data decreases detection accuracy

we can look at the posterior distributions of the expected F-score at different CHM resolution levels by the inclusion (or exclusion) of spectral data and it’s weighting

draws_temp %>%

ggplot2::ggplot(mapping = ggplot2::aes(y=value, x=chm_res_m)) +

tidybayes::stat_eye(

mapping = ggplot2::aes(fill = spectral_weight)

, point_interval = median_hdi, .width = .95

, slab_alpha = 0.95

, interval_color = "gray44", linewidth = 1

, point_color = "gray44", point_fill = "gray44", point_size = 1

) +

ggplot2::facet_grid(cols = dplyr::vars(spectral_weight), labeller = "label_both") +

ggplot2::scale_fill_manual(values = pal_spectral_weight) +

ggplot2::scale_y_continuous(limits = c(0,NA), labels = scales::percent, breaks = scales::breaks_extended(16)) +

ggplot2::labs(x = "CHM resolution", y = "F-score") +

ggplot2::theme_light() +

ggplot2::theme(

legend.position = "none"

, strip.text = ggplot2::element_text(size = 8, color = "black", face = "bold")

)

table that

draws_temp %>%

dplyr::mutate(spectral_weight = forcats::fct_relabel(spectral_weight,~paste0("spectral_weight: ", .x, recycle0 = T))) %>%

dplyr::group_by(spectral_weight, chm_res_m) %>%

dplyr::summarise(

# get median_hdi

median_hdi_est = tidybayes::median_hdci(value)$y

, median_hdi_lower = tidybayes::median_hdci(value)$ymin

, median_hdi_upper = tidybayes::median_hdci(value)$ymax

) %>%

dplyr::ungroup() %>%

dplyr::arrange(spectral_weight, chm_res_m) %>%

# format pcts

dplyr::mutate(dplyr::across(

tidyselect::starts_with("median_hdi_")

, ~ scales::percent(.x, accuracy = 0.1)

)) %>%

kableExtra::kbl(

digits = 2

, caption = "F-score<br>95% HDI of the posterior predictive distribution"

, col.names = c(

"spectral_weight", "CHM resolution"

, c("F-score<br>median", "HDI low", "HDI high")

)

, escape = F

) %>%

kableExtra::kable_styling() %>%

kableExtra::collapse_rows(columns = 1, valign = "top") %>%

kableExtra::scroll_box(height = "8in")| spectral_weight | CHM resolution |

F-score median |

HDI low | HDI high |

|---|---|---|---|---|

| spectral_weight: 0 | 0.1 | 80.9% | 80.2% | 81.5% |

| 0.2 | 76.0% | 75.3% | 76.6% | |

| 0.3 | 70.3% | 69.7% | 70.9% | |

| 0.4 | 63.9% | 63.1% | 64.7% | |

| 0.5 | 57.0% | 55.8% | 58.1% | |

| spectral_weight: 1 | 0.1 | 80.9% | 80.2% | 81.6% |

| 0.2 | 76.0% | 75.4% | 76.7% | |

| 0.3 | 70.3% | 69.7% | 71.0% | |

| 0.4 | 63.9% | 63.1% | 64.7% | |

| 0.5 | 57.0% | 55.9% | 58.1% | |

| spectral_weight: 2 | 0.1 | 80.9% | 80.2% | 81.6% |

| 0.2 | 76.0% | 75.4% | 76.7% | |

| 0.3 | 70.3% | 69.6% | 70.9% | |

| 0.4 | 63.9% | 63.1% | 64.8% | |

| 0.5 | 57.0% | 55.8% | 58.1% | |

| spectral_weight: 3 | 0.1 | 81.3% | 80.7% | 82.1% |

| 0.2 | 76.5% | 76.0% | 77.3% | |

| 0.3 | 70.9% | 70.3% | 71.5% | |

| 0.4 | 64.6% | 63.8% | 65.4% | |

| 0.5 | 57.7% | 56.4% | 58.7% | |

| spectral_weight: 4 | 0.1 | 84.9% | 84.3% | 85.5% |

| 0.2 | 80.4% | 79.9% | 80.9% | |

| 0.3 | 74.9% | 74.4% | 75.6% | |

| 0.4 | 68.5% | 67.8% | 69.3% | |

| 0.5 | 61.4% | 60.2% | 62.4% | |

| spectral_weight: 5 | 0.1 | 84.4% | 84.0% | 85.0% |

| 0.2 | 81.2% | 80.8% | 81.7% | |

| 0.3 | 77.5% | 77.0% | 78.0% | |

| 0.4 | 73.3% | 72.6% | 73.9% | |

| 0.5 | 68.6% | 67.6% | 69.5% |

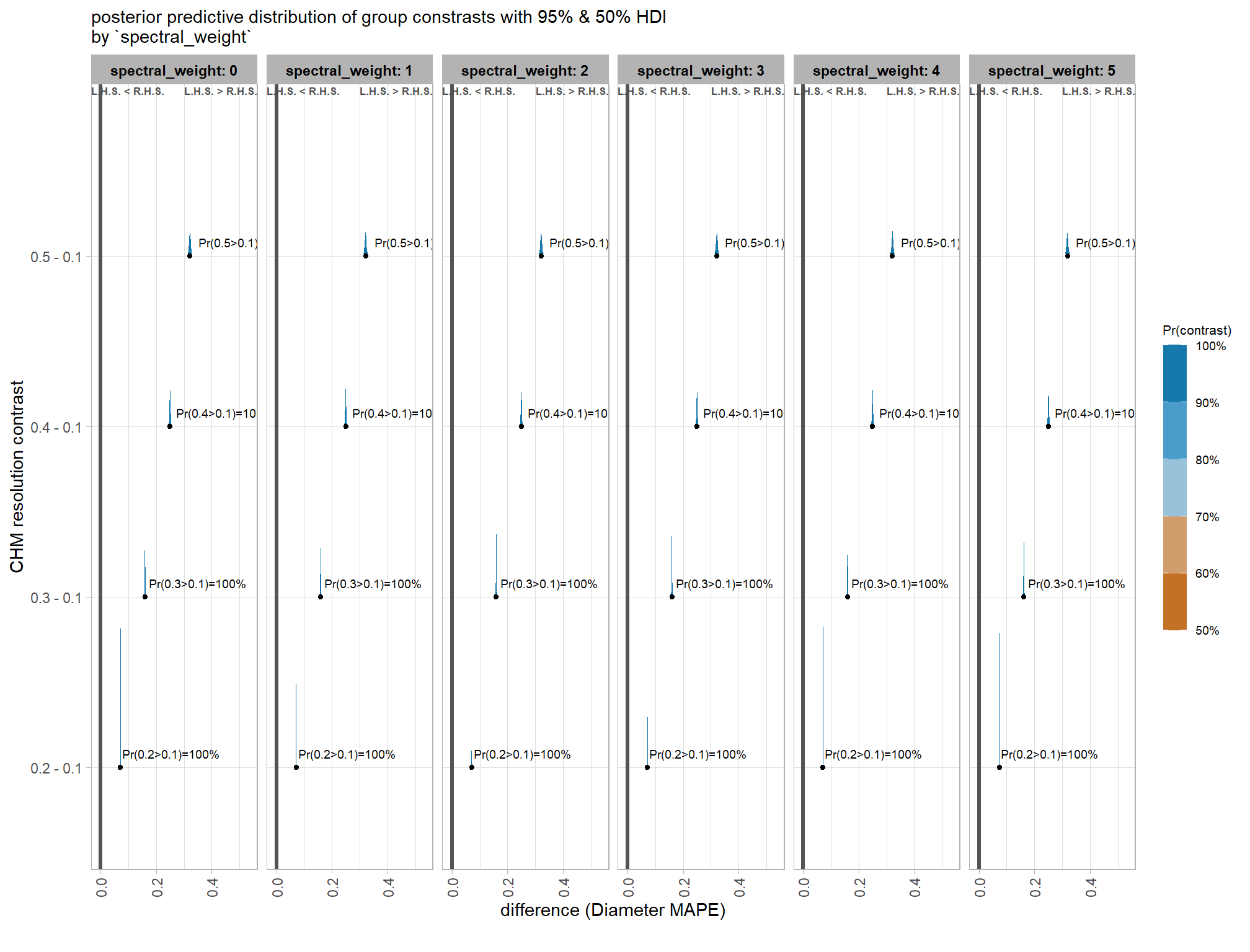

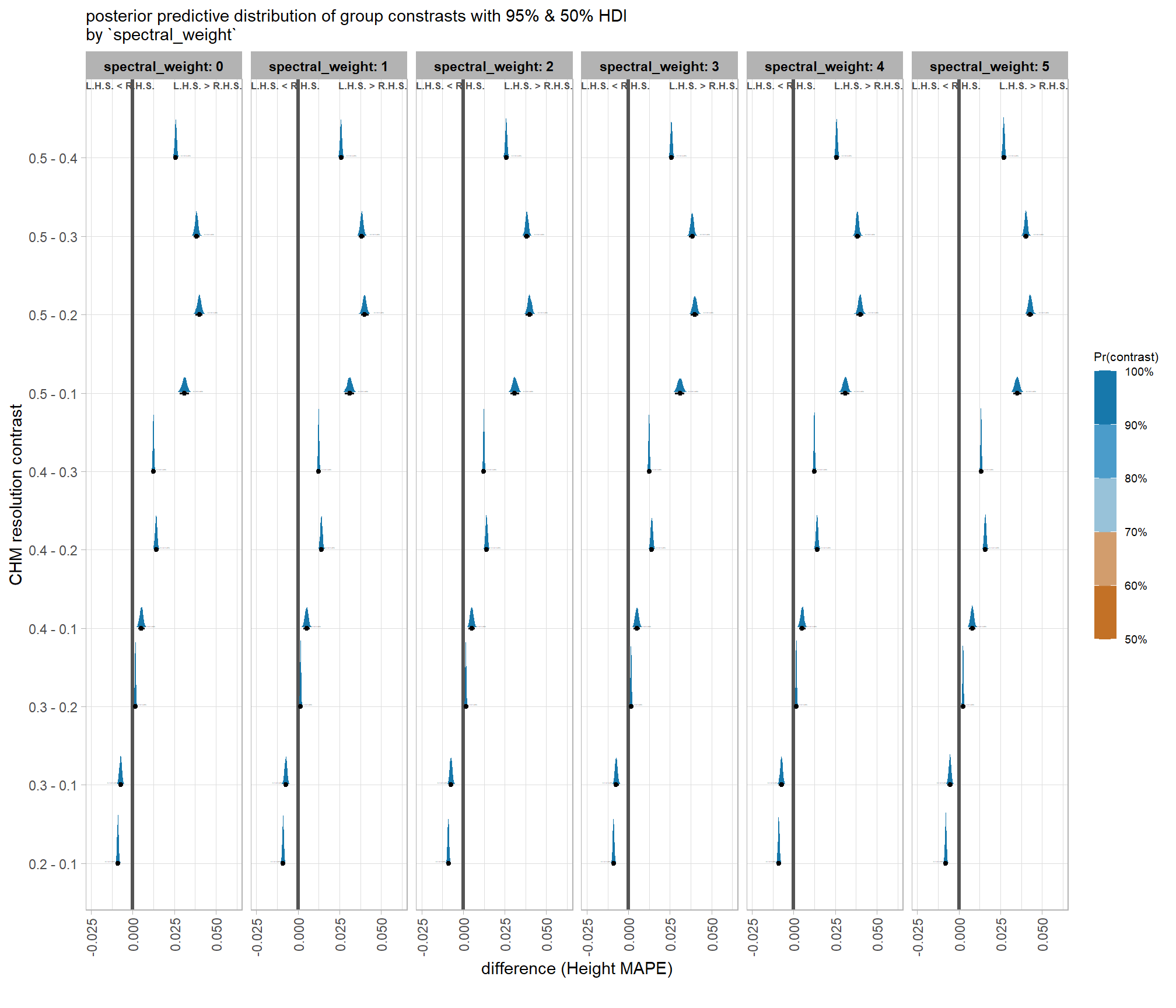

now we’ll probabilistically test the hypothesis that coarser resolution CHM data results in lower detection accuracy and quantify by how much. we’ll look at the influence of CHM resolution based on the inclusion of spectral data (or exclusion) and it’s weighting determined by the spectral_weight parameter

contrast_temp <-

draws_temp %>%

dplyr::filter(

round(chm_res_m,2) == round(chm_res_m,1) # let's just look at every 0.1 m (10 cm)

) %>%

dplyr::group_by(spectral_weight) %>%

tidybayes::compare_levels(

value

, by = chm_res_m

, comparison =

# "control"

# tidybayes::emmeans_comparison("revpairwise")

"pairwise"

) %>%

# dplyr::glimpse()

dplyr::rename(contrast = chm_res_m) %>%

# group the data before calculating contrast variables %>%

dplyr::group_by(spectral_weight, contrast) %>%

make_contrast_vars() %>%

# relabel the label for the facets

dplyr::mutate(spectral_weight = forcats::fct_relabel(spectral_weight,~paste0("spectral_weight: ", .x, recycle0 = T)))

# huh?

# contrast_temp %>% dplyr::glimpse()

# plot it

plt_contrast(

contrast_temp

# , caption_text = form_temp

, y_axis_title = "CHM resolution contrast"

, x_axis_title = "difference (F-score)"

, facet = "spectral_weight"

, label_size = NA

, x_expand = c(0,0.1)

, annotate_which = "left"

) +

labs(

subtitle = "posterior predictive distribution of group constrasts with 95% & 50% HDI\nby `spectral_weight`"

)

let’s table it

contrast_temp %>%

dplyr::distinct(

spectral_weight, contrast

, median_hdi_est, median_hdi_lower, median_hdi_upper

, pr_lt_zero # , pr_gt_zero

) %>%

dplyr::arrange(spectral_weight, contrast) %>%

# format pcts

dplyr::mutate(dplyr::across(

tidyselect::starts_with("median_hdi_")

, ~ scales::percent(.x, accuracy = 0.1)

)) %>%

kableExtra::kbl(

digits = 2

, caption = "brms::brm model: 95% HDI of the posterior predictive distribution of group constrasts"

, col.names = c(

"spectral_weight", "CHM res. contrast"

, "difference (F-score)"

, "HDI low", "HDI high"

, "Pr(diff<0)"

)

, escape = F

) %>%

kableExtra::kable_styling() %>%

kableExtra::collapse_rows(columns = 1, valign = "top") %>%

kableExtra::scroll_box(height = "8in")| spectral_weight | CHM res. contrast | difference (F-score) | HDI low | HDI high | Pr(diff<0) |

|---|---|---|---|---|---|

| spectral_weight: 0 | 0.2 - 0.1 | -4.9% | -5.1% | -4.7% | 100% |

| 0.3 - 0.1 | -10.6% | -11.2% | -10.0% | 100% | |

| 0.3 - 0.2 | -5.7% | -6.0% | -5.4% | 100% | |

| 0.4 - 0.1 | -17.0% | -17.9% | -16.0% | 100% | |

| 0.4 - 0.2 | -12.1% | -12.8% | -11.3% | 100% | |

| 0.4 - 0.3 | -6.4% | -6.8% | -6.0% | 100% | |

| 0.5 - 0.1 | -23.9% | -25.3% | -22.4% | 100% | |

| 0.5 - 0.2 | -19.0% | -20.2% | -17.8% | 100% | |

| 0.5 - 0.3 | -13.3% | -14.1% | -12.4% | 100% | |

| 0.5 - 0.4 | -6.9% | -7.3% | -6.4% | 100% | |

| spectral_weight: 1 | 0.2 - 0.1 | -4.9% | -5.1% | -4.6% | 100% |

| 0.3 - 0.1 | -10.6% | -11.1% | -10.0% | 100% | |

| 0.3 - 0.2 | -5.7% | -6.0% | -5.3% | 100% | |

| 0.4 - 0.1 | -17.0% | -17.8% | -15.9% | 100% | |

| 0.4 - 0.2 | -12.1% | -12.8% | -11.3% | 100% | |

| 0.4 - 0.3 | -6.4% | -6.8% | -6.0% | 100% | |

| 0.5 - 0.1 | -23.9% | -25.2% | -22.4% | 100% | |

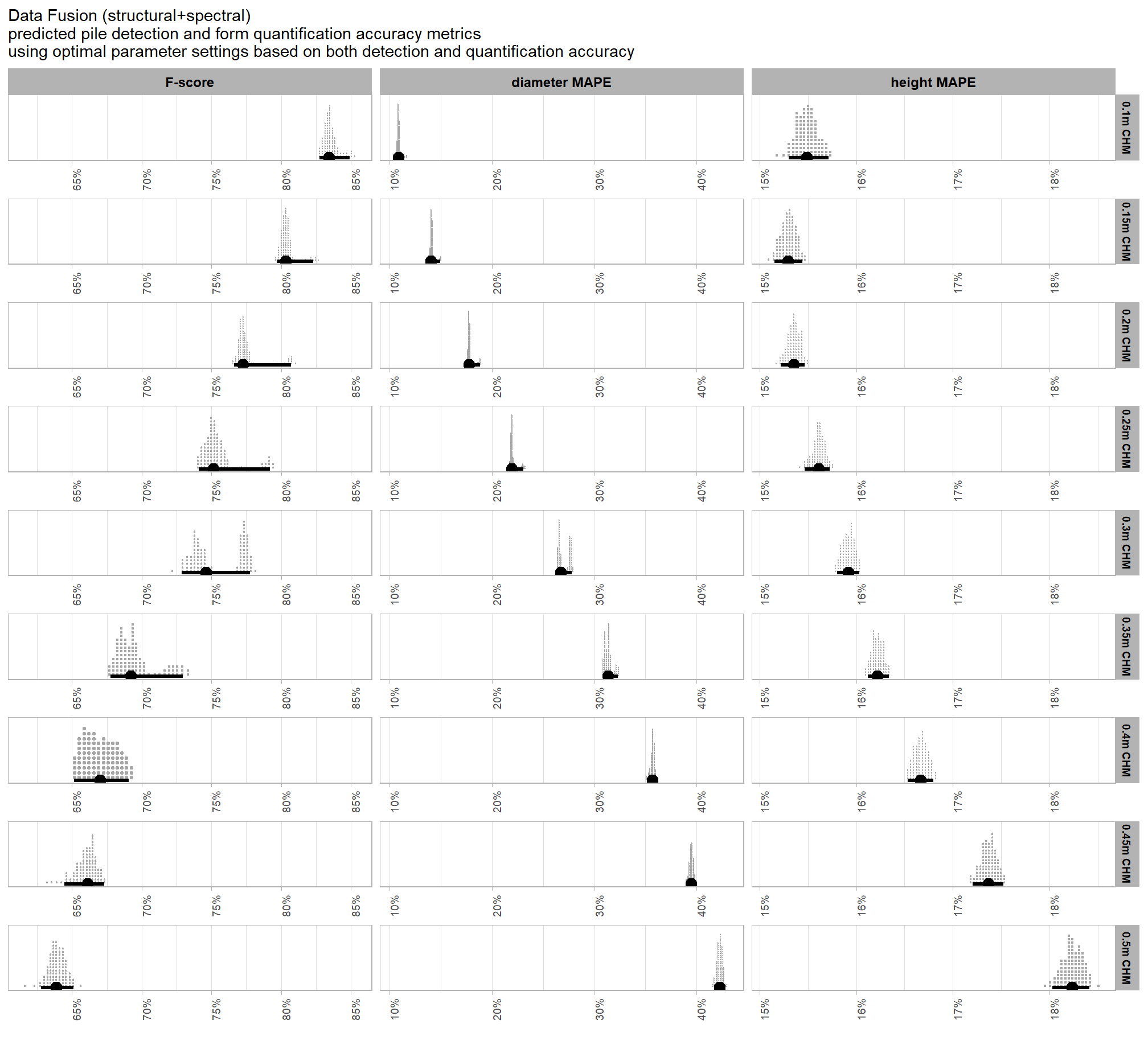

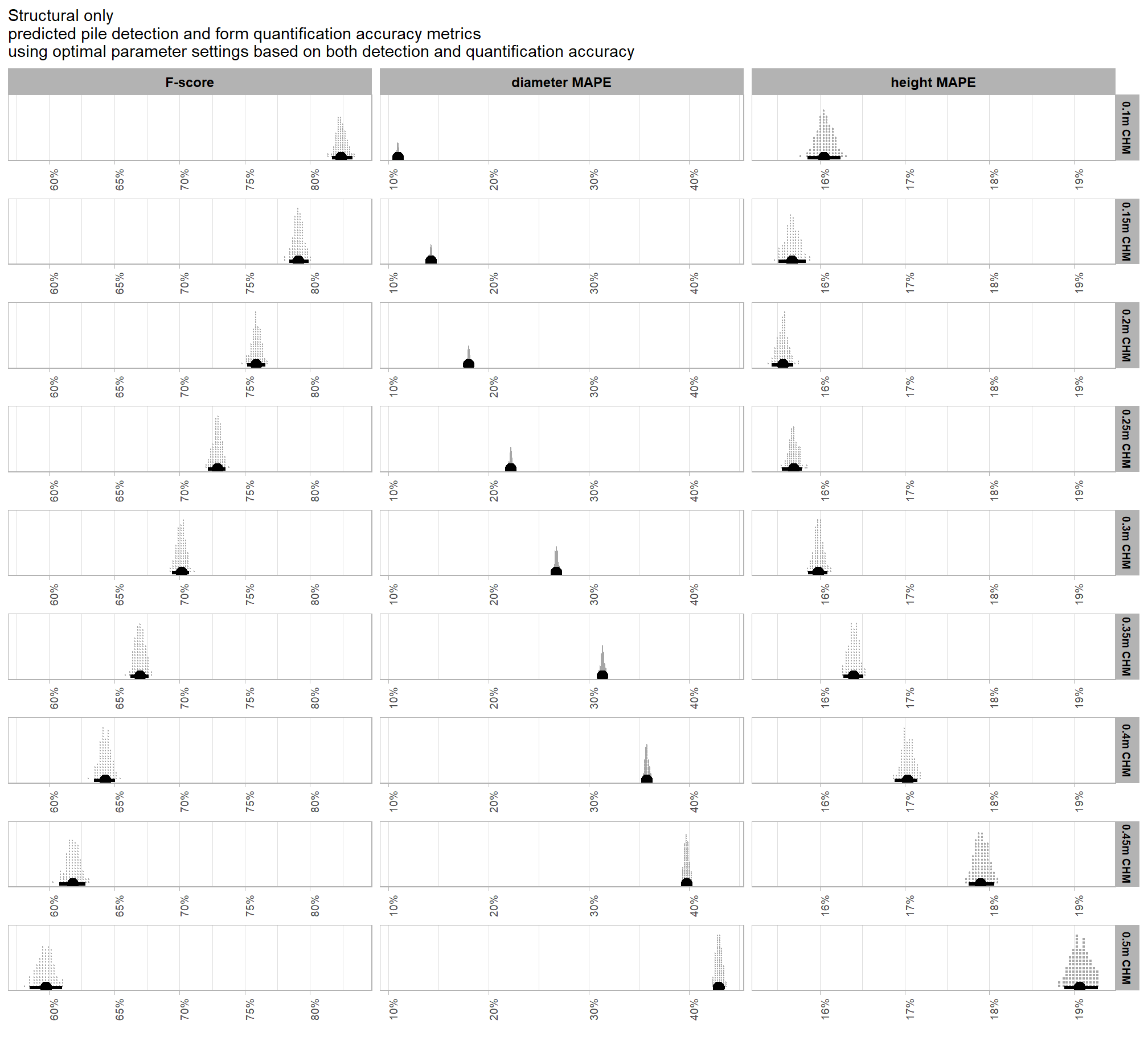

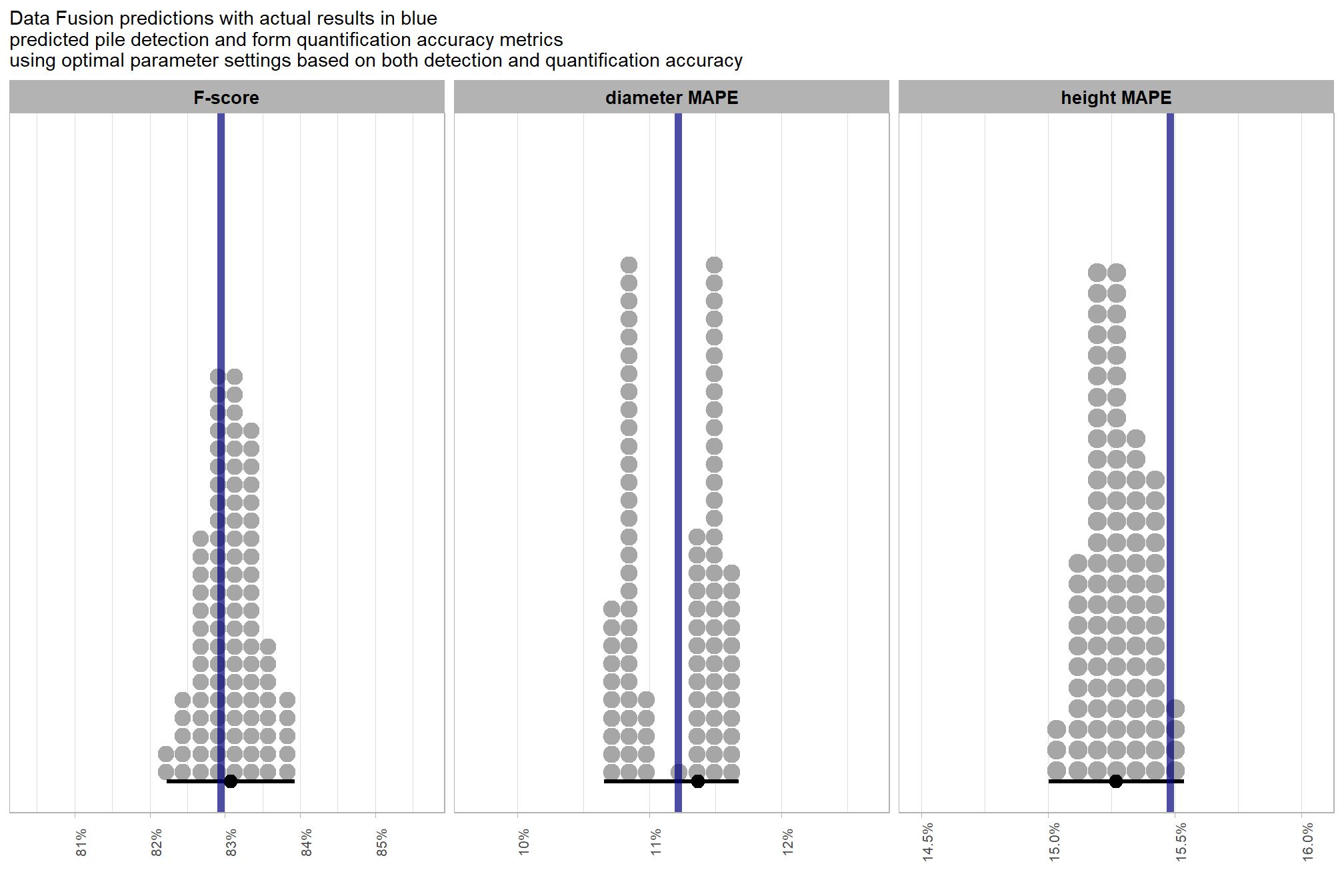

| 0.5 - 0.2 | -19.0% | -20.2% | -17.8% | 100% | |