Section 6 Statistical Analysis: Validation

In this section, we’ll evaluate the influence of the processing parameters on UAS-derived tree detection and monitoring.

The UAS and Field validation data was built and described in this section.

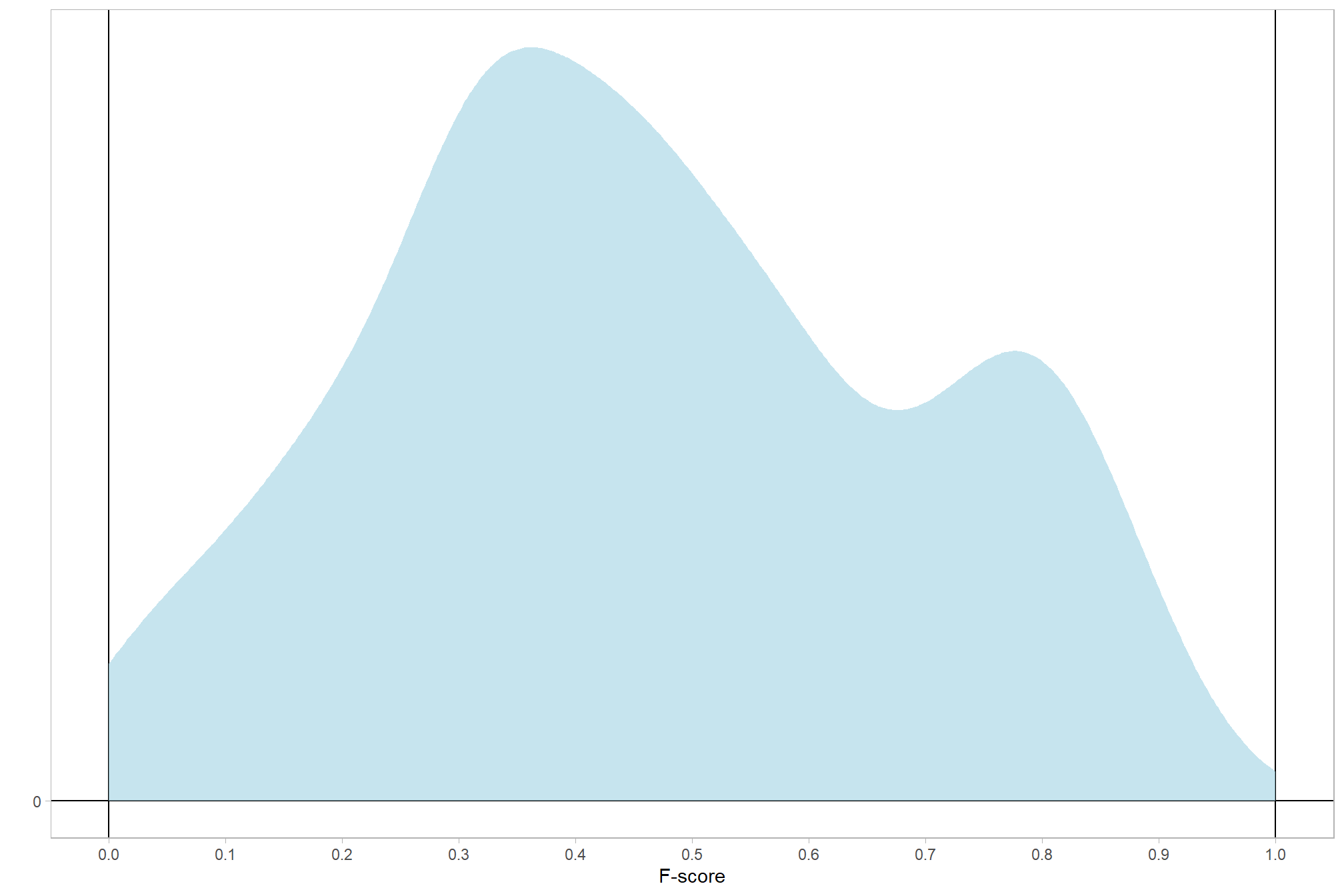

The objective of this study is to determine the influence of different structure from motion (SfM) software (e.g. Agisoft Metashap, OpenDroneMap, Pix4D) and processing parameters on F-score which is a measure of overall tree detection performance.

All of the predictor variables of interest in this study are categorical (a.k.a. factor or nominal) while the predicted variables are metric and include F-score (ranges from 0-1) and error (e.g. MAPE, RMSE). This type of statistical analysis is described in the second edition of Kruschke’s Doing Bayesian data analysis (2015) and here we will build a Bayesian approach based on Kruschke (2015). This analysis was greatly enhanced by A. Solomon Kurz’s ebook supplement to Kruschke (2015).

For a more in-depth review of the traditional treatment of this sort of data structure called multifactor analysis of variance (ANOVA) compared to the Bayesian hierarchical generalization of the traditional ANOVA model used here see this previous section.

6.1 Setup

first we’re going to define a function to ingest a formula as text and separate it into multiple rows based on the number of characters for plotting

# function to pull the formula for labeling below

get_frmla_text = function(frmla_chr, split_chrs = 100){

cumsum_group = function(x, threshold) {

cumsum = 0

group = 1

result = numeric()

for (i in 1:length(x)) {

cumsum = cumsum + x[i]

if (cumsum > threshold) {

group = group + 1

cumsum = x[i]

}

result = c(result, group)

}

return (result)

}

r = stringr::str_sub(

frmla_chr

, # get the two column matrix of start end

frmla_chr %>%

stringr::str_locate_all("\\+") %>%

.[[1]] %>%

dplyr::as_tibble() %>%

dplyr::select(start) %>%

dplyr::mutate(

len = dplyr::coalesce(start-dplyr::lag(start),0)

, ld = dplyr::coalesce(dplyr::lead(start)-1, stringr::str_length(frmla_chr))

, cum = cumsum_group(len, split_chrs)

, start = ifelse(dplyr::row_number()==1,1,start)

) %>%

dplyr::group_by(cum) %>%

dplyr::summarise(start = min(start), end = max(ld)) %>%

dplyr::ungroup() %>%

dplyr::select(-cum) %>%

as.matrix()

) %>%

stringr::str_squish() %>%

paste0(collapse = "\n")

return(r)

}6.2 Summary Statistics

What is this data?

# load data if needed

if(ls()[ls() %in% "ptcld_validation_data"] %>% length()==0){

ptcld_validation_data = readr::read_csv("../data/ptcld_full_analysis_data.csv") %>%

dplyr::mutate(

depth_maps_generation_quality = factor(

depth_maps_generation_quality %>%

tolower() %>%

stringr::str_replace_all("ultrahigh", "ultra high")

, ordered = TRUE

, levels = c(

"lowest"

, "low"

, "medium"

, "high"

, "ultra high"

)

) %>% forcats::fct_rev()

, depth_maps_generation_filtering_mode = factor(

depth_maps_generation_filtering_mode %>% tolower()

, ordered = TRUE

, levels = c(

"disabled"

, "mild"

, "moderate"

, "aggressive"

)

) %>% forcats::fct_rev()

)

}

# replace 0 F-score with very small positive to run models

ptcld_validation_data = ptcld_validation_data %>%

dplyr::mutate(dplyr::across(

.cols = tidyselect::ends_with("f_score")

, .fns = ~ ifelse(.x==0,1e-4,.x)

))

# what is this data?

ptcld_validation_data %>% dplyr::glimpse()## Rows: 260

## Columns: 114

## $ tracking_file_full_path <chr> "D:\\SfM_Software_Comparison\\Me…

## $ software <chr> "METASHAPE", "METASHAPE", "METAS…

## $ study_site <chr> "KAIBAB_HIGH", "KAIBAB_HIGH", "K…

## $ processing_attribute1 <chr> "HIGH", "HIGH", "HIGH", "HIGH", …

## $ processing_attribute2 <chr> "AGGRESSIVE", "DISABLED", "MILD"…

## $ processing_attribute3 <chr> NA, NA, NA, NA, NA, NA, NA, NA, …

## $ file_name <chr> "HIGH_AGGRESSIVE", "HIGH_DISABLE…

## $ number_of_points <int> 52974294, 72549206, 69858217, 69…

## $ las_area_m2 <dbl> 86661.27, 87175.42, 86404.78, 86…

## $ timer_tile_time_mins <dbl> 0.63600698, 2.49318542, 0.841338…

## $ timer_class_dtm_norm_chm_time_mins <dbl> 3.6559556, 5.3289152, 5.1638296,…

## $ timer_treels_time_mins <dbl> 8.9065272, 19.2119663, 12.339179…

## $ timer_itd_time_mins <dbl> 0.02202115, 0.02449968, 0.037984…

## $ timer_competition_time_mins <dbl> 0.10590740, 0.17865245, 0.121248…

## $ timer_estdbh_time_mins <dbl> 0.02290262, 0.02382533, 0.021991…

## $ timer_silv_time_mins <dbl> 0.012565533, 0.015940932, 0.0150…

## $ timer_total_time_mins <dbl> 13.361886, 27.276985, 18.540606,…

## $ sttng_input_las_dir <chr> "D:/Metashape_Testing_2024", "D:…

## $ sttng_use_parallel_processing <lgl> FALSE, FALSE, FALSE, FALSE, FALS…

## $ sttng_desired_chm_res <dbl> 0.25, 0.25, 0.25, 0.25, 0.25, 0.…

## $ sttng_max_height_threshold_m <int> 60, 60, 60, 60, 60, 60, 60, 60, …

## $ sttng_minimum_tree_height_m <int> 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

## $ sttng_dbh_max_size_m <int> 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

## $ sttng_local_dbh_model <chr> "rf", "rf", "rf", "rf", "rf", "r…

## $ sttng_user_supplied_epsg <lgl> NA, NA, NA, NA, NA, NA, NA, NA, …

## $ sttng_accuracy_level <int> 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

## $ sttng_pts_m2_for_triangulation <int> 20, 20, 20, 20, 20, 20, 20, 20, …

## $ sttng_normalization_with <chr> "triangulation", "triangulation"…

## $ sttng_competition_buffer_m <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5,…

## $ depth_maps_generation_quality <ord> high, high, high, high, low, low…

## $ depth_maps_generation_filtering_mode <ord> aggressive, disabled, mild, mode…

## $ total_sfm_time_min <dbl> 54.800000, 60.316667, 55.933333,…

## $ number_of_points_sfm <dbl> 52974294, 72549206, 69858217, 69…

## $ total_sfm_time_norm <dbl> 0.1117823680, 0.1237564664, 0.11…

## $ processed_data_dir <chr> "D:/SfM_Software_Comparison/Meta…

## $ processing_id <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1…

## $ true_positive_n_trees <dbl> 229, 261, 260, 234, 220, 175, 23…

## $ commission_n_trees <dbl> 173, 222, 213, 193, 148, 223, 16…

## $ omission_n_trees <dbl> 772, 740, 741, 767, 781, 826, 77…

## $ f_score <dbl> 0.3264433, 0.3517520, 0.3527815,…

## $ tree_height_m_me <dbl> 0.270336679, 0.283568790, 0.3122…

## $ tree_height_m_mpe <dbl> 0.002357383, 0.013286785, 0.0142…

## $ tree_height_m_mae <dbl> 0.7873610, 0.6886235, 0.6914983,…

## $ tree_height_m_mape <dbl> 0.06624939, 0.06903969, 0.060550…

## $ tree_height_m_smape <dbl> 0.06776453, 0.06838733, 0.060410…

## $ tree_height_m_mse <dbl> 0.9842433, 0.8507862, 0.8259923,…

## $ tree_height_m_rmse <dbl> 0.9920904, 0.9223807, 0.9088412,…

## $ dbh_cm_me <dbl> 2.0551269, 1.2718827, 1.7505679,…

## $ dbh_cm_mpe <dbl> 0.077076168, 0.056392083, 0.0755…

## $ dbh_cm_mae <dbl> 5.091373, 4.375871, 4.674437, 4.…

## $ dbh_cm_mape <dbl> 0.2076874, 0.2185989, 0.2110014,…

## $ dbh_cm_smape <dbl> 0.1966263, 0.2081000, 0.1986588,…

## $ dbh_cm_mse <dbl> 44.38957, 35.29251, 38.33622, 38…

## $ dbh_cm_rmse <dbl> 6.662549, 5.940750, 6.191625, 6.…

## $ uas_basal_area_m2 <dbl> 55.75278, 60.26123, 58.67391, 57…

## $ field_basal_area_m2 <dbl> 69.04409, 69.04409, 69.04409, 69…

## $ uas_basal_area_m2_per_ha <dbl> 31.97437, 34.55997, 33.64964, 33…

## $ field_basal_area_m2_per_ha <dbl> 39.59697, 39.59697, 39.59697, 39…

## $ basal_area_m2_error <dbl> -13.291309, -8.782866, -10.37018…

## $ basal_area_m2_per_ha_error <dbl> -7.622601, -5.036997, -5.947326,…

## $ basal_area_pct_error <dbl> -0.19250466, -0.12720663, -0.150…

## $ basal_area_abs_pct_error <dbl> 0.19250466, 0.12720663, 0.150196…

## $ overstory_commission_n_trees <dbl> 141, 178, 178, 160, 95, 173, 120…

## $ understory_commission_n_trees <dbl> 32, 44, 35, 33, 53, 50, 43, 39, …

## $ overstory_omission_n_trees <dbl> 558, 560, 545, 556, 554, 598, 54…

## $ understory_omission_n_trees <dbl> 214, 180, 196, 211, 227, 228, 22…

## $ overstory_true_positive_n_trees <dbl> 185, 183, 198, 187, 189, 145, 19…

## $ understory_true_positive_n_trees <dbl> 44, 78, 62, 47, 31, 30, 33, 40, …

## $ overstory_f_score <dbl> 0.3461179, 0.3315217, 0.3538874,…

## $ understory_f_score <dbl> 0.2634731, 0.4105263, 0.3492958,…

## $ overstory_tree_height_m_me <dbl> 0.41693172, 0.44114110, 0.442167…

## $ understory_tree_height_m_me <dbl> -0.34602886, -0.08612009, -0.102…

## $ overstory_tree_height_m_mpe <dbl> 0.020790675, 0.024558478, 0.0241…

## $ understory_tree_height_m_mpe <dbl> -0.075146232, -0.013158341, -0.0…

## $ overstory_tree_height_m_mae <dbl> 0.8201433, 0.7820879, 0.7770369,…

## $ understory_tree_height_m_mae <dbl> 0.6495266, 0.4693415, 0.4183269,…

## $ overstory_tree_height_m_mape <dbl> 0.04662933, 0.04863237, 0.048708…

## $ understory_tree_height_m_mape <dbl> 0.14874284, 0.11691842, 0.098369…

## $ overstory_tree_height_m_smape <dbl> 0.04589942, 0.04776615, 0.047912…

## $ understory_tree_height_m_smape <dbl> 0.15969736, 0.11676780, 0.100322…

## $ overstory_tree_height_m_mse <dbl> 1.0623763, 1.0055835, 0.9739823,…

## $ understory_tree_height_m_mse <dbl> 0.6557300, 0.4876080, 0.3533791,…

## $ overstory_tree_height_m_rmse <dbl> 1.0307164, 1.0027878, 0.9869054,…

## $ understory_tree_height_m_rmse <dbl> 0.8097715, 0.6982893, 0.5944570,…

## $ overstory_dbh_cm_me <dbl> 2.88225065, 2.37098111, 2.694675…

## $ understory_dbh_cm_me <dbl> -1.4225525, -1.3067712, -1.26448…

## $ overstory_dbh_cm_mpe <dbl> 0.11199444, 0.09928650, 0.110477…

## $ understory_dbh_cm_mpe <dbl> -0.06973931, -0.04424482, -0.035…

## $ overstory_dbh_cm_mae <dbl> 5.753010, 5.298094, 5.472454, 5.…

## $ understory_dbh_cm_mae <dbl> 2.309487, 2.212192, 2.125931, 2.…

## $ overstory_dbh_cm_mape <dbl> 0.1862021, 0.1848729, 0.1898205,…

## $ understory_dbh_cm_mape <dbl> 0.2980235, 0.2977254, 0.2786439,…

## $ overstory_dbh_cm_smape <dbl> 0.1686851, 0.1699578, 0.1735200,…

## $ understory_dbh_cm_smape <dbl> 0.3141064, 0.2975874, 0.2789409,…

## $ overstory_dbh_cm_mse <dbl> 52.78250, 46.57941, 47.70797, 46…

## $ understory_dbh_cm_mse <dbl> 9.101103, 8.811704, 8.407077, 9.…

## $ overstory_dbh_cm_rmse <dbl> 7.265156, 6.824911, 6.907095, 6.…

## $ understory_dbh_cm_rmse <dbl> 3.016803, 2.968451, 2.899496, 3.…

## $ overstory_uas_basal_area_m2 <dbl> 55.49096, 59.79139, 58.30184, 57…

## $ understory_uas_basal_area_m2 <dbl> 0.2618258, 0.4698415, 0.3720740,…

## $ overstory_field_basal_area_m2 <dbl> 67.50326, 67.50326, 67.50326, 67…

## $ understory_field_basal_area_m2 <dbl> 1.540832, 1.540832, 1.540832, 1.…

## $ overstory_uas_basal_area_m2_per_ha <dbl> 31.82421, 34.29052, 33.43626, 32…

## $ understory_uas_basal_area_m2_per_ha <dbl> 0.15015781, 0.26945534, 0.213385…

## $ overstory_field_basal_area_m2_per_ha <dbl> 38.7133, 38.7133, 38.7133, 38.71…

## $ understory_field_basal_area_m2_per_ha <dbl> 0.883671, 0.883671, 0.883671, 0.…

## $ overstory_basal_area_m2_per_ha_error <dbl> -6.889088, -4.422782, -5.277041,…

## $ understory_basal_area_m2_per_ha_error <dbl> -0.7335132, -0.6142157, -0.67028…

## $ overstory_basal_area_pct_error <dbl> -0.17795146, -0.11424450, -0.136…

## $ understory_basal_area_pct_error <dbl> -0.8300750, -0.6950728, -0.75852…

## $ overstory_basal_area_abs_pct_error <dbl> 0.17795146, 0.11424450, 0.136310…

## $ understory_basal_area_abs_pct_error <dbl> 0.8300750, 0.6950728, 0.7585239,…

## $ validation_file_full_path <chr> "D:/SfM_Software_Comparison/Meta…

## $ overstory_ht_m <dbl> 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7,…# a row is unique by...

identical(

nrow(ptcld_validation_data)

, ptcld_validation_data %>%

dplyr::distinct(

study_site, software

, depth_maps_generation_quality

, depth_maps_generation_filtering_mode

, processing_attribute3 # need to align all by software so this will go away or be filled

) %>%

nrow()

)## [1] TRUESummary by metrics of interest

sum_stats_dta = function(my_var){

sum_fns = list(

n = ~sum(ifelse(is.na(.x), 0, 1))

, min = ~min(.x, na.rm = TRUE)

, max = ~max(.x, na.rm = TRUE)

, mean = ~mean(.x, na.rm = TRUE)

, median = ~median(.x, na.rm = TRUE)

, sd = ~sd(.x, na.rm = TRUE)

)

# plot

(

ggplot(

data = ptcld_validation_data %>%

dplyr::group_by(.data[[my_var]]) %>%

dplyr::mutate(m = median(f_score))

, mapping = aes(

y = .data[[my_var]]

, x = f_score, fill = m)

) +

geom_violin(color = NA) +

geom_boxplot(width = 0.1, outlier.shape = NA, fill = NA, color = "black") +

geom_rug() +

scale_fill_viridis_c(option = "mako", begin = 0.3, end = 0.9, direction = -1) +

labs(

x = "F-score"

, y = stringr::str_replace_all(my_var, pattern = "_", replacement = " ")

, subtitle = stringr::str_replace_all(my_var, pattern = "_", replacement = " ") %>%

stringr::str_to_title()

) +

theme_light() +

theme(legend.position = "none")

)

# # summarize data

# (

# ptcld_validation_data %>%

# dplyr::group_by(dplyr::across(dplyr::all_of(my_var))) %>%

# dplyr::summarise(

# dplyr::across(f_score, sum_fns)

# , .groups = 'drop_last'

# ) %>%

# kableExtra::kbl() %>%

# kableExtra::kable_styling()

# )

}

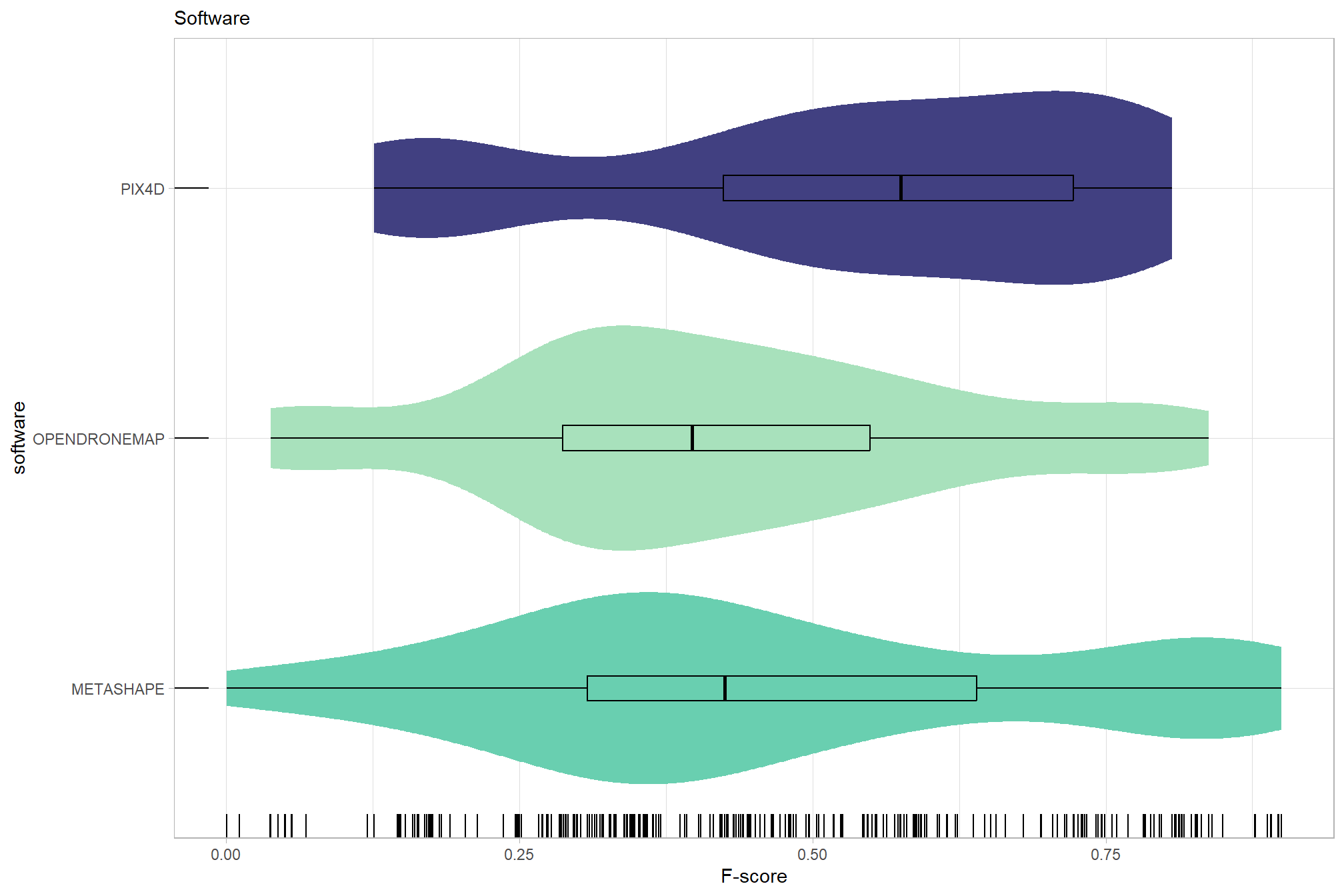

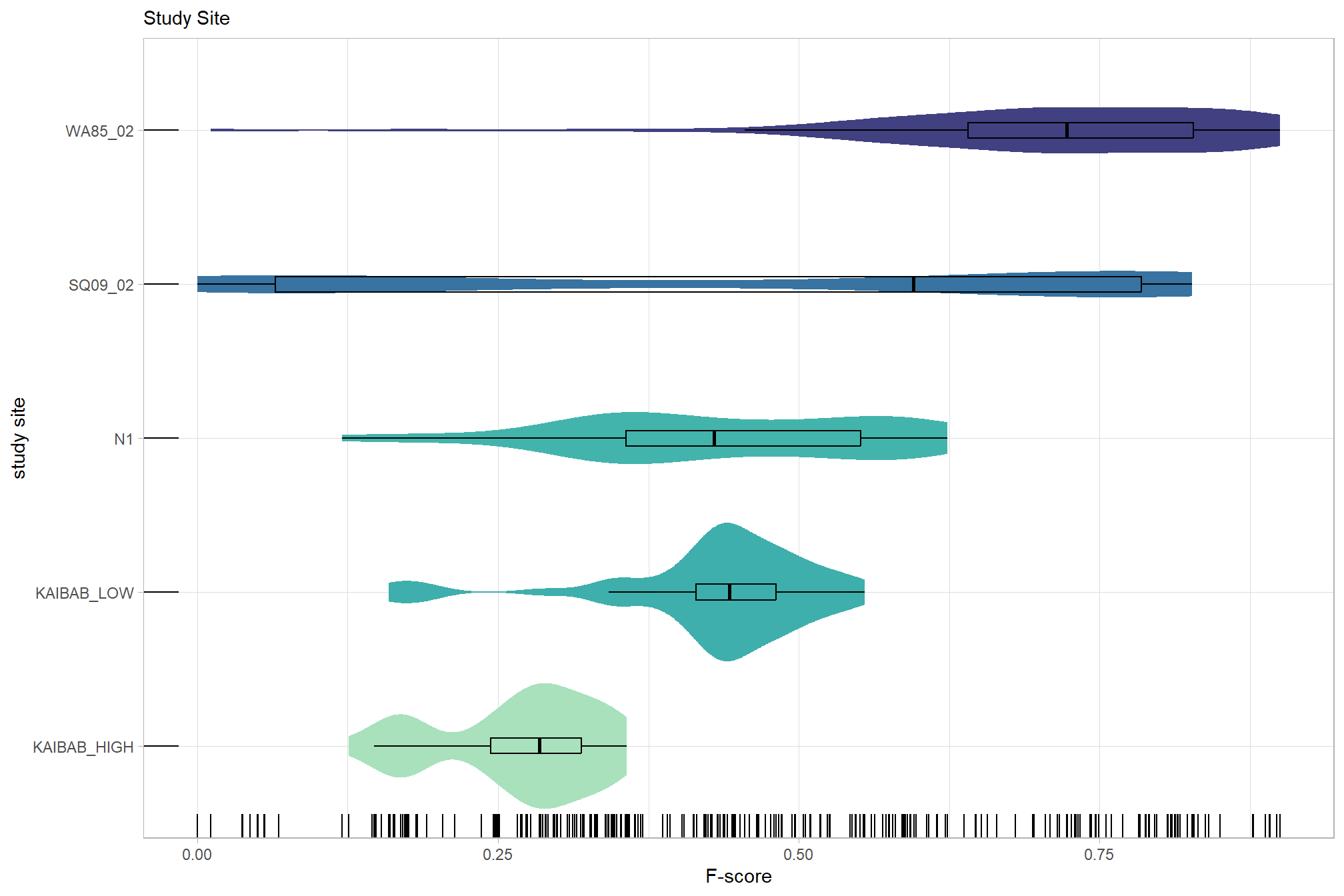

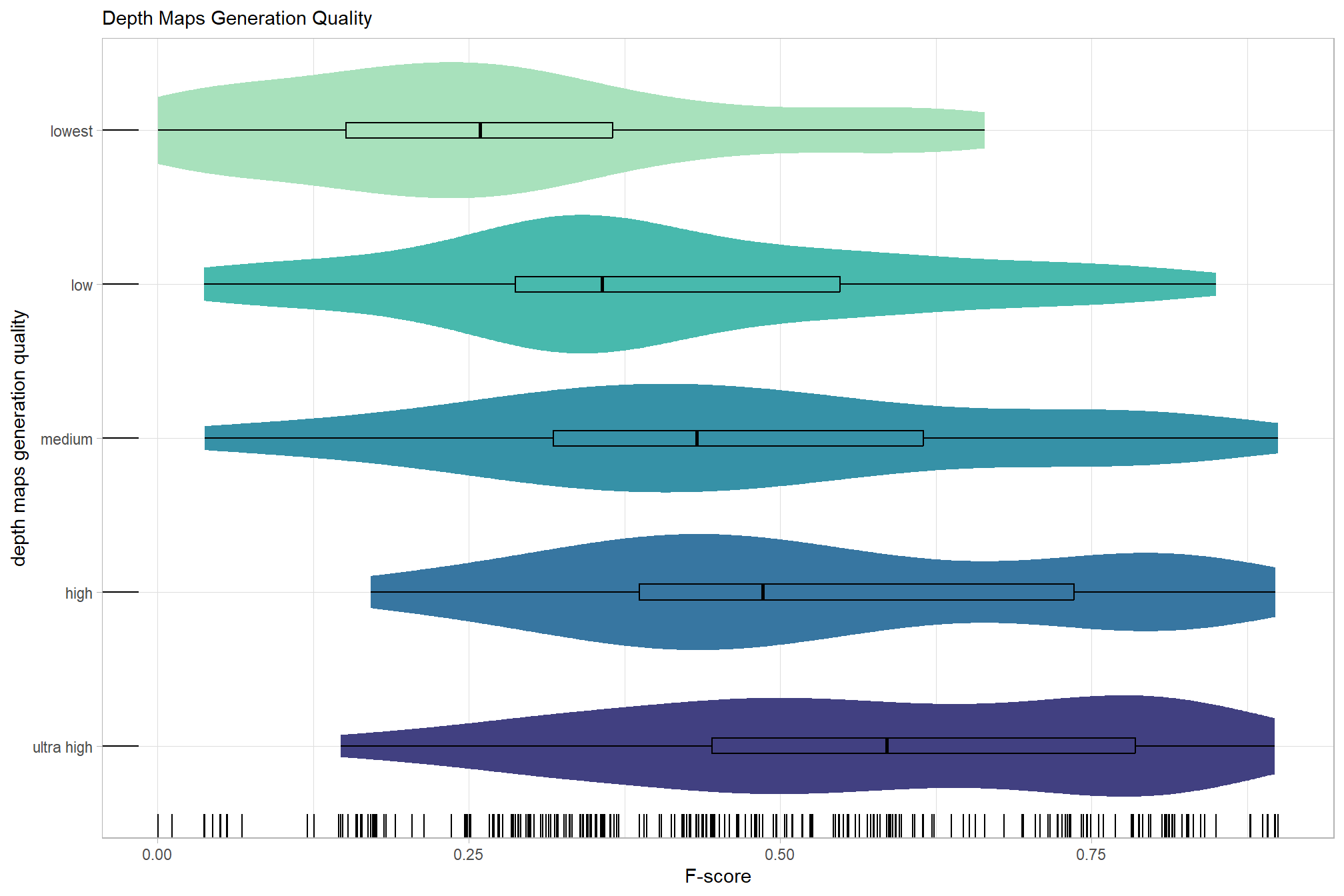

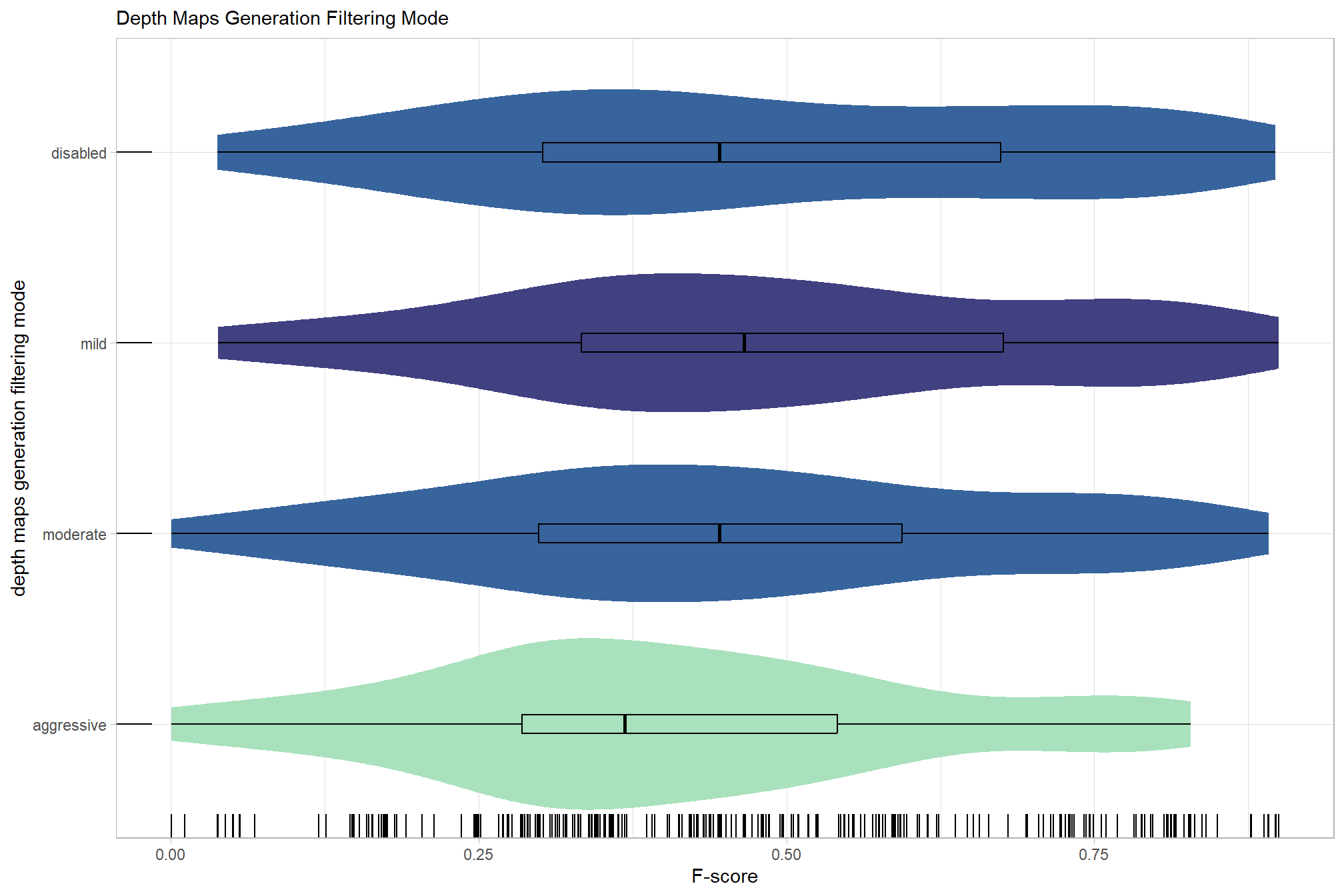

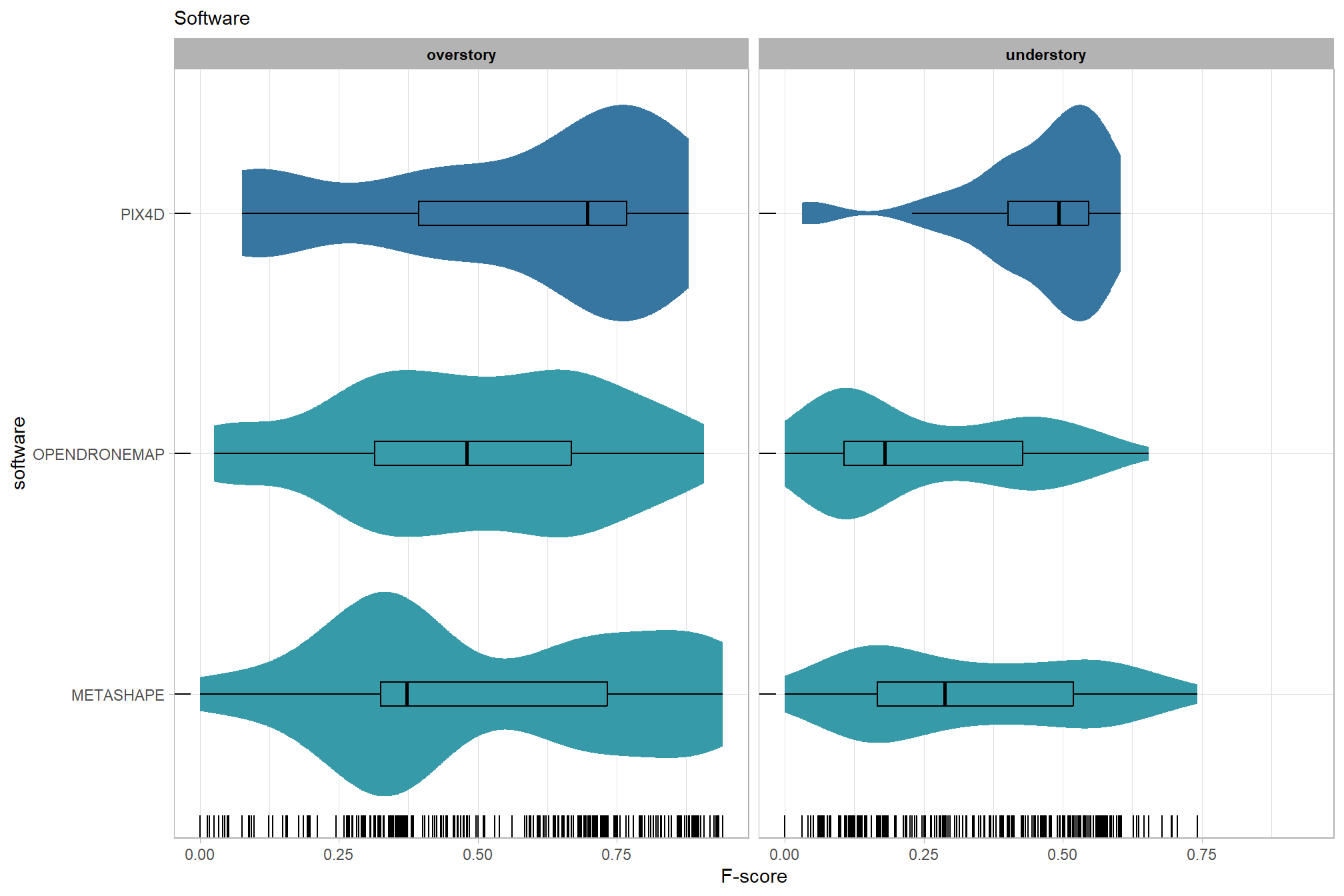

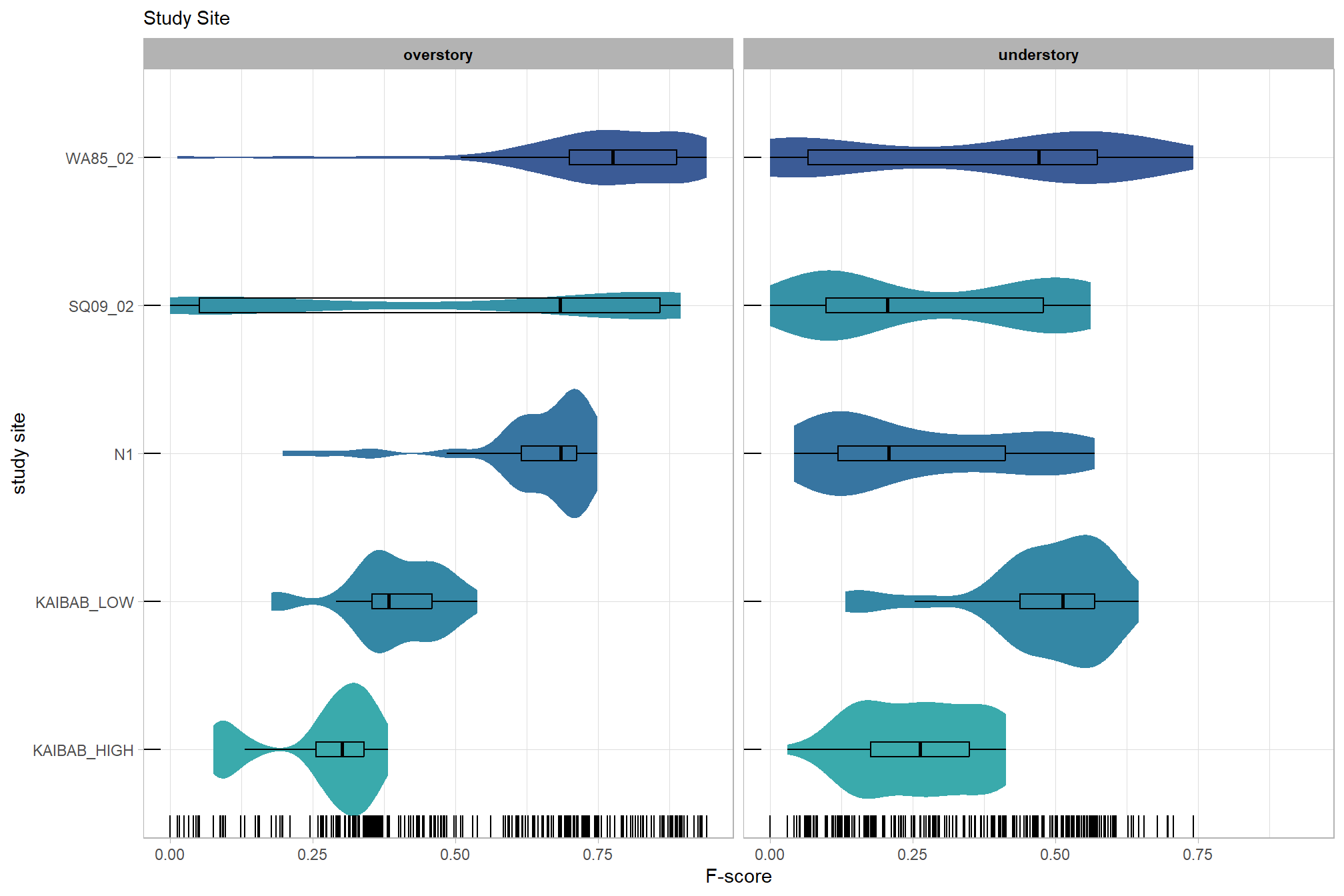

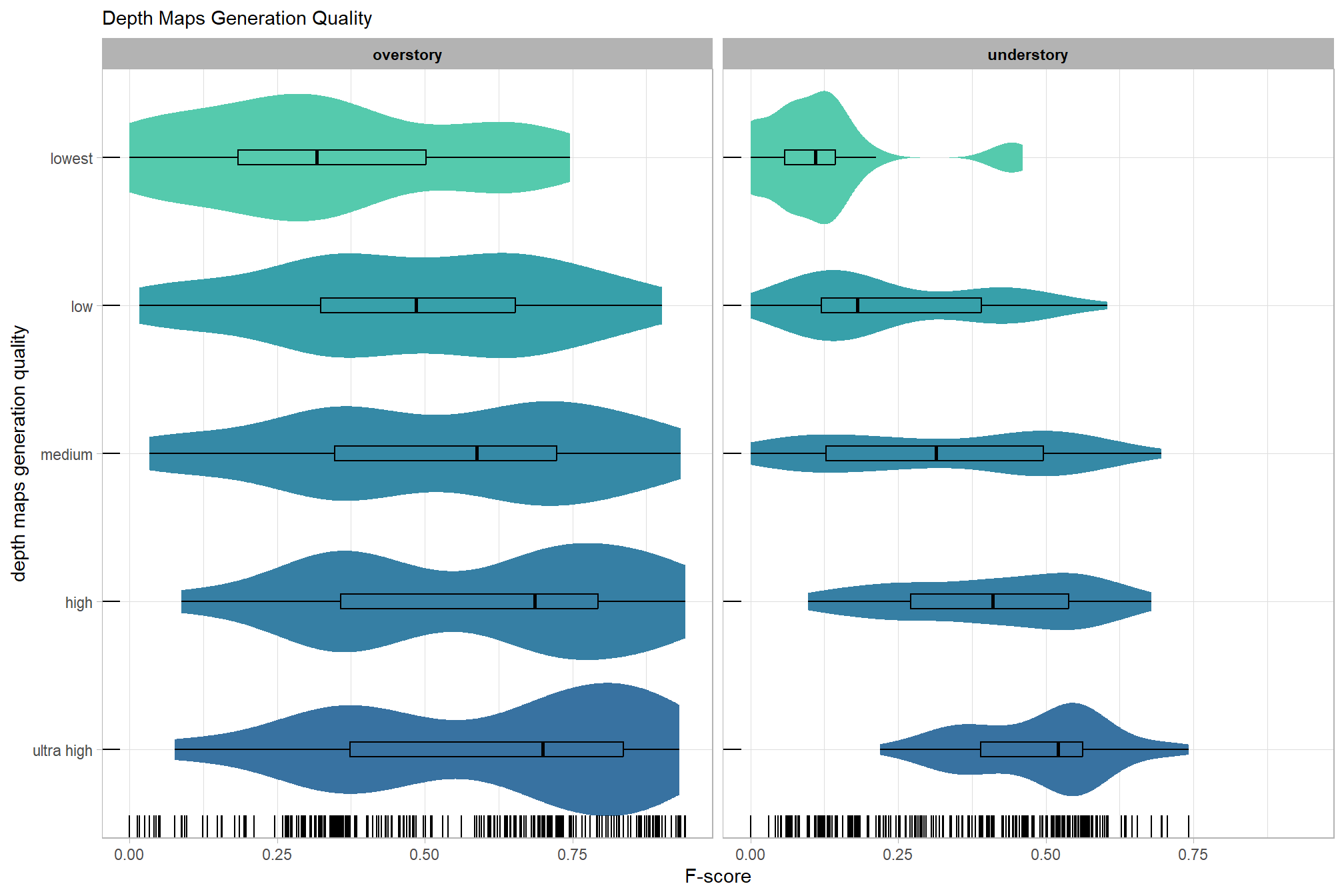

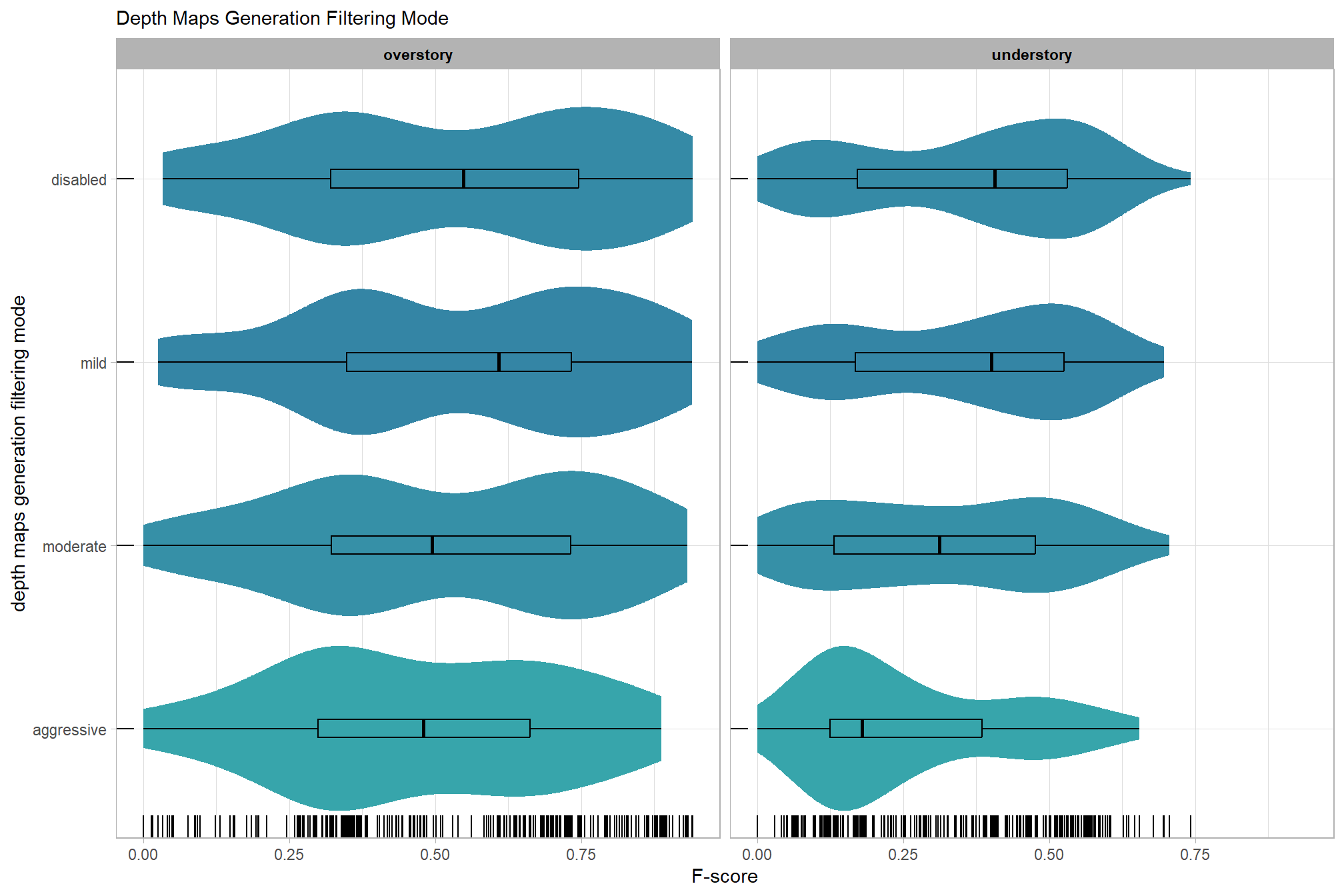

# sum_stats_dta("software")summarize for all variables of interest

c("software", "study_site"

, "depth_maps_generation_quality"

, "depth_maps_generation_filtering_mode"

) %>%

purrr::map(sum_stats_dta)## [[1]]

##

## [[2]]

##

## [[3]]

##

## [[4]]

6.3 One Nominal Predictor

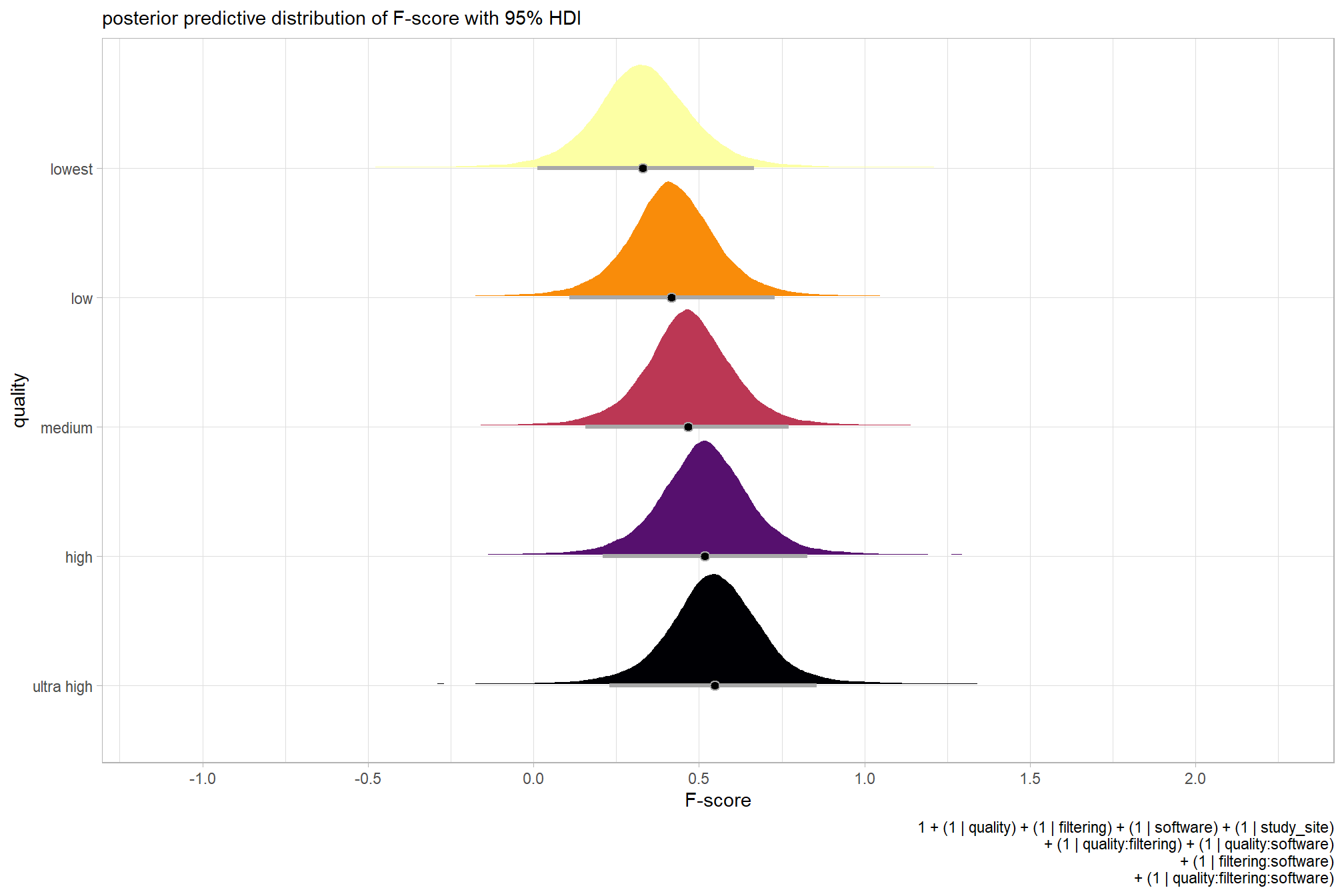

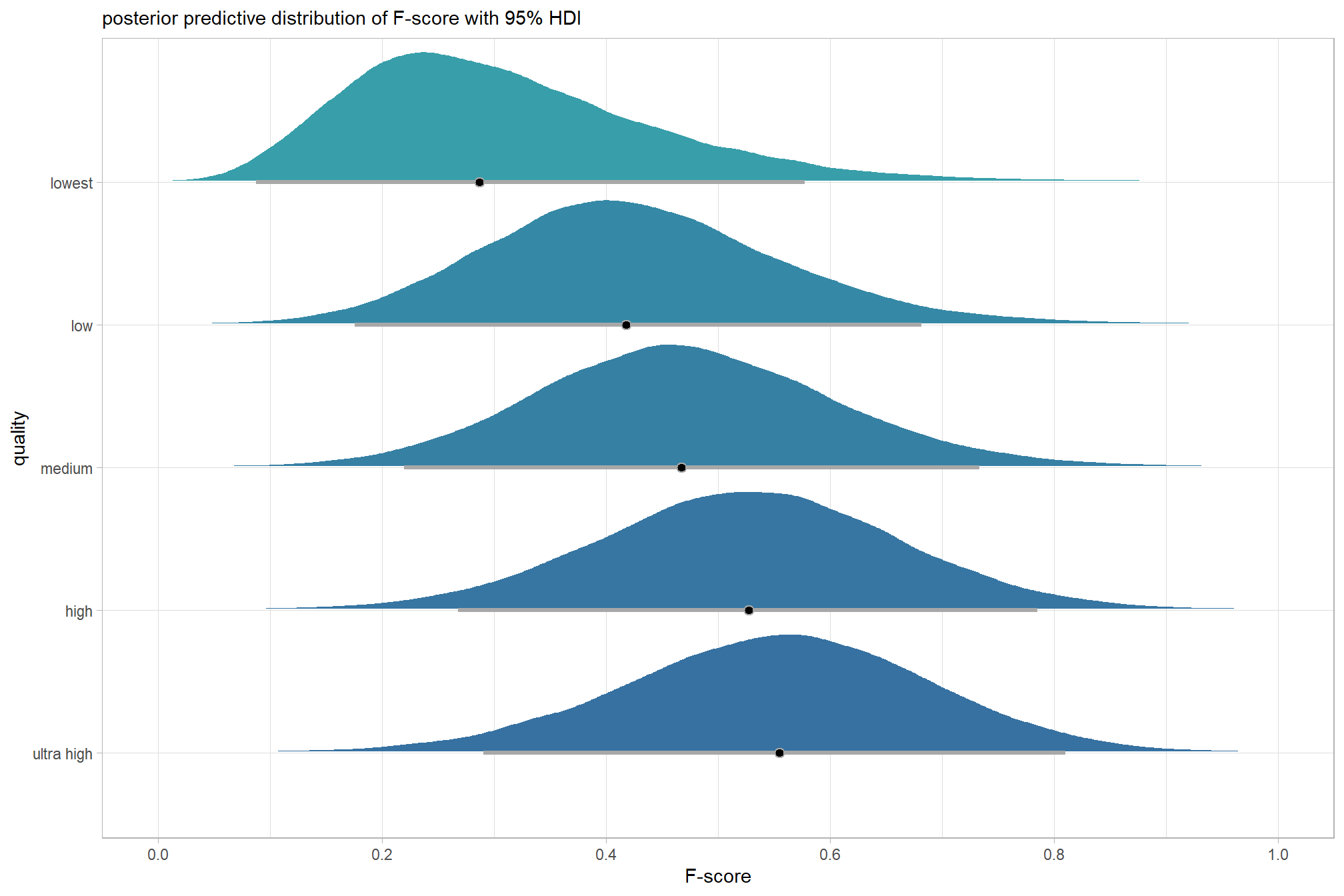

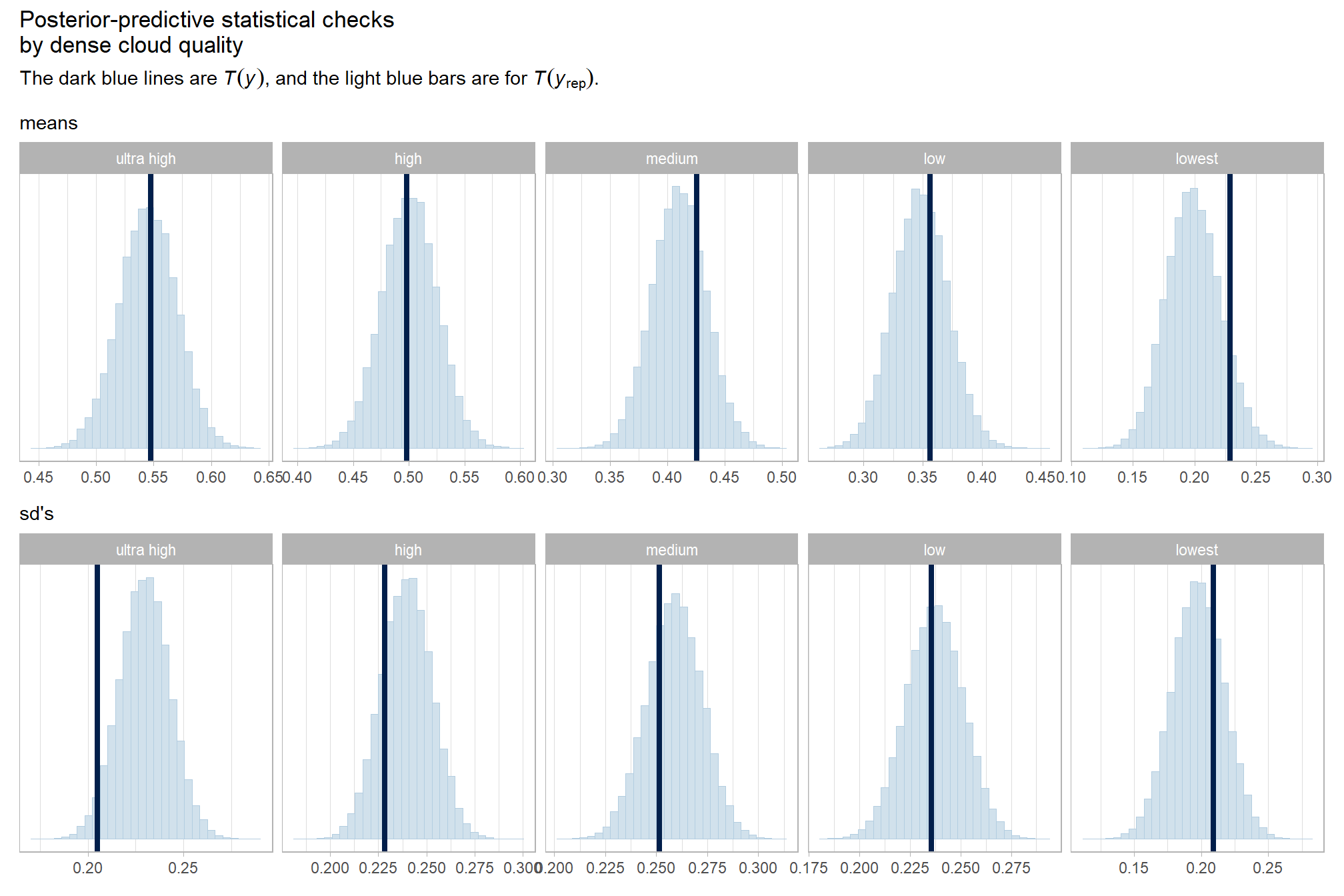

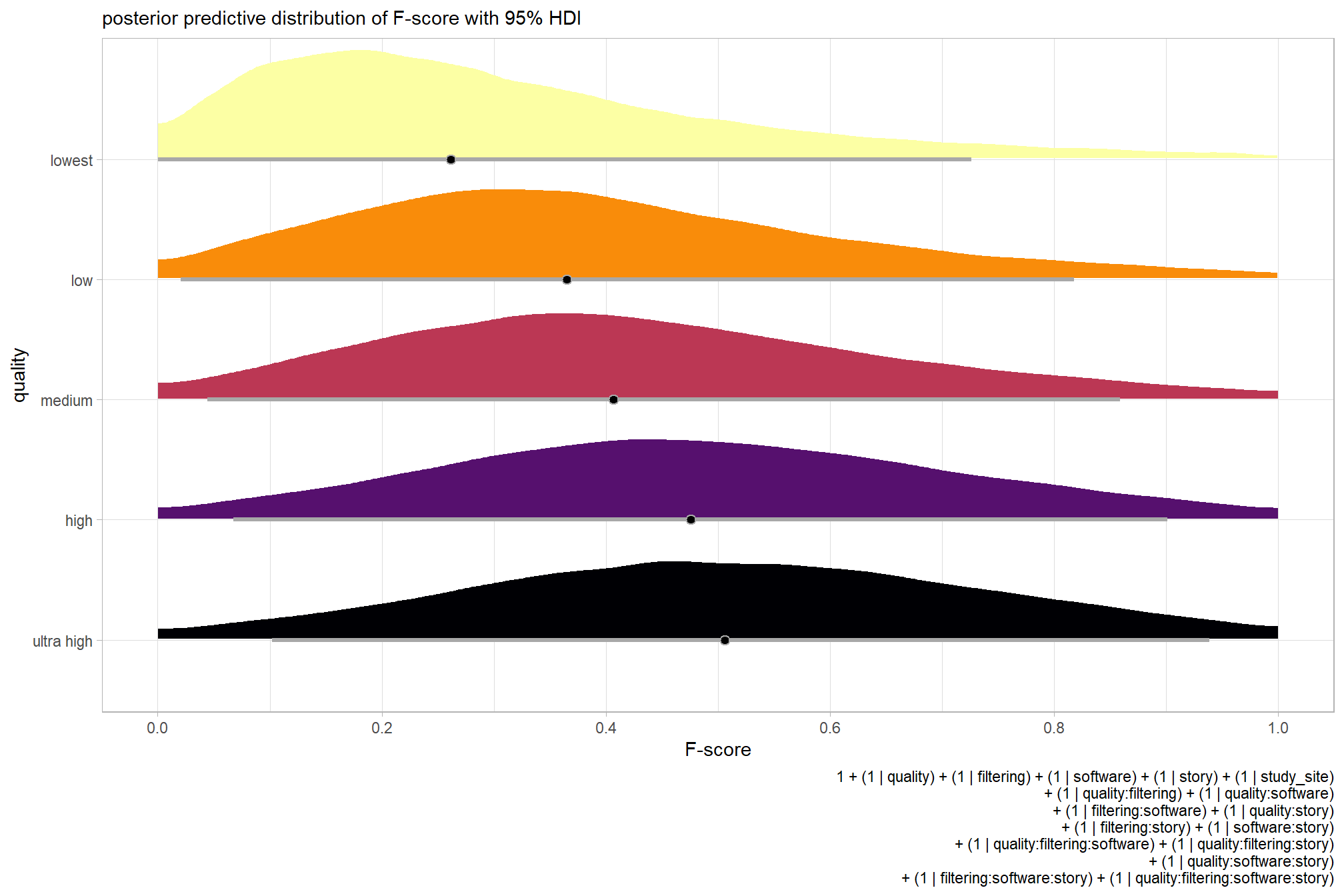

We’ll start by exploring the influence of the depth map generation quality parameter on the SfM-derived tree detection performance based on the F-score.

6.3.1 Summary Statistics

Summary statistics by group:

ptcld_validation_data %>%

dplyr::group_by(depth_maps_generation_quality) %>%

dplyr::summarise(

mean_f_score = mean(f_score, na.rm = T)

# , med_f_score = median(f_score, na.rm = T)

, sd_f_score = sd(f_score, na.rm = T)

, n = dplyr::n()

) %>%

kableExtra::kbl(digits = 2, caption = "summary statistics: F-score by dense cloud quality") %>%

kableExtra::kable_styling()| depth_maps_generation_quality | mean_f_score | sd_f_score | n |

|---|---|---|---|

| ultra high | 0.58 | 0.21 | 55 |

| high | 0.54 | 0.21 | 55 |

| medium | 0.47 | 0.22 | 55 |

| low | 0.40 | 0.20 | 55 |

| lowest | 0.27 | 0.19 | 40 |

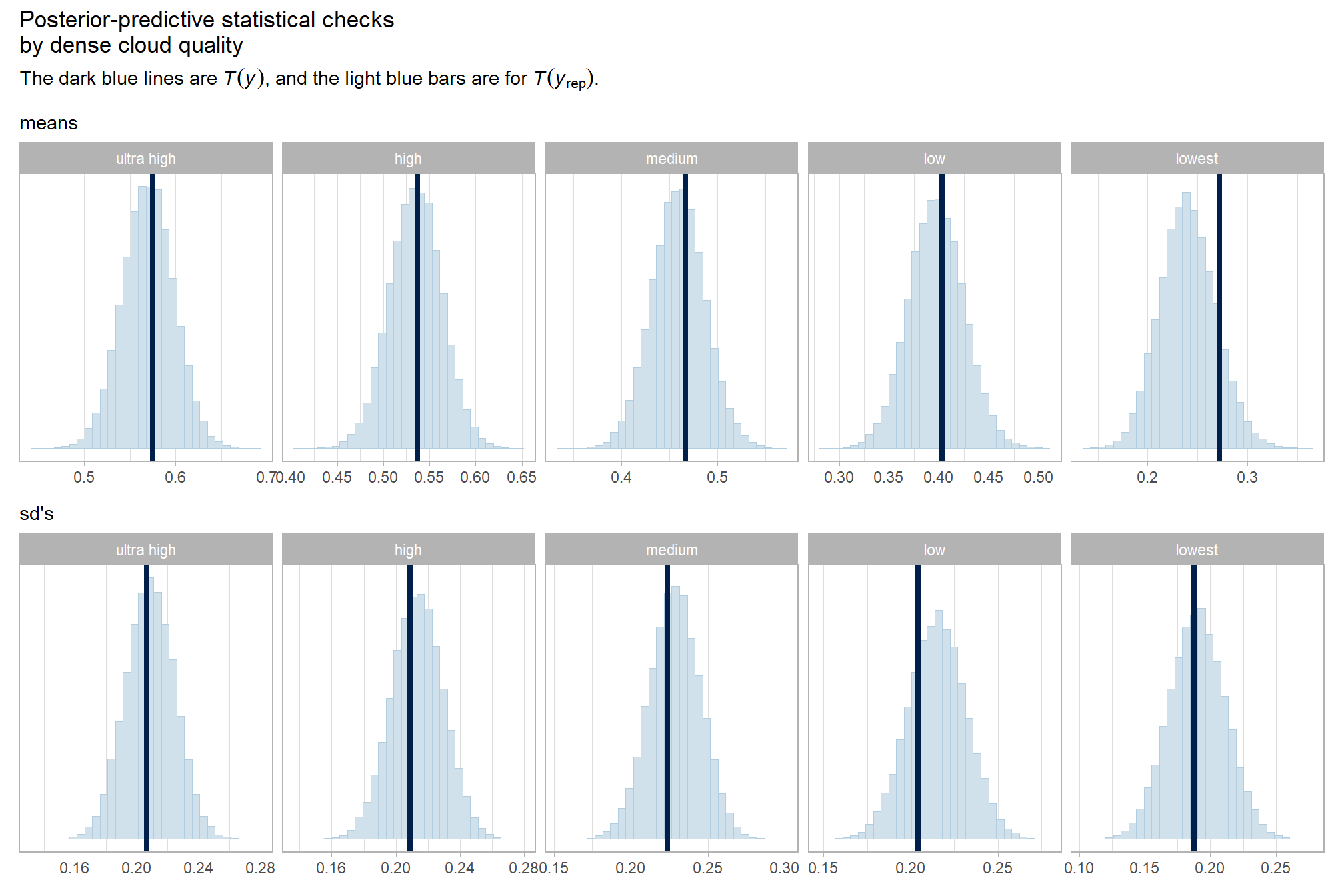

6.3.2 Bayesian

Kruschke (2015) notes:

The terminology, “analysis of variance,” comes from a decomposition of overall data variance into within-group variance and between-group variance (Fisher, 1925). Algebraically, the sum of squared deviations of the scores from their overall mean equals the sum of squared deviations of the scores from their respective group means plus the sum of squared deviations of the group means from the overall mean. In other words, the total variance can be partitioned into within-group variance plus between-group variance. Because one definition of the word “analysis” is separation into constituent parts, the term ANOVA accurately describes the underlying algebra in the traditional methods. That algebraic relation is not used in the hierarchical Bayesian approach presented here. The Bayesian method can estimate component variances, however. Therefore, the Bayesian approach is not ANOVA, but is analogous to ANOVA. (p. 556)

and see section 19 from Kurz’s ebook supplement

The metric predicted variable with one nominal predictor variable model has the form:

\[\begin{align*} y_{i} &\sim \operatorname{Normal} \bigl(\mu_{i}, \sigma_{y} \bigr) \\ \mu_{i} &= \beta_0 + \sum_{j=1}^{J} \beta_{1[j]} x_{1[j]} \bigl(i\bigr) \\ \beta_{0} &\sim \operatorname{Normal}(0,10) \\ \beta_{1[j]} &\sim \operatorname{Normal}(0,\sigma_{\beta_{1}}) \\ \sigma_{\beta_{1}} &\sim {\sf uniform} (0,100) \\ \sigma_{y} &\sim {\sf uniform} (0,100) \\ \end{align*}\]

, where \(j\) is the depth map generation quality setting corresponding to observation \(i\)

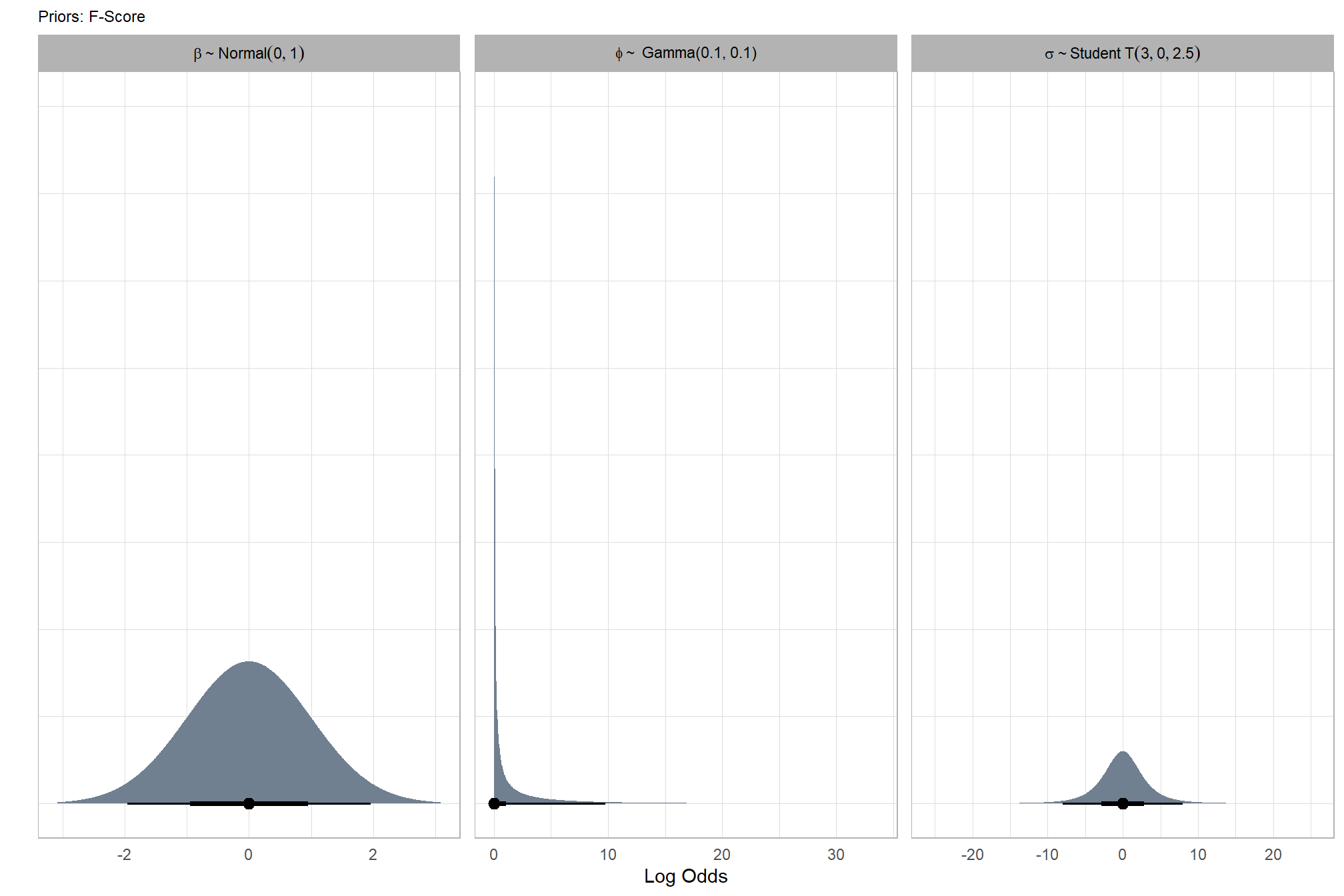

to start, we’ll use the default brms::brm prior settings which may not match those described in the model specification above

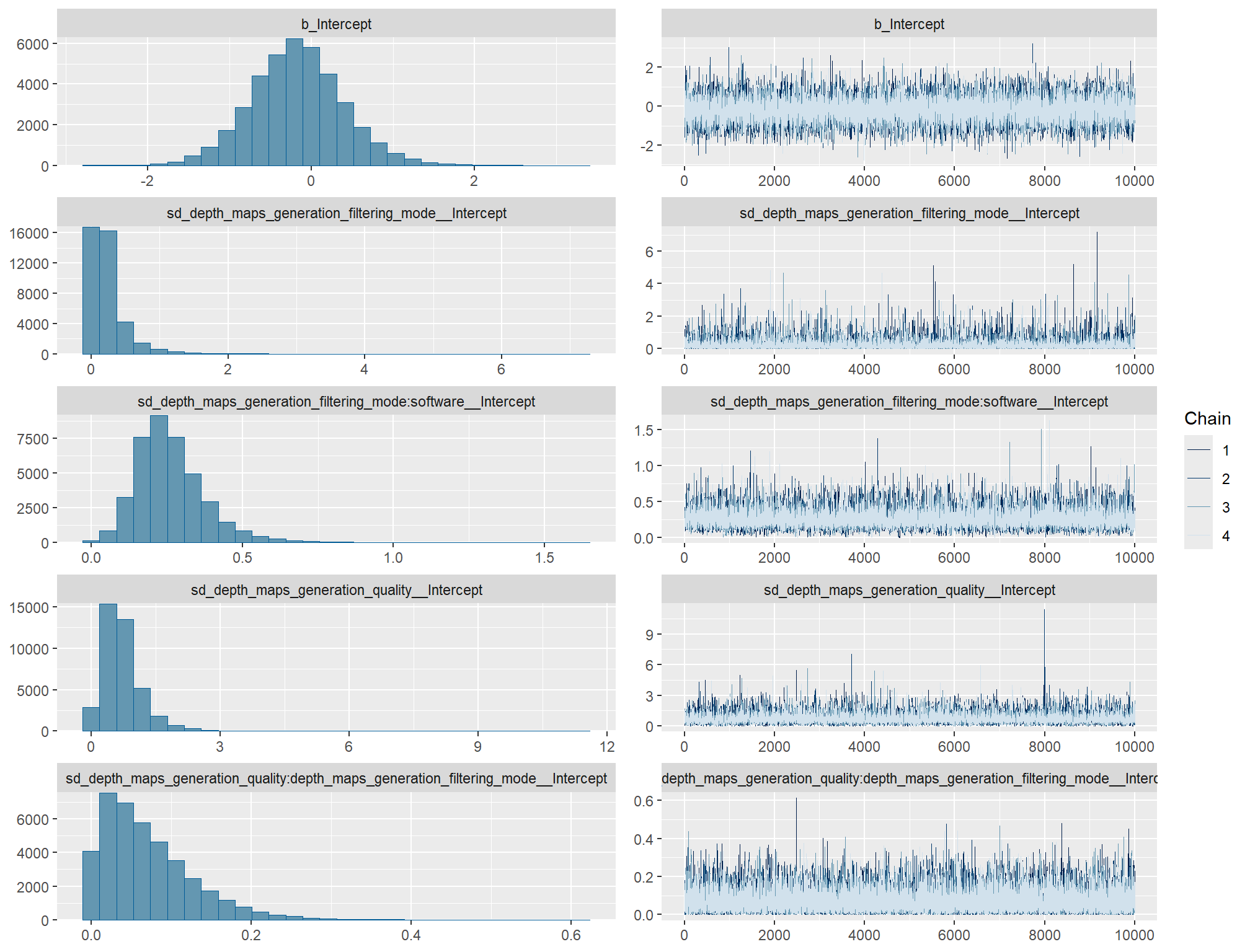

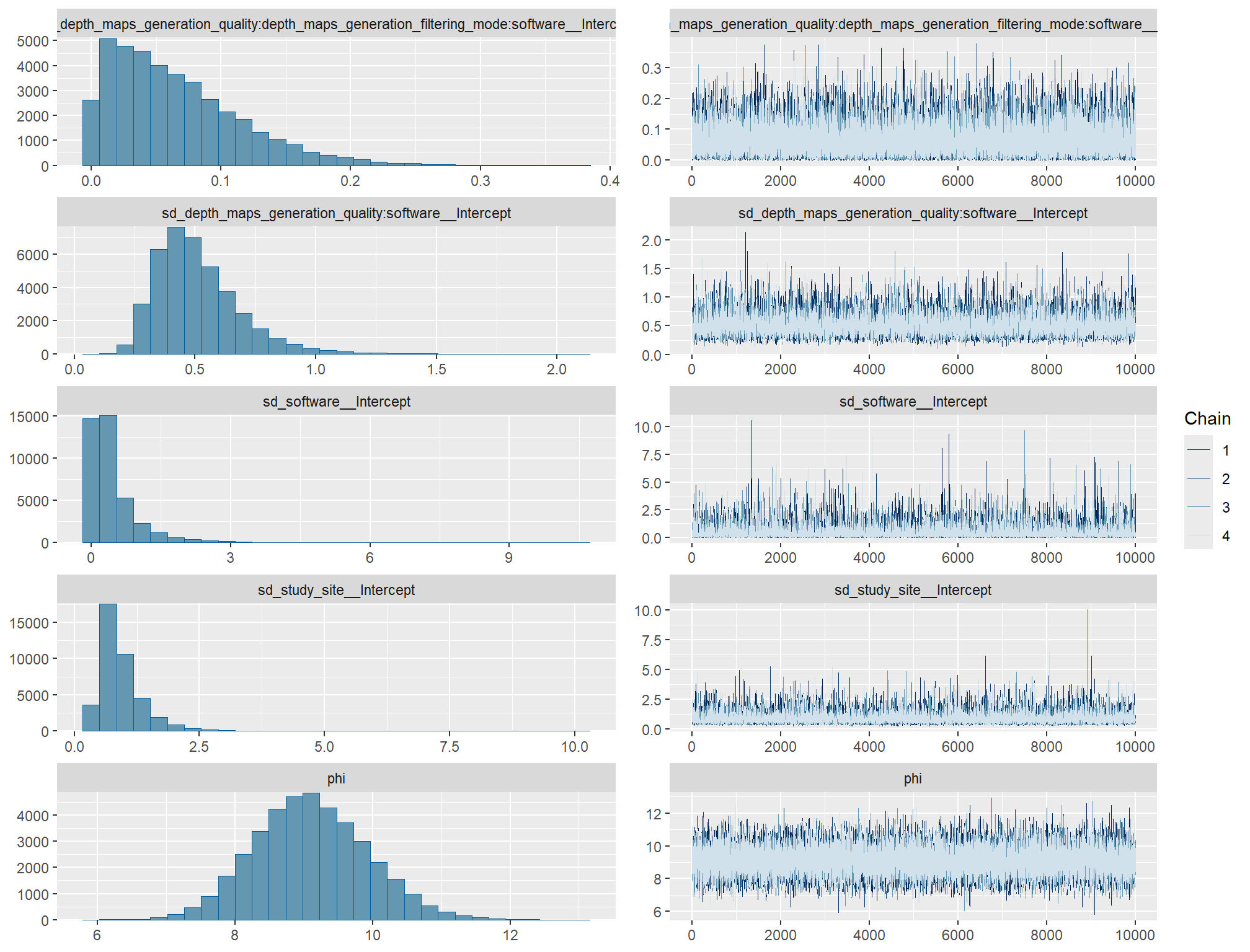

brms_f_mod1 = brms::brm(

formula = f_score ~ 1 + (1 | depth_maps_generation_quality)

, data = ptcld_validation_data

, family = brms::brmsfamily(family = "gaussian")

, iter = 4000, warmup = 2000, chains = 4

, cores = round(parallel::detectCores()/2)

, file = paste0(rootdir, "/fits/brms_f_mod1")

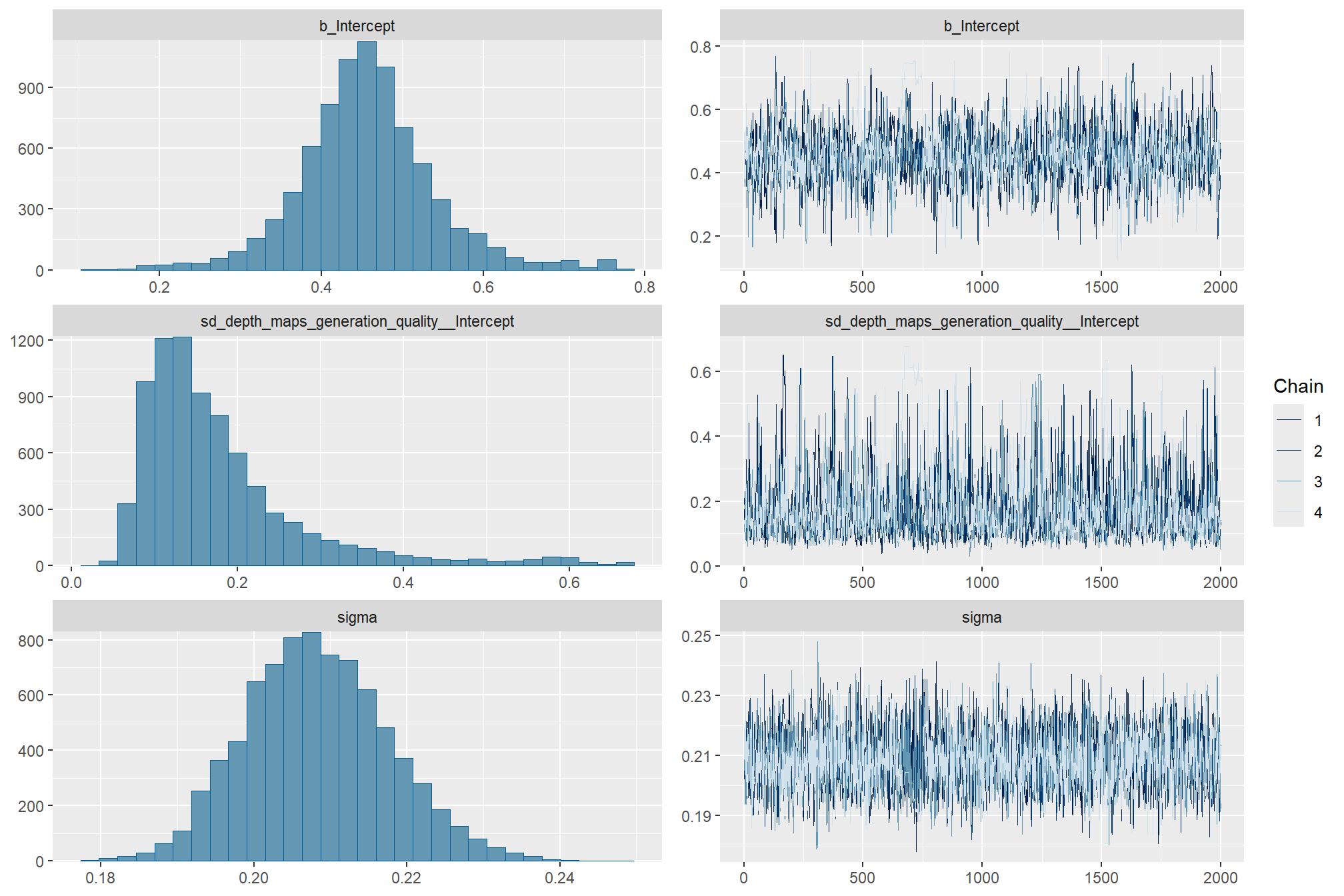

)check the trace plots for problems with convergence of the Markov chains

check the prior distributions

# check priors

brms::prior_summary(brms_f_mod1) %>%

kableExtra::kbl() %>%

kableExtra::kable_styling()| prior | class | coef | group | resp | dpar | nlpar | lb | ub | source |

|---|---|---|---|---|---|---|---|---|---|

| student_t(3, 0.4, 2.5) | Intercept | default | |||||||

| student_t(3, 0, 2.5) | sd | 0 | default | ||||||

| sd | depth_maps_generation_quality | default | |||||||

| sd | Intercept | depth_maps_generation_quality | default | ||||||

| student_t(3, 0, 2.5) | sigma | 0 | default |

The brms::brm model summary

brms_f_mod1 %>%

brms::posterior_summary() %>%

as.data.frame() %>%

tibble::rownames_to_column(var = "parameter") %>%

dplyr::rename_with(tolower) %>%

dplyr::filter(

stringr::str_starts(parameter, "b_")

| stringr::str_starts(parameter, "r_")

| parameter == "sigma"

) %>%

dplyr::mutate(

parameter = parameter %>%

stringr::str_remove_all("b_depth_maps_generation_quality") %>%

stringr::str_remove_all("r_depth_maps_generation_quality")

) %>%

kableExtra::kbl(digits = 2, caption = "Bayesian one nominal predictor: F-score by dense cloud quality") %>%

kableExtra::kable_styling()| parameter | estimate | est.error | q2.5 | q97.5 |

|---|---|---|---|---|

| b_Intercept | 0.46 | 0.08 | 0.29 | 0.65 |

| sigma | 0.21 | 0.01 | 0.19 | 0.23 |

| [ultra.high,Intercept] | 0.11 | 0.09 | -0.08 | 0.29 |

| [high,Intercept] | 0.08 | 0.09 | -0.12 | 0.25 |

| [medium,Intercept] | 0.01 | 0.09 | -0.19 | 0.18 |

| [low,Intercept] | -0.05 | 0.09 | -0.25 | 0.12 |

| [lowest,Intercept] | -0.17 | 0.09 | -0.37 | 0.00 |

With the stats::coef function, we can get the group-level summaries in a “non-deflection” metric. In the model, the group means represented by \(\beta_{1[j]}\) are deflections from overall baseline, such that the deflections sum to zero (see Kruschke (2015, p.554)). Summaries of the group-specific deflections are available via the brms::ranef function.

stats::coef(brms_f_mod1) %>%

as.data.frame() %>%

tibble::rownames_to_column(var = "group") %>%

dplyr::rename_with(

.cols = -c("group")

, .fn = ~ stringr::str_remove_all(.x, "depth_maps_generation_quality.")

) %>%

kableExtra::kbl(digits = 2, caption = "brms::brm model: F-score by dense cloud quality") %>%

kableExtra::kable_styling()| group | Estimate.Intercept | Est.Error.Intercept | Q2.5.Intercept | Q97.5.Intercept |

|---|---|---|---|---|

| ultra high | 0.57 | 0.03 | 0.51 | 0.62 |

| high | 0.53 | 0.03 | 0.48 | 0.59 |

| medium | 0.47 | 0.03 | 0.41 | 0.52 |

| low | 0.40 | 0.03 | 0.35 | 0.46 |

| lowest | 0.28 | 0.03 | 0.22 | 0.35 |

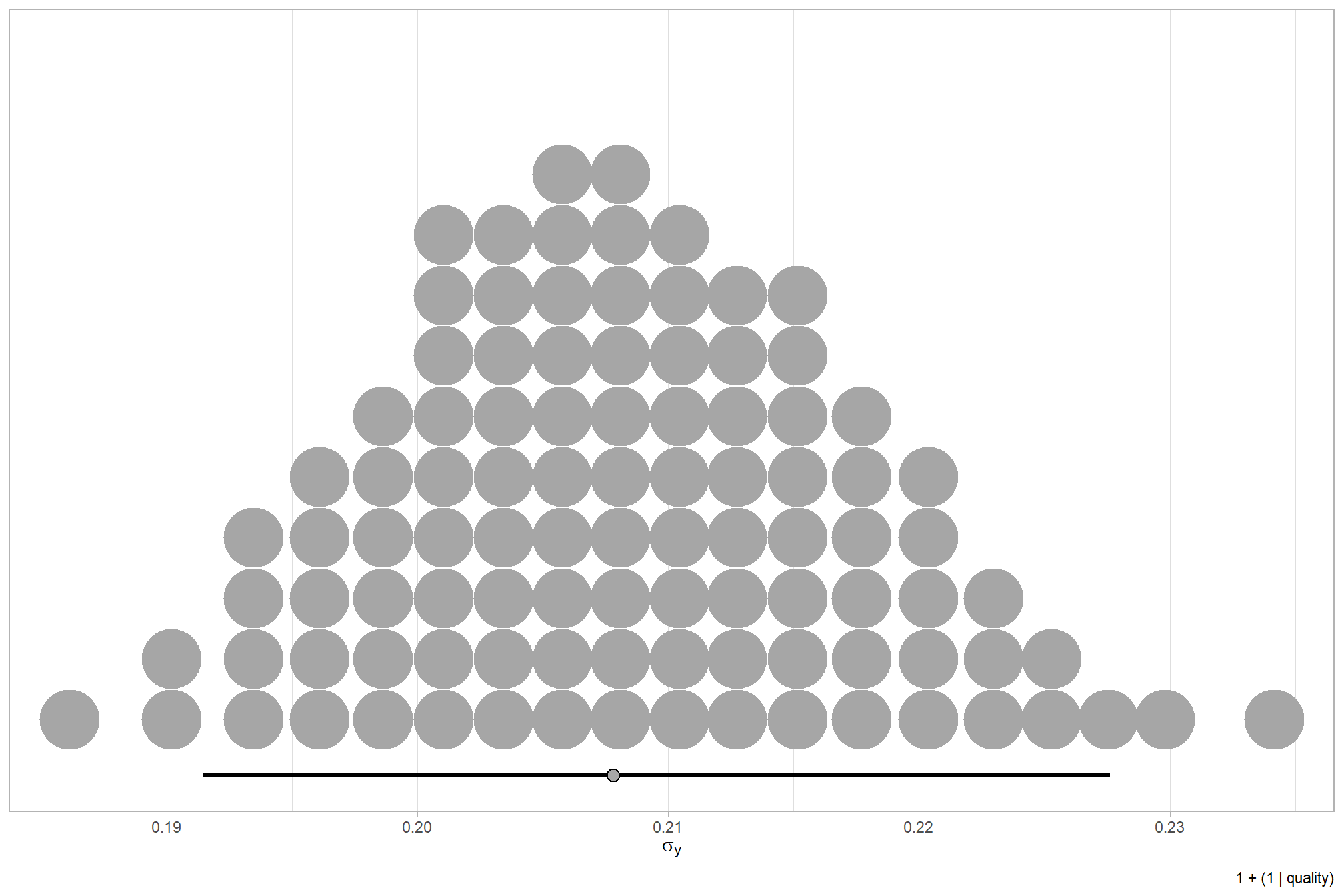

We can look at the model noise standard deviation \(\sigma_y\)

# get formula

form_temp = brms_f_mod1$formula$formula[3] %>%

as.character() %>% get_frmla_text() %>%

stringr::str_replace_all("depth_maps_generation_quality", "quality") %>%

stringr::str_replace_all("depth_maps_generation_filtering_mode", "filtering")

# extract the posterior draws

brms::as_draws_df(brms_f_mod1) %>%

# plot

ggplot(aes(x = sigma, y = 0)) +

tidybayes::stat_dotsinterval(

point_interval = median_hdi, .width = .95

, justification = -0.04

, shape = 21, point_size = 3

, quantiles = 100

) +

scale_y_continuous(NULL, breaks = NULL) +

labs(

x = latex2exp::TeX("$\\sigma_y$")

, caption = form_temp

) +

theme_light()

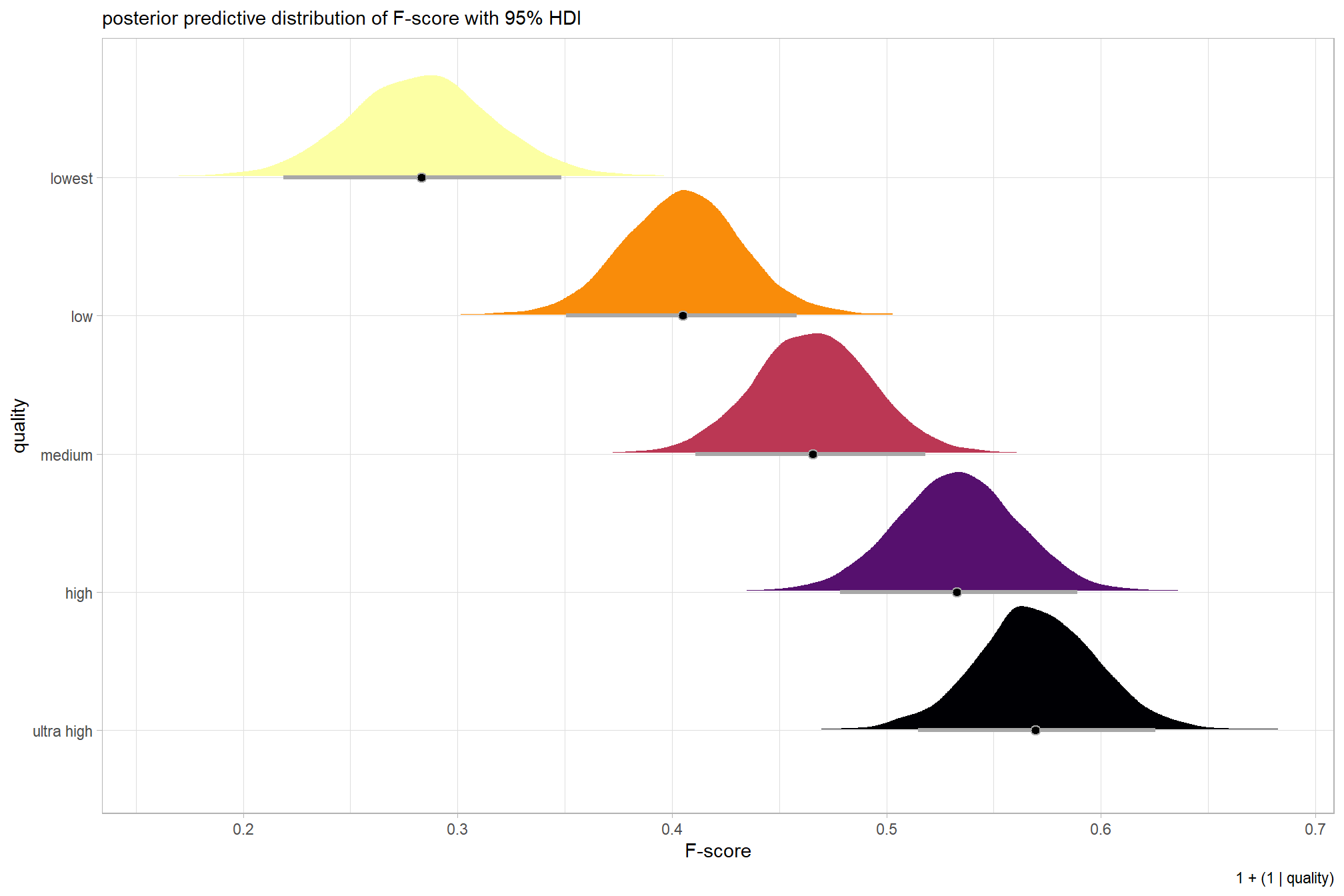

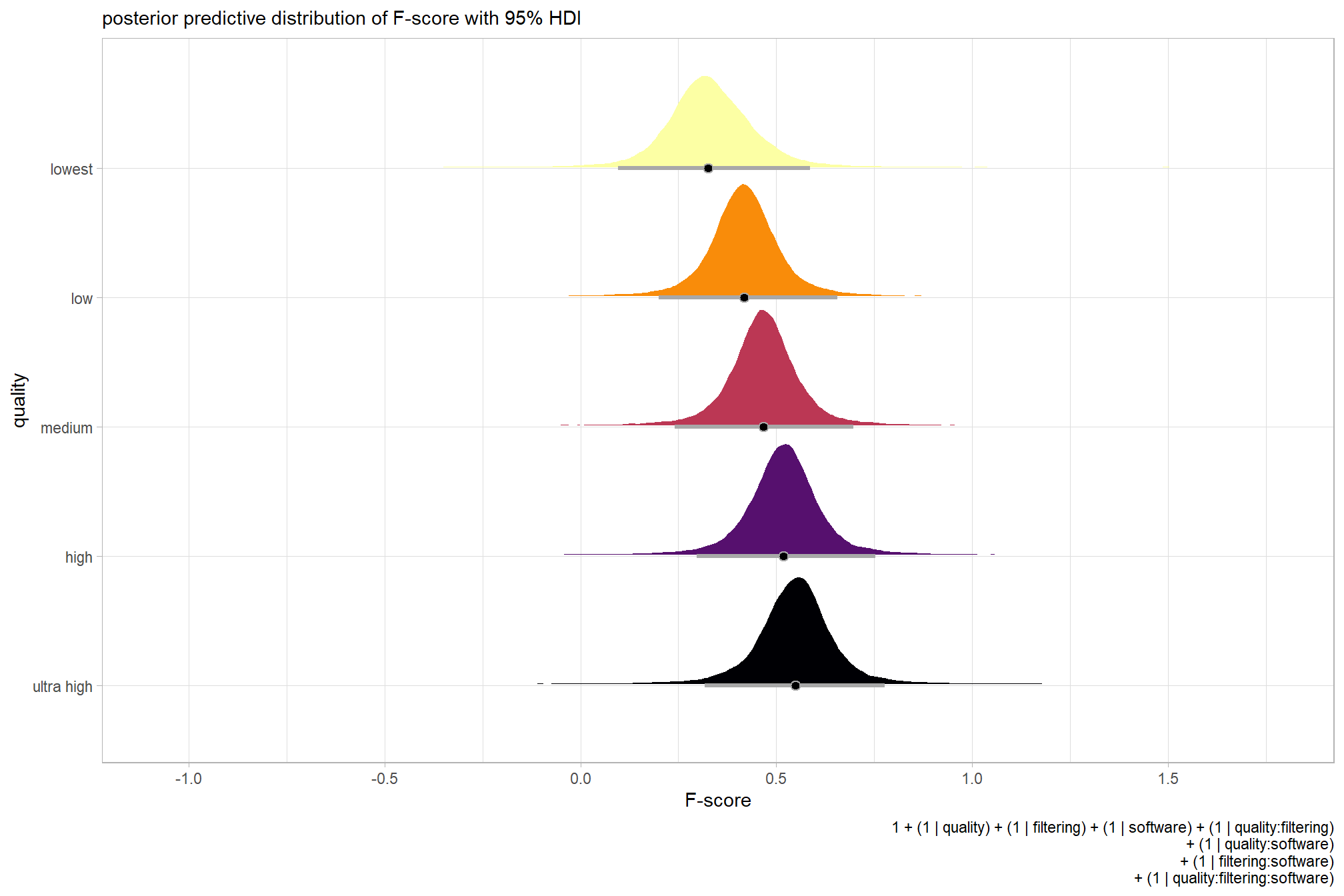

plot the posterior predictive distributions of the conditional means with the median F-score and the 95% highest posterior density interval (HDI)

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality) %>%

tidybayes::add_epred_draws(brms_f_mod1) %>%

dplyr::mutate(value = .epred) %>%

# plot

ggplot(

mapping = aes(

x = value, y = depth_maps_generation_quality

, fill = depth_maps_generation_quality

)

) +

tidybayes::stat_halfeye(

point_interval = median_hdi, .width = .95

, interval_color = "gray66"

, shape = 21, point_color = "gray66", point_fill = "black"

, justification = -0.01

) +

scale_fill_viridis_d(option = "inferno", drop = F) +

scale_x_continuous(breaks = scales::extended_breaks(n=8)) +

labs(

y = "quality", x = "F-score"

, subtitle = "posterior predictive distribution of F-score with 95% HDI"

, caption = form_temp

) +

theme_light() +

theme(legend.position = "none")

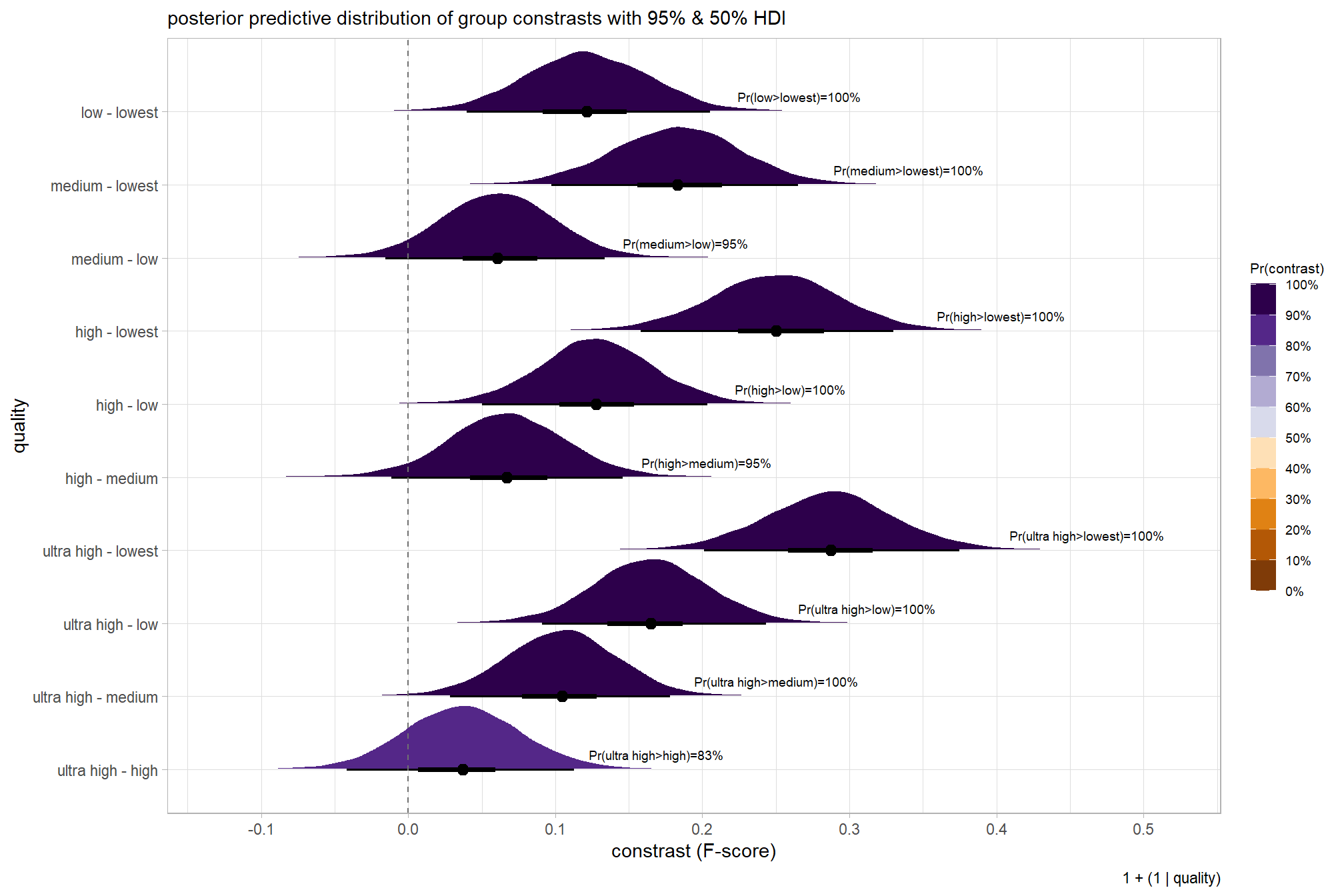

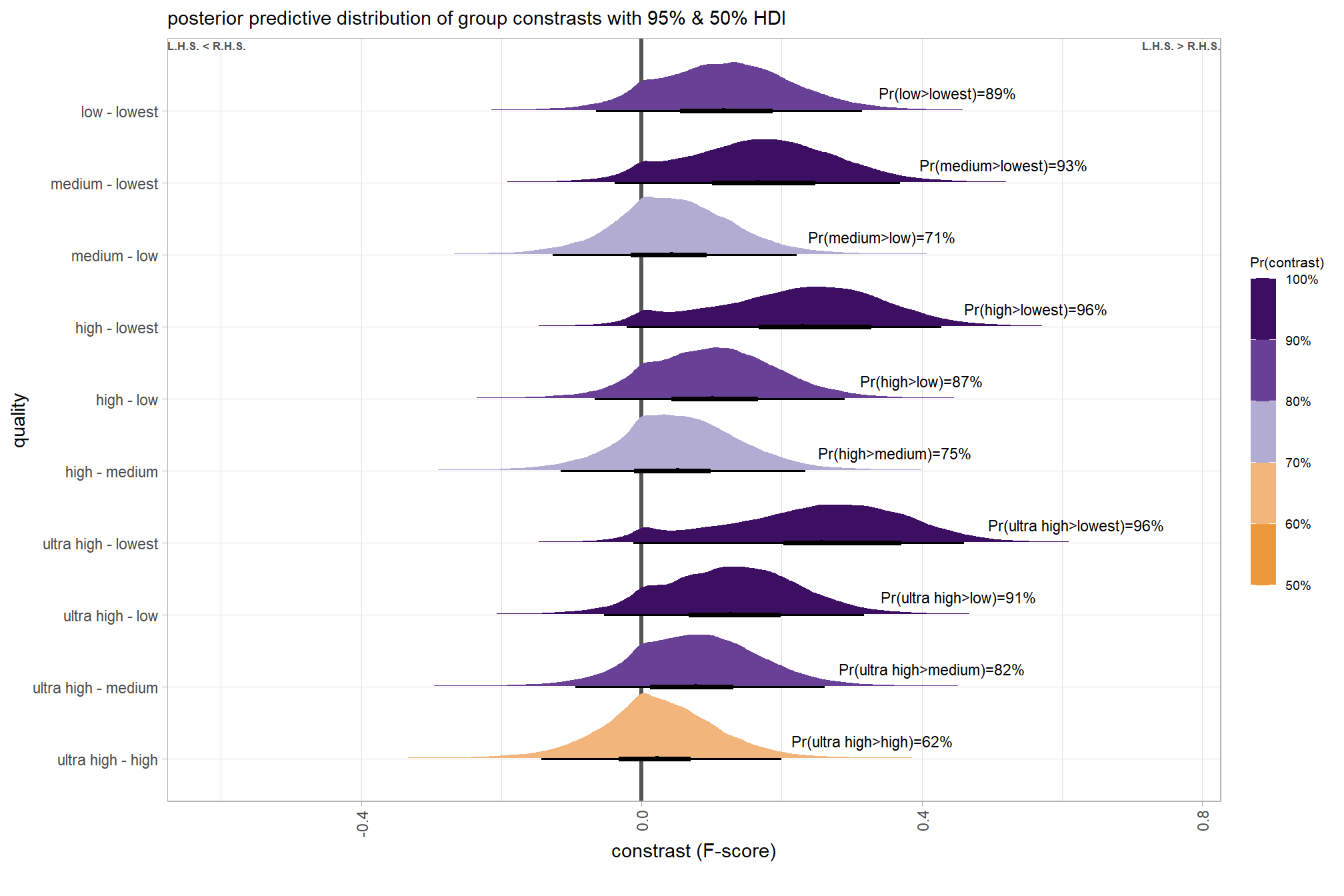

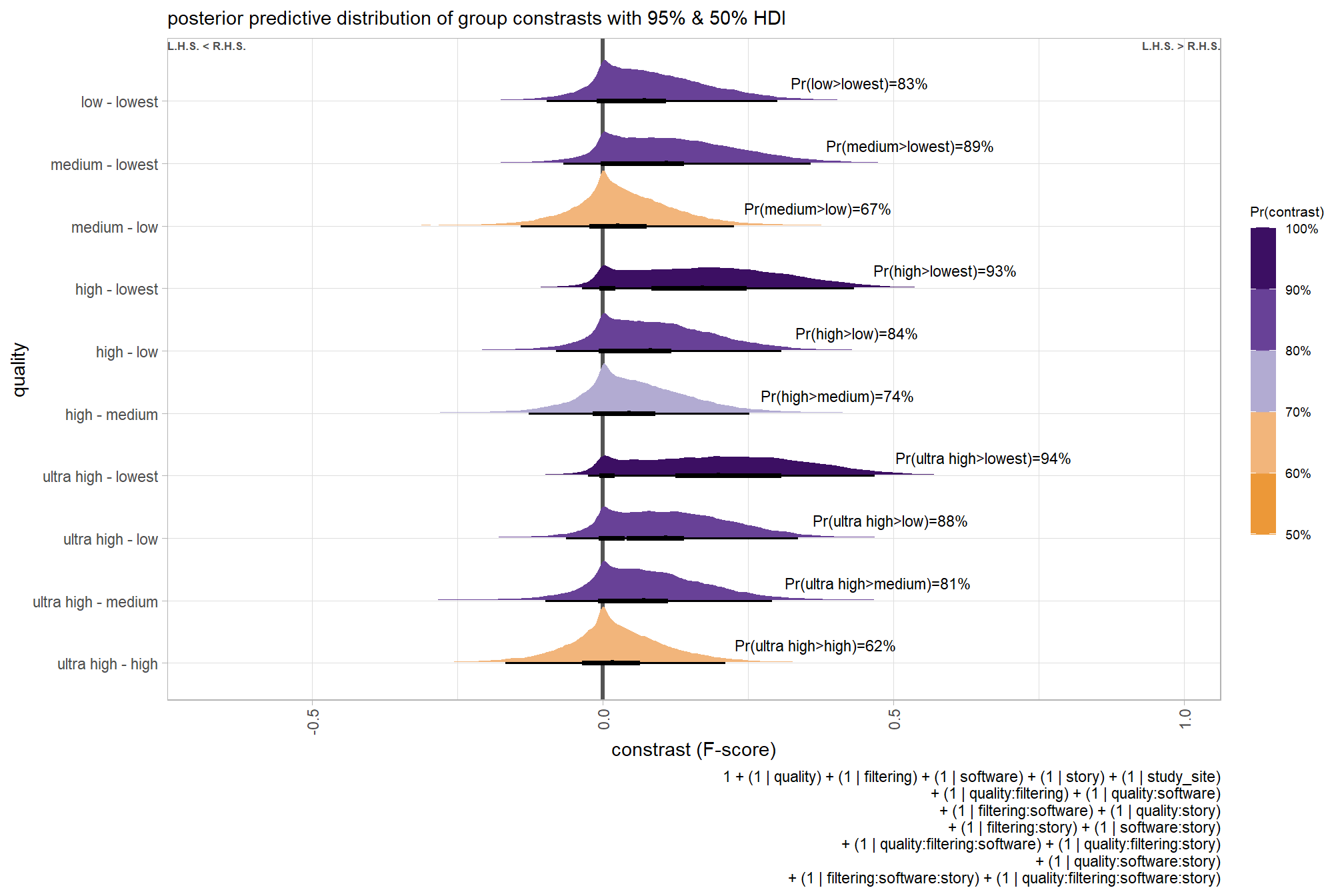

we can also make pairwise comparisons

# first we need to define the contrasts to make

contrast_list =

tidyr::crossing(

x1 = unique(ptcld_validation_data$depth_maps_generation_quality)

, x2 = unique(ptcld_validation_data$depth_maps_generation_quality)

) %>%

dplyr::mutate(

dplyr::across(

dplyr::everything()

, .fns = function(x){factor(

x, ordered = T

, levels = levels(ptcld_validation_data$depth_maps_generation_quality)

)}

)

) %>%

dplyr::filter(x1<x2) %>%

dplyr::arrange(x1,x2) %>%

dplyr::mutate(dplyr::across(dplyr::everything(), as.character)) %>%

purrr::transpose()

# contrast_list

# obtain posterior draws and calculate contrasts using tidybayes::compare_levels

brms_contrast_temp =

brms_f_mod1 %>%

tidybayes::spread_draws(r_depth_maps_generation_quality[depth_maps_generation_quality]) %>%

dplyr::mutate(

depth_maps_generation_quality = depth_maps_generation_quality %>%

stringr::str_replace_all("\\.", " ") %>%

factor(

levels = levels(ptcld_validation_data$depth_maps_generation_quality)

, ordered = T

)

) %>%

dplyr::rename(value = r_depth_maps_generation_quality) %>%

tidybayes::compare_levels(

value

, by = depth_maps_generation_quality

, comparison = contrast_list

# tidybayes::emmeans_comparison("revpairwise")

#"pairwise"

)

# generate the contrast column for creating an ordered factor

brms_contrast_temp =

brms_contrast_temp %>%

dplyr::ungroup() %>%

tidyr::separate_wider_delim(

cols = depth_maps_generation_quality

, delim = " - "

, names = paste0(

"sorter"

, 1:(max(stringr::str_count(brms_contrast_temp$depth_maps_generation_quality, "-"))+1)

)

, too_few = "align_start"

, cols_remove = F

) %>%

dplyr::filter(sorter1!=sorter2) %>%

dplyr::mutate(

dplyr::across(

tidyselect::starts_with("sorter")

, .fns = function(x){factor(

x, ordered = T

, levels = levels(ptcld_validation_data$depth_maps_generation_quality)

)}

)

, contrast = depth_maps_generation_quality %>% forcats::fct_reorder(

paste0(as.numeric(sorter1), as.numeric(sorter2)) %>%

as.numeric()

)

)

# median_hdi summary for coloring

brms_contrast_temp = brms_contrast_temp %>%

dplyr::group_by(contrast) %>%

dplyr::mutate(

# get median_hdi

median_hdi_est = tidybayes::median_hdi(value)$y

, median_hdi_lower = tidybayes::median_hdi(value)$ymin

, median_hdi_upper = tidybayes::median_hdi(value)$ymax

# check probability of contrast

, pr_gt_zero = mean(value > 0) %>%

scales::percent(accuracy = 1)

, pr_lt_zero = mean(value < 0) %>%

scales::percent(accuracy = 1)

# check probability that this direction is true

, is_diff_dir = dplyr::case_when(

median_hdi_est >= 0 ~ value > 0

, median_hdi_est < 0 ~ value < 0

)

, pr_diff = mean(is_diff_dir)

# make a label

, pr_diff_lab = dplyr::case_when(

median_hdi_est > 0 ~ paste0(

"Pr("

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr("

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

)

# make a SMALLER label

, pr_diff_lab_sm = dplyr::case_when(

median_hdi_est >= 0 ~ paste0(

"Pr(>0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr(<0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

)

, pr_diff_lab_pos = dplyr::case_when(

median_hdi_est > 0 ~ median_hdi_upper

, median_hdi_est < 0 ~ median_hdi_lower

) * 1.09

, sig_level = dplyr::case_when(

pr_diff > 0.99 ~ 0

, pr_diff > 0.95 ~ 1

, pr_diff > 0.9 ~ 2

, pr_diff > 0.8 ~ 3

, T ~ 4

) %>%

factor(levels = c(0:4), labels = c(">99%","95%","90%","80%","<80%"), ordered = T)

)

# what?

brms_contrast_temp %>%

dplyr::ungroup() %>%

dplyr::count(contrast, median_hdi_est, pr_diff_lab,pr_diff_lab_sm)## # A tibble: 10 × 5

## contrast median_hdi_est pr_diff_lab pr_diff_lab_sm n

## <fct> <dbl> <chr> <chr> <int>

## 1 ultra high - high 0.0370 Pr(ultra high>high)=… Pr(>0)=83% 8000

## 2 ultra high - medium 0.105 Pr(ultra high>medium… Pr(>0)=100% 8000

## 3 ultra high - low 0.165 Pr(ultra high>low)=1… Pr(>0)=100% 8000

## 4 ultra high - lowest 0.287 Pr(ultra high>lowest… Pr(>0)=100% 8000

## 5 high - medium 0.0670 Pr(high>medium)=95% Pr(>0)=95% 8000

## 6 high - low 0.128 Pr(high>low)=100% Pr(>0)=100% 8000

## 7 high - lowest 0.250 Pr(high>lowest)=100% Pr(>0)=100% 8000

## 8 medium - low 0.0607 Pr(medium>low)=95% Pr(>0)=95% 8000

## 9 medium - lowest 0.183 Pr(medium>lowest)=10… Pr(>0)=100% 8000

## 10 low - lowest 0.121 Pr(low>lowest)=100% Pr(>0)=100% 8000plot it

# plot, finally

brms_contrast_temp %>%

ggplot(aes(x = value, y = contrast, fill = pr_diff)) +

tidybayes::stat_halfeye(

point_interval = median_hdi, .width = c(0.5,0.95)

# , slab_fill = "gray22", slab_alpha = 1

, interval_color = "black", point_color = "black", point_fill = "black"

, justification = -0.01

) +

geom_vline(xintercept = 0, linetype = "dashed", color = "gray44") +

geom_text(

data = brms_contrast_temp %>%

dplyr::ungroup() %>%

dplyr::count(contrast, pr_diff_lab, pr_diff_lab_pos, pr_diff)

, mapping = aes(x = pr_diff_lab_pos, label = pr_diff_lab)

, vjust = -1, hjust = 0, size = 2.5

) +

scale_fill_fermenter(

n.breaks = 10, palette = "PuOr"

, direction = 1

, limits = c(0,1)

, labels = scales::percent

) +

scale_x_continuous(breaks = scales::extended_breaks(n=8), expand = expansion(mult = c(0.1,0.2))) +

labs(

y = "quality"

, x = "constrast (F-score)"

, fill = "Pr(contrast)"

, subtitle = "posterior predictive distribution of group constrasts with 95% & 50% HDI"

, caption = form_temp

) +

theme_light() +

theme(

legend.text = element_text(size = 7)

, legend.title = element_text(size = 8)

) +

guides(fill = guide_colorbar(theme = theme(

legend.key.width = unit(1, "lines"),

legend.key.height = unit(12, "lines")

)))

and summarize these contrasts

# # can also use the following as substitute for the "tidybayes::spread_draws" used above to get same result

brms_contrast_temp %>%

dplyr::group_by(contrast) %>%

tidybayes::median_hdi(value) %>%

select(-c(.point,.interval, .width)) %>%

dplyr::arrange(desc(contrast)) %>%

kableExtra::kbl(

digits = 2

, caption = "brms::brm model: 95% HDI of the posterior predictive distribution of group constrasts in F-score"

, col.names = c(

"quality contrast"

, "difference (F-score)"

, "HDI low", "HDI high"

)

) %>%

kableExtra::kable_styling()| quality contrast | difference (F-score) | HDI low | HDI high |

|---|---|---|---|

| low - lowest | 0.12 | 0.04 | 0.21 |

| medium - lowest | 0.18 | 0.10 | 0.27 |

| medium - low | 0.06 | -0.02 | 0.13 |

| high - lowest | 0.25 | 0.16 | 0.33 |

| high - low | 0.13 | 0.05 | 0.20 |

| high - medium | 0.07 | -0.01 | 0.15 |

| ultra high - lowest | 0.29 | 0.20 | 0.37 |

| ultra high - low | 0.16 | 0.09 | 0.24 |

| ultra high - medium | 0.10 | 0.03 | 0.18 |

| ultra high - high | 0.04 | -0.04 | 0.11 |

Before we move on to the next section, look above at how many arguments we fiddled with to configure our tidybayes::stat_halfeye() plot. Given how many more contrast plots we have looming in our not-too-distant future, we might go ahead and save these settings as a new function. We’ll call it plt_contrast().

plt_contrast <- function(

my_data

, x = "value"

, y = "contrast"

, fill = "pr_diff"

, label = "pr_diff_lab"

, label_pos = "pr_diff_lab_pos"

, label_size = 3

, x_expand = c(0.1, 0.1)

, facet = NA

, y_axis_title = ""

, caption_text = "" # form_temp

, annotate_size = 2.2

) {

# df for annotation

get_annotation_df <- function(

my_text_list = c(

"Bottom Left (h0,v0)","Top Left (h0,v1)"

,"Bottom Right h1,v0","Top Right h1,v1"

)

, hjust = c(0,0,1,1) # higher values = right, lower values = left

, vjust = c(0,1.3,0,1.3) # higher values = down, lower values = up

){

df = data.frame(

xpos = c(-Inf,-Inf,Inf,Inf)

, ypos = c(-Inf, Inf,-Inf,Inf)

, annotate_text = my_text_list

, hjustvar = hjust

, vjustvar = vjust

)

return(df)

}

# plot

plt =

my_data %>%

ggplot(aes(x = .data[[x]], y = .data[[y]])) +

geom_vline(xintercept = 0, linetype = "solid", color = "gray33", lwd = 1.1) +

tidybayes::stat_halfeye(

mapping = aes(fill = .data[[fill]])

, point_interval = median_hdi, .width = c(0.5,0.95)

# , slab_fill = "gray22", slab_alpha = 1

, interval_color = "black", point_color = "black", point_fill = "black"

, point_size = 0.9

, justification = -0.01

) +

geom_text(

data = get_annotation_df(

my_text_list = c(

"","L.H.S. < R.H.S."

,"","L.H.S. > R.H.S."

)

)

, mapping = aes(

x = xpos, y = ypos

, hjust = hjustvar, vjust = vjustvar

, label = annotate_text

, fontface = "bold"

)

, size = annotate_size

, color = "gray30" # "#2d2a4d" #"#204445"

) +

# scale_fill_fermenter(

# n.breaks = 5 # 10 use 10 if can go full range 0-1

# , palette = "PuOr" # "RdYlBu"

# , direction = 1

# , limits = c(0.5,1) # use c(0,1) if can go full range 0-1

# , labels = scales::percent

# ) +

scale_fill_stepsn(

n.breaks = 5 # 10 use 10 if can go full range 0-1

, colors = RColorBrewer::brewer.pal(11,"PuOr")[c(3,4,8,10,11)]

, limits = c(0.5,1) # use c(0,1) if can go full range 0-1

, labels = scales::percent

) +

scale_x_continuous(expand = expansion(mult = x_expand)) +

labs(

y = y_axis_title

, x = "constrast (F-score)"

, fill = "Pr(contrast)"

, subtitle = "posterior predictive distribution of group constrasts with 95% & 50% HDI"

, caption = caption_text

) +

theme_light() +

theme(

legend.text = element_text(size = 7)

, legend.title = element_text(size = 8)

, axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1.05)

, strip.text = element_text(color = "black", face = "bold")

) +

guides(fill = guide_colorbar(theme = theme(

legend.key.width = unit(1, "lines"),

legend.key.height = unit(12, "lines")

)))

# return facet or not

if(max(is.na(facet))==0){

return(

plt +

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos>=0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

, facet

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = 0, size = label_size

) +

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos<0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

, facet

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = +1, size = label_size

) +

facet_grid(cols = vars(.data[[facet]]))

)

}

else{

return(

plt +

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos>=0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = 0, size = label_size

)+

geom_text(

data = my_data %>%

dplyr::filter(pr_diff_lab_pos<0) %>%

dplyr::ungroup() %>%

dplyr::select(tidyselect::all_of(c(

y

, fill

, label

, label_pos

))) %>%

dplyr::distinct()

, mapping = aes(x = .data[[label_pos]], label = .data[[label]])

, vjust = -1, hjust = +1, size = label_size

)

)

}

}

# plt_contrast(brms_contrast_temp, label = "pr_diff_lab_sm")We’ll also create a function to create all of the probability labeling columns in the contrast data called make_contrast_vars()

Note, here we use tidybayes::median_hdci() to avoid potential for returning multiple rows by group if our data is grouped. See the documentation for the ggdist package which notes that “If the distribution is multimodal, hdi may return multiple intervals for each probability level (these will be spread over rows).”

make_contrast_vars = function(my_data){

my_data %>%

dplyr::mutate(

# get median_hdi

median_hdi_est = tidybayes::median_hdci(value)$y

, median_hdi_lower = tidybayes::median_hdci(value)$ymin

, median_hdi_upper = tidybayes::median_hdci(value)$ymax

# check probability of contrast

, pr_gt_zero = mean(value > 0) %>%

scales::percent(accuracy = 1)

, pr_lt_zero = mean(value < 0) %>%

scales::percent(accuracy = 1)

# check probability that this direction is true

, is_diff_dir = dplyr::case_when(

median_hdi_est >= 0 ~ value > 0

, median_hdi_est < 0 ~ value < 0

)

, pr_diff = mean(is_diff_dir)

# make a label

, pr_diff_lab = dplyr::case_when(

median_hdi_est > 0 ~ paste0(

"Pr("

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr("

, stringr::word(contrast, 2, sep = fixed("-")) %>%

stringr::str_squish()

, ">"

, stringr::word(contrast, 1, sep = fixed("-")) %>%

stringr::str_squish()

, ")="

, pr_diff %>% scales::percent(accuracy = 1)

)

) %>%

stringr::str_replace_all("OPENDRONEMAP", "ODM") %>%

stringr::str_replace_all("METASHAPE", "MtaShp") %>%

stringr::str_replace_all("PIX4D", "Pix4D")

# make a SMALLER label

, pr_diff_lab_sm = dplyr::case_when(

median_hdi_est >= 0 ~ paste0(

"Pr(>0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

, median_hdi_est < 0 ~ paste0(

"Pr(<0)="

, pr_diff %>% scales::percent(accuracy = 1)

)

)

, pr_diff_lab_pos = dplyr::case_when(

median_hdi_est > 0 ~ median_hdi_upper

, median_hdi_est < 0 ~ median_hdi_lower

) * 1.075

, sig_level = dplyr::case_when(

pr_diff > 0.99 ~ 0

, pr_diff > 0.95 ~ 1

, pr_diff > 0.9 ~ 2

, pr_diff > 0.8 ~ 3

, T ~ 4

) %>%

factor(levels = c(0:4), labels = c(">99%","95%","90%","80%","<80%"), ordered = T)

)

}

# brms_contrast_temp %>% dplyr::group_by(contrast) %>% make_contrast_vars() %>% dplyr::glimpse()6.4 Two Nominal Predictors

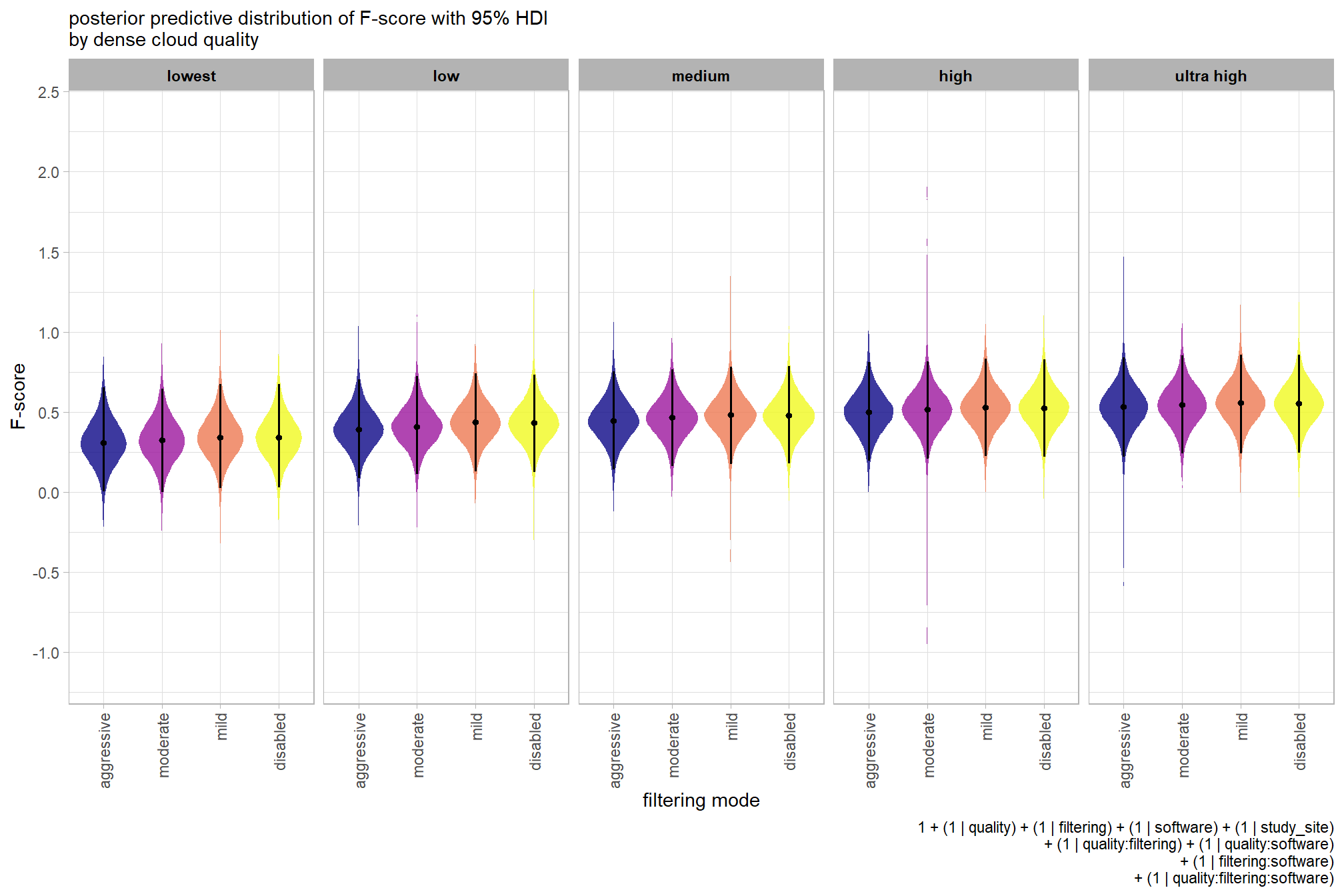

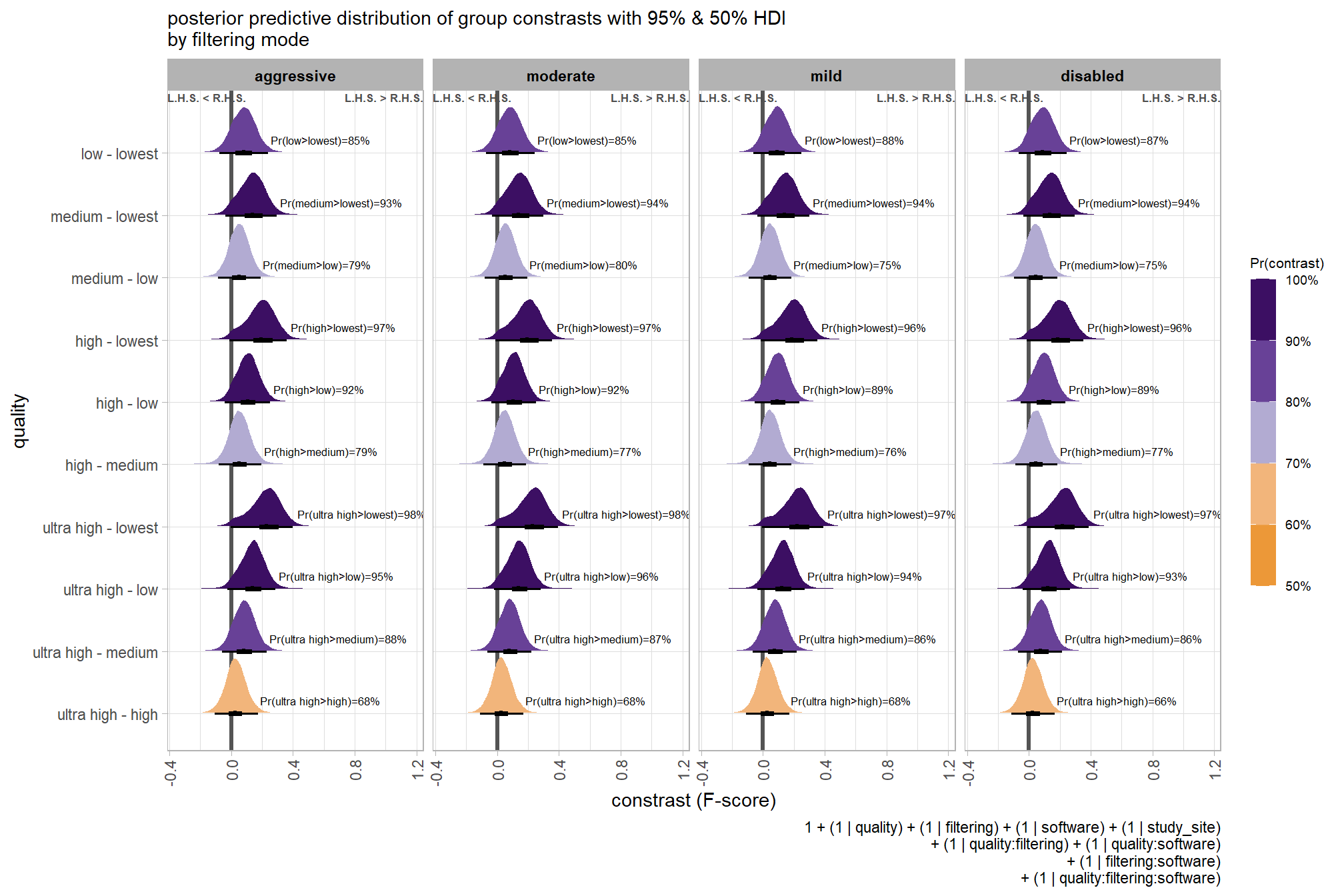

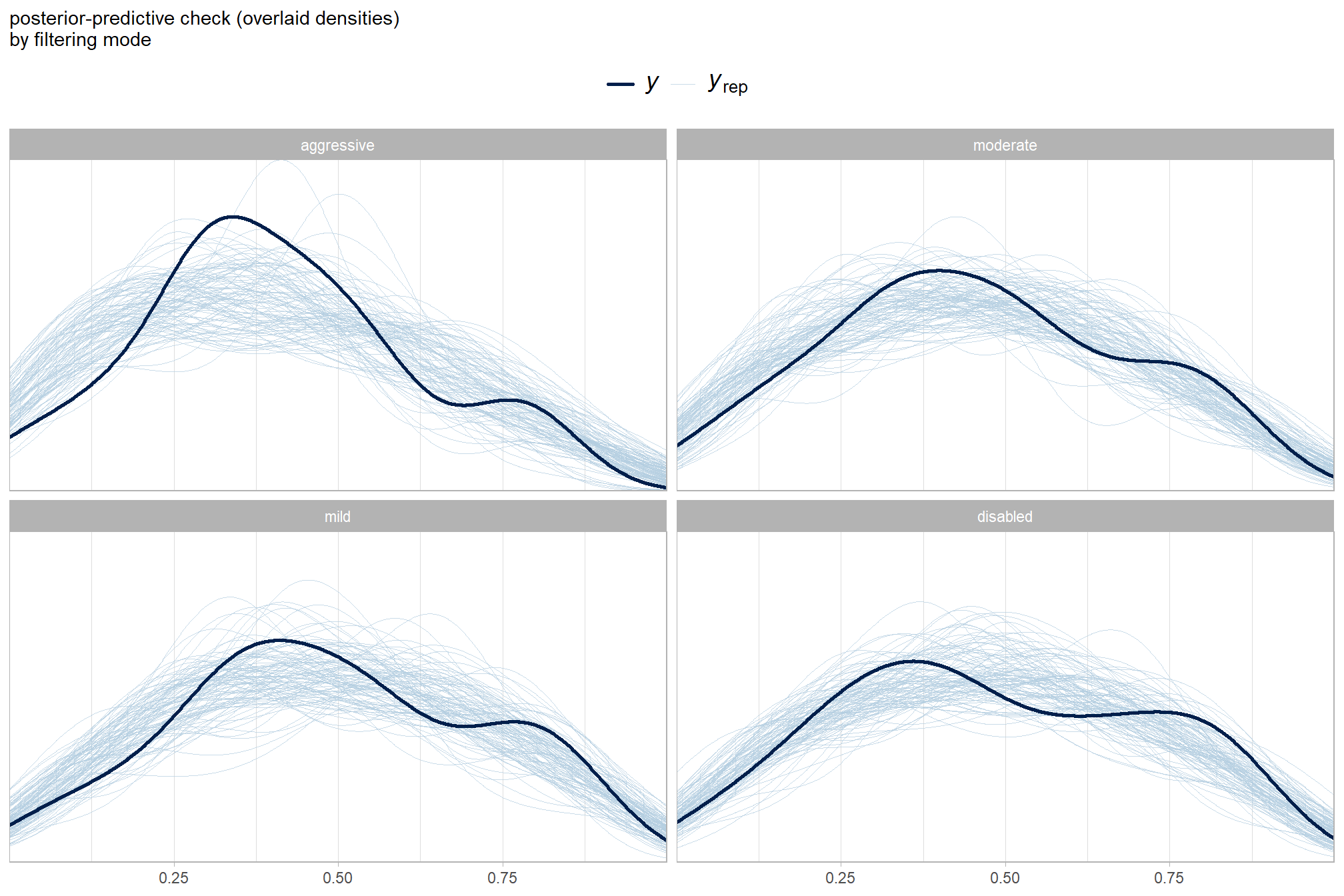

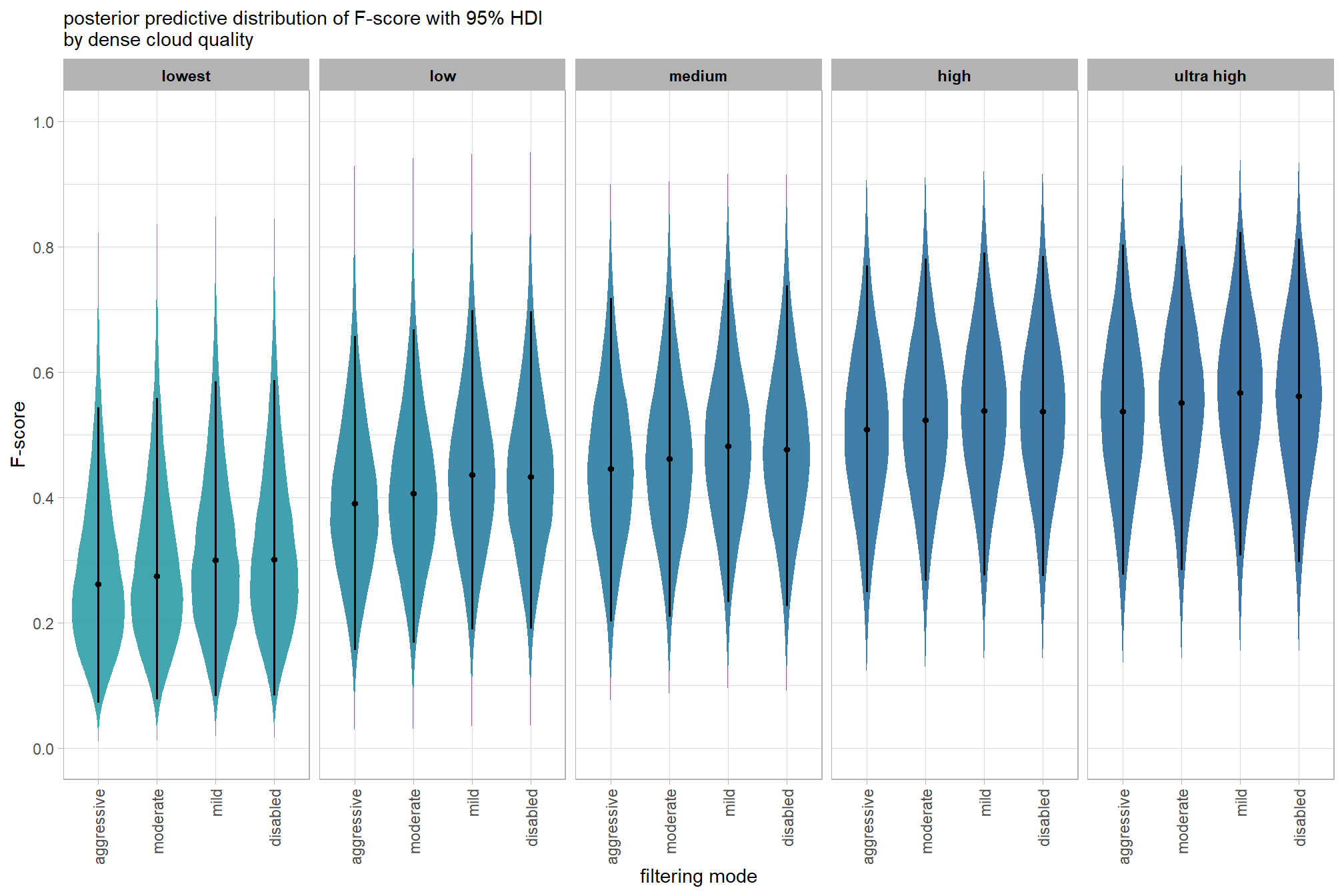

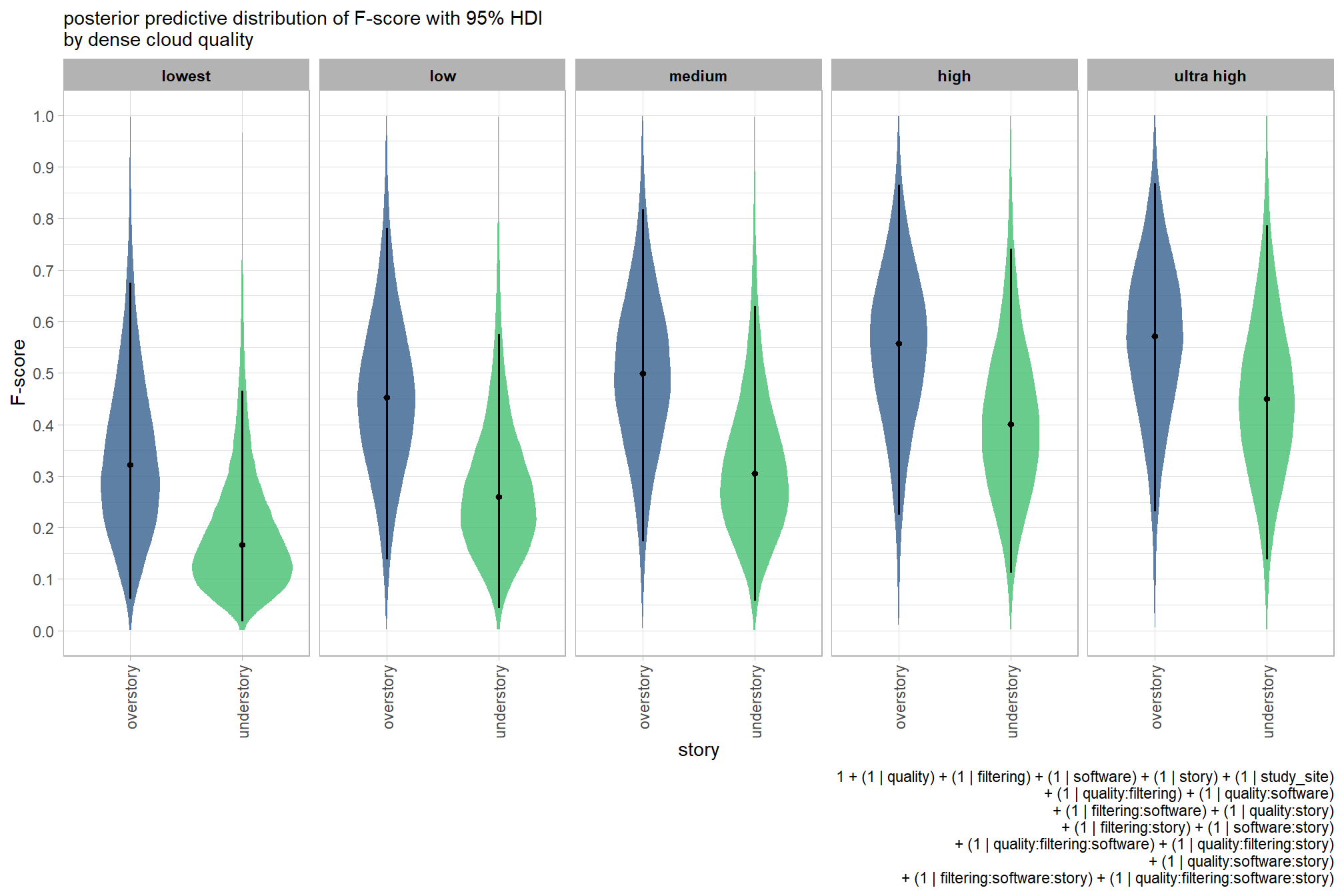

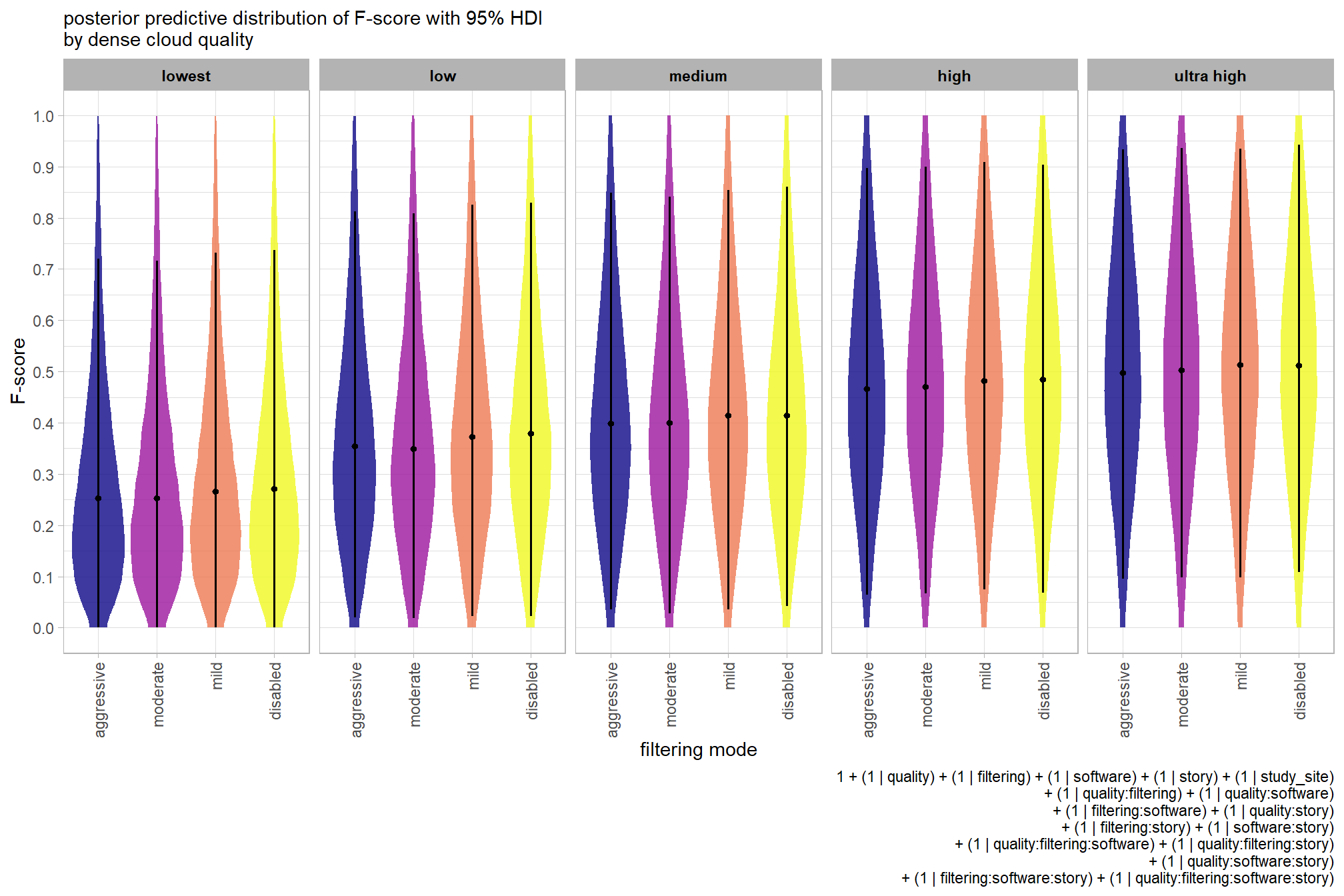

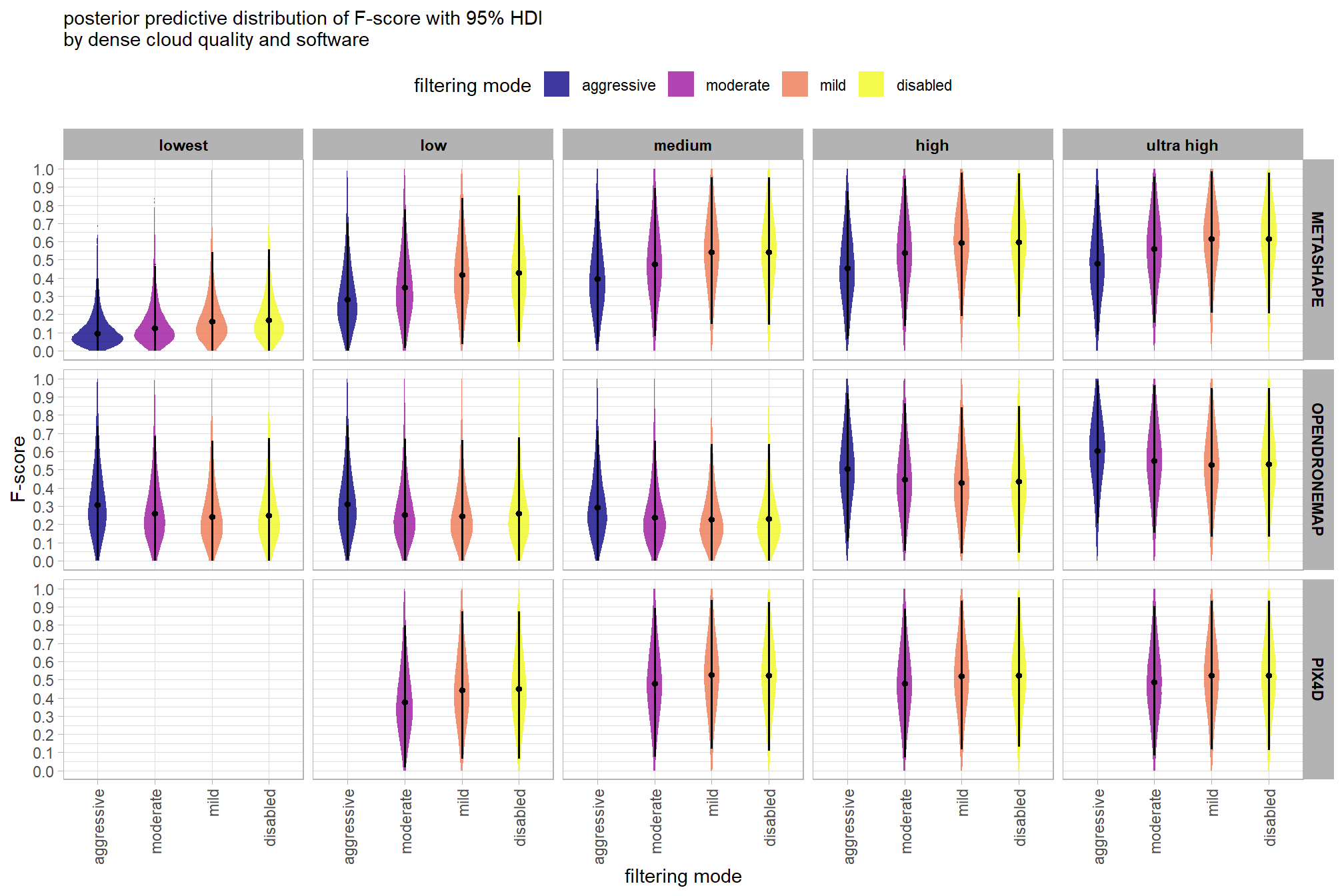

Now, we’ll determine the combined influence of the depth map generation quality and the depth map filtering parameters on the SfM-derived tree detection performance based on the F-score.

6.4.1 Summary Statistics

Summary statistics by group:

ptcld_validation_data %>%

dplyr::group_by(depth_maps_generation_quality, depth_maps_generation_filtering_mode) %>%

dplyr::summarise(

mean_f_score = mean(f_score, na.rm = T)

# , med_f_score = median(f_score, na.rm = T)

, sd_f_score = sd(f_score, na.rm = T)

, n = dplyr::n()

) %>%

kableExtra::kbl(

digits = 2

, caption = "summary statistics: F-score by dense cloud quality and filtering mode"

, col.names = c(

"quality"

, "filtering mode"

, "mean F-score"

, "sd"

, "n"

)

) %>%

kableExtra::kable_styling() %>%

kableExtra::scroll_box(height = "6in")| quality | filtering mode | mean F-score | sd | n |

|---|---|---|---|---|

| ultra high | aggressive | 0.58 | 0.20 | 10 |

| ultra high | moderate | 0.58 | 0.21 | 15 |

| ultra high | mild | 0.58 | 0.21 | 15 |

| ultra high | disabled | 0.56 | 0.23 | 15 |

| high | aggressive | 0.52 | 0.17 | 10 |

| high | moderate | 0.54 | 0.22 | 15 |

| high | mild | 0.54 | 0.22 | 15 |

| high | disabled | 0.54 | 0.23 | 15 |

| medium | aggressive | 0.39 | 0.16 | 10 |

| medium | moderate | 0.47 | 0.22 | 15 |

| medium | mild | 0.50 | 0.25 | 15 |

| medium | disabled | 0.48 | 0.25 | 15 |

| low | aggressive | 0.30 | 0.13 | 10 |

| low | moderate | 0.37 | 0.18 | 15 |

| low | mild | 0.46 | 0.22 | 15 |

| low | disabled | 0.45 | 0.23 | 15 |

| lowest | aggressive | 0.24 | 0.18 | 10 |

| lowest | moderate | 0.25 | 0.20 | 10 |

| lowest | mild | 0.30 | 0.18 | 10 |

| lowest | disabled | 0.30 | 0.21 | 10 |

6.4.2 Bayesian

Kruschke (2015) describes the Hierarchical Bayesian approach to describe groups of metric data with multiple nominal predictors:

This chapter considers data structures that consist of a metric predicted variable and two (or more) nominal predictors….The traditional treatment of this sort of data structure is called multifactor analysis of variance (ANOVA). Our Bayesian approach will be a hierarchical generalization of the traditional ANOVA model. The chapter also considers generalizations of the traditional models, because it is straight forward in Bayesian software to implement heavy-tailed distributions to accommodate outliers, along with hierarchical structure to accommodate heterogeneous variances in the different groups. (pp. 583–584)

and see section 20 from Kurz’s ebook supplement

The metric predicted variable with two nominal predictor variables model has the form:

\[\begin{align*} y_{i} &\sim \operatorname{Normal} \bigl(\mu_{i}, \sigma_{y} \bigr) \\ \mu_{i} &= \beta_0 + \sum_{j} \beta_{1[j]} x_{1[j]} + \sum_{k} \beta_{2[k]} x_{2[k]} + \sum_{j,k} \beta_{1\times2[j,k]} x_{1\times2[j,k]} \\ \beta_{0} &\sim \operatorname{Normal}(0,100) \\ \beta_{1[j]} &\sim \operatorname{Normal}(0,\sigma_{\beta_{1}}) \\ \beta_{2[k]} &\sim \operatorname{Normal}(0,\sigma_{\beta_{2}}) \\ \beta_{1\times2[j,k]} &\sim \operatorname{Normal}(0,\sigma_{\beta_{1\times2}}) \\ \sigma_{\beta_{1}} &\sim \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{2}} &\sim \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{1\times2}} &\sim \operatorname{Gamma}(1.28,0.005) \\ \sigma_{y} &\sim {\sf Cauchy} (0,109) \\ \end{align*}\]

, where \(j\) is the depth map generation quality setting corresponding to observation \(i\) and \(k\) is the depth map filtering mode setting corresponding to observation \(i\)

for this model, we’ll define the priors following Kurz who notes that:

The noise standard deviation \(\sigma_y\) is depicted in the prior statement including the argument

class = sigma…in order to be weakly informative, we will use the half-Cauchy. Recall that since the brms default is to set the lower bound for any variance parameter to 0, there’s no need to worry about doing so ourselves. So even though the syntax only indicatescauchy, it’s understood to mean Cauchy with a lower bound at zero; since the mean is usually 0, that makes this a half-Cauchy…The tails of the half-Cauchy are sufficiently fat that, in practice, I’ve found it doesn’t matter much what you set the \(SD\) of its prior to.

# from Kurz:

gamma_a_b_from_omega_sigma = function(mode, sd) {

if (mode <= 0) stop("mode must be > 0")

if (sd <= 0) stop("sd must be > 0")

rate = (mode + sqrt(mode^2 + 4 * sd^2)) / (2 * sd^2)

shape = 1 + mode * rate

return(list(shape = shape, rate = rate))

}

mean_y_temp = mean(ptcld_validation_data$f_score)

sd_y_temp = sd(ptcld_validation_data$f_score)

omega_temp = sd_y_temp / 2

sigma_temp = 2 * sd_y_temp

s_r_temp = gamma_a_b_from_omega_sigma(mode = omega_temp, sd = sigma_temp)

stanvars_temp =

brms::stanvar(mean_y_temp, name = "mean_y") +

brms::stanvar(sd_y_temp, name = "sd_y") +

brms::stanvar(s_r_temp$shape, name = "alpha") +

brms::stanvar(s_r_temp$rate, name = "beta")Now fit the model.

brms_f_mod2 = brms::brm(

formula = f_score ~ 1 +

(1 | depth_maps_generation_quality) +

(1 | depth_maps_generation_filtering_mode) +

(1 | depth_maps_generation_quality:depth_maps_generation_filtering_mode)

, data = ptcld_validation_data

, family = brms::brmsfamily(family = "gaussian")

, iter = 6000, warmup = 3000, chains = 4

, cores = round(parallel::detectCores()/2)

, prior = c(

brms::prior(normal(mean_y, sd_y * 5), class = "Intercept")

, brms::prior(gamma(alpha, beta), class = "sd")

, brms::prior(cauchy(0, sd_y), class = "sigma")

)

, stanvars = stanvars_temp

, file = paste0(rootdir, "/fits/brms_f_mod2")

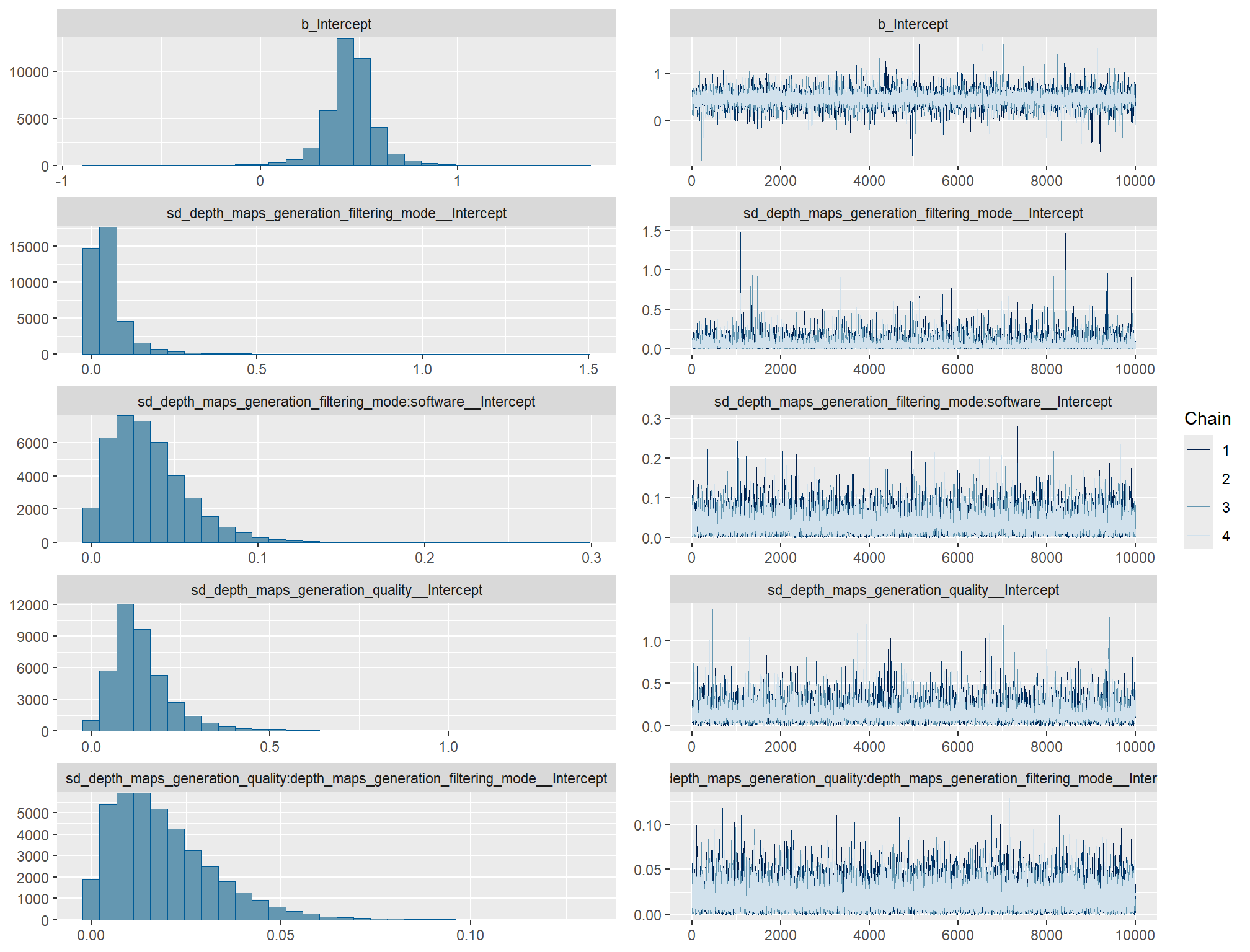

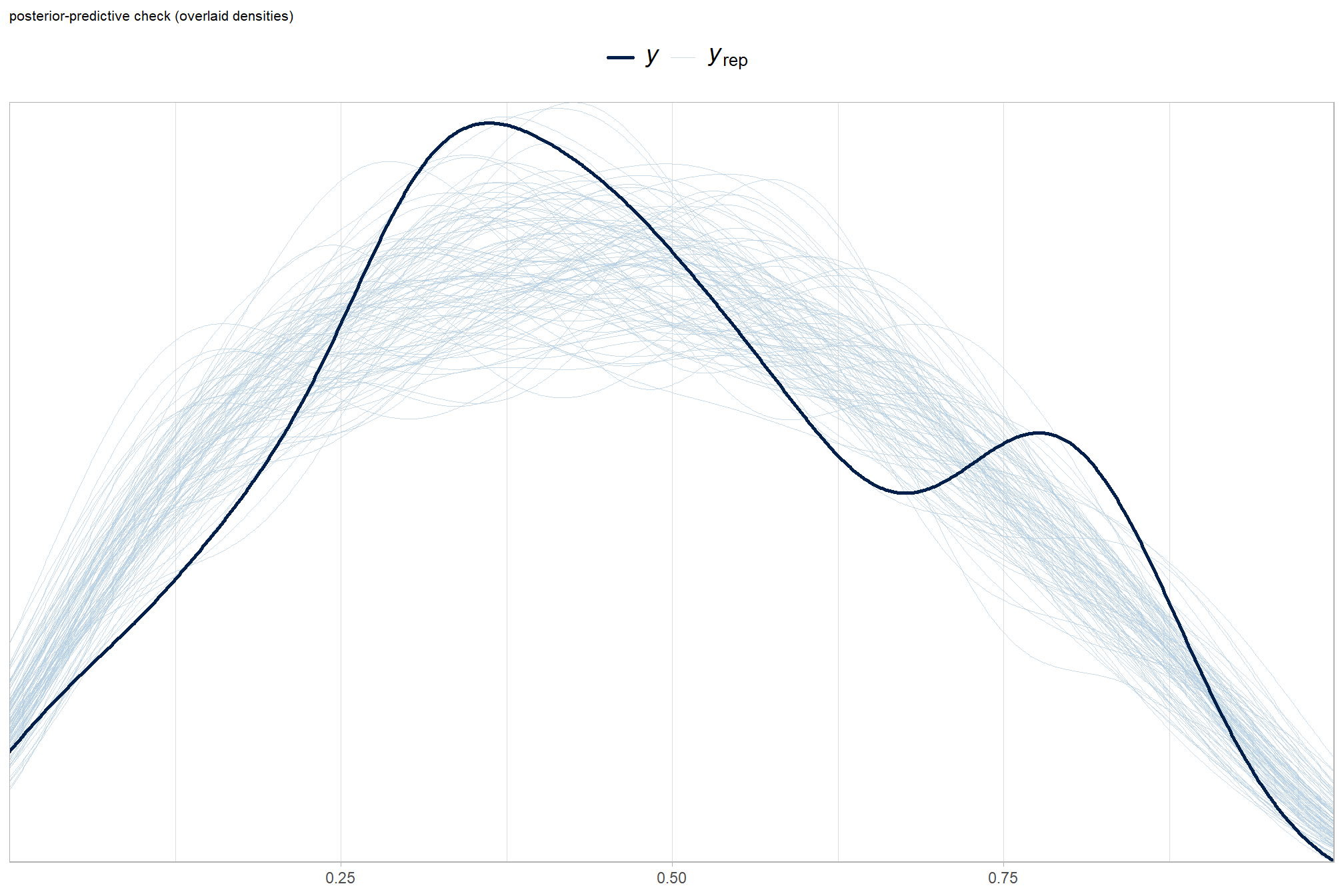

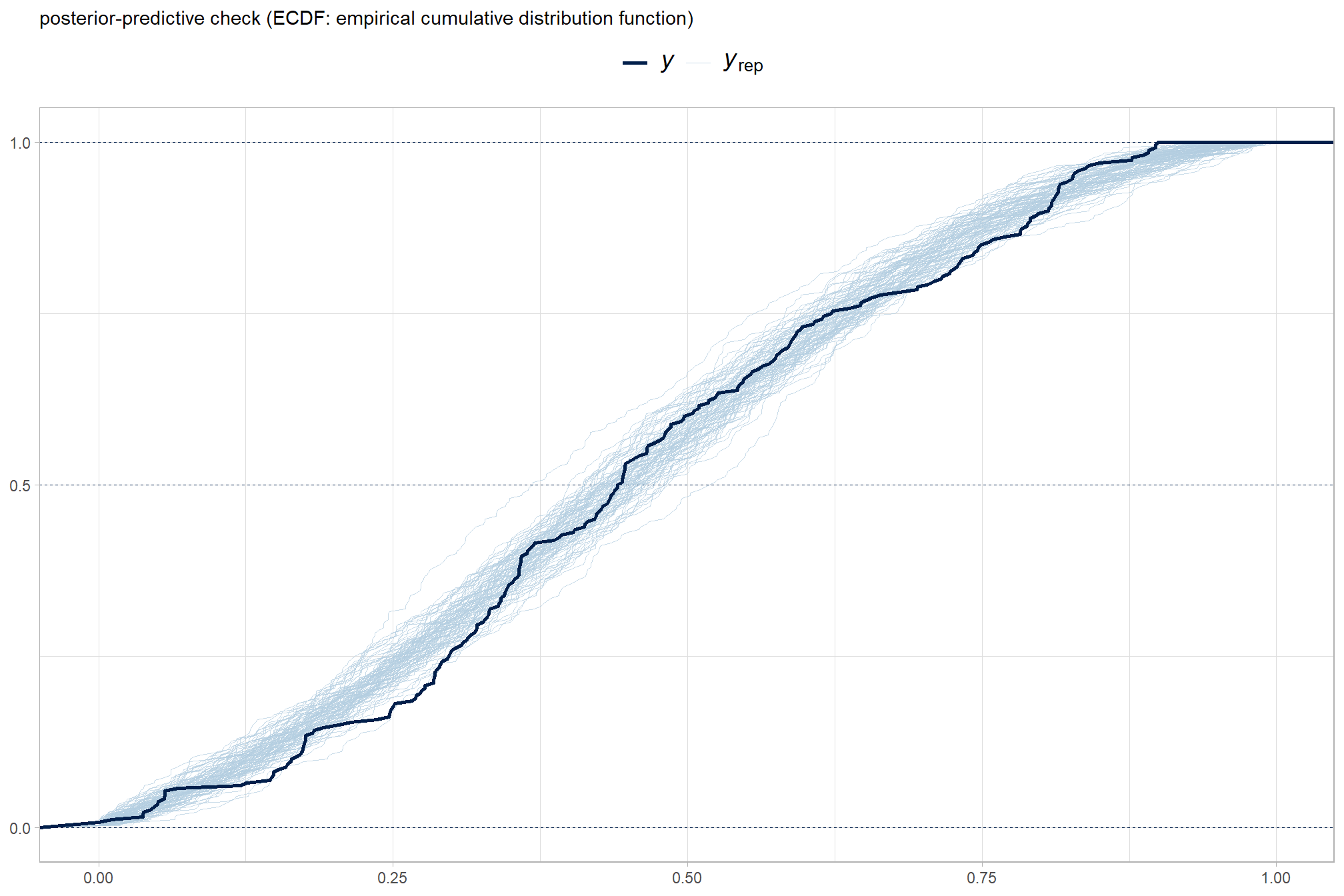

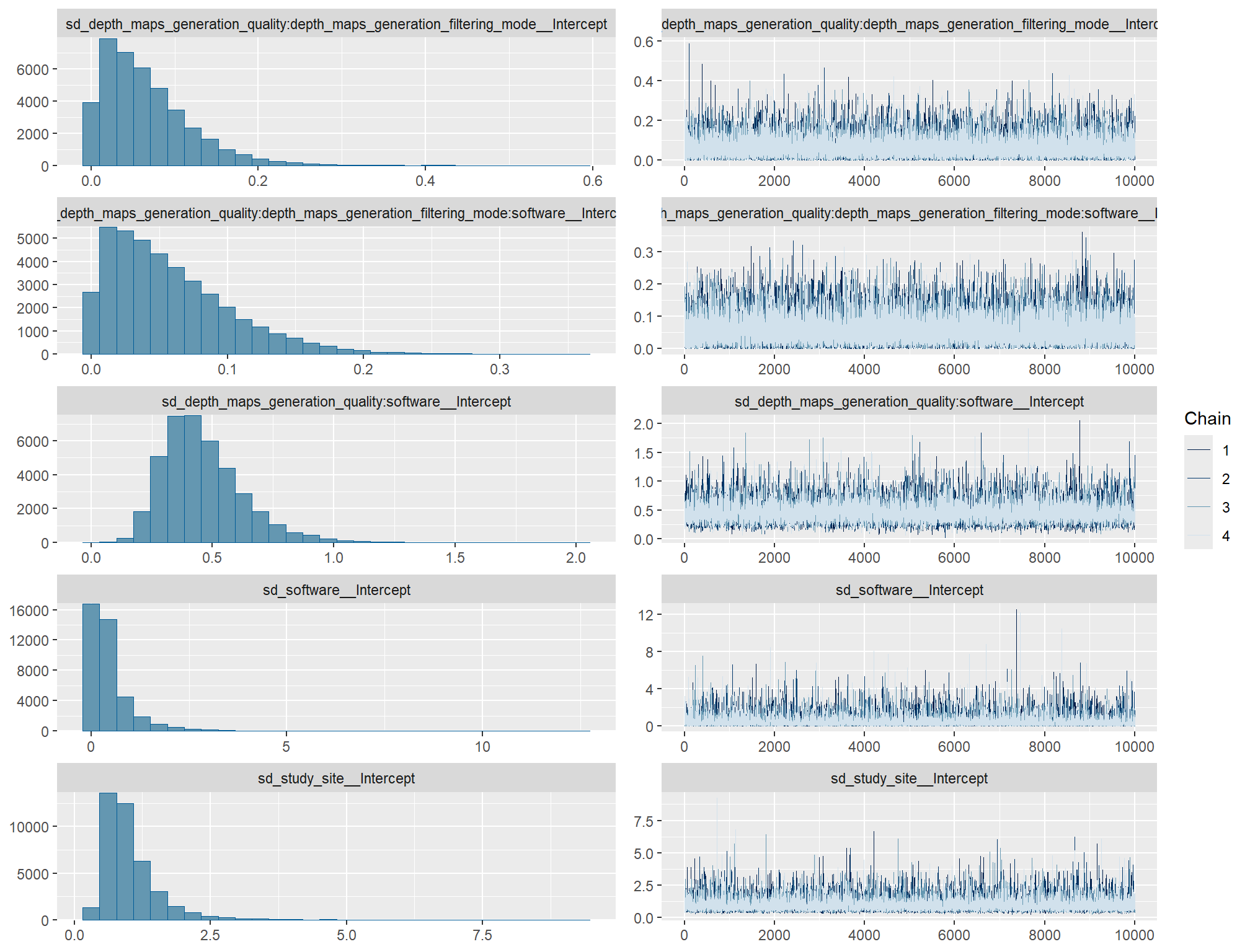

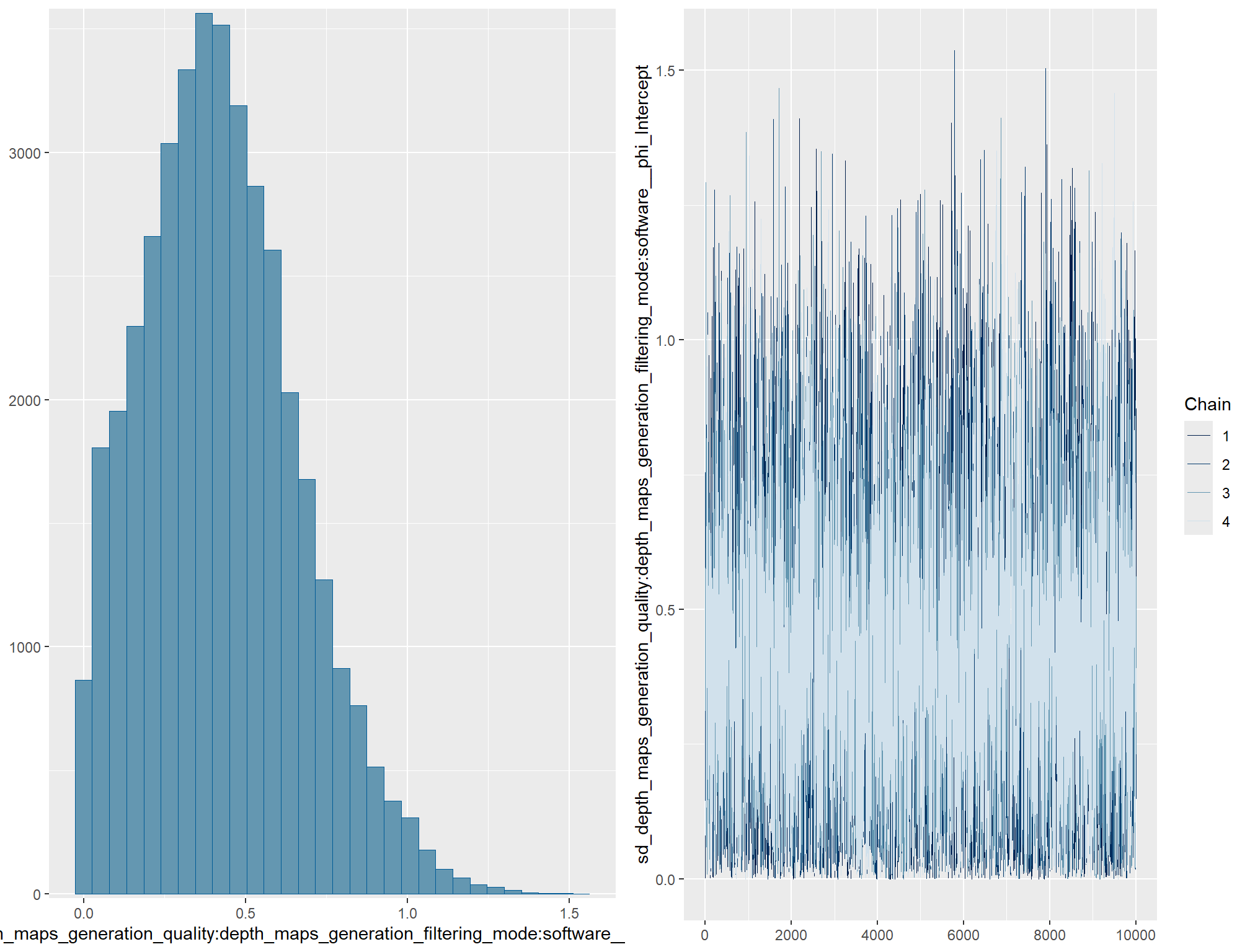

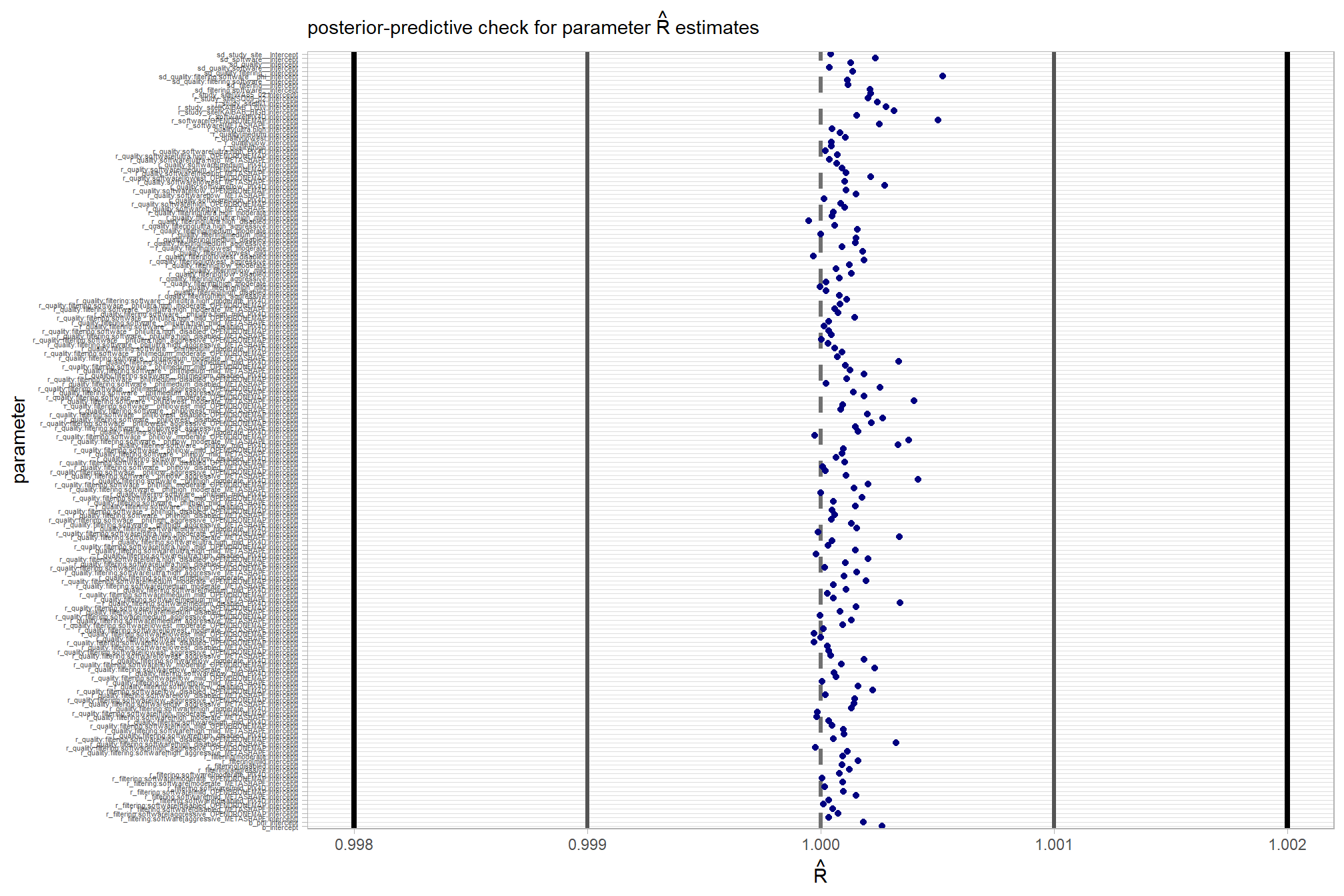

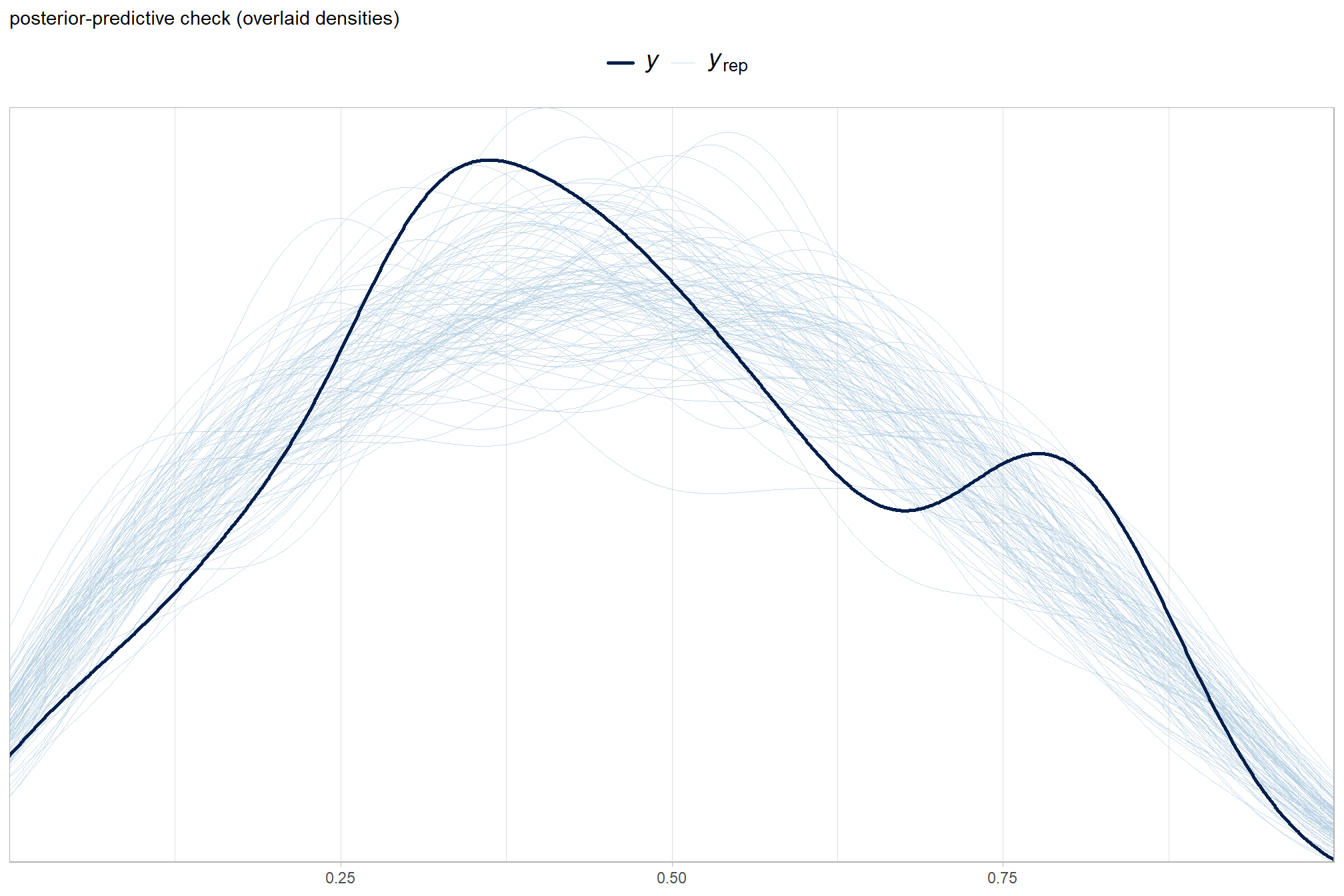

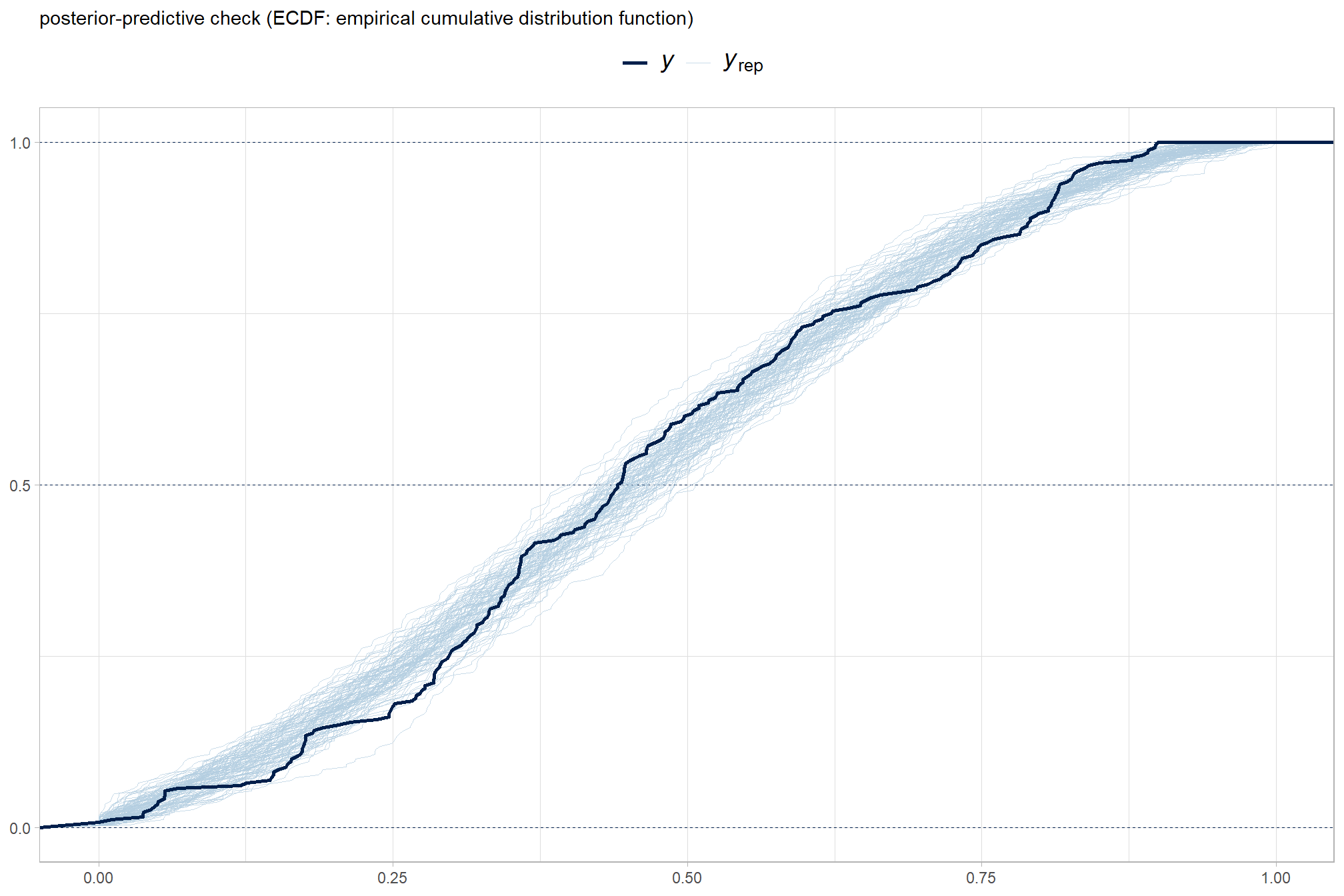

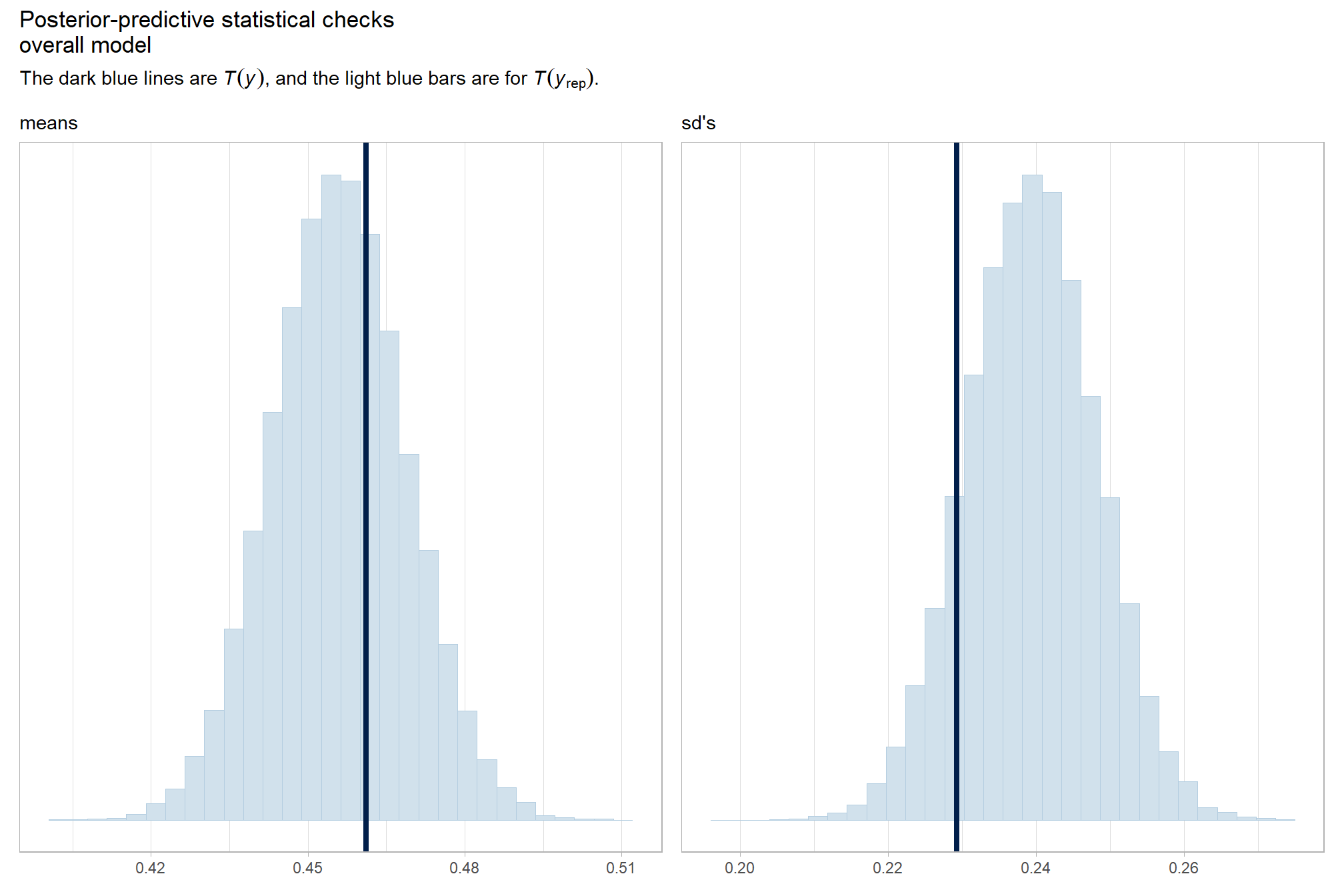

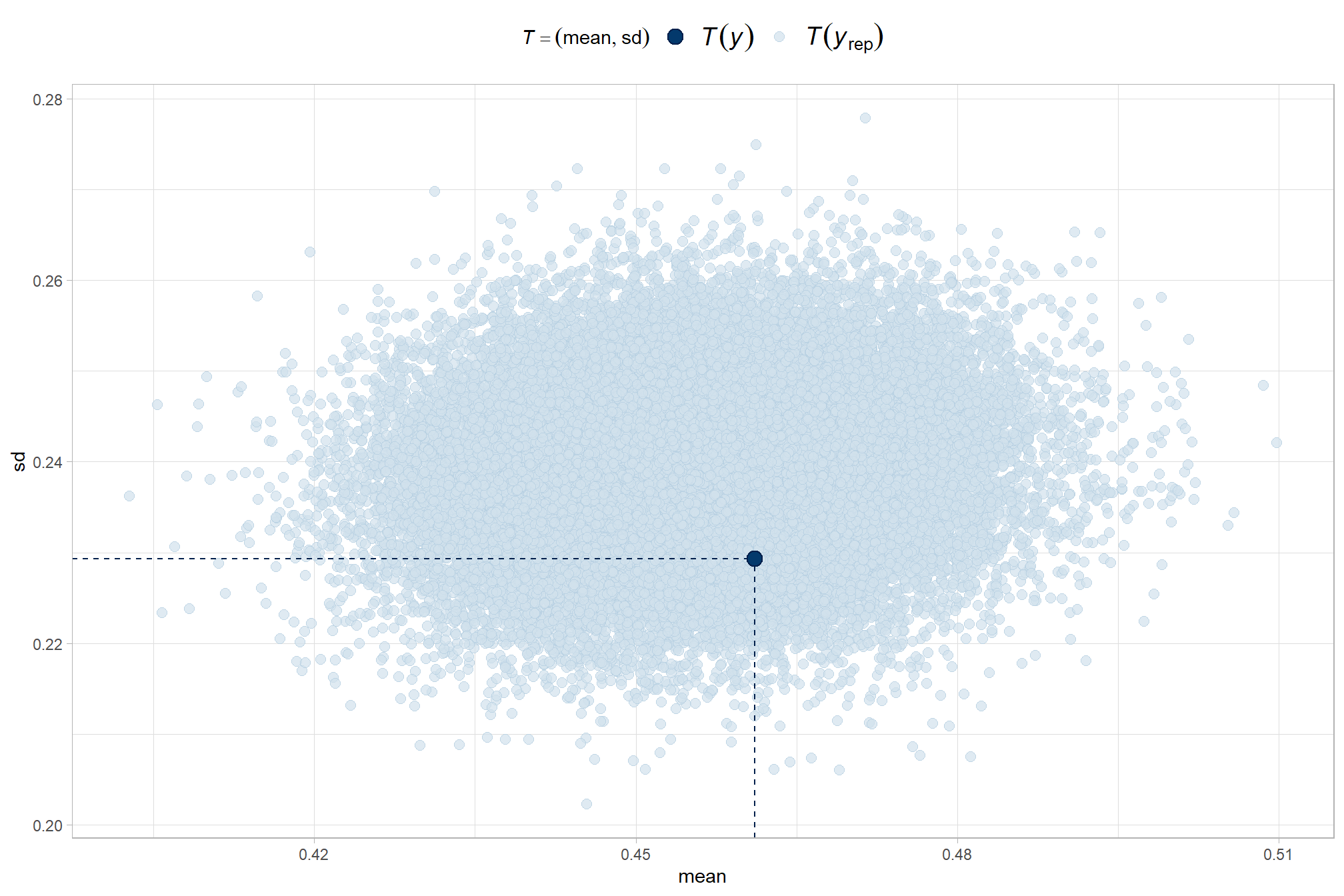

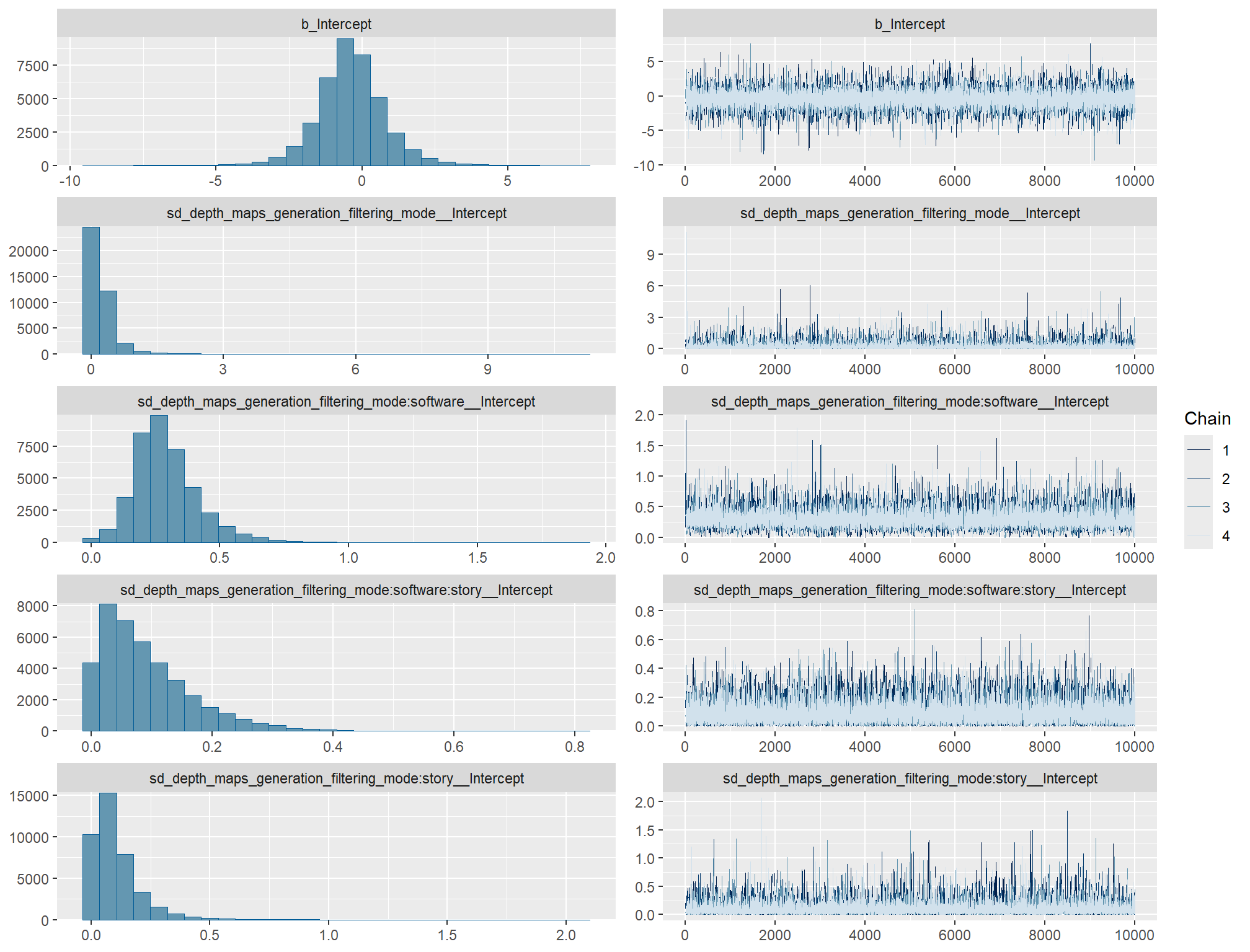

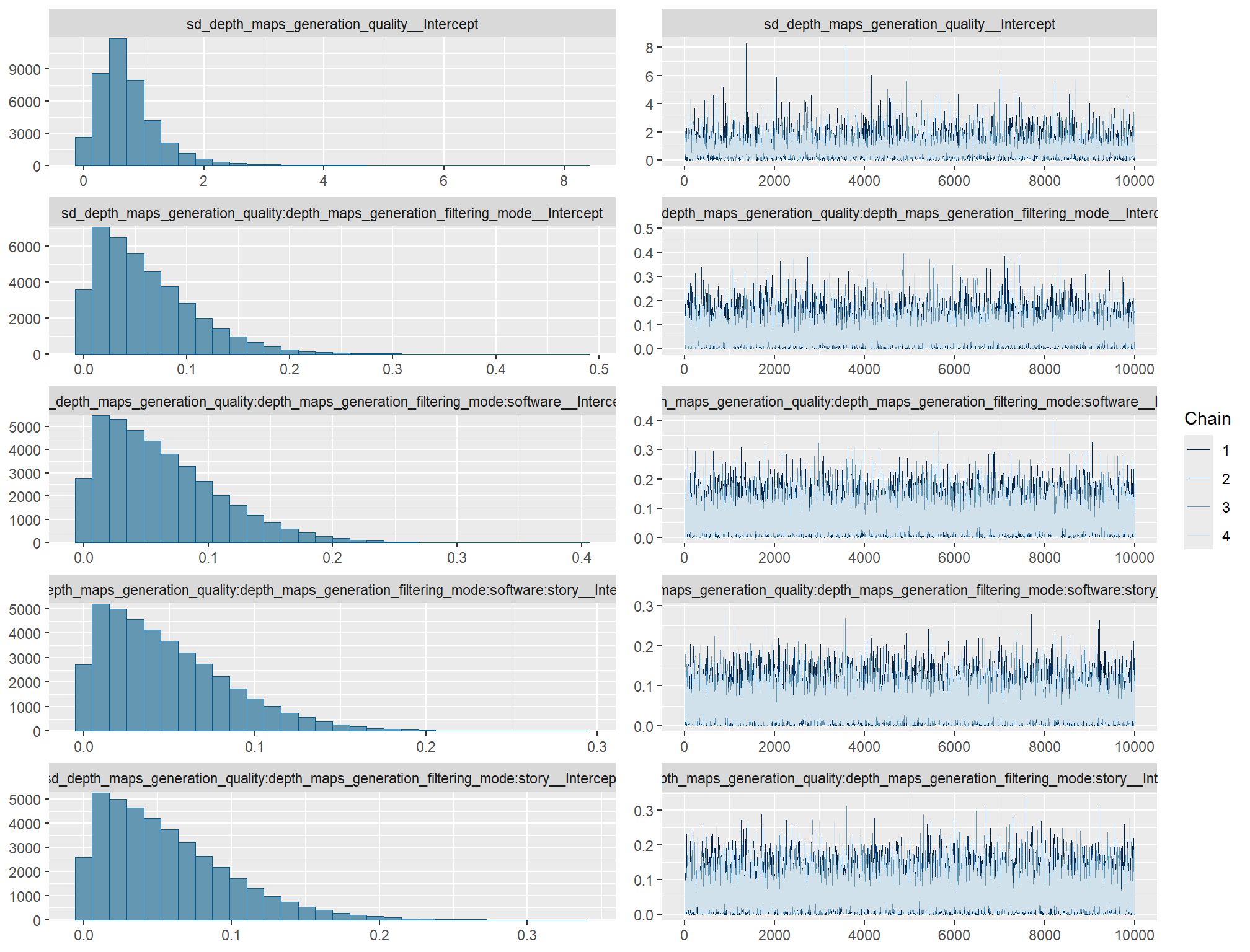

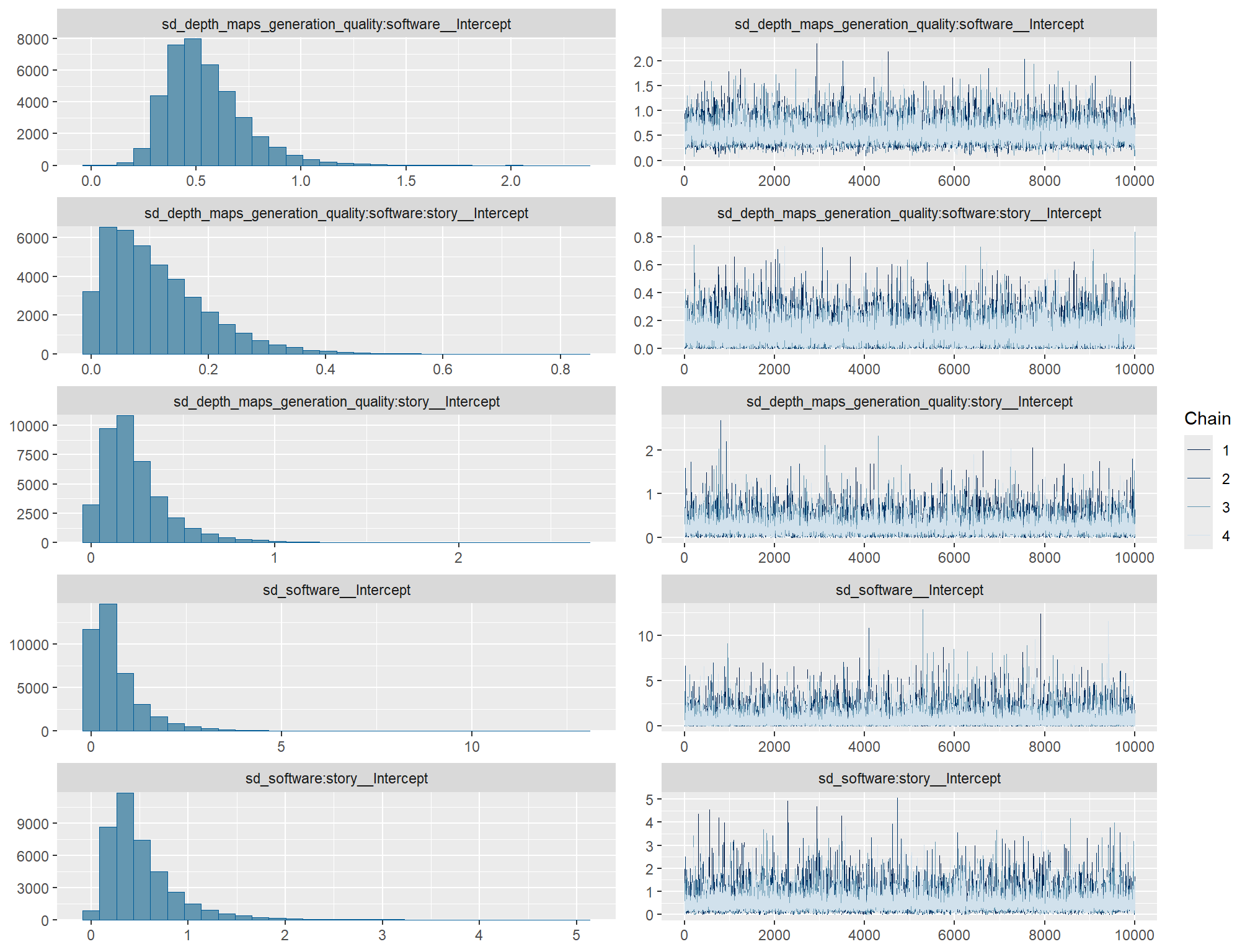

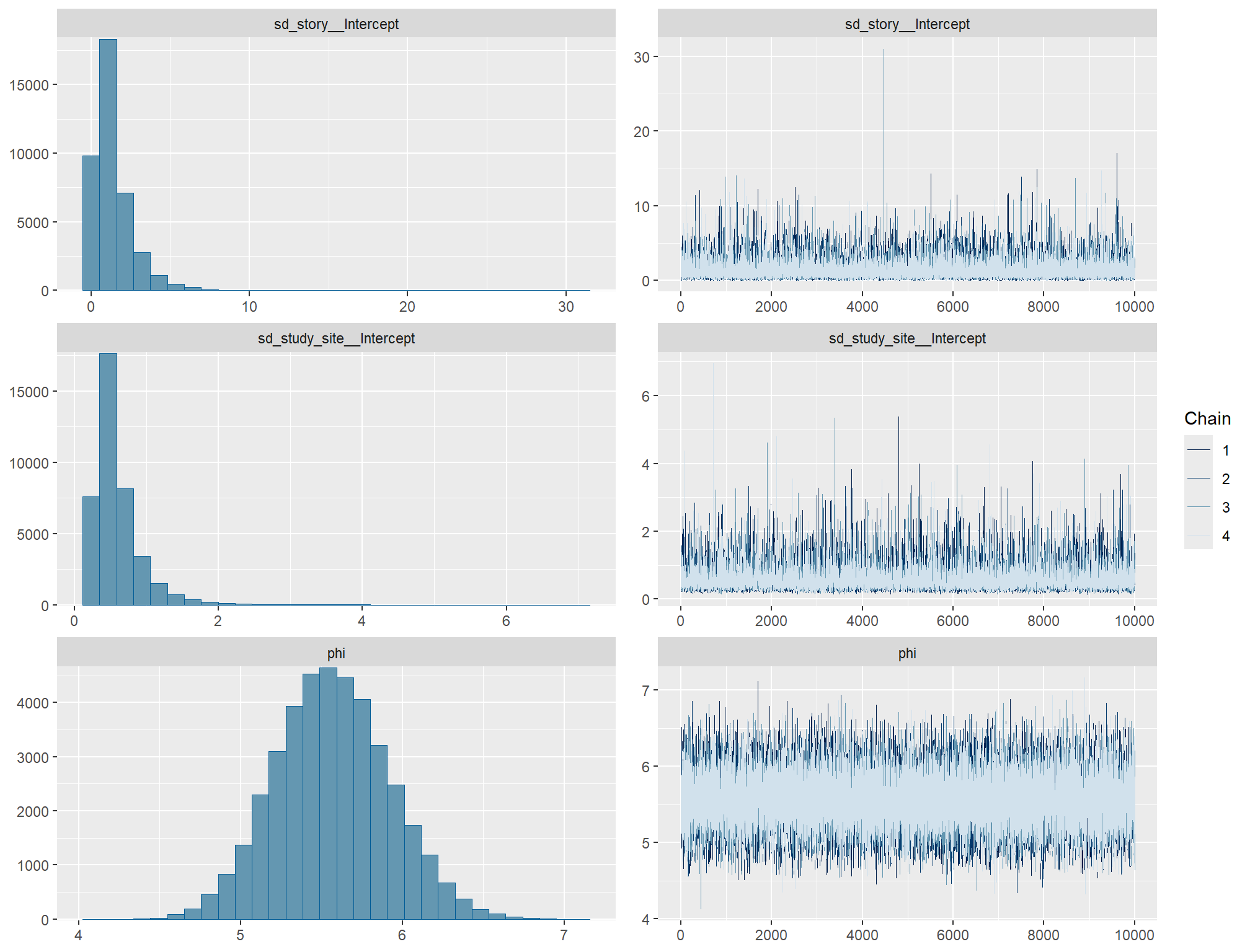

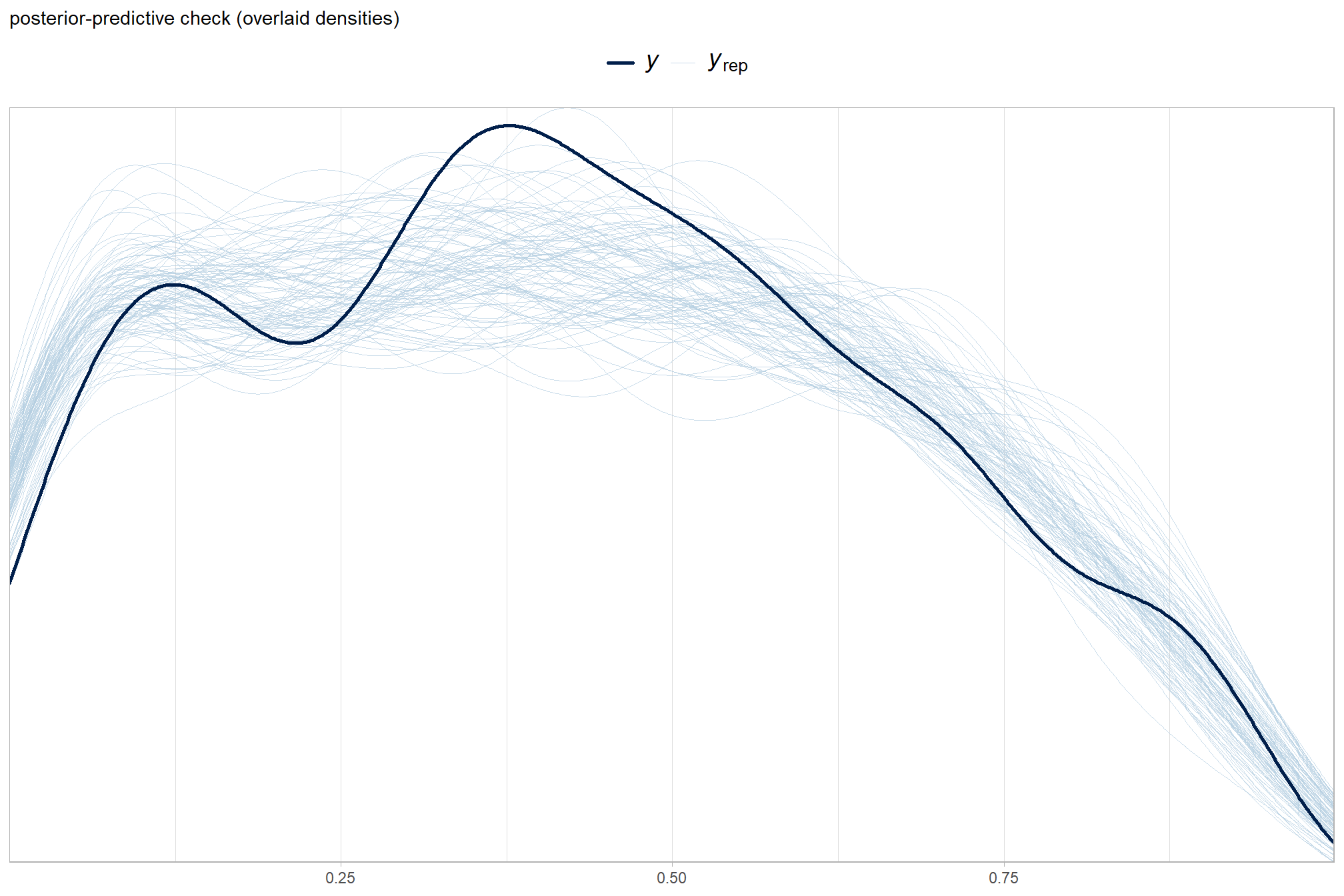

)check the trace plots for problems with convergence of the Markov chains

check the prior distributions

# check priors

brms::prior_summary(brms_f_mod2) %>%

kableExtra::kbl() %>%

kableExtra::kable_styling()| prior | class | coef | group | resp | dpar | nlpar | lb | ub | source |

|---|---|---|---|---|---|---|---|---|---|

| normal(mean_y, sd_y * 5) | Intercept | user | |||||||

| gamma(alpha, beta) | sd | 0 | user | ||||||

| sd | depth_maps_generation_filtering_mode | default | |||||||

| sd | Intercept | depth_maps_generation_filtering_mode | default | ||||||

| sd | depth_maps_generation_quality | default | |||||||

| sd | Intercept | depth_maps_generation_quality | default | ||||||

| sd | depth_maps_generation_quality:depth_maps_generation_filtering_mode | default | |||||||

| sd | Intercept | depth_maps_generation_quality:depth_maps_generation_filtering_mode | default | ||||||

| cauchy(0, sd_y) | sigma | 0 | user |

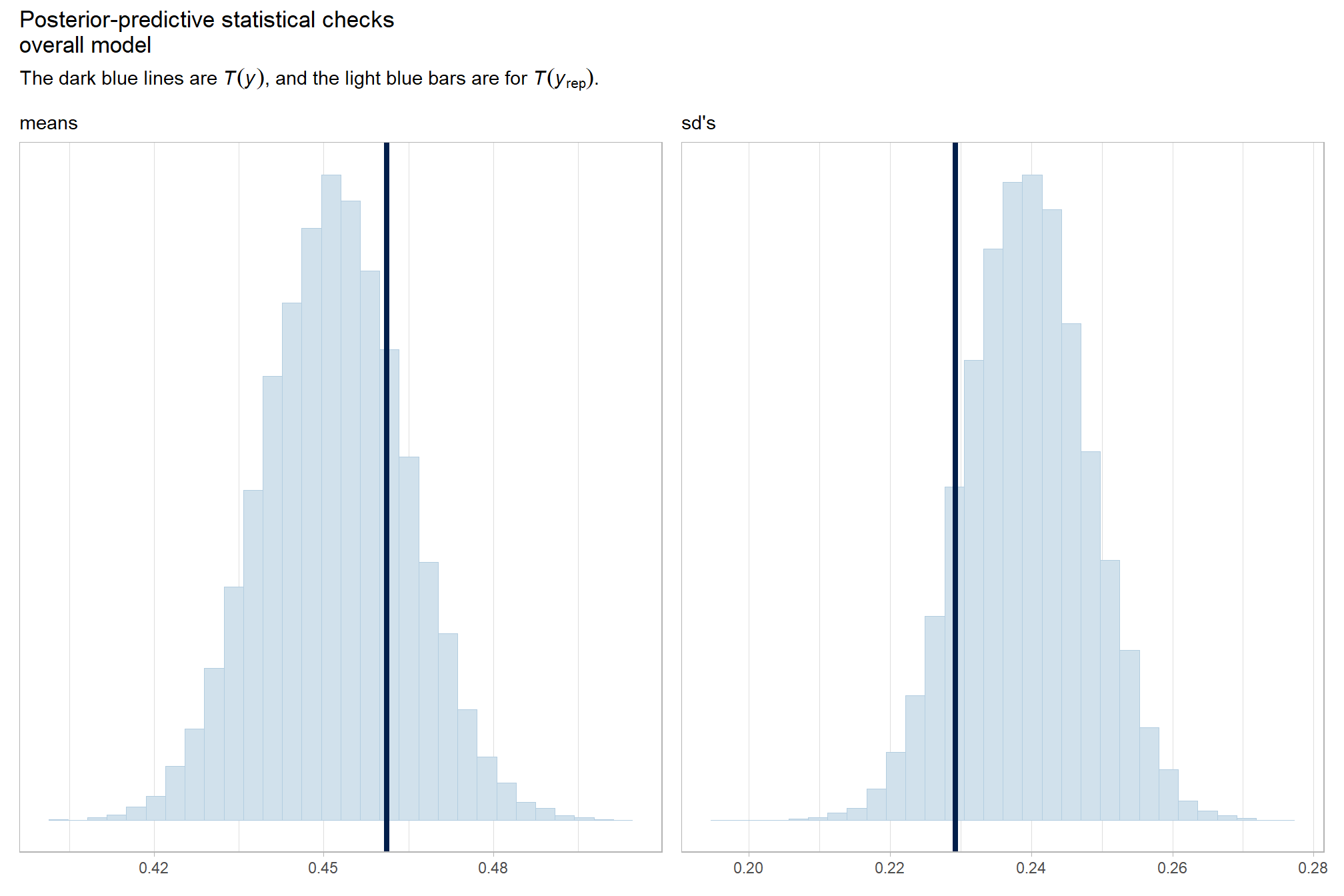

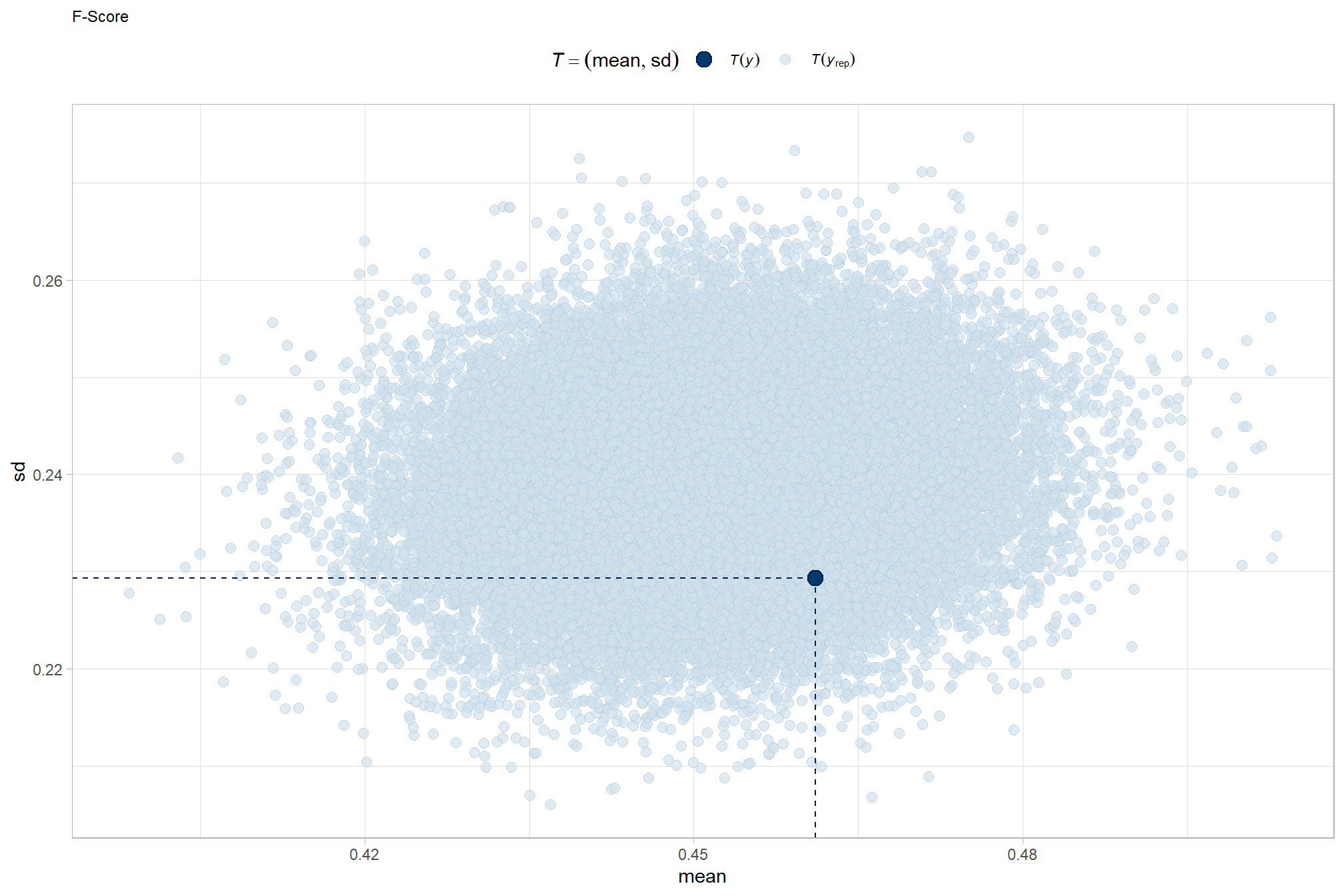

The brms::brm model summary

brms_f_mod2 %>%

brms::posterior_summary() %>%

as.data.frame() %>%

tibble::rownames_to_column(var = "parameter") %>%

dplyr::rename_with(tolower) %>%

dplyr::filter(

stringr::str_starts(parameter, "b_")

| stringr::str_starts(parameter, "r_")

| stringr::str_starts(parameter, "sd_")

| parameter == "sigma"

) %>%

dplyr::mutate(

parameter = parameter %>%

stringr::str_replace_all("depth_maps_generation_quality", "quality") %>%

stringr::str_replace_all("depth_maps_generation_filtering_mode", "filtering")

) %>%

kableExtra::kbl(digits = 2, caption = "Bayesian two nominal predictors: F-score by dense cloud quality and filtering mode") %>%

kableExtra::kable_styling() %>%

kableExtra::scroll_box(height = "6in")| parameter | estimate | est.error | q2.5 | q97.5 |

|---|---|---|---|---|

| b_Intercept | 0.45 | 0.09 | 0.27 | 0.63 |

| sd_filtering__Intercept | 0.05 | 0.05 | 0.00 | 0.19 |

| sd_quality__Intercept | 0.16 | 0.08 | 0.07 | 0.39 |

| sd_quality:filtering__Intercept | 0.02 | 0.01 | 0.00 | 0.05 |

| sigma | 0.21 | 0.01 | 0.19 | 0.23 |

| r_filtering[aggressive,Intercept] | -0.02 | 0.04 | -0.12 | 0.05 |

| r_filtering[moderate,Intercept] | 0.00 | 0.04 | -0.08 | 0.08 |

| r_filtering[mild,Intercept] | 0.02 | 0.04 | -0.05 | 0.11 |

| r_filtering[disabled,Intercept] | 0.01 | 0.04 | -0.06 | 0.10 |

| r_quality[ultra.high,Intercept] | 0.12 | 0.08 | -0.05 | 0.29 |

| r_quality[high,Intercept] | 0.08 | 0.08 | -0.09 | 0.25 |

| r_quality[medium,Intercept] | 0.01 | 0.08 | -0.15 | 0.18 |

| r_quality[low,Intercept] | -0.05 | 0.08 | -0.22 | 0.12 |

| r_quality[lowest,Intercept] | -0.17 | 0.08 | -0.34 | 0.00 |

| r_quality:filtering[high_aggressive,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[high_disabled,Intercept] | 0.00 | 0.02 | -0.05 | 0.05 |

| r_quality:filtering[high_mild,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[high_moderate,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[low_aggressive,Intercept] | -0.01 | 0.02 | -0.07 | 0.03 |

| r_quality:filtering[low_disabled,Intercept] | 0.00 | 0.02 | -0.04 | 0.06 |

| r_quality:filtering[low_mild,Intercept] | 0.01 | 0.02 | -0.04 | 0.06 |

| r_quality:filtering[low_moderate,Intercept] | 0.00 | 0.02 | -0.05 | 0.04 |

| r_quality:filtering[lowest_aggressive,Intercept] | 0.00 | 0.02 | -0.05 | 0.04 |

| r_quality:filtering[lowest_disabled,Intercept] | 0.00 | 0.02 | -0.05 | 0.05 |

| r_quality:filtering[lowest_mild,Intercept] | 0.00 | 0.02 | -0.05 | 0.05 |

| r_quality:filtering[lowest_moderate,Intercept] | 0.00 | 0.02 | -0.06 | 0.04 |

| r_quality:filtering[medium_aggressive,Intercept] | -0.01 | 0.02 | -0.06 | 0.04 |

| r_quality:filtering[medium_disabled,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[medium_mild,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[medium_moderate,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

| r_quality:filtering[ultra.high_aggressive,Intercept] | 0.00 | 0.02 | -0.04 | 0.06 |

| r_quality:filtering[ultra.high_disabled,Intercept] | 0.00 | 0.02 | -0.05 | 0.04 |

| r_quality:filtering[ultra.high_mild,Intercept] | 0.00 | 0.02 | -0.05 | 0.05 |

| r_quality:filtering[ultra.high_moderate,Intercept] | 0.00 | 0.02 | -0.04 | 0.05 |

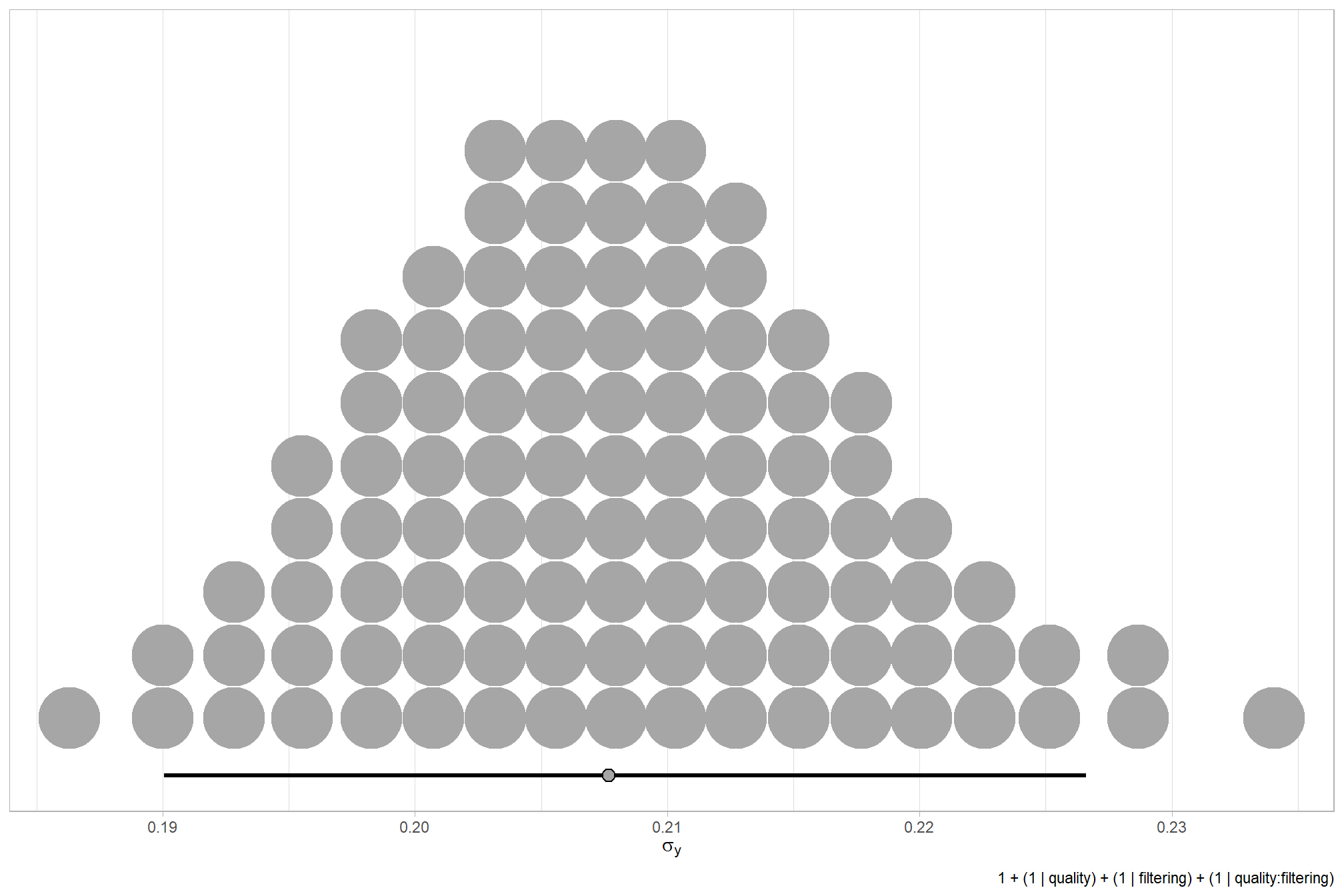

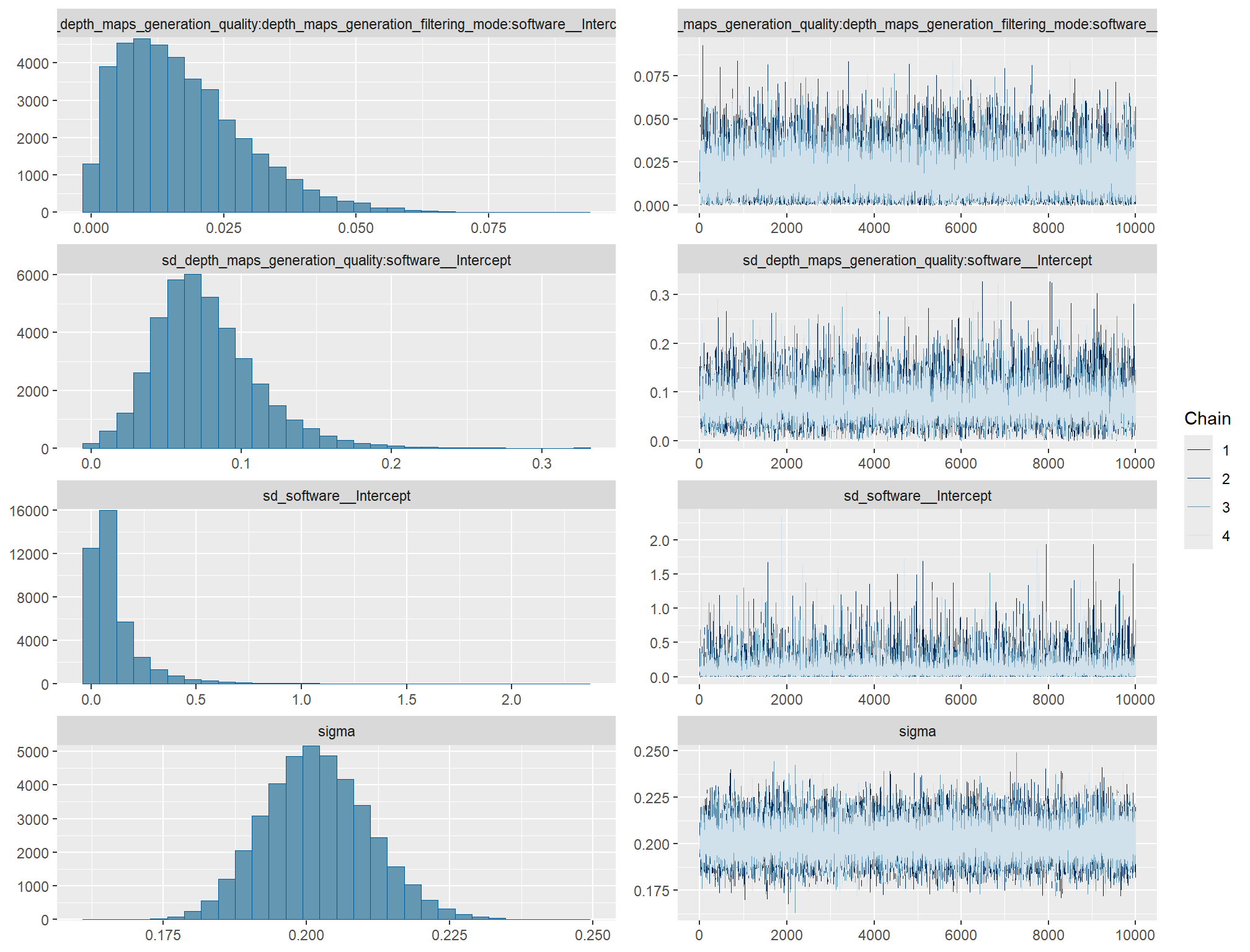

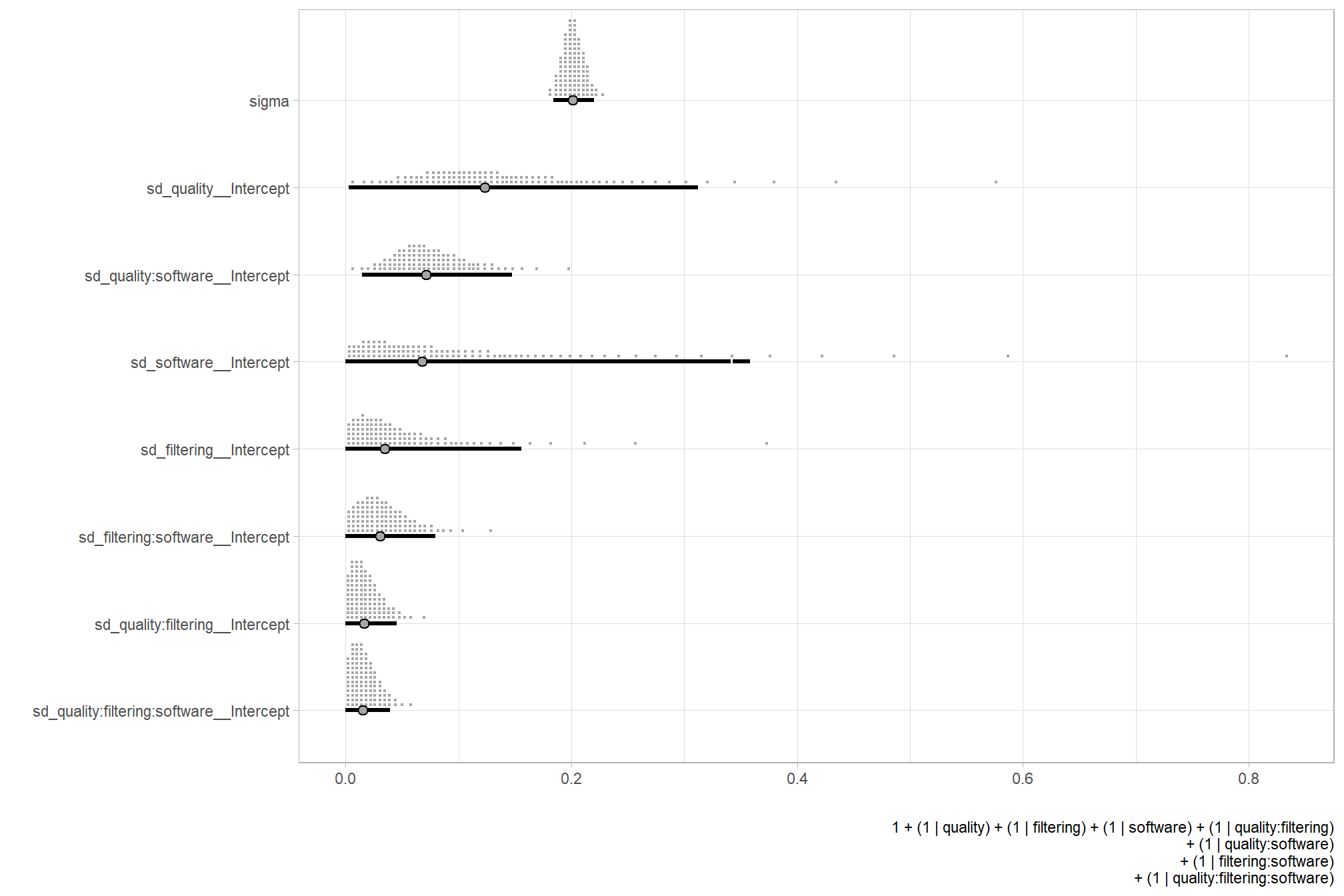

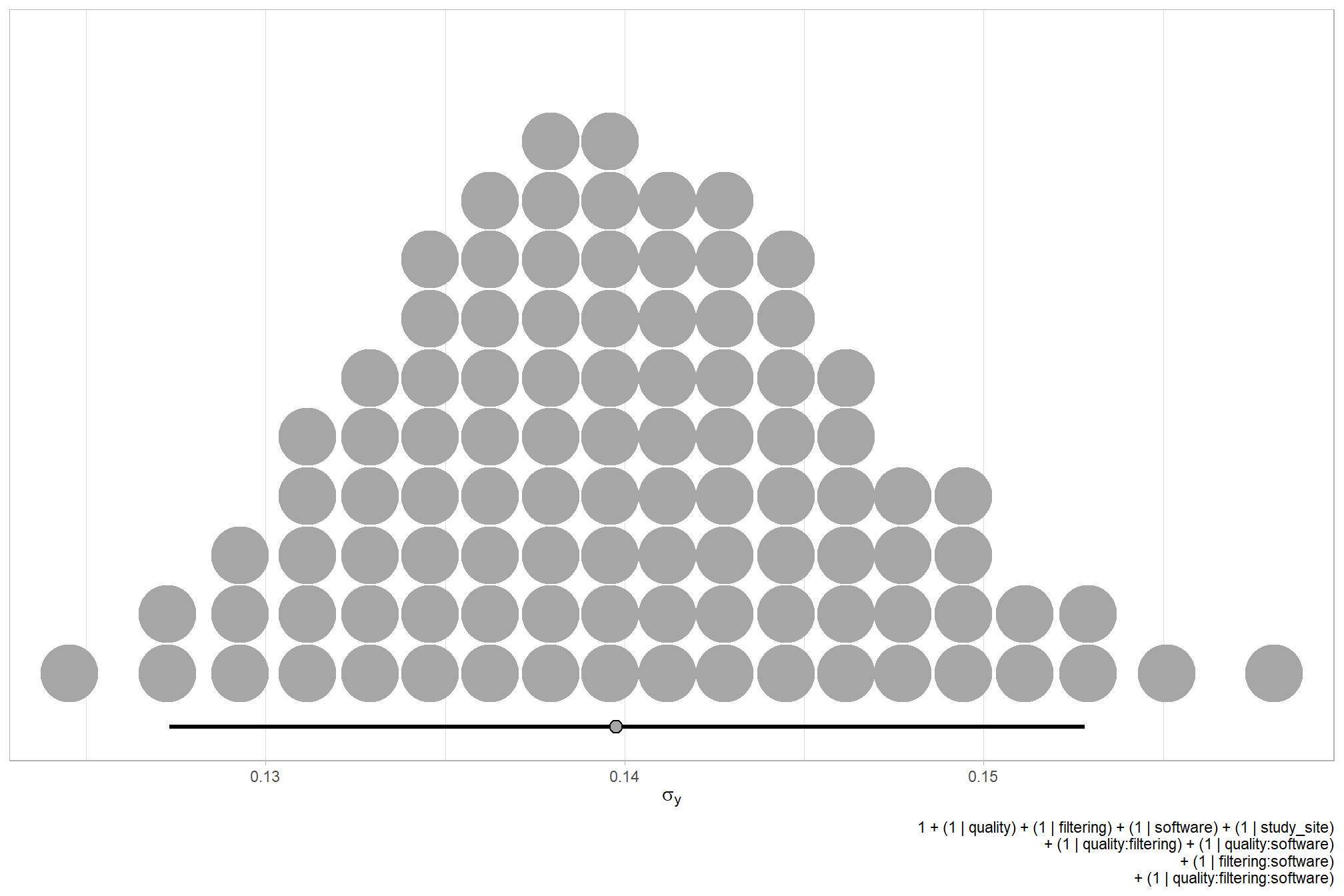

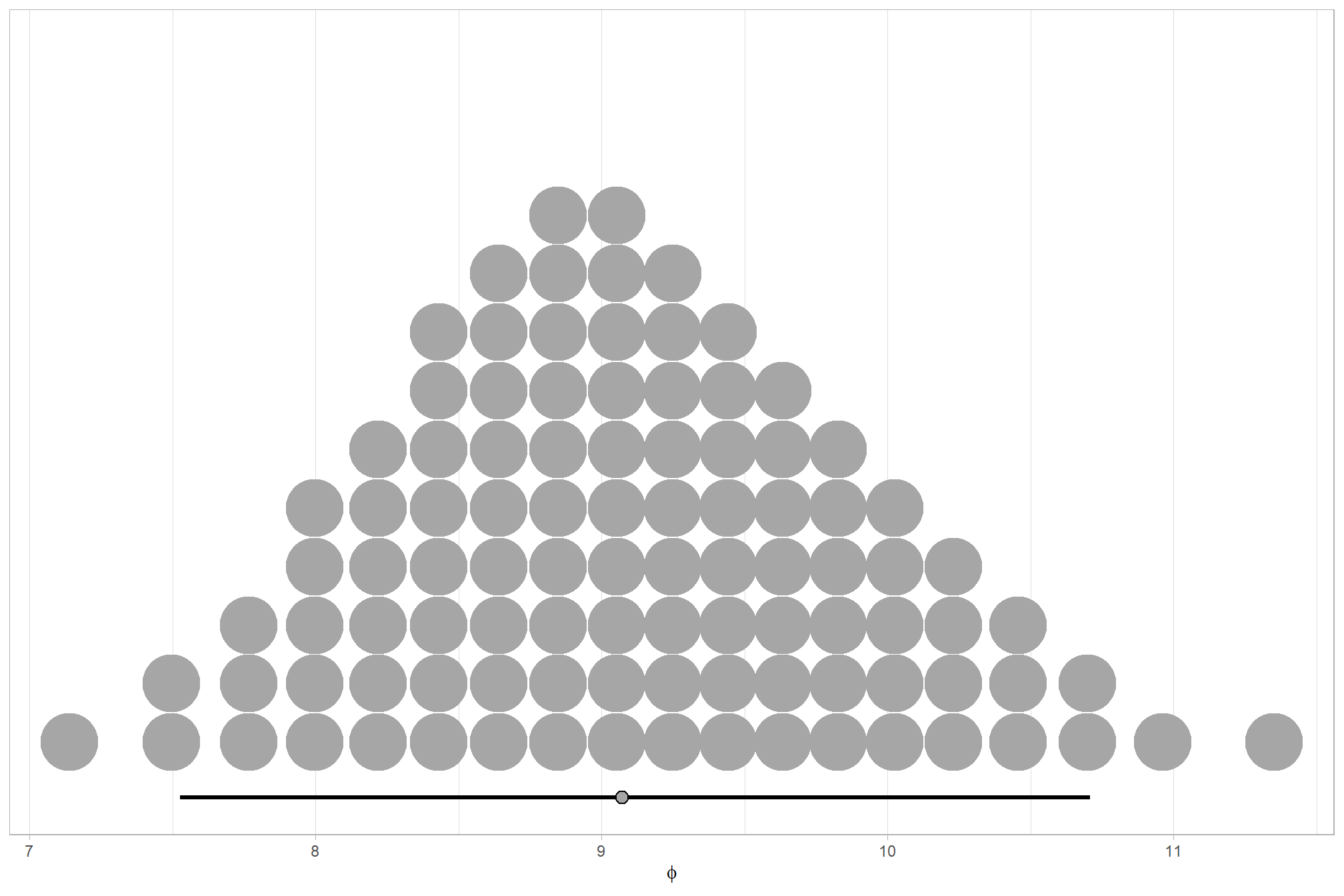

We can look at the model noise standard deviation \(\sigma_y\)

# get formula

form_temp = brms_f_mod2$formula$formula[3] %>%

as.character() %>% get_frmla_text() %>%

stringr::str_replace_all("depth_maps_generation_quality", "quality") %>%

stringr::str_replace_all("depth_maps_generation_filtering_mode", "filtering")

# extract the posterior draws

brms::as_draws_df(brms_f_mod2) %>%

# plot

ggplot(aes(x = sigma, y = 0)) +

tidybayes::stat_dotsinterval(

point_interval = median_hdi, .width = .95

, justification = -0.04

, shape = 21, point_size = 3

, quantiles = 100

) +

scale_y_continuous(NULL, breaks = NULL) +

labs(

x = latex2exp::TeX("$\\sigma_y$")

, caption = form_temp

) +

theme_light()

how is it compared to the first model?

# how is it compared to the first model

dplyr::bind_rows(

brms::as_draws_df(brms_f_mod1) %>% tidybayes::median_hdi(sigma) %>% dplyr::mutate(model = "one nominal predictor")

, brms::as_draws_df(brms_f_mod2) %>% tidybayes::median_hdi(sigma) %>% dplyr::mutate(model = "two nominal predictor")

) %>%

dplyr::relocate(model) %>%

kableExtra::kbl(digits = 2, caption = "brms::brm model noise standard deviation comparison") %>%

kableExtra::kable_styling()| model | sigma | .lower | .upper | .width | .point | .interval |

|---|---|---|---|---|---|---|

| one nominal predictor | 0.21 | 0.19 | 0.23 | 0.95 | median | hdi |

| two nominal predictor | 0.21 | 0.19 | 0.23 | 0.95 | median | hdi |

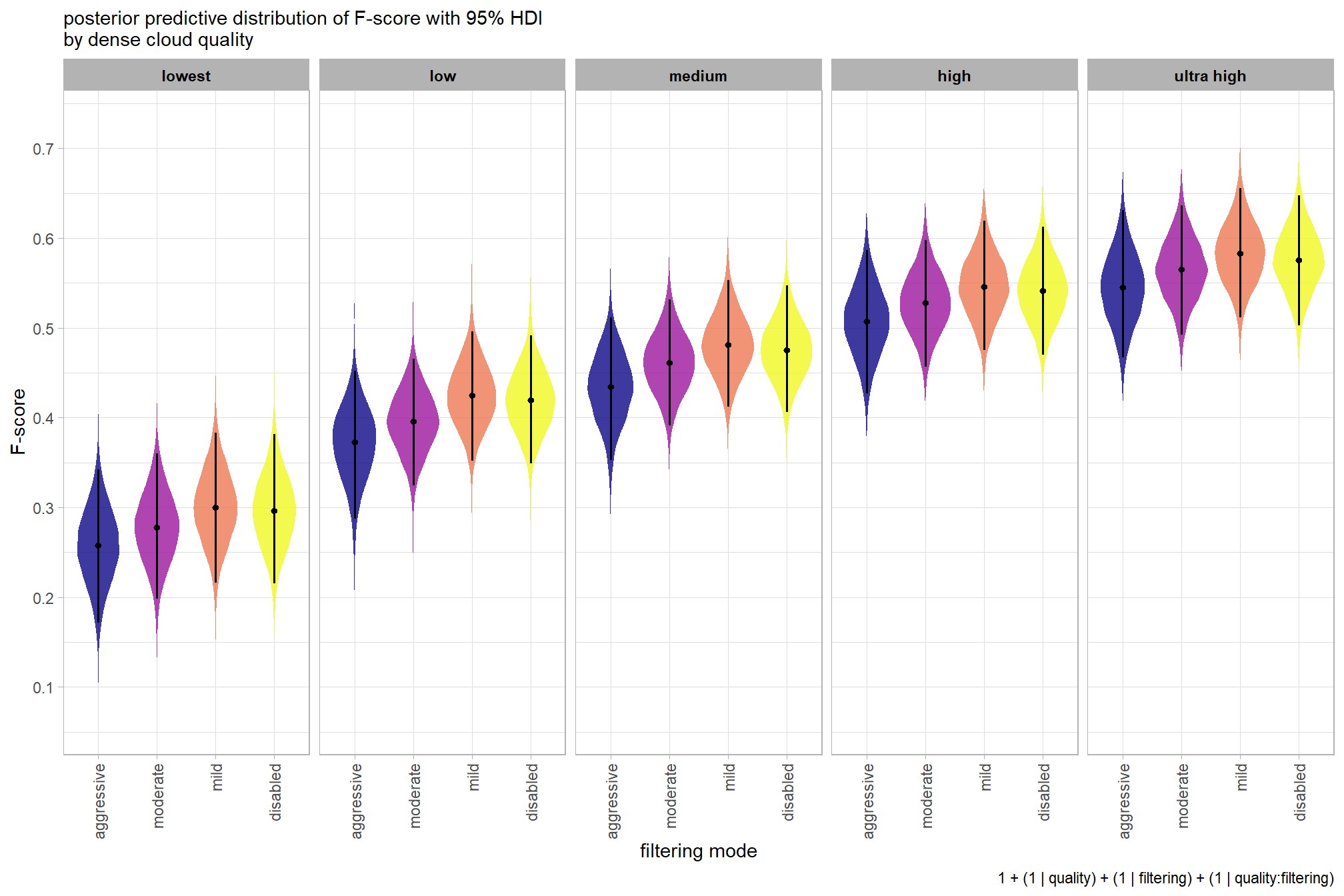

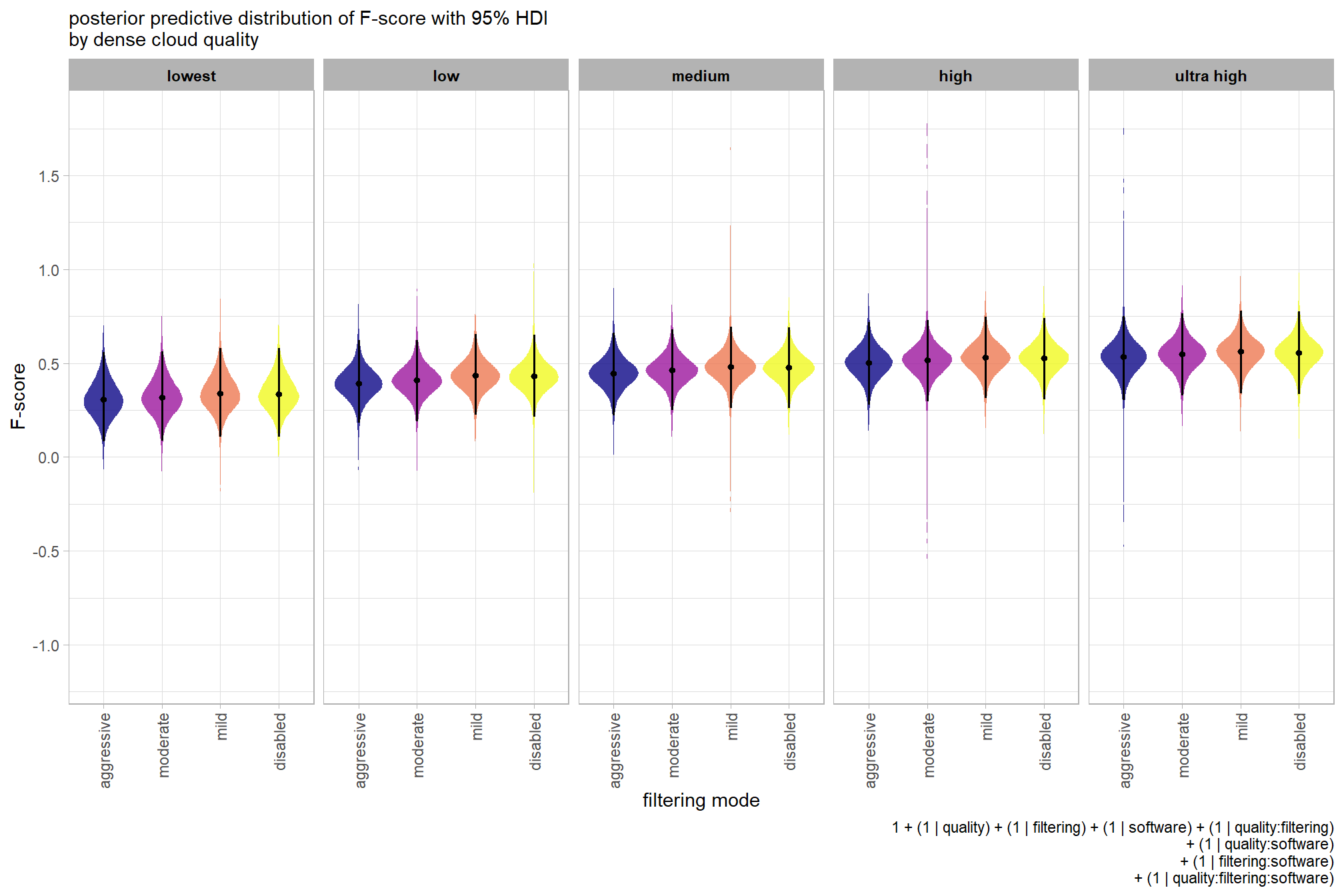

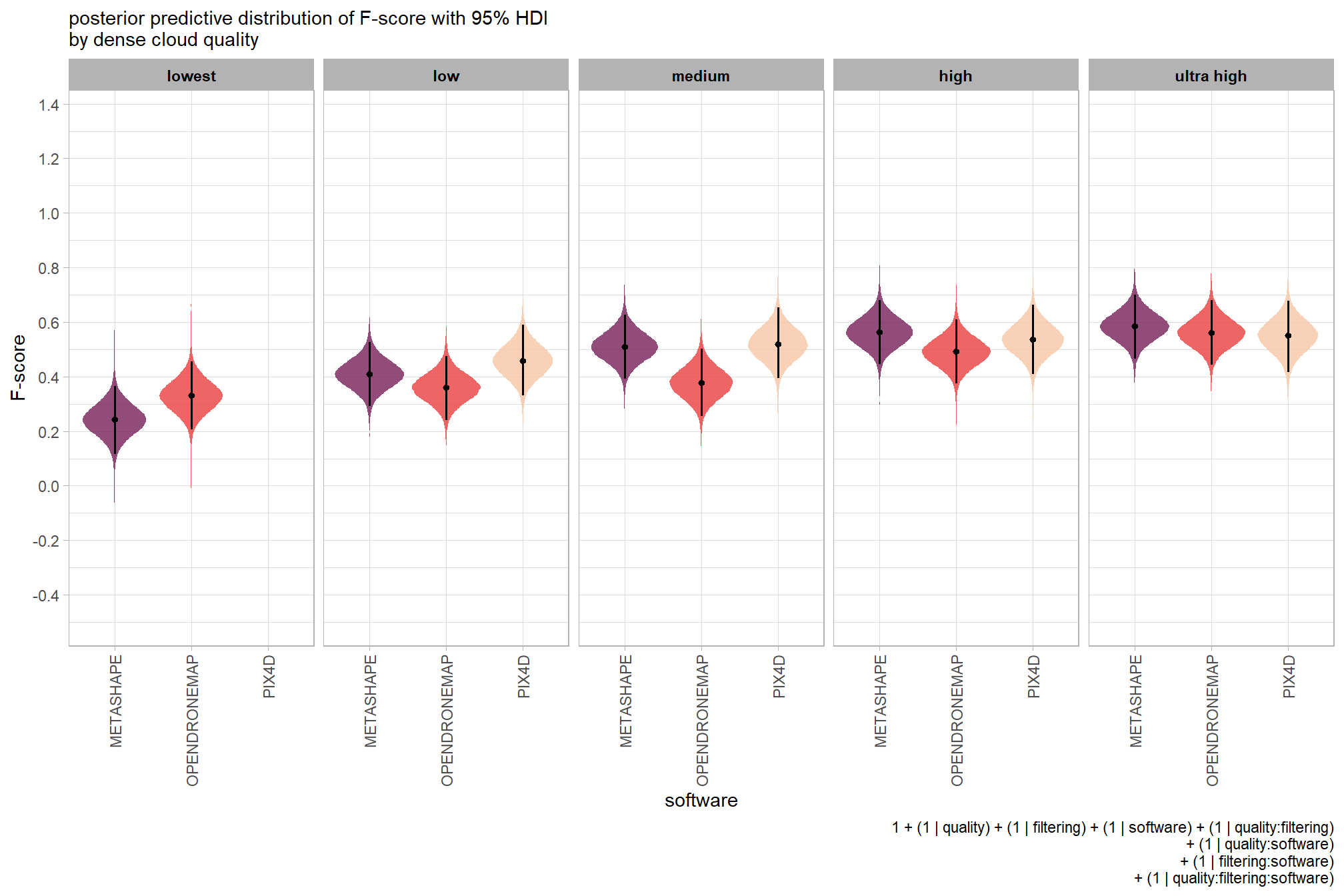

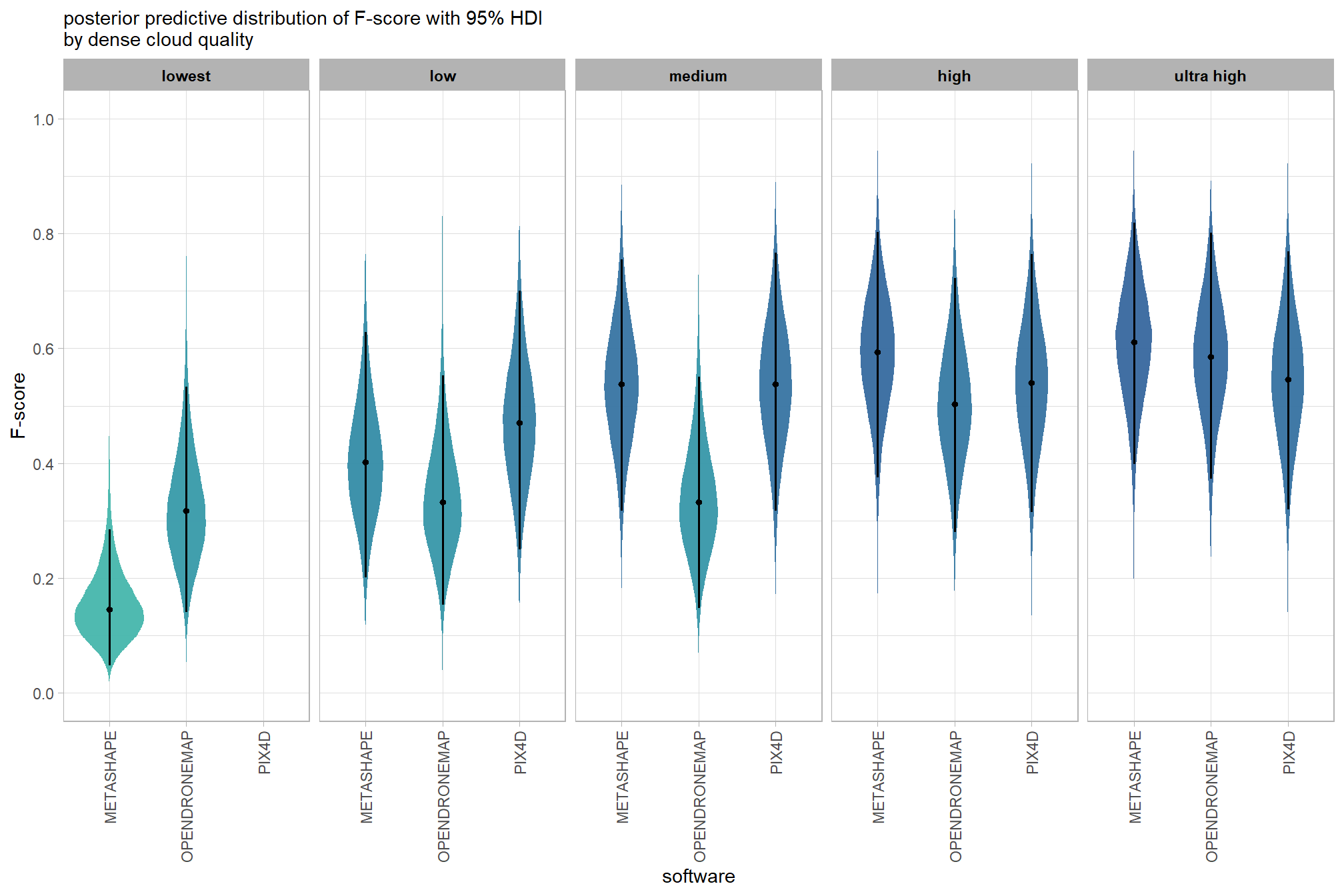

plot the posterior predictive distributions of the conditional means with the median F-score and the 95% highest posterior density interval (HDI)

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality, depth_maps_generation_filtering_mode) %>%

tidybayes::add_epred_draws(brms_f_mod2, allow_new_levels = T) %>%

dplyr::rename(value = .epred) %>%

dplyr::mutate(depth_maps_generation_quality = depth_maps_generation_quality %>% forcats::fct_rev()) %>%

# plot

ggplot(

mapping = aes(

y = value, x = depth_maps_generation_filtering_mode

, fill = depth_maps_generation_filtering_mode

)

) +

tidybayes::stat_eye(

point_interval = median_hdi, .width = .95

, slab_alpha = 0.8

, interval_color = "black", linewidth = 1

, point_color = "black", point_fill = "black", point_size = 1

) +

scale_fill_viridis_d(option = "plasma", drop = F) +

scale_y_continuous(breaks = scales::extended_breaks(n=10)) +

facet_grid(cols = vars(depth_maps_generation_quality)) +

labs(

x = "filtering mode", y = "F-score"

, subtitle = "posterior predictive distribution of F-score with 95% HDI\nby dense cloud quality"

, fill = "Filtering Mode"

, caption = form_temp

) +

theme_light() +

theme(

legend.position = "none"

, legend.direction = "horizontal"

, axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1)

, strip.text = element_text(color = "black", face = "bold")

)

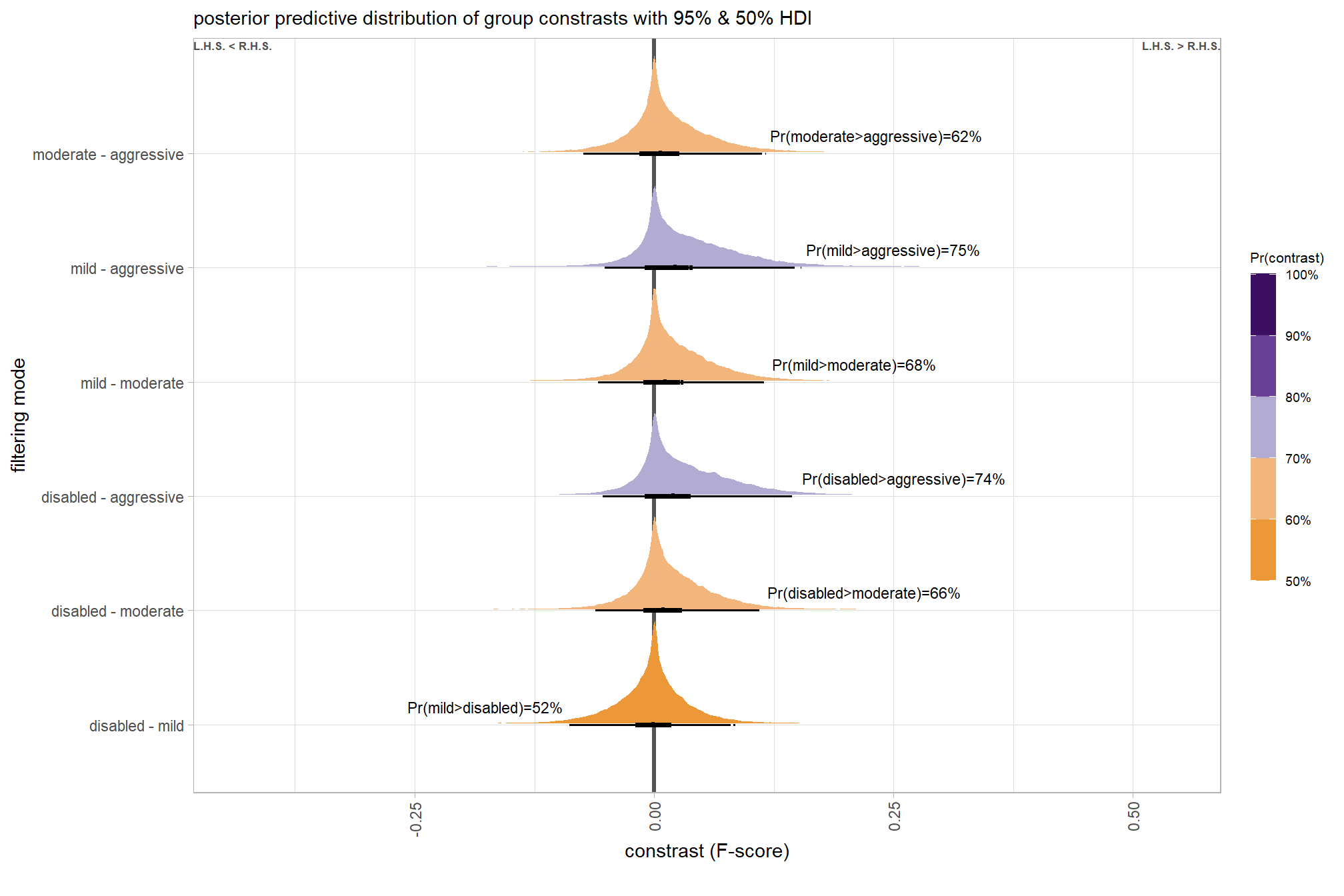

we can also make pairwise comparisons

brms_contrast_temp =

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality, depth_maps_generation_filtering_mode) %>%

tidybayes::add_epred_draws(brms_f_mod2, allow_new_levels = T) %>%

dplyr::rename(value = .epred) %>%

tidybayes::compare_levels(

value

, by = depth_maps_generation_quality

, comparison =

contrast_list

# tidybayes::emmeans_comparison("revpairwise")

#"pairwise"

) %>%

dplyr::rename(contrast = depth_maps_generation_quality)

# separate contrast

brms_contrast_temp = brms_contrast_temp %>%

dplyr::ungroup() %>%

tidyr::separate_wider_delim(

cols = contrast

, delim = " - "

, names = paste0(

"sorter"

, 1:(max(stringr::str_count(brms_contrast_temp$contrast, "-"))+1)

)

, too_few = "align_start"

, cols_remove = F

) %>%

dplyr::filter(sorter1!=sorter2) %>%

dplyr::mutate(

dplyr::across(

tidyselect::starts_with("sorter")

, .fns = function(x){factor(

x, ordered = T

, levels = levels(ptcld_validation_data$depth_maps_generation_quality)

)}

)

, contrast = contrast %>%

forcats::fct_reorder(

paste0(as.numeric(sorter1), as.numeric(sorter2)) %>%

as.numeric()

)

, depth_maps_generation_filtering_mode = depth_maps_generation_filtering_mode %>%

factor(

levels = levels(ptcld_validation_data$depth_maps_generation_filtering_mode)

, ordered = T

)

) %>%

# median_hdi summary for coloring

dplyr::group_by(contrast, depth_maps_generation_filtering_mode) %>%

make_contrast_vars()

# what?

brms_contrast_temp %>% dplyr::glimpse()## Rows: 480,000

## Columns: 19

## Groups: contrast, depth_maps_generation_filtering_mode [40]

## $ depth_maps_generation_filtering_mode <ord> aggressive, aggressive, aggressiv…

## $ .chain <int> NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ .iteration <int> NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11…

## $ sorter1 <ord> ultra high, ultra high, ultra hig…

## $ sorter2 <ord> high, high, high, high, high, hig…

## $ contrast <fct> ultra high - high, ultra high - h…

## $ value <dbl> 0.078887252, 0.064029088, 0.02146…

## $ median_hdi_est <dbl> 0.03770622, 0.03770622, 0.0377062…

## $ median_hdi_lower <dbl> -0.05514285, -0.05514285, -0.0551…

## $ median_hdi_upper <dbl> 0.135536, 0.135536, 0.135536, 0.1…

## $ pr_gt_zero <chr> "79%", "79%", "79%", "79%", "79%"…

## $ pr_lt_zero <chr> "21%", "21%", "21%", "21%", "21%"…

## $ is_diff_dir <lgl> TRUE, TRUE, TRUE, TRUE, TRUE, FAL…

## $ pr_diff <dbl> 0.7908333, 0.7908333, 0.7908333, …

## $ pr_diff_lab <chr> "Pr(ultra high>high)=79%", "Pr(ul…

## $ pr_diff_lab_sm <chr> "Pr(>0)=79%", "Pr(>0)=79%", "Pr(>…

## $ pr_diff_lab_pos <dbl> 0.1457012, 0.1457012, 0.1457012, …

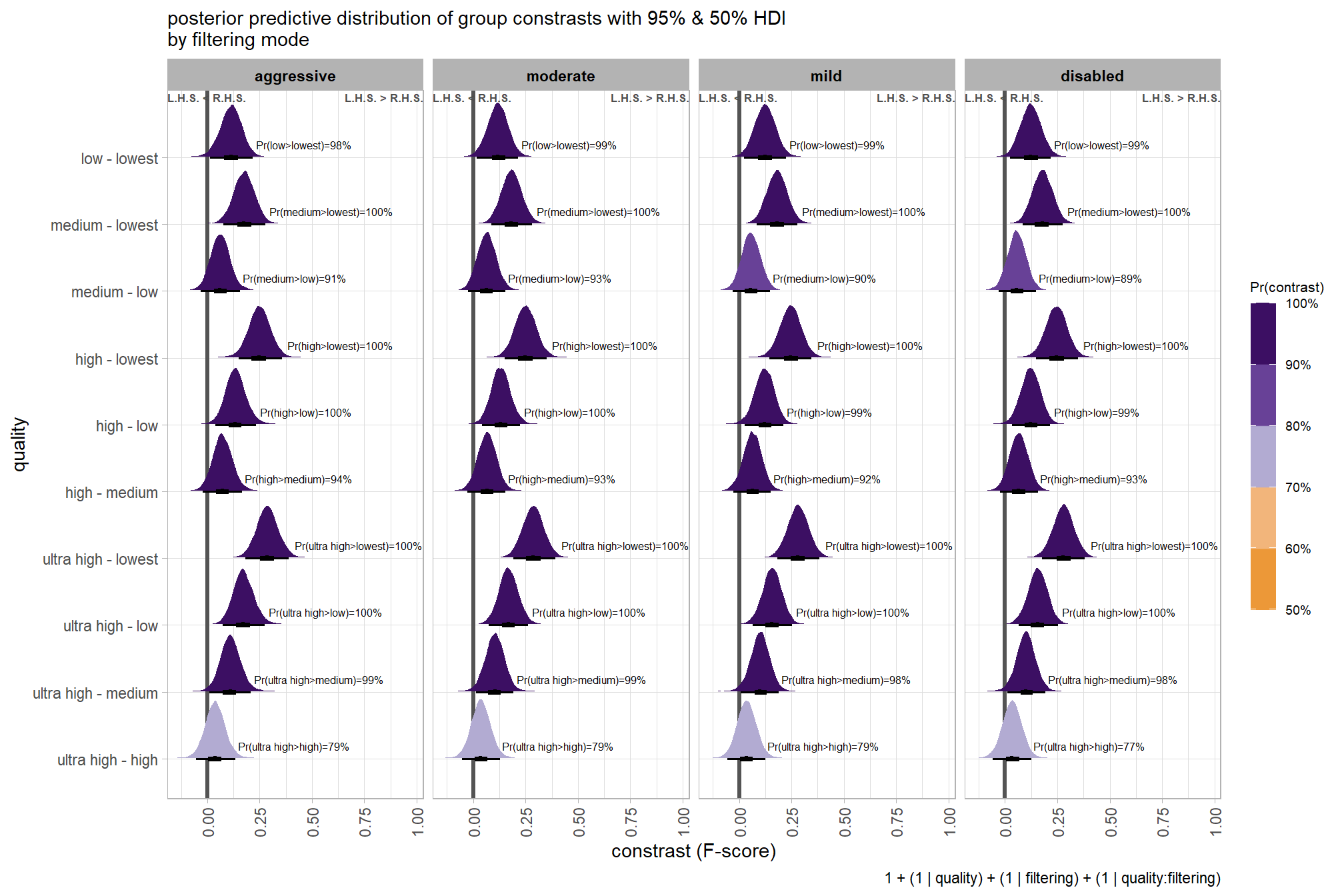

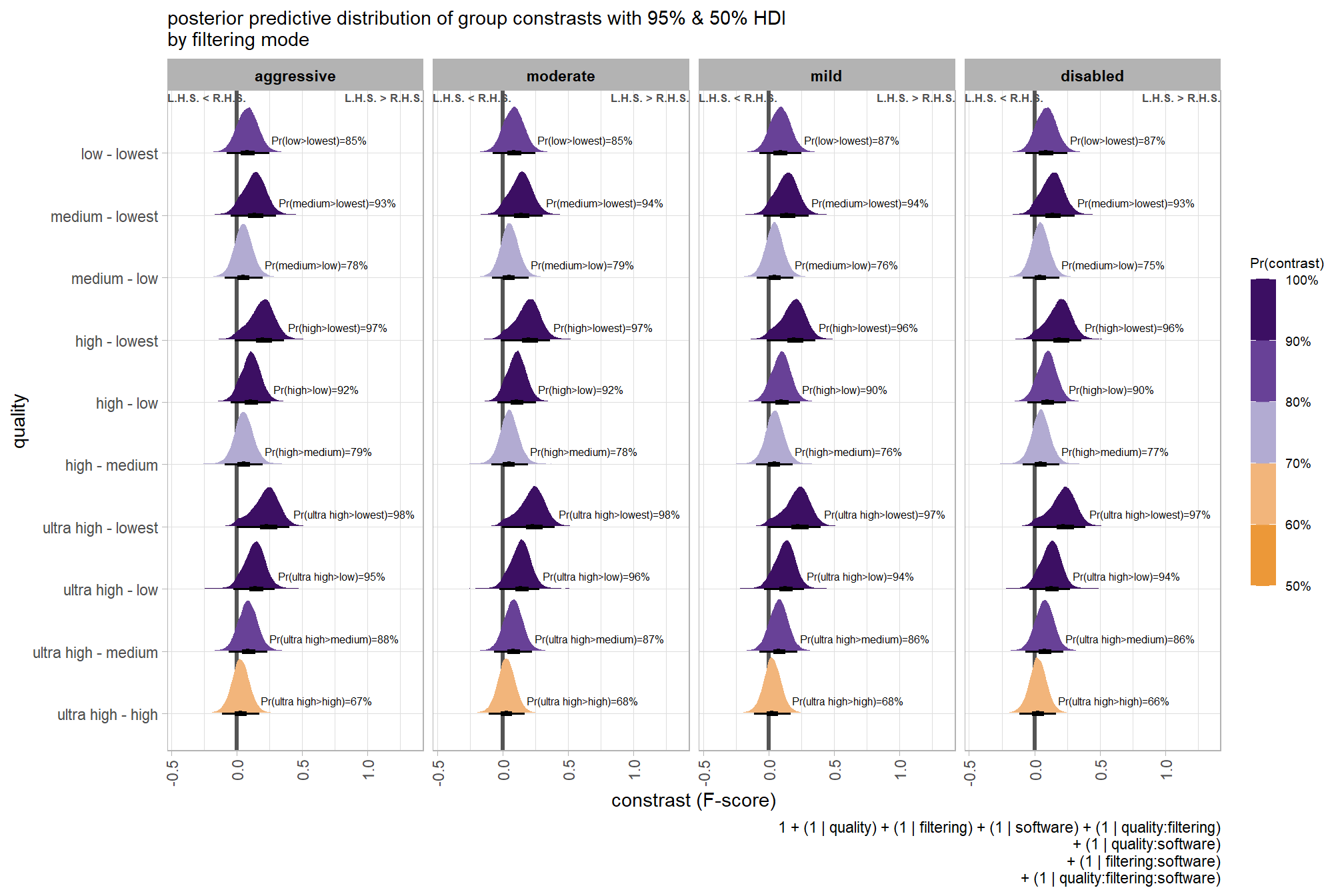

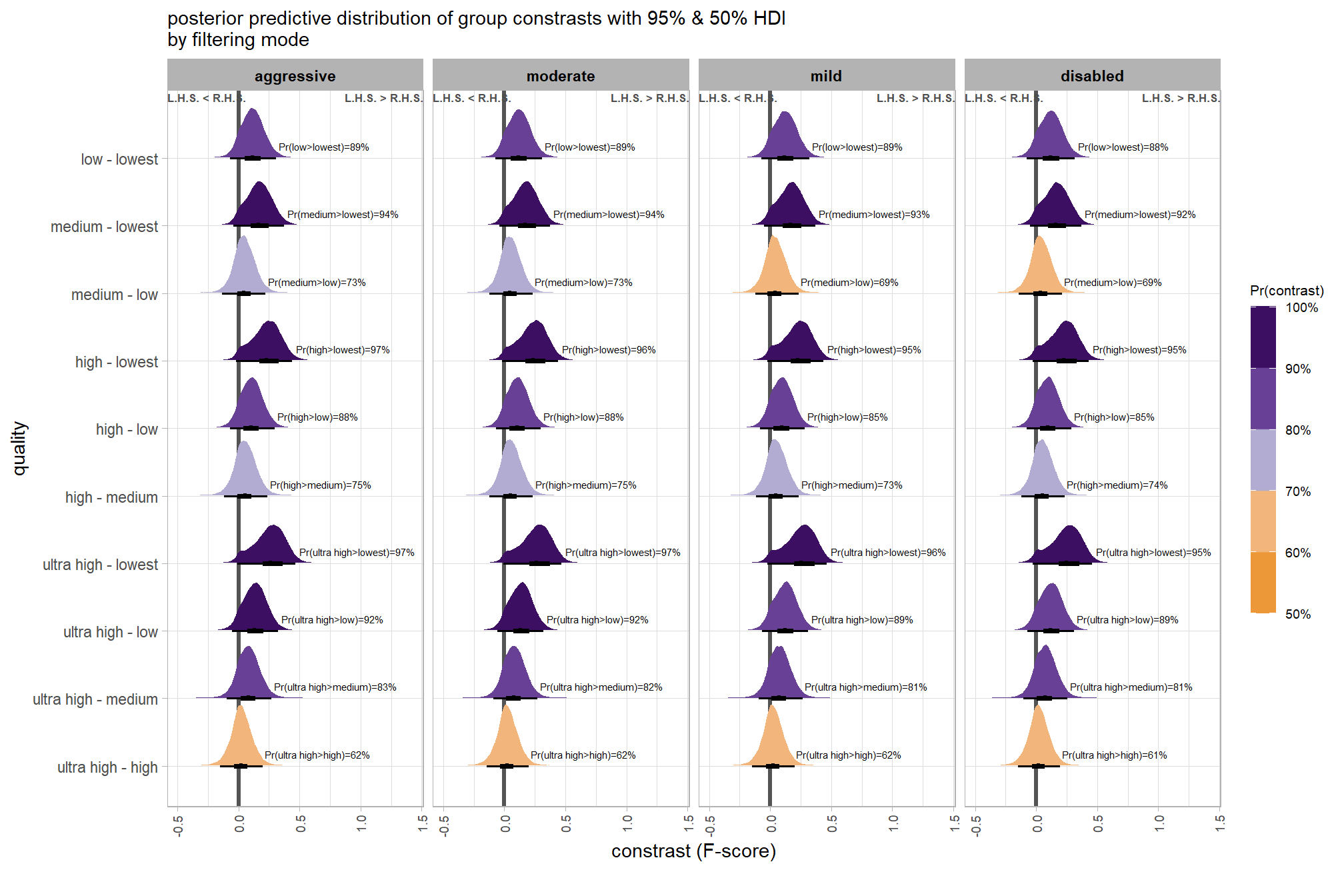

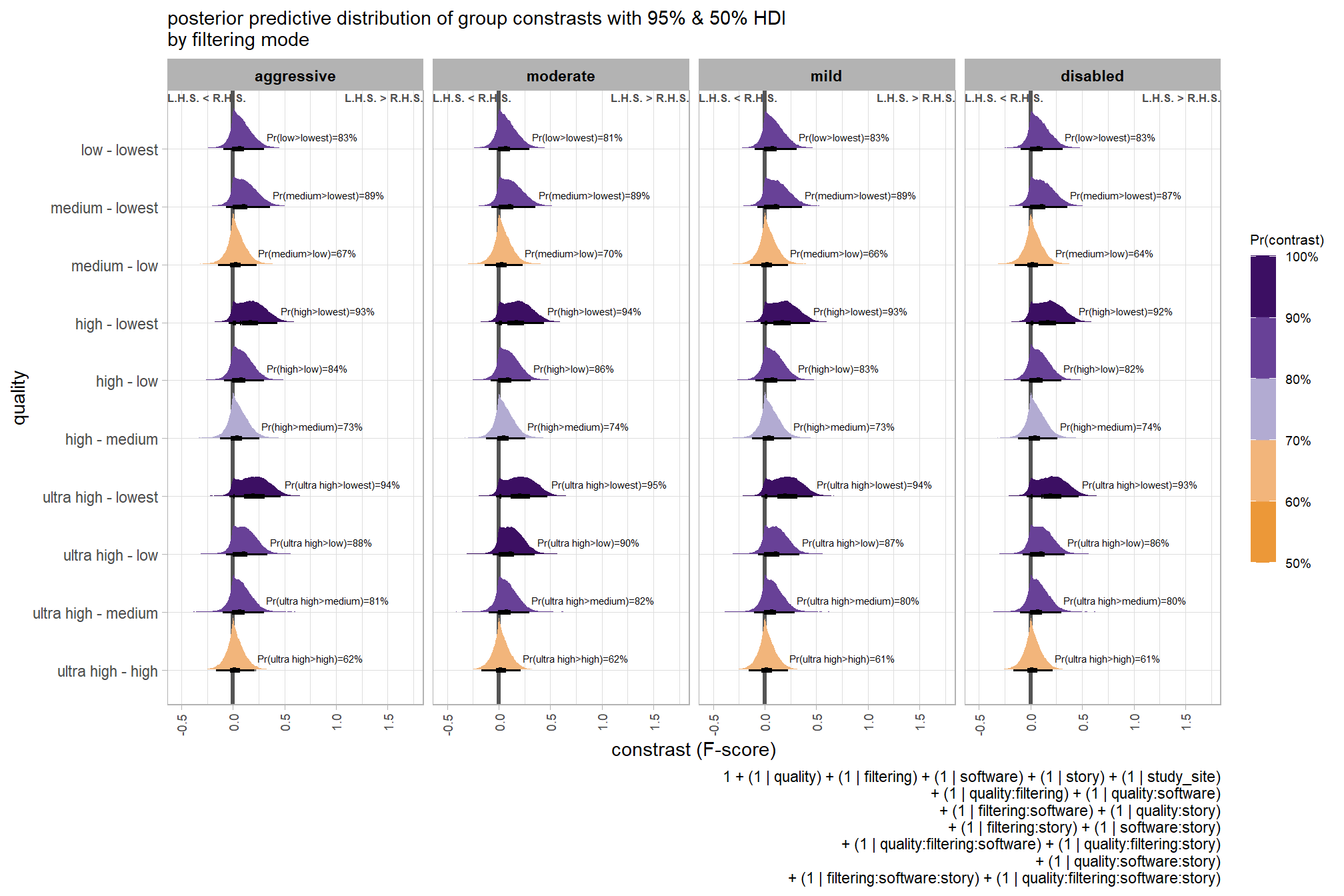

## $ sig_level <ord> <80%, <80%, <80%, <80%, <80%, <80…plot it

plt_contrast(

brms_contrast_temp, caption_text = form_temp

, y_axis_title = "quality"

, facet = "depth_maps_generation_filtering_mode"

, label_size = 2.1

, x_expand = c(0,0.65)

) +

labs(

subtitle = "posterior predictive distribution of group constrasts with 95% & 50% HDI\nby filtering mode"

)

and summarize these contrasts

brms_contrast_temp %>%

dplyr::group_by(contrast, depth_maps_generation_filtering_mode) %>%

tidybayes::median_hdi(value) %>%

dplyr::arrange(contrast, depth_maps_generation_filtering_mode) %>%

dplyr::select(contrast, depth_maps_generation_filtering_mode, value, .lower, .upper) %>%

kableExtra::kbl(

digits = 2

, caption = "brms::brm model: 95% HDI of the posterior predictive distribution of group constrasts"

, col.names = c(

"quality contrast"

, "filtering mode"

, "difference (F-score)"

, "HDI low", "HDI high"

)

) %>%

kableExtra::kable_styling() %>%

kableExtra::scroll_box(height = "6in")| quality contrast | filtering mode | difference (F-score) | HDI low | HDI high |

|---|---|---|---|---|

| ultra high - high | aggressive | 0.04 | -0.06 | 0.14 |

| ultra high - high | moderate | 0.04 | -0.05 | 0.13 |

| ultra high - high | mild | 0.04 | -0.06 | 0.13 |

| ultra high - high | disabled | 0.03 | -0.06 | 0.12 |

| ultra high - medium | aggressive | 0.11 | 0.01 | 0.21 |

| ultra high - medium | moderate | 0.10 | 0.01 | 0.19 |

| ultra high - medium | mild | 0.10 | 0.01 | 0.19 |

| ultra high - medium | disabled | 0.10 | 0.01 | 0.19 |

| ultra high - low | aggressive | 0.17 | 0.07 | 0.27 |

| ultra high - low | moderate | 0.17 | 0.07 | 0.26 |

| ultra high - low | mild | 0.16 | 0.07 | 0.25 |

| ultra high - low | disabled | 0.16 | 0.06 | 0.25 |

| ultra high - lowest | aggressive | 0.29 | 0.18 | 0.39 |

| ultra high - lowest | moderate | 0.29 | 0.19 | 0.39 |

| ultra high - lowest | mild | 0.28 | 0.18 | 0.38 |

| ultra high - lowest | disabled | 0.28 | 0.18 | 0.38 |

| high - medium | aggressive | 0.07 | -0.02 | 0.17 |

| high - medium | moderate | 0.07 | -0.03 | 0.15 |

| high - medium | mild | 0.06 | -0.03 | 0.15 |

| high - medium | disabled | 0.07 | -0.02 | 0.16 |

| high - low | aggressive | 0.13 | 0.04 | 0.23 |

| high - low | moderate | 0.13 | 0.04 | 0.22 |

| high - low | mild | 0.12 | 0.03 | 0.21 |

| high - low | disabled | 0.12 | 0.03 | 0.22 |

| high - lowest | aggressive | 0.25 | 0.15 | 0.36 |

| high - lowest | moderate | 0.25 | 0.15 | 0.35 |

| high - lowest | mild | 0.25 | 0.14 | 0.35 |

| high - lowest | disabled | 0.25 | 0.15 | 0.35 |

| medium - low | aggressive | 0.06 | -0.03 | 0.16 |

| medium - low | moderate | 0.07 | -0.03 | 0.15 |

| medium - low | mild | 0.06 | -0.03 | 0.15 |

| medium - low | disabled | 0.06 | -0.03 | 0.15 |

| medium - lowest | aggressive | 0.18 | 0.08 | 0.28 |

| medium - lowest | moderate | 0.18 | 0.09 | 0.28 |

| medium - lowest | mild | 0.18 | 0.08 | 0.28 |

| medium - lowest | disabled | 0.18 | 0.09 | 0.28 |

| low - lowest | aggressive | 0.12 | 0.01 | 0.22 |

| low - lowest | moderate | 0.12 | 0.02 | 0.21 |

| low - lowest | mild | 0.12 | 0.02 | 0.22 |

| low - lowest | disabled | 0.12 | 0.02 | 0.22 |

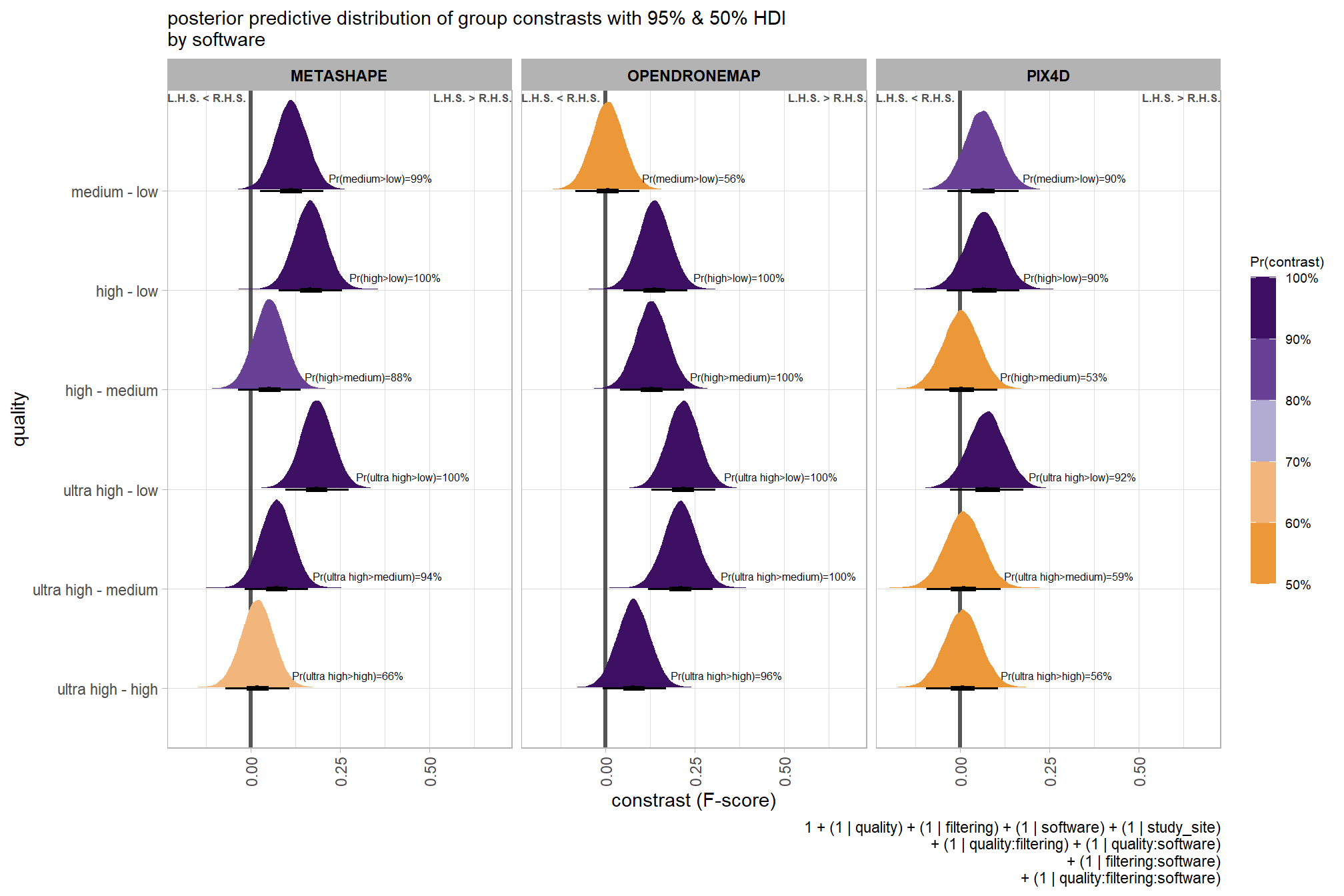

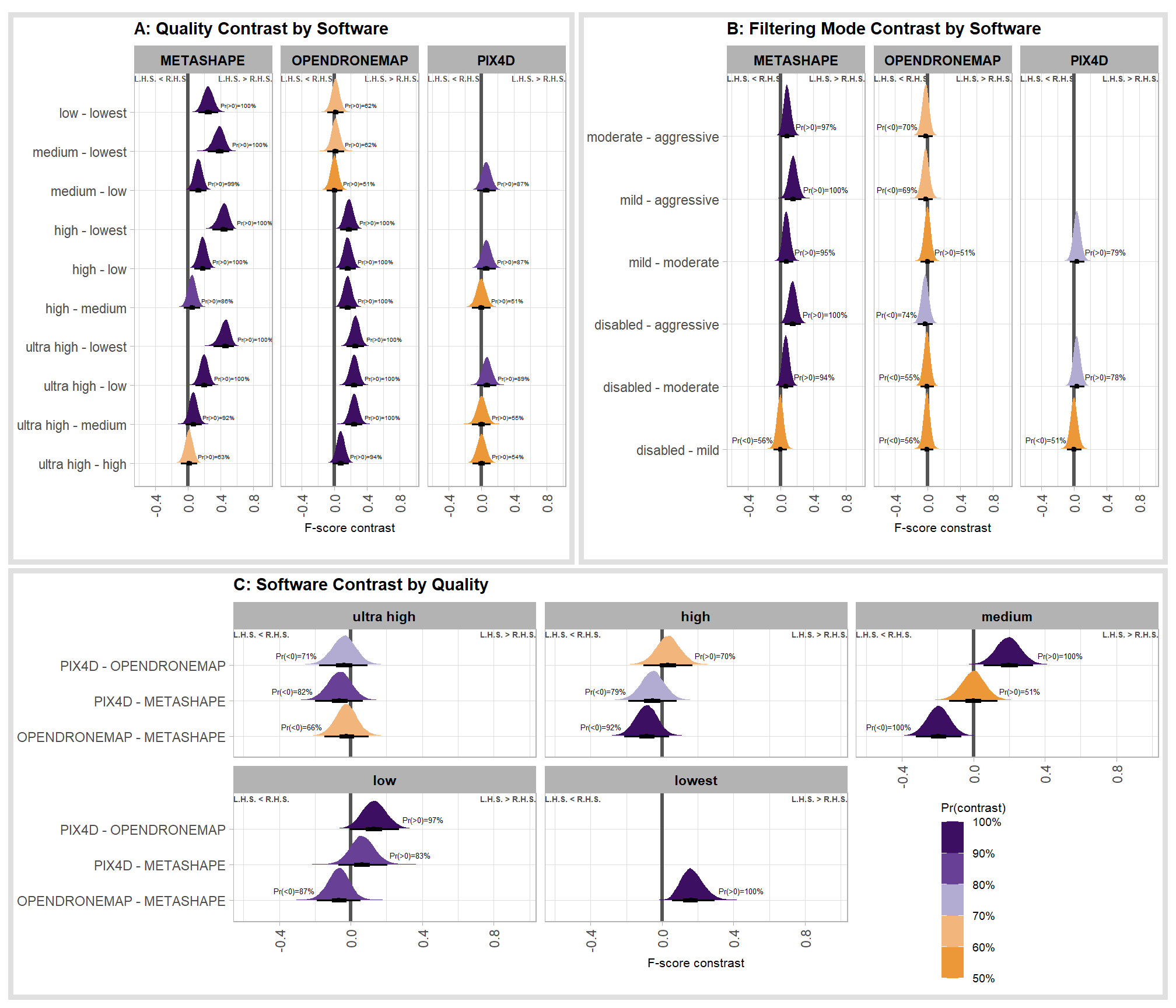

Kruschke (2015) notes that for the multiple nominal predictors model:

In applications with multiple levels of the factors, it is virtually always the case that we are interested in comparing particular levels with each other…These sorts of comparisons, which involve levels of a single factor and collapse across the other factor(s), are called main effect comparisons or contrasts.(p. 595)

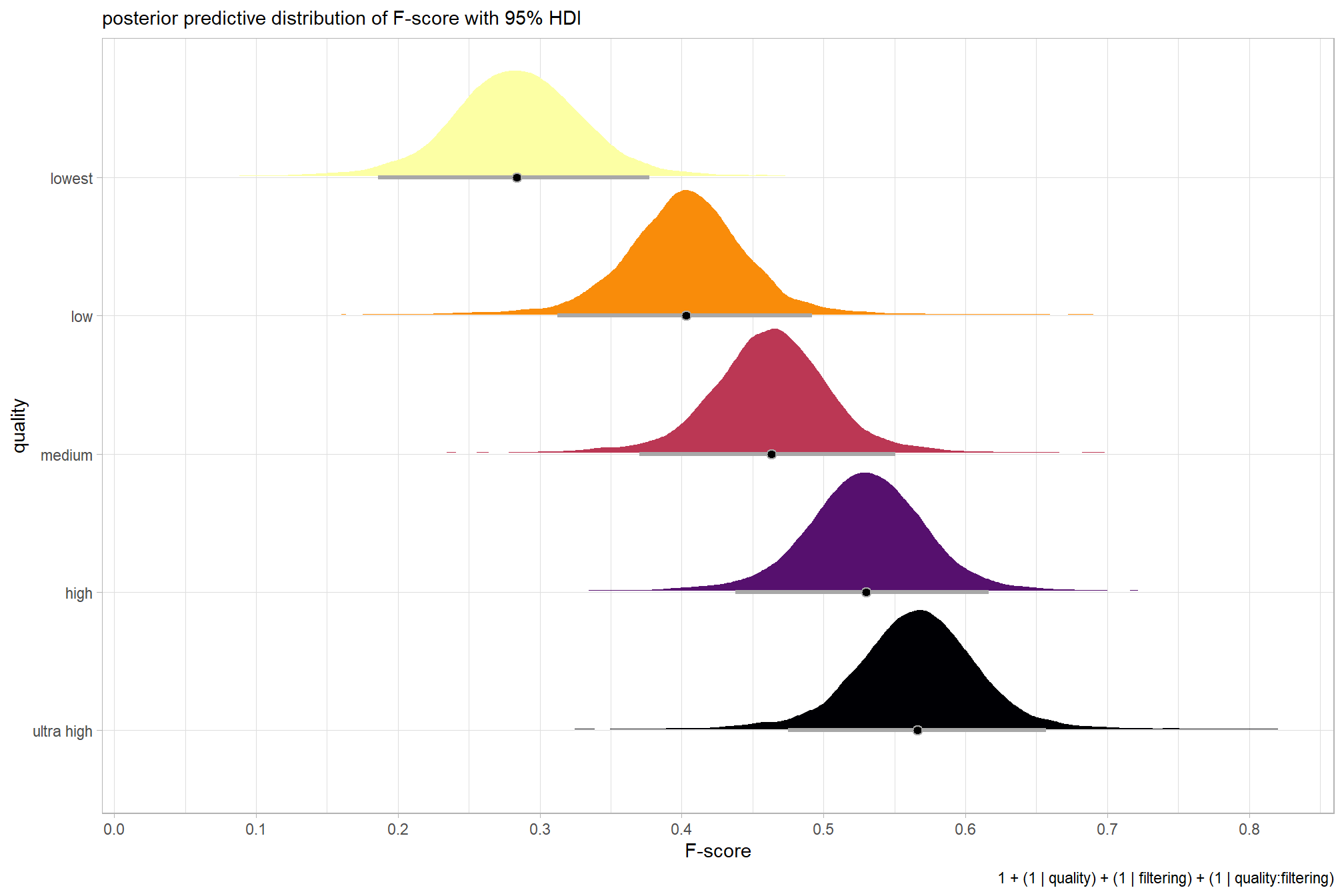

First, let’s collapse across the filtering mode to compare the dense cloud quality setting effect.

In a hierarchical model structure, we have to make use of the re_formula argument within tidybayes::add_epred_draws

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality) %>%

tidybayes::add_epred_draws(

brms_f_mod2

# this part is crucial

, re_formula = ~ (1 | depth_maps_generation_quality)

) %>%

dplyr::rename(value = .epred) %>%

# plot

ggplot(

mapping = aes(

x = value, y = depth_maps_generation_quality

, fill = depth_maps_generation_quality

)

) +

tidybayes::stat_halfeye(

point_interval = median_hdi, .width = .95

, interval_color = "gray66"

, shape = 21, point_color = "gray66", point_fill = "black"

, justification = -0.01

) +

scale_fill_viridis_d(option = "inferno", drop = F) +

scale_x_continuous(breaks = scales::extended_breaks(n=8)) +

labs(

y = "quality", x = "F-score"

, subtitle = "posterior predictive distribution of F-score with 95% HDI"

, caption = form_temp

) +

theme_light() +

theme(legend.position = "none")

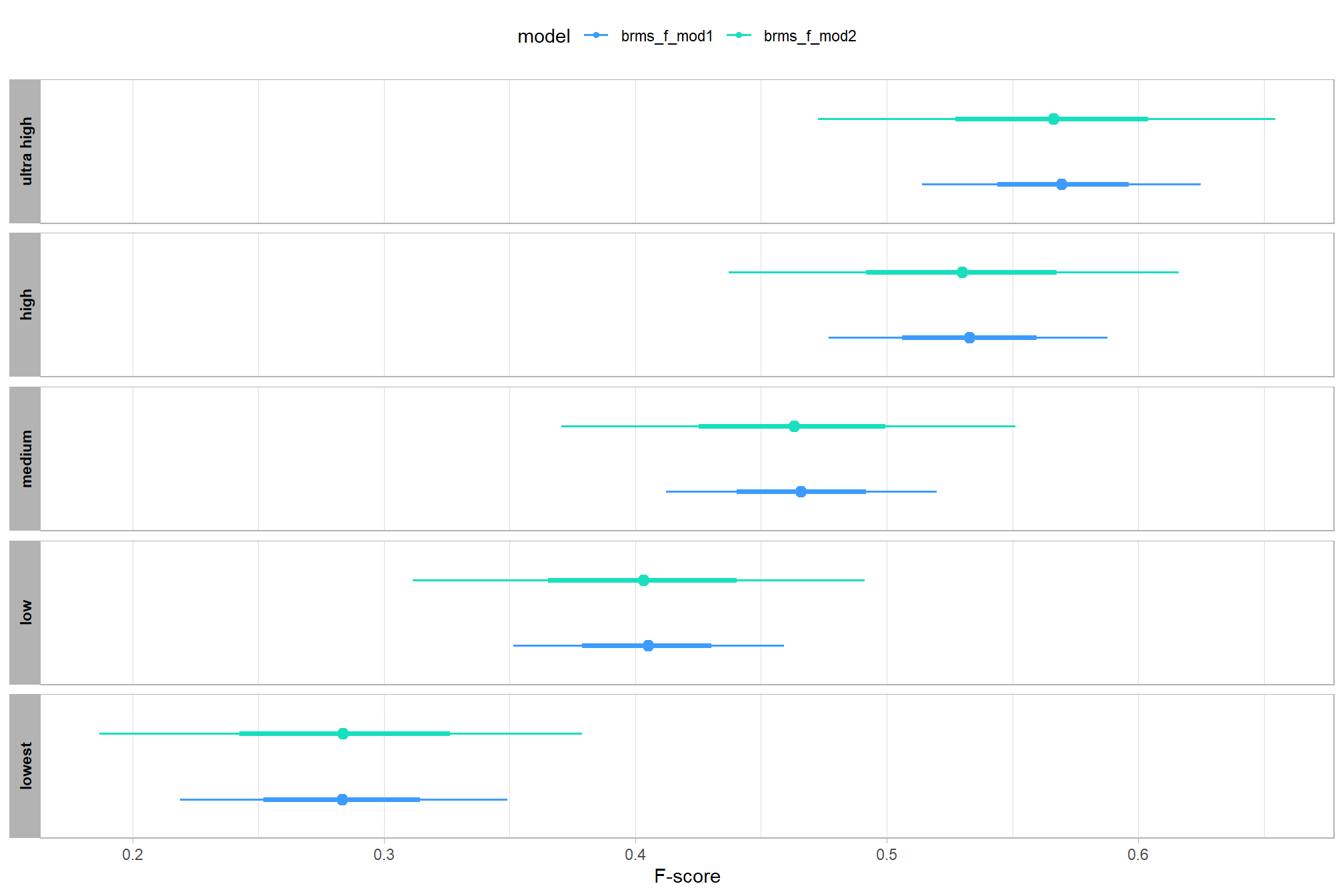

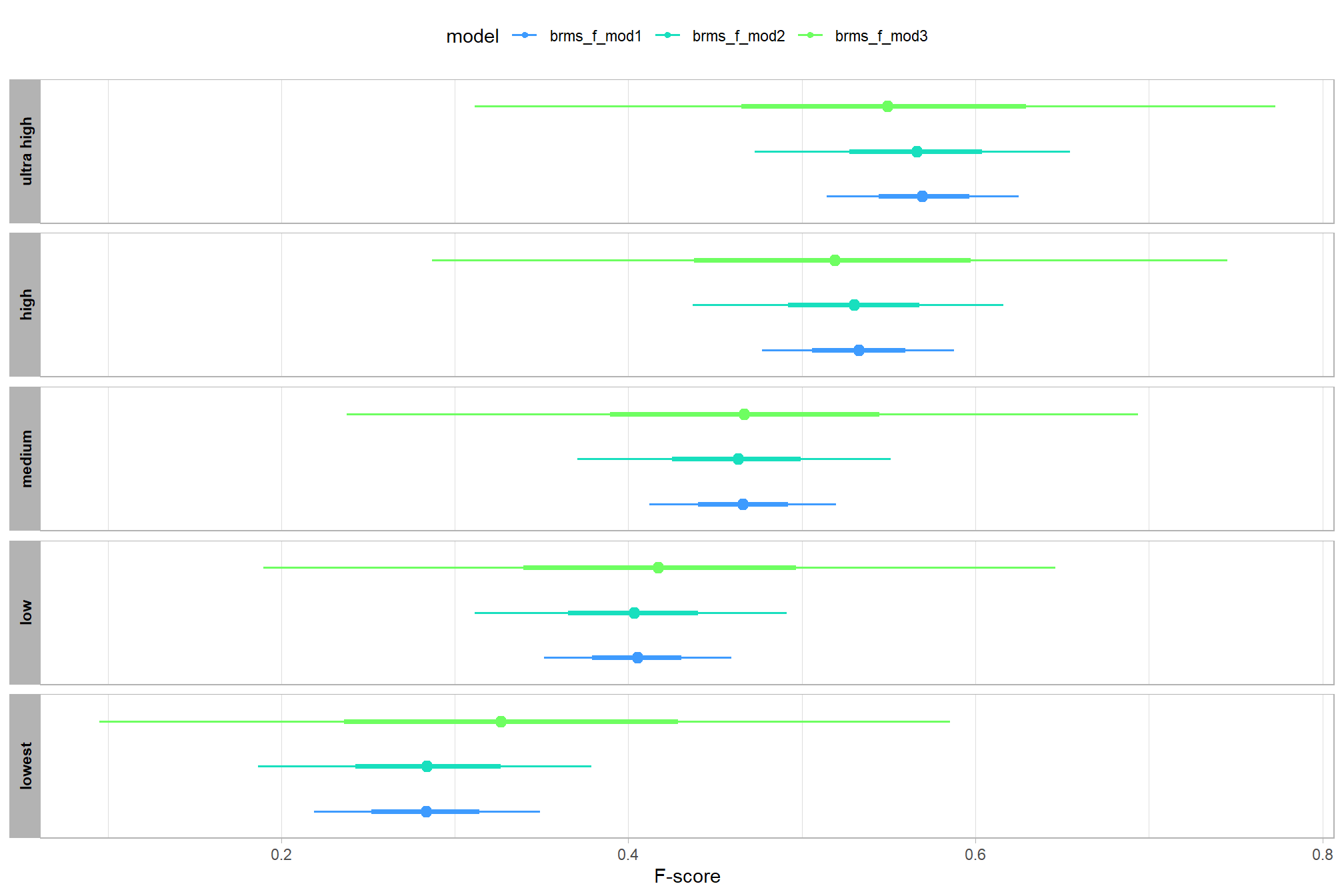

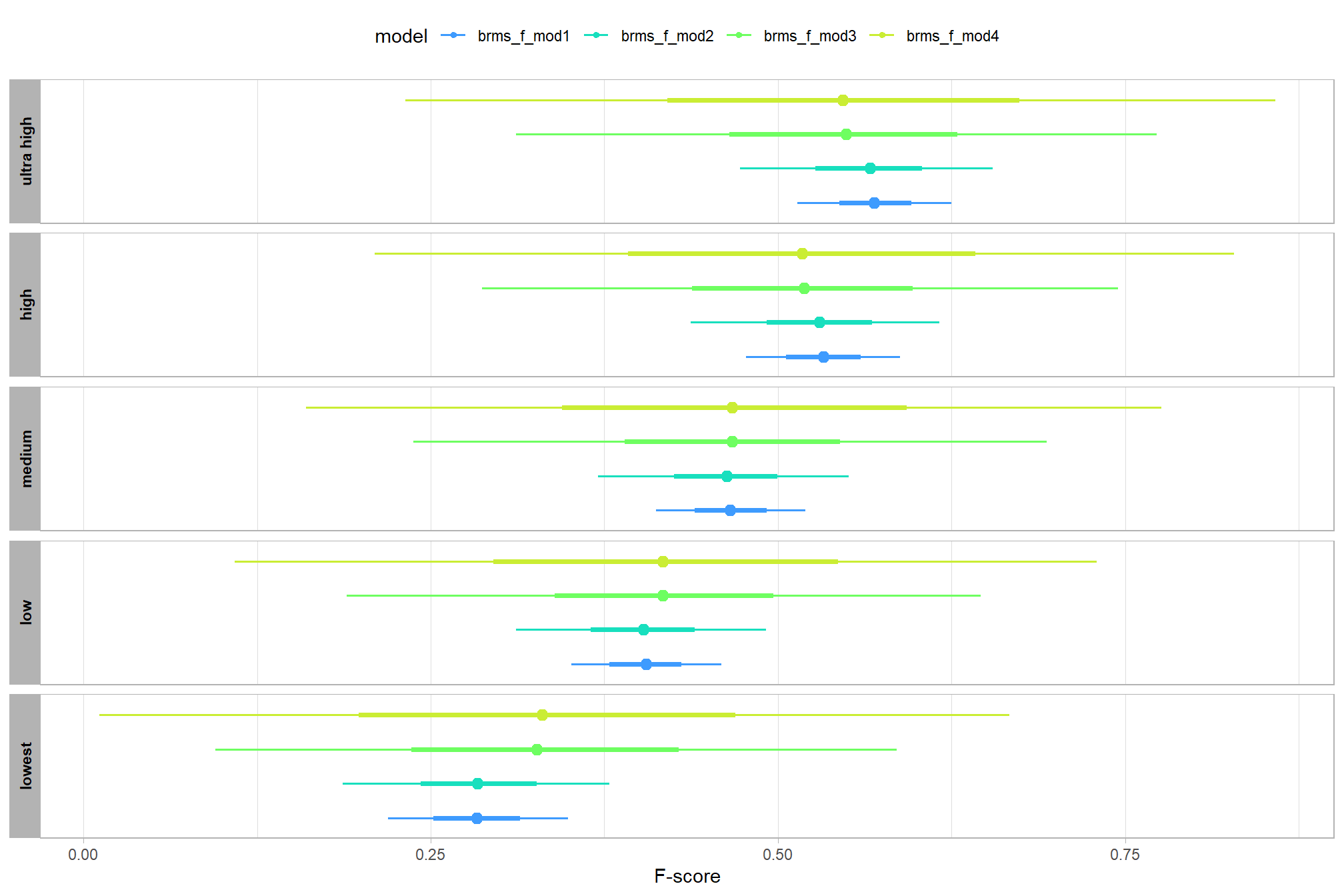

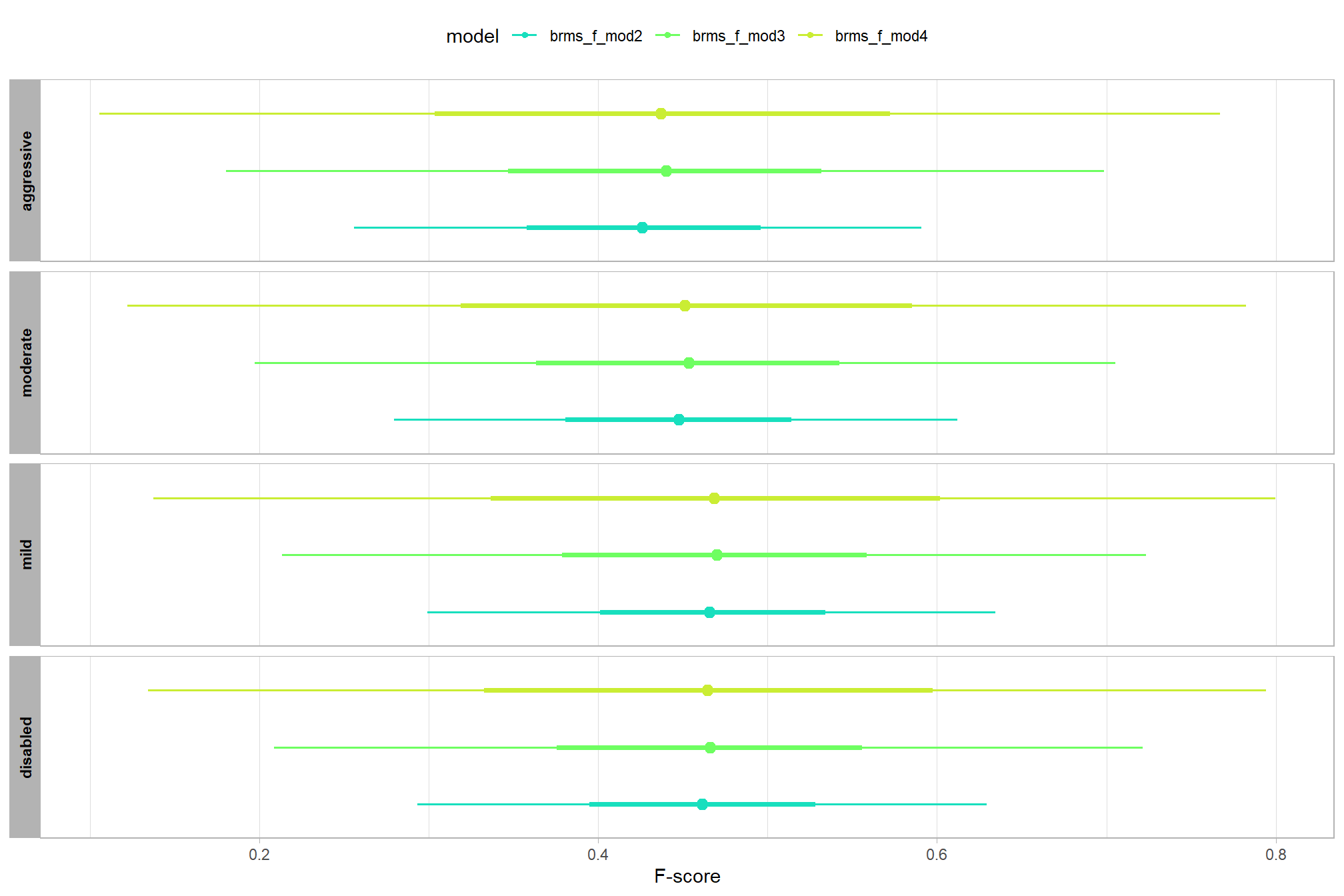

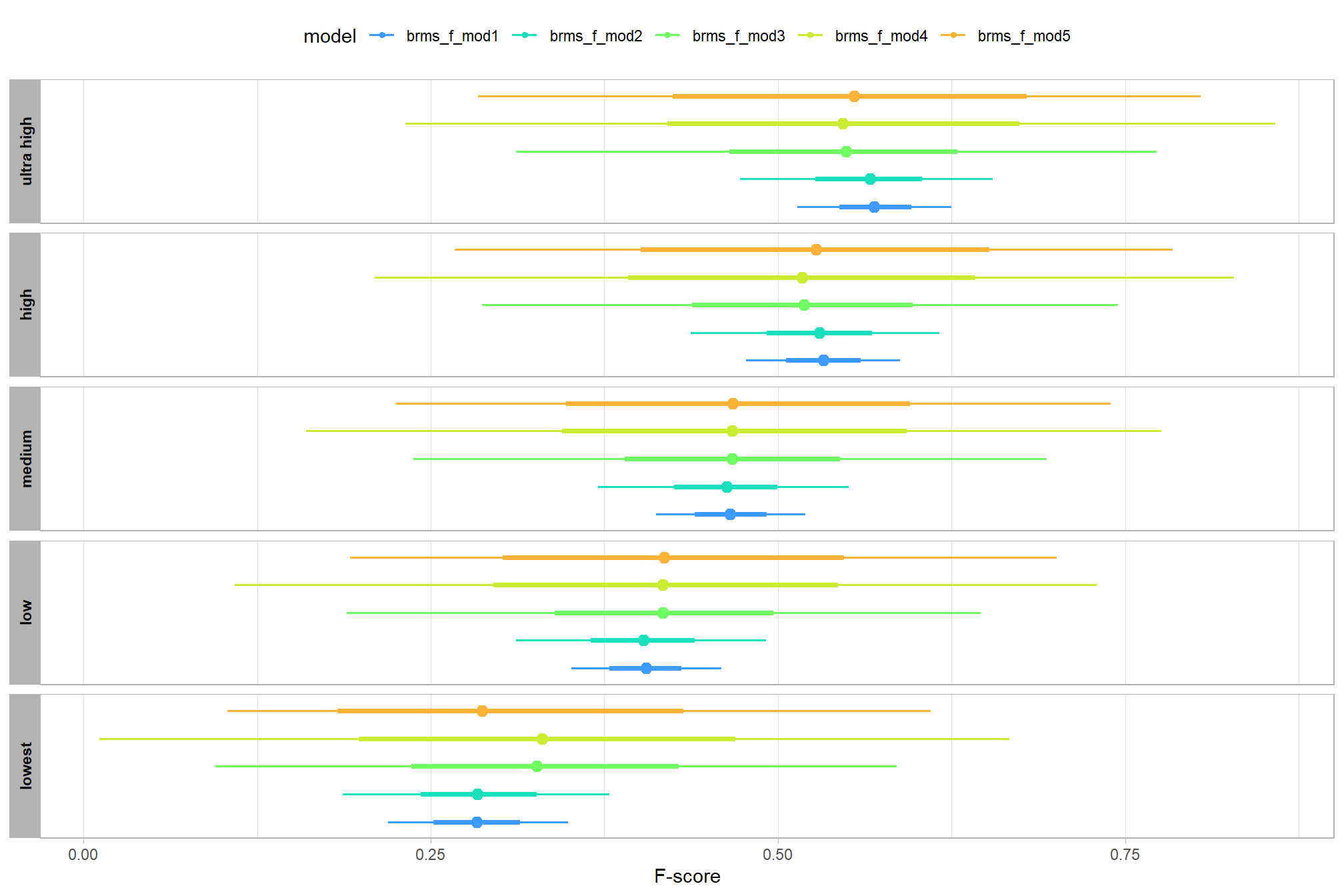

let’s compare these results to the results from our one nominal predictor model above

# let's compare these results to the results from our [one nominal predictor model above](#one_pred_mod)

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality) %>%

tidybayes::add_epred_draws(

brms_f_mod2

# this part is crucial

, re_formula = ~ (1 | depth_maps_generation_quality)

) %>%

dplyr::mutate(value = .epred, src = "brms_f_mod2") %>%

dplyr::bind_rows(

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_quality) %>%

tidybayes::add_epred_draws(brms_f_mod1) %>%

dplyr::mutate(value = .epred, src = "brms_f_mod1")

) %>%

ggplot(mapping = aes(y = src, x = value, color = src, group = src)) +

tidybayes::stat_pointinterval(position = "dodge") +

facet_grid(rows = vars(depth_maps_generation_quality), switch = "y") +

scale_y_discrete(NULL, breaks = NULL) +

scale_color_manual(values = viridis::turbo(n = 6, begin = 0.2, end = 0.8)[1:2]) +

labs(

y = "", x = "F-score"

, color = "model"

) +

theme_light() +

theme(legend.position = "top", strip.text = element_text(color = "black", face = "bold"))

these results are as expected, with Kruschke (2015) noting:

It is important to realize that the estimates of interaction contrasts are typically much more uncertain than the estimates of simple effects or main effects…This large uncertainty of an interaction contrast is caused by the fact that it involves at least four sources of uncertainty (i.e., at least four groups of data), unlike its component simple effects which each involve only half of those sources of uncertainty. In general, interaction contrasts require a lot of data to estimate accurately. (p. 598)

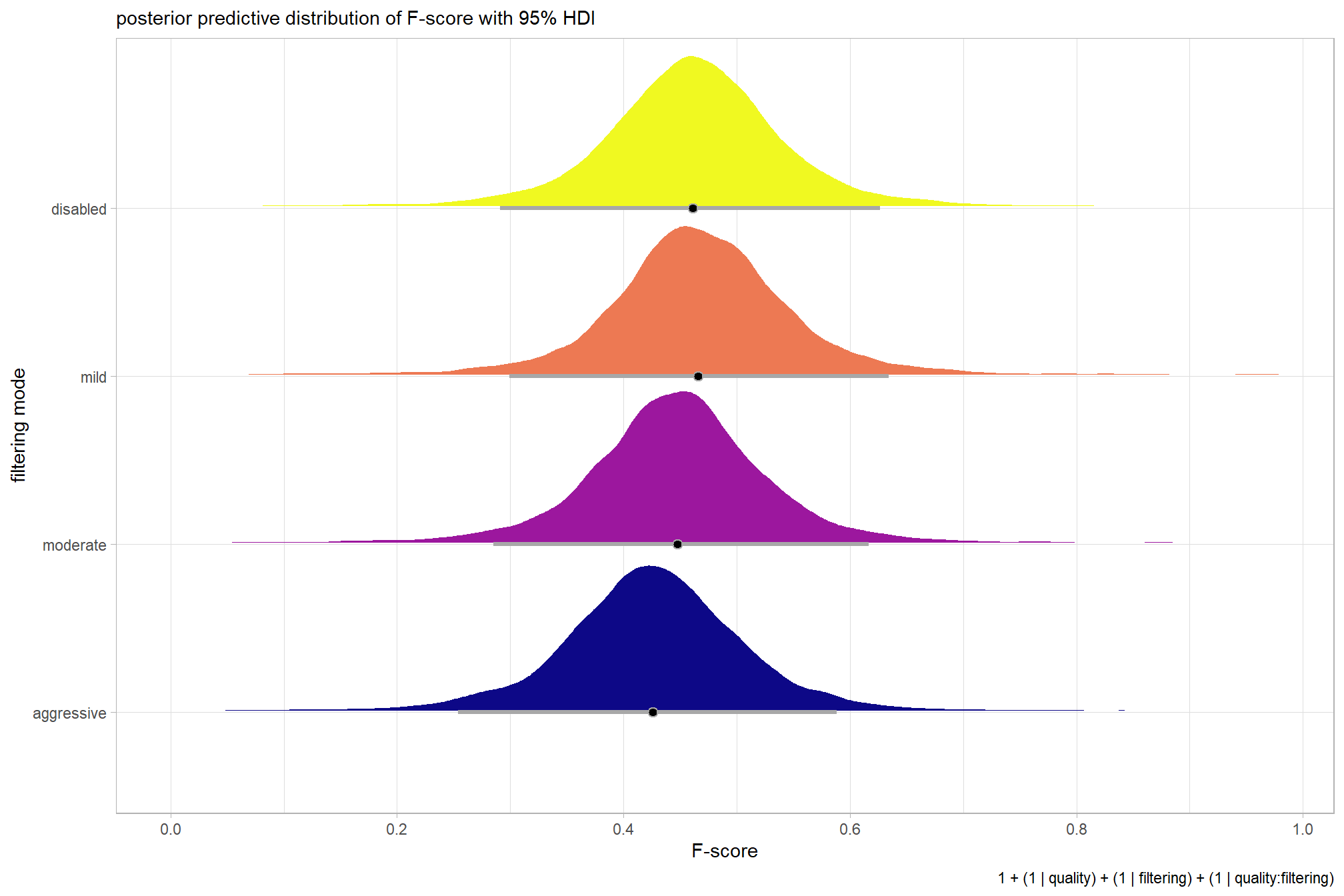

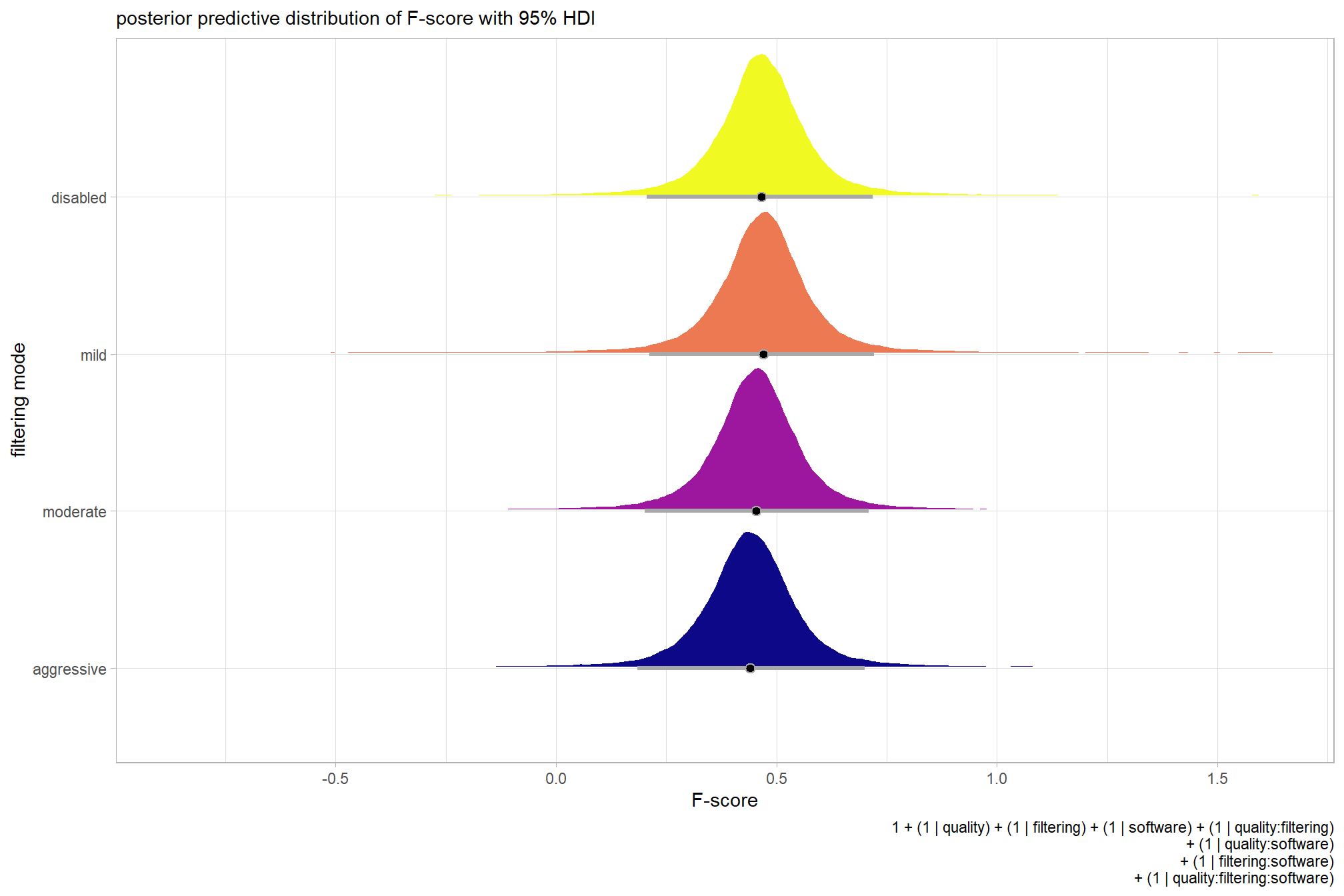

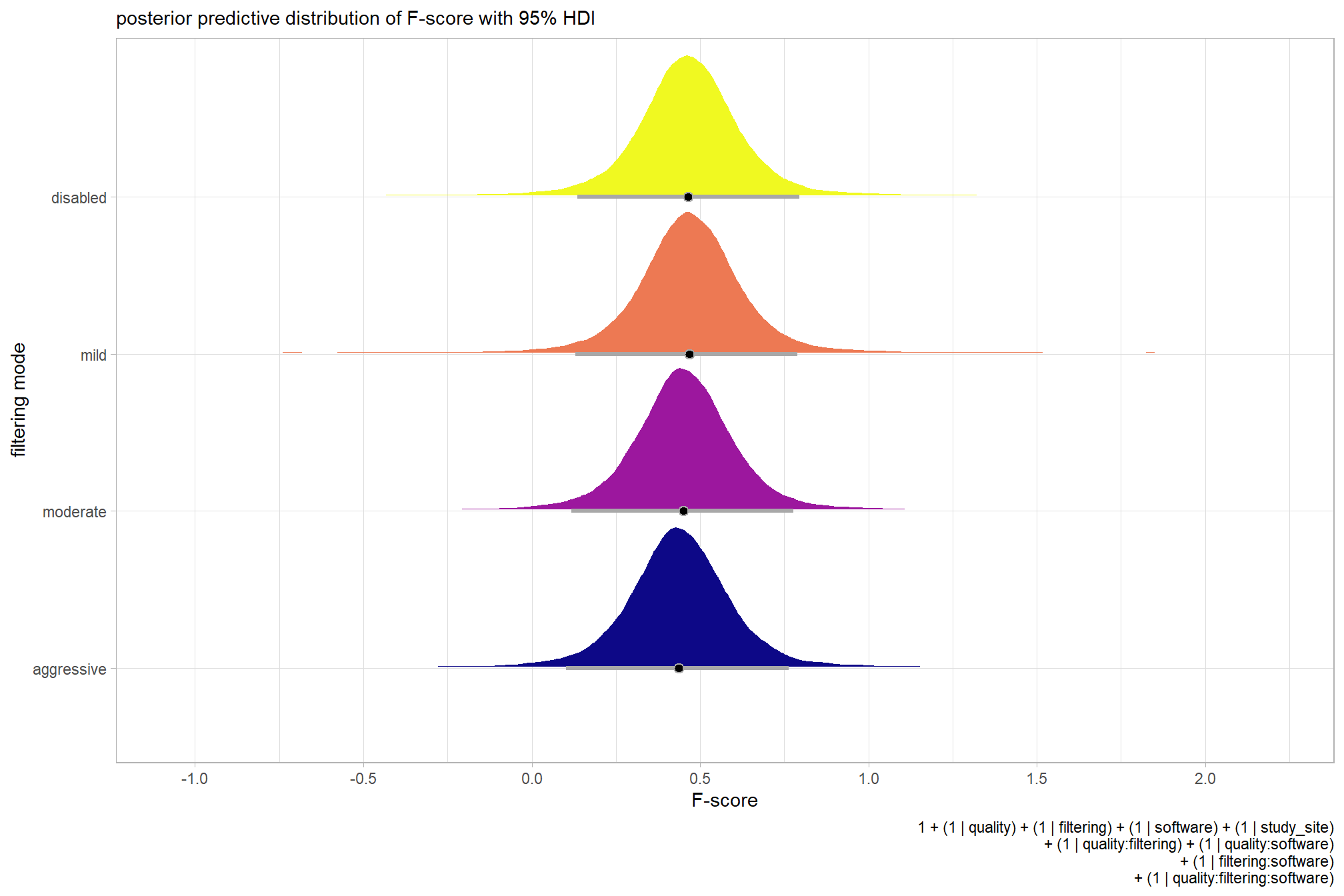

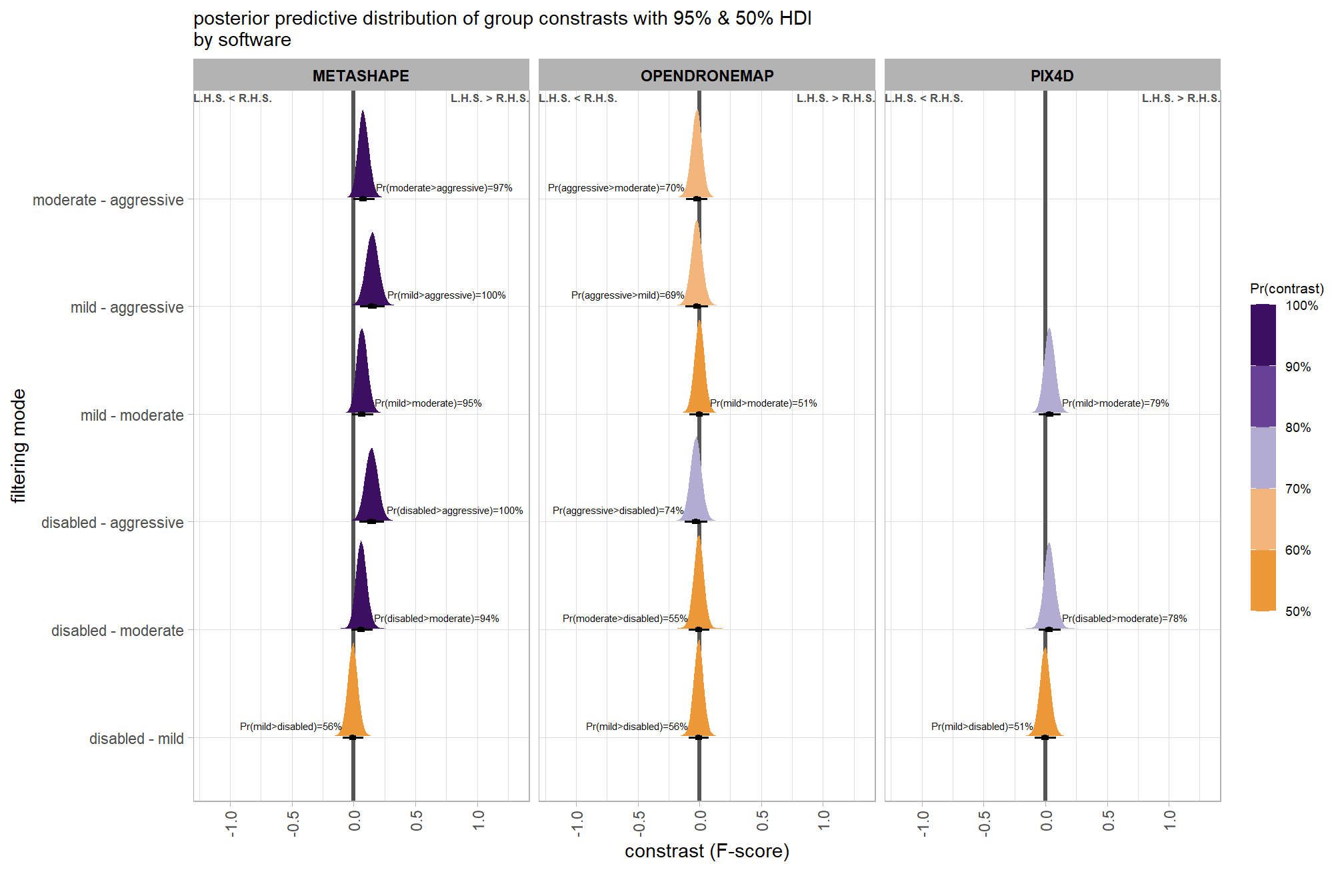

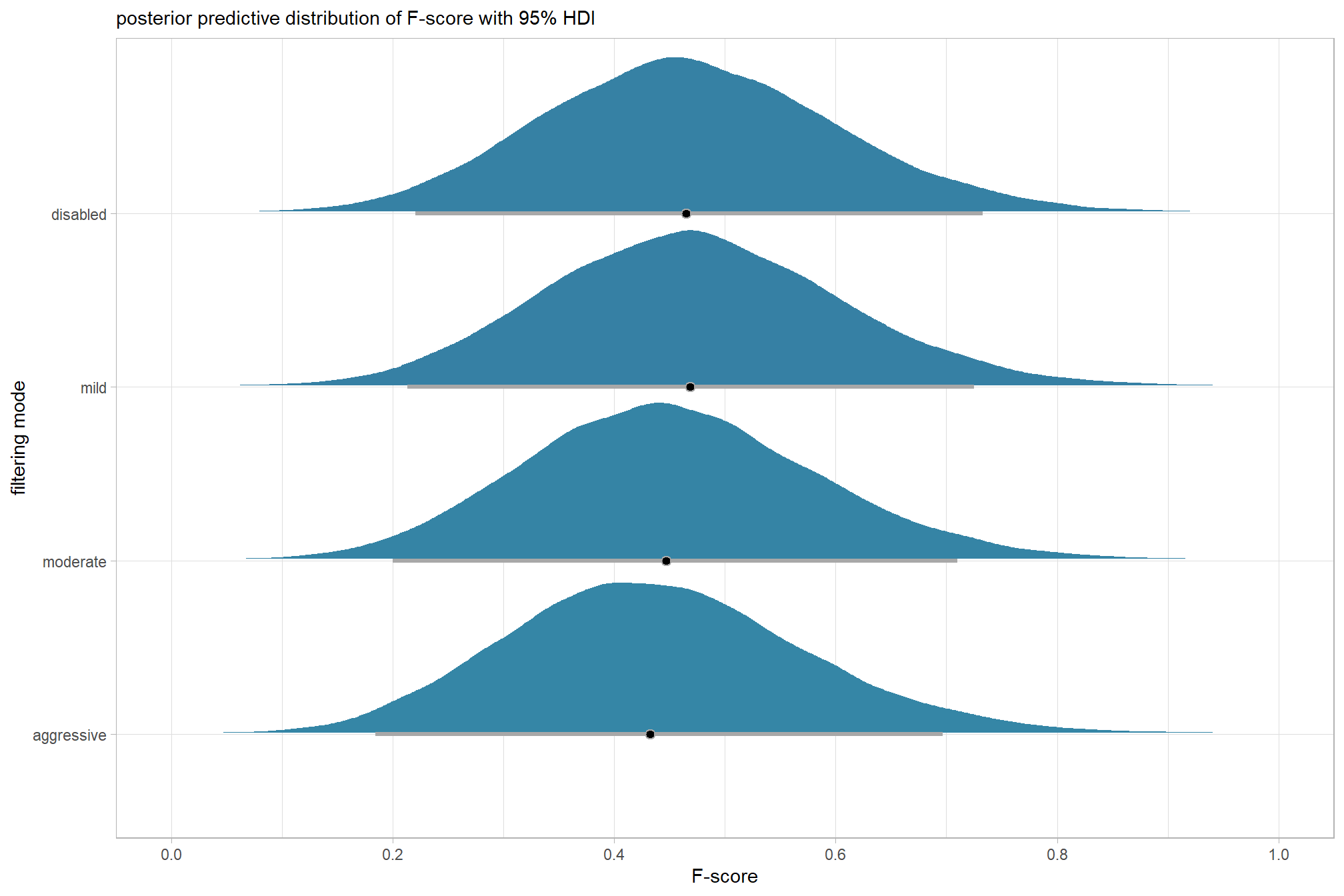

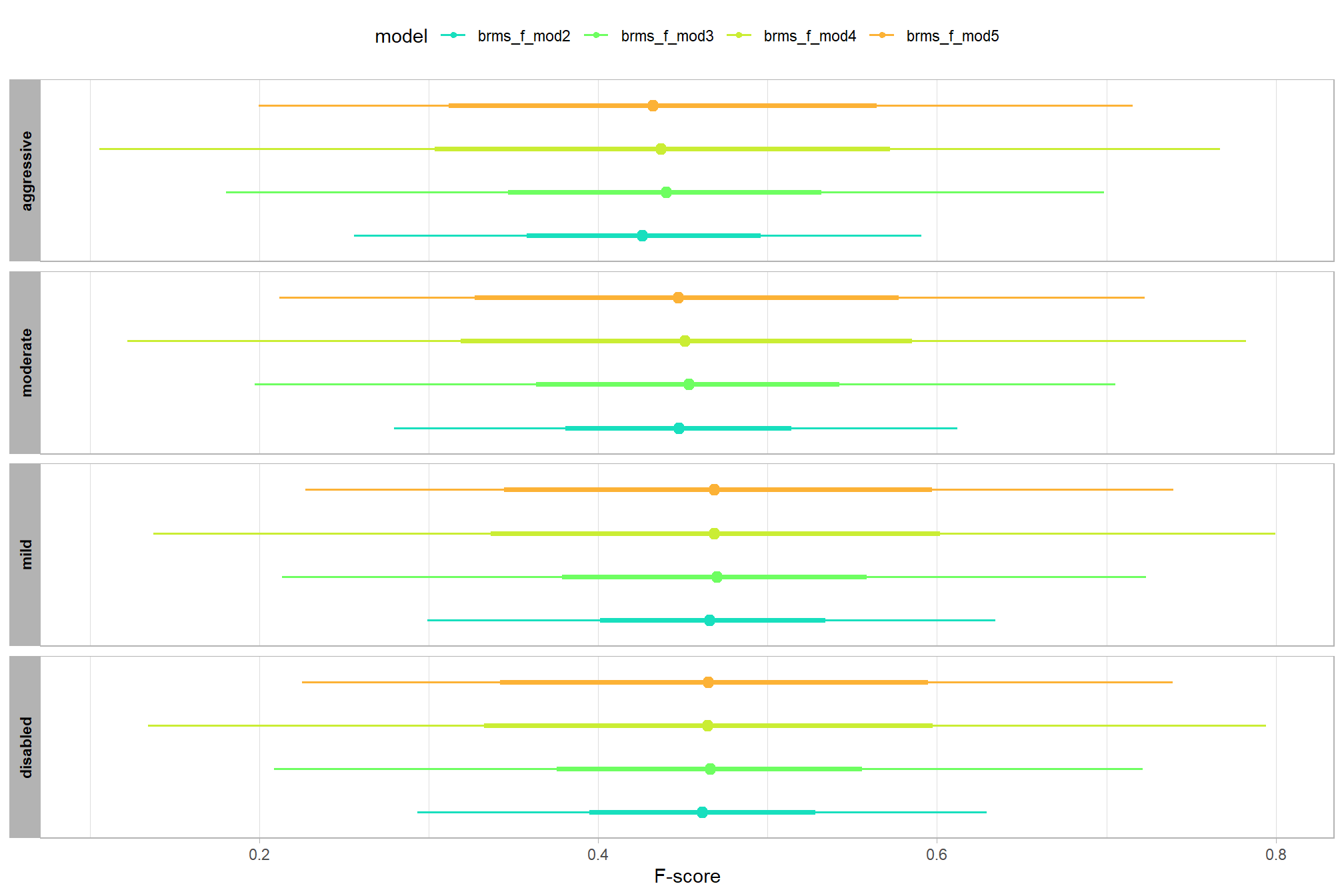

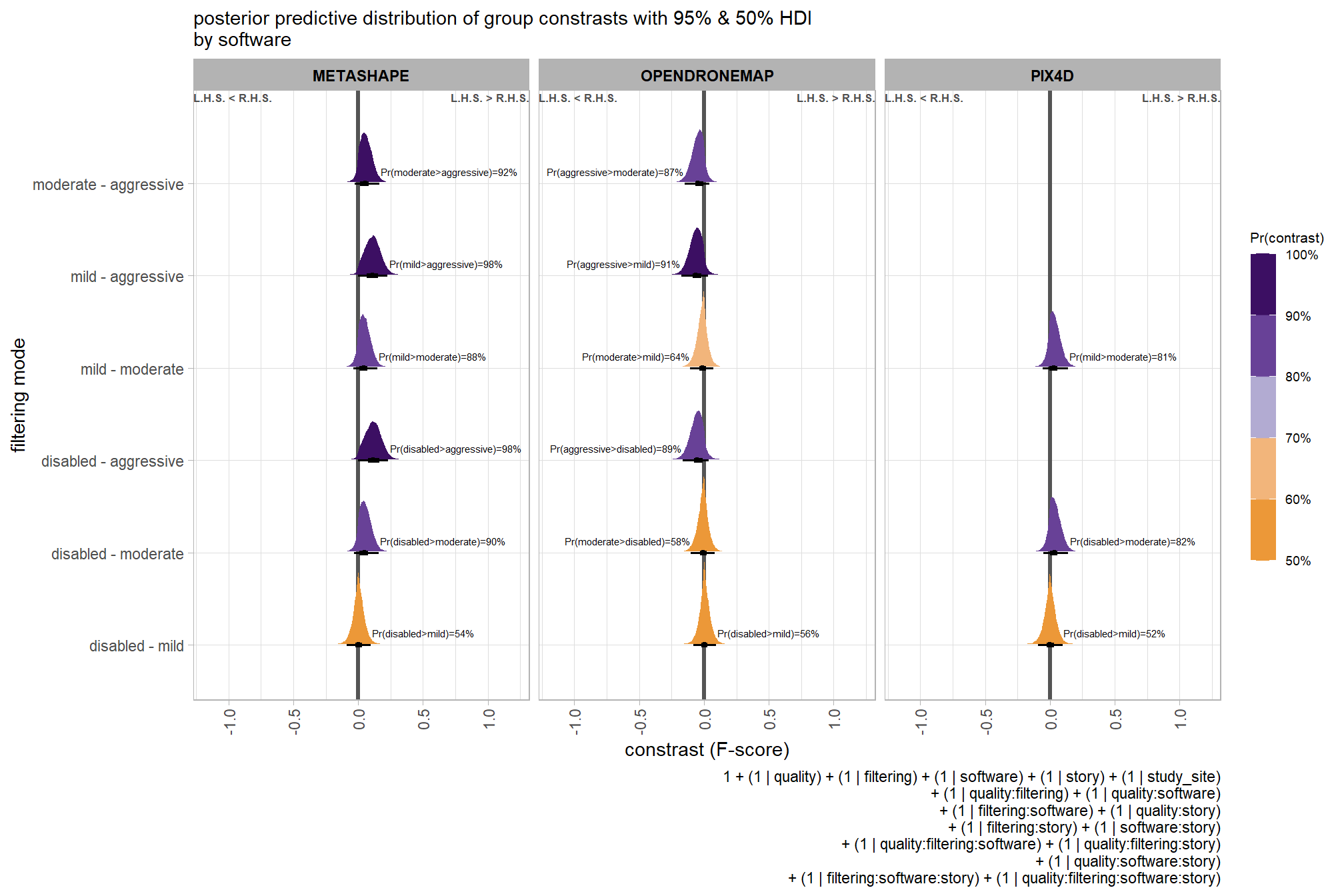

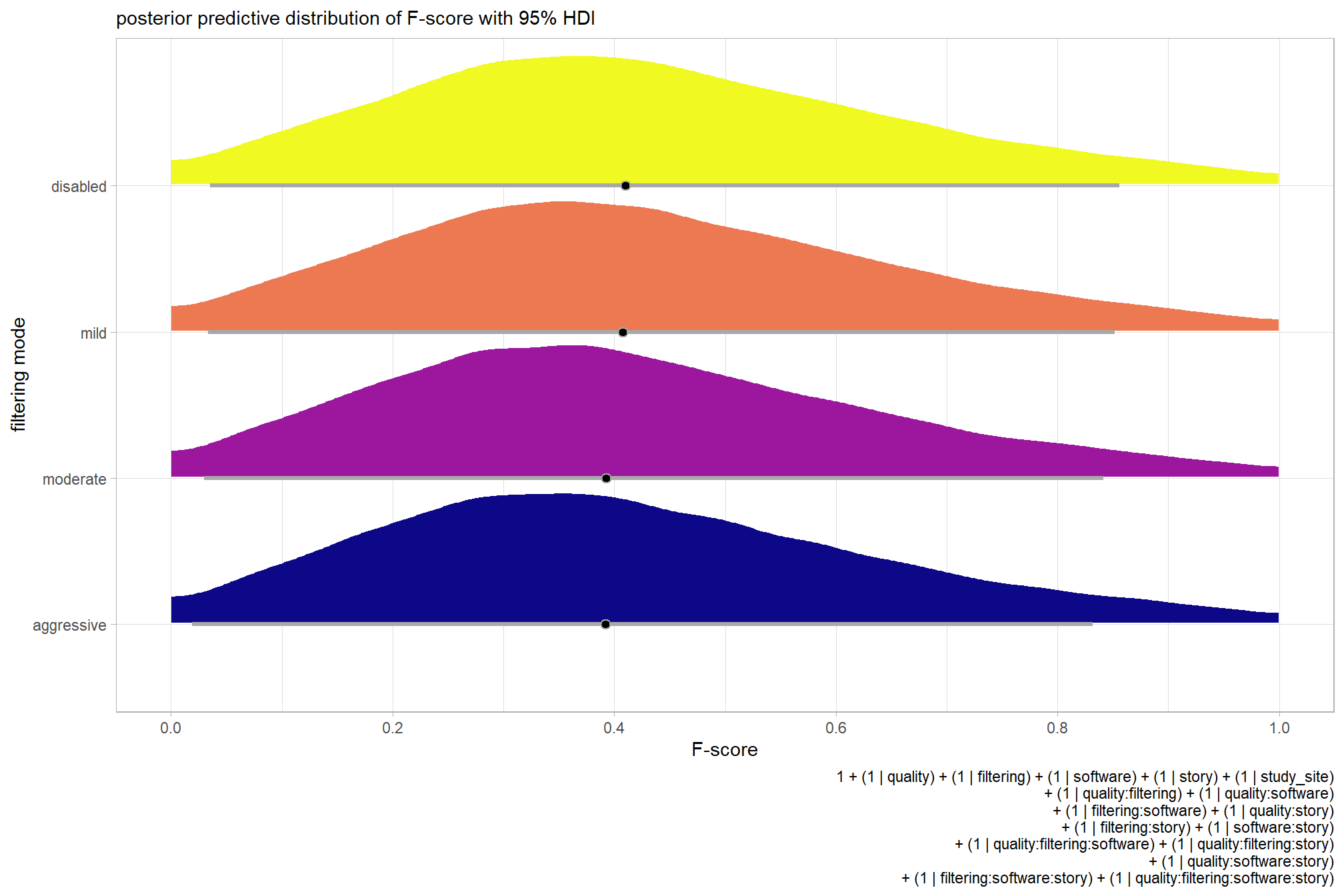

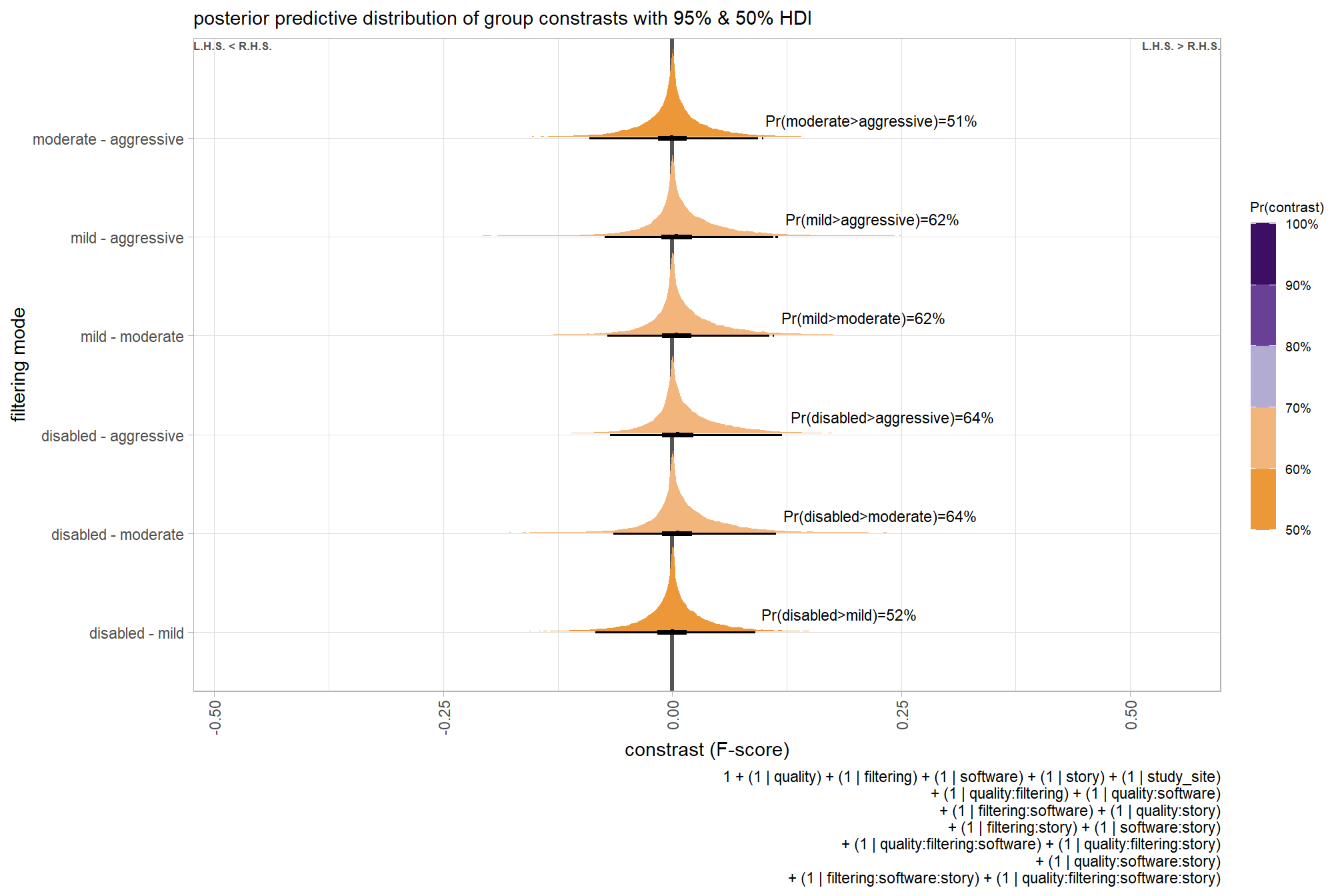

For completeness, let’s also collapse across the dense cloud quality to compare the filtering mode setting effect.

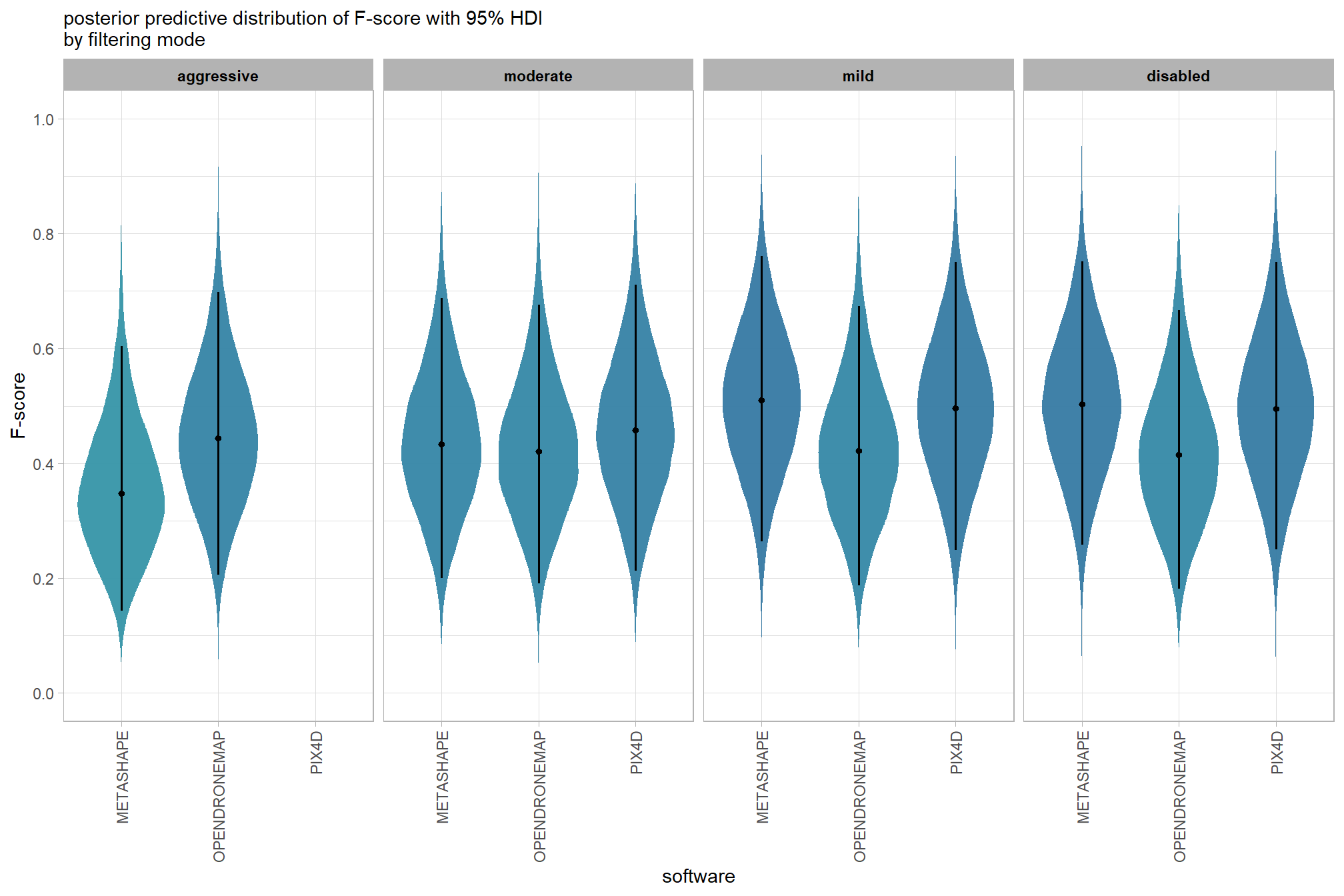

In a hierarchical model structure, we have to make use of the re_formula argument within tidybayes::add_epred_draws

ptcld_validation_data %>%

dplyr::distinct(depth_maps_generation_filtering_mode) %>%

tidybayes::add_epred_draws(

brms_f_mod2

# this part is crucial

, re_formula = ~ (1 | depth_maps_generation_filtering_mode)

) %>%

dplyr::rename(value = .epred) %>%

# plot

ggplot(

mapping = aes(

x = value, y = depth_maps_generation_filtering_mode

, fill = depth_maps_generation_filtering_mode

)

) +

tidybayes::stat_halfeye(

point_interval = median_hdi, .width = .95

, interval_color = "gray66"

, shape = 21, point_color = "gray66", point_fill = "black"

, justification = -0.01

) +

scale_fill_viridis_d(option = "plasma", drop = F) +

scale_x_continuous(breaks = scales::extended_breaks(n=8)) +

labs(

y = "filtering mode", x = "F-score"

, subtitle = "posterior predictive distribution of F-score with 95% HDI"

, caption = form_temp

) +

theme_light() +

theme(legend.position = "none")

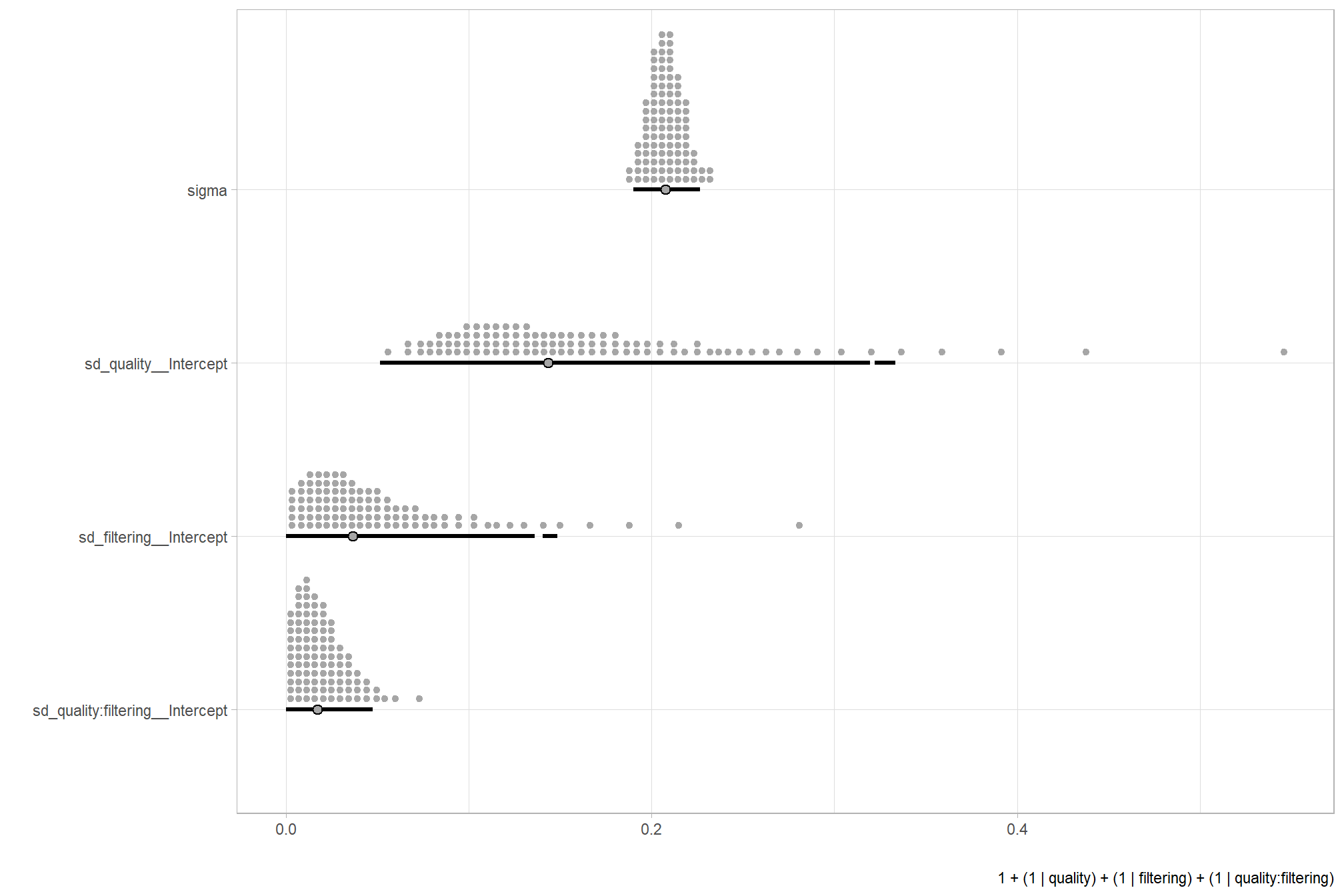

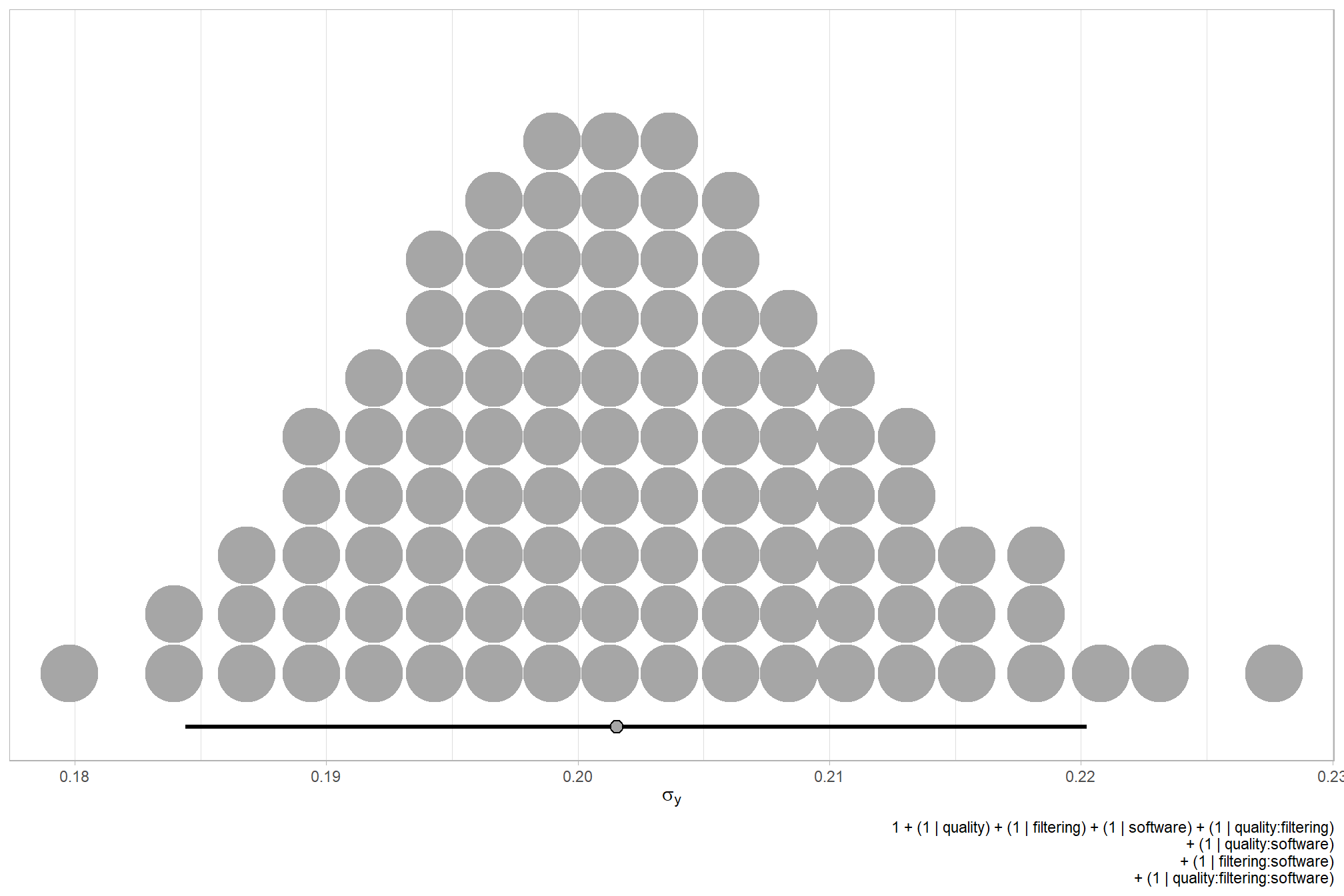

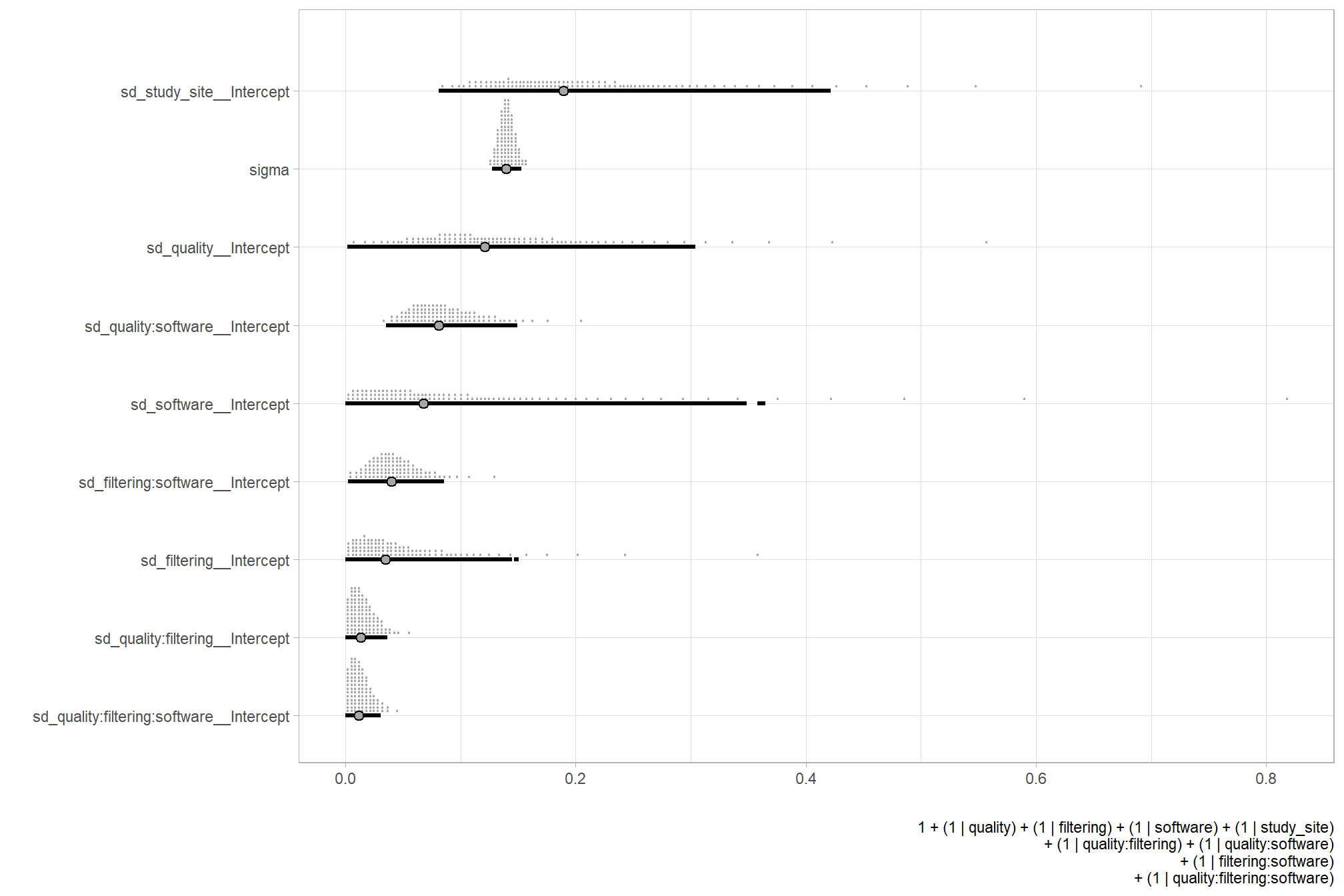

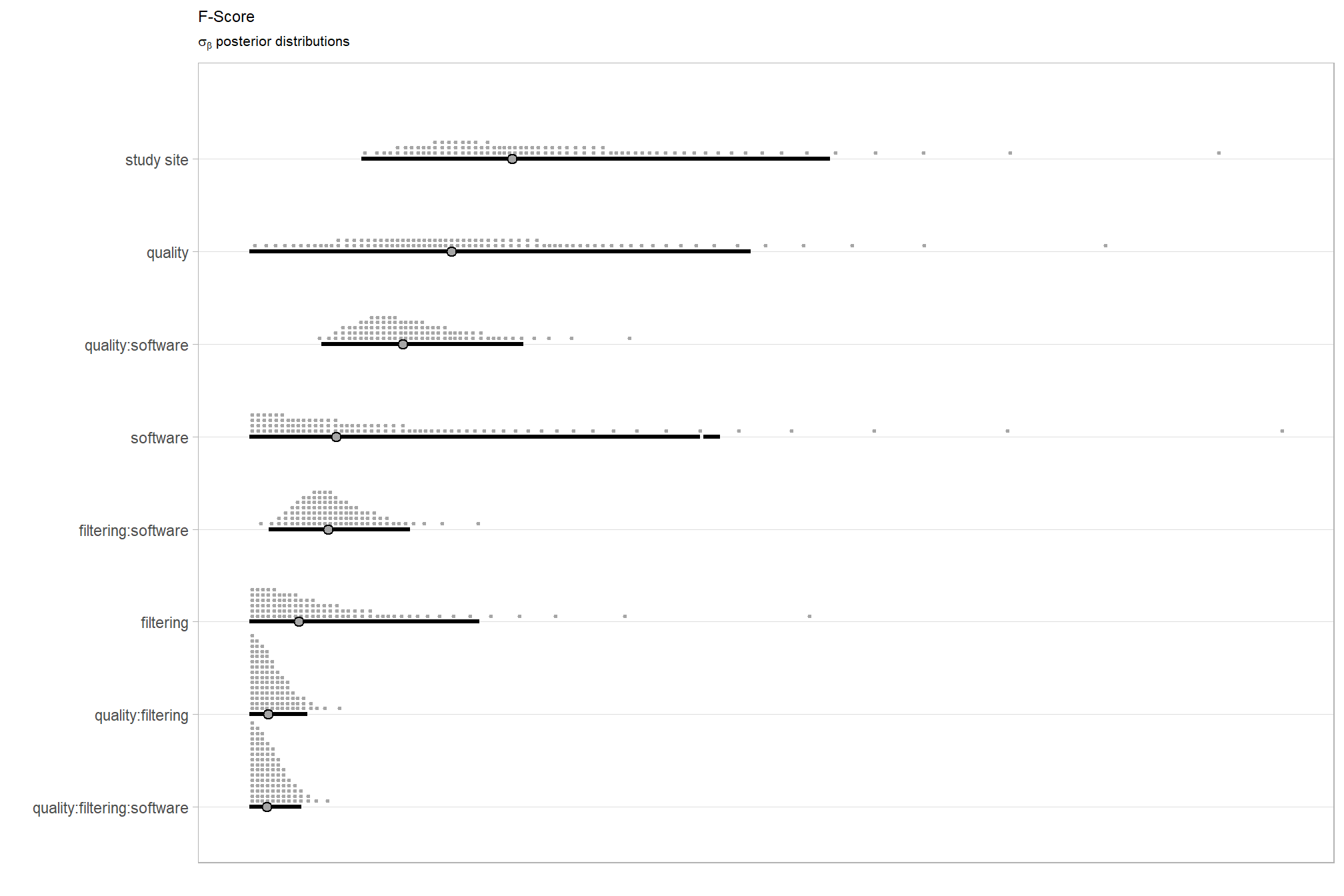

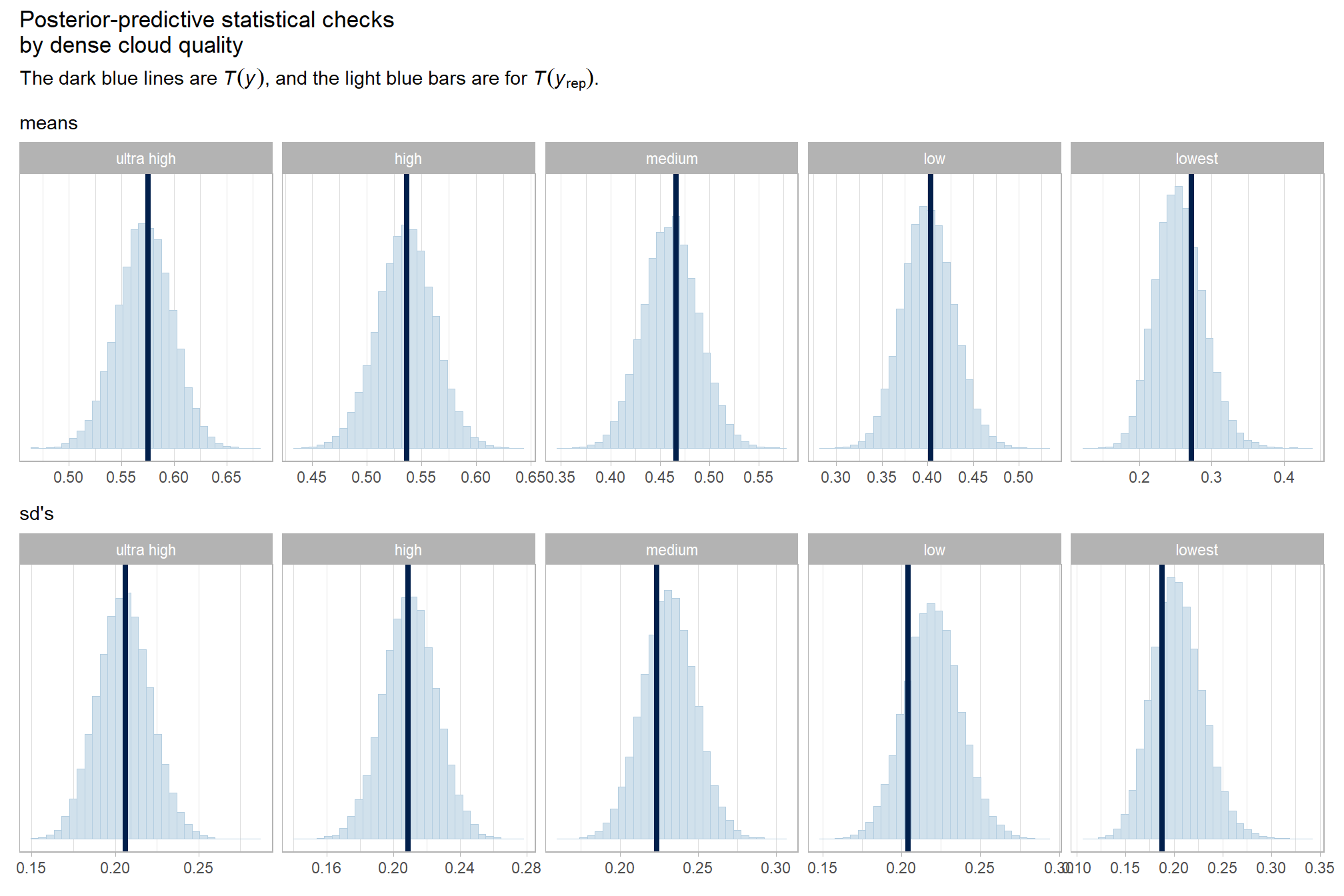

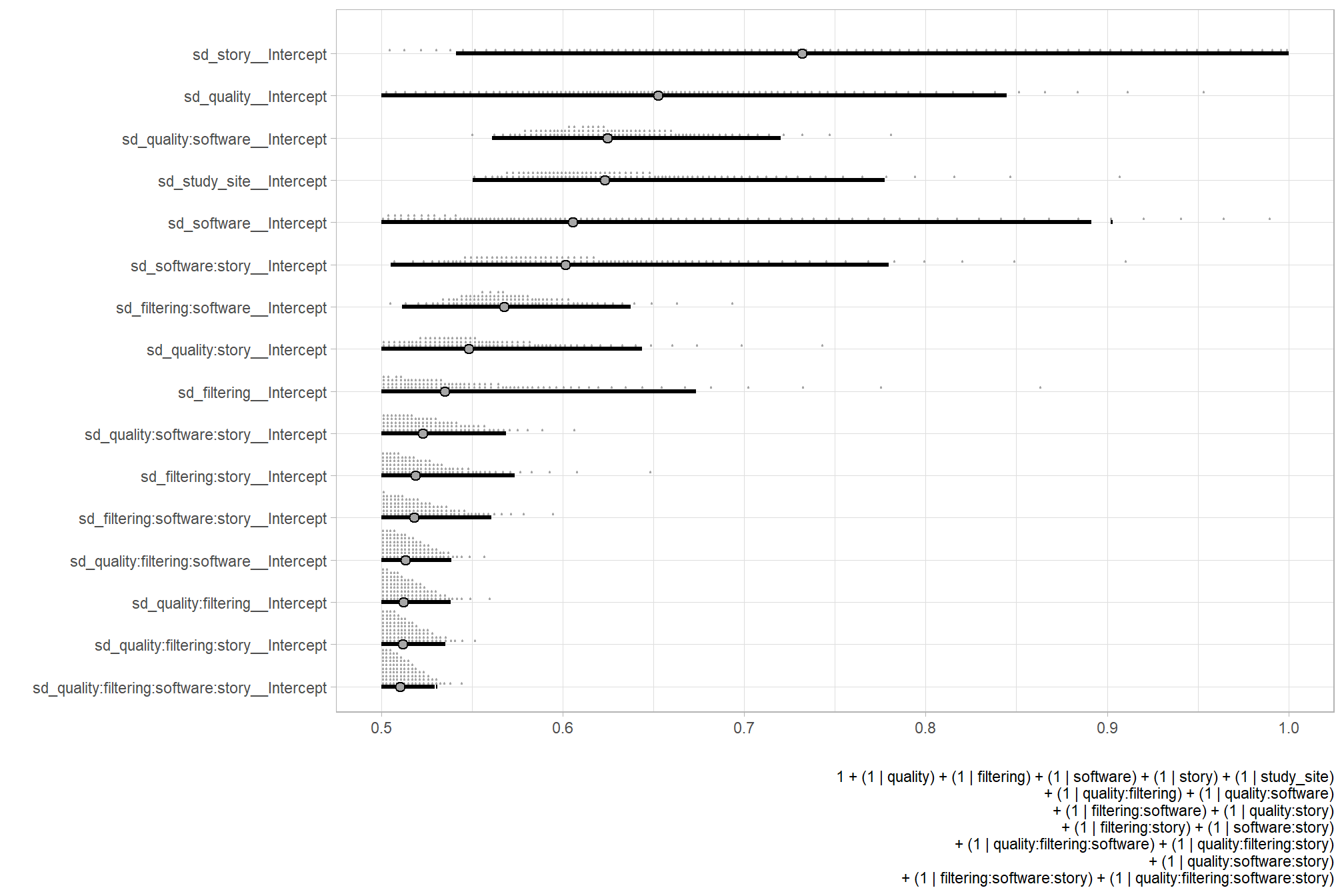

…it looks like the variation in F-score is driven by the dense cloud quality setting. We can quantify the variation in F-score by comparing the \(\sigma\) posteriors.

# extract the posterior draws

brms::as_draws_df(brms_f_mod2) %>%

dplyr::select(c(sigma,tidyselect::starts_with("sd_"))) %>%

tidyr::pivot_longer(dplyr::everything()) %>%

# dplyr::group_by(name) %>%

# tidybayes::median_hdi(value) %>%

dplyr::mutate(

name = name %>%

stringr::str_replace_all("depth_maps_generation_quality", "quality") %>%

stringr::str_replace_all("depth_maps_generation_filtering_mode", "filtering") %>%

forcats::fct_reorder(value)

) %>%

# plot

ggplot(aes(x = value, y = name)) +

tidybayes::stat_dotsinterval(

point_interval = median_hdi, .width = .95

, justification = -0.04

, shape = 21 #, point_size = 3

, quantiles = 100

) +

labs(x = "", y = "", caption = form_temp) +

theme_light()

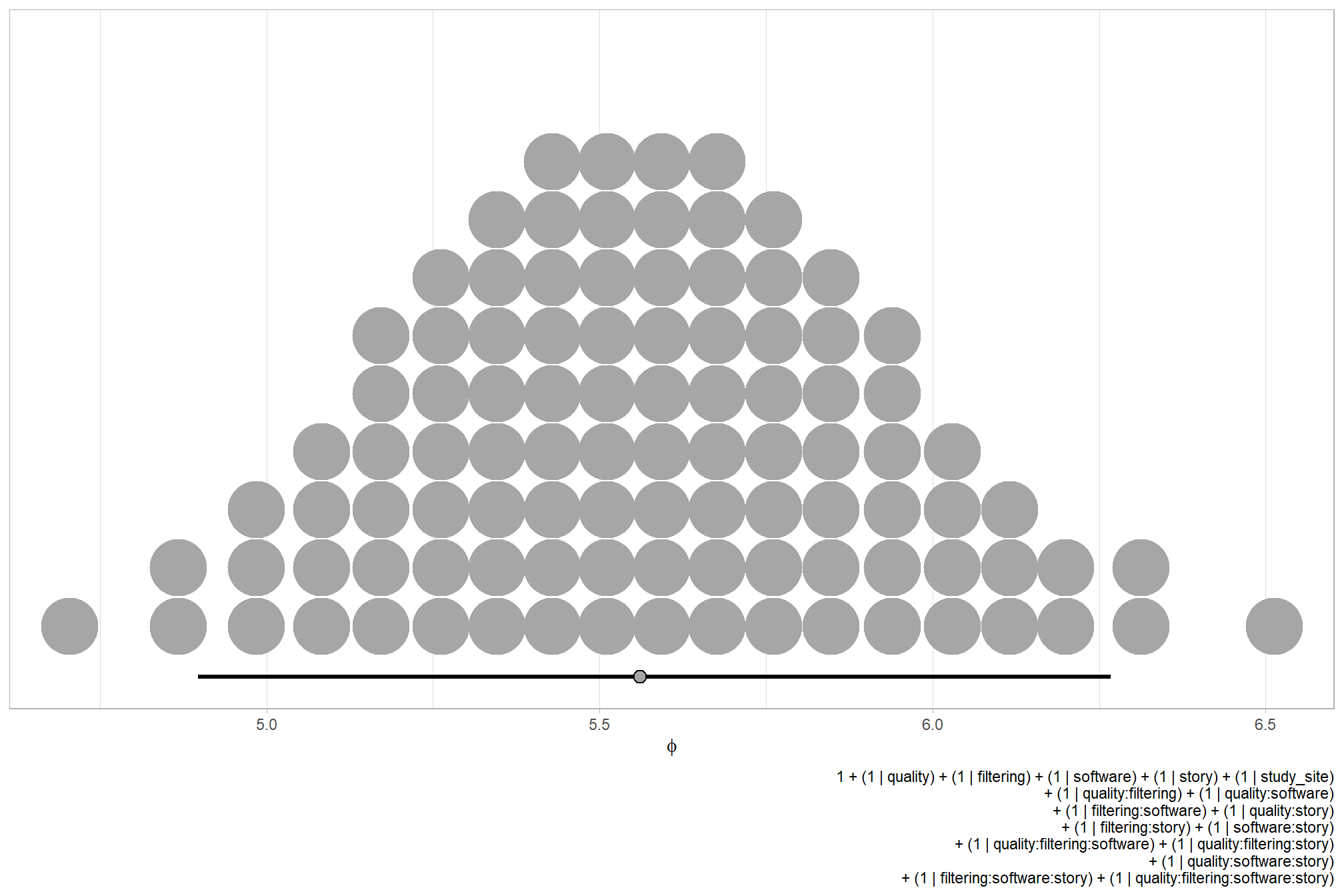

Finally we can perform model selection via information criteria, from section 10 in Kurz’s ebook supplement:

expected log predictive density (

elpd_loo), the estimated effective number of parameters (p_loo), and the Pareto smoothed importance-sampling leave-one-out cross-validation (PSIS-LOO;looic). Each estimate comes with a standard error (i.e.,SE). Like other information criteria, the LOO values aren’t of interest in and of themselves. However, the estimate of one model’s LOO relative to that of another can be of great interest. We generally prefer models with lower information criteria. With thebrms::loo_compare()function, we can compute a formal difference score between two models…Thebrms::loo_compare()output rank orders the models such that the best fitting model appears on top.

brms_f_mod1 = brms::add_criterion(brms_f_mod1, criterion = c("loo", "waic"))

brms_f_mod2 = brms::add_criterion(brms_f_mod2, criterion = c("loo", "waic"))

brms::loo_compare(brms_f_mod1, brms_f_mod2, criterion = "loo")## elpd_diff se_diff

## brms_f_mod1 0.0 0.0

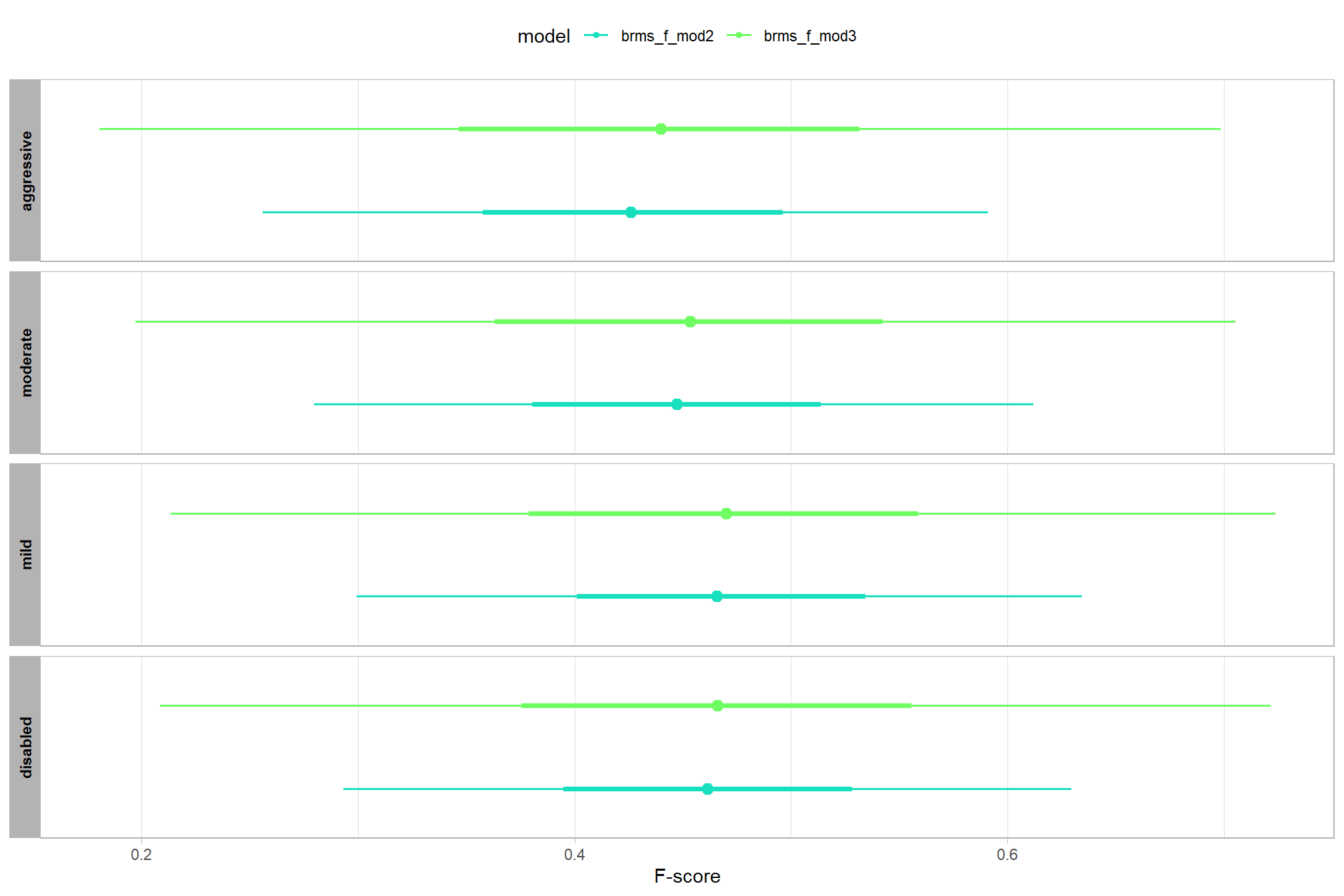

## brms_f_mod2 -1.7 1.2These models are not significantly different suggesting that the filtering mode is not contributing much to the variation in SfM-derived tree detection reliability after accounting for the quality setting

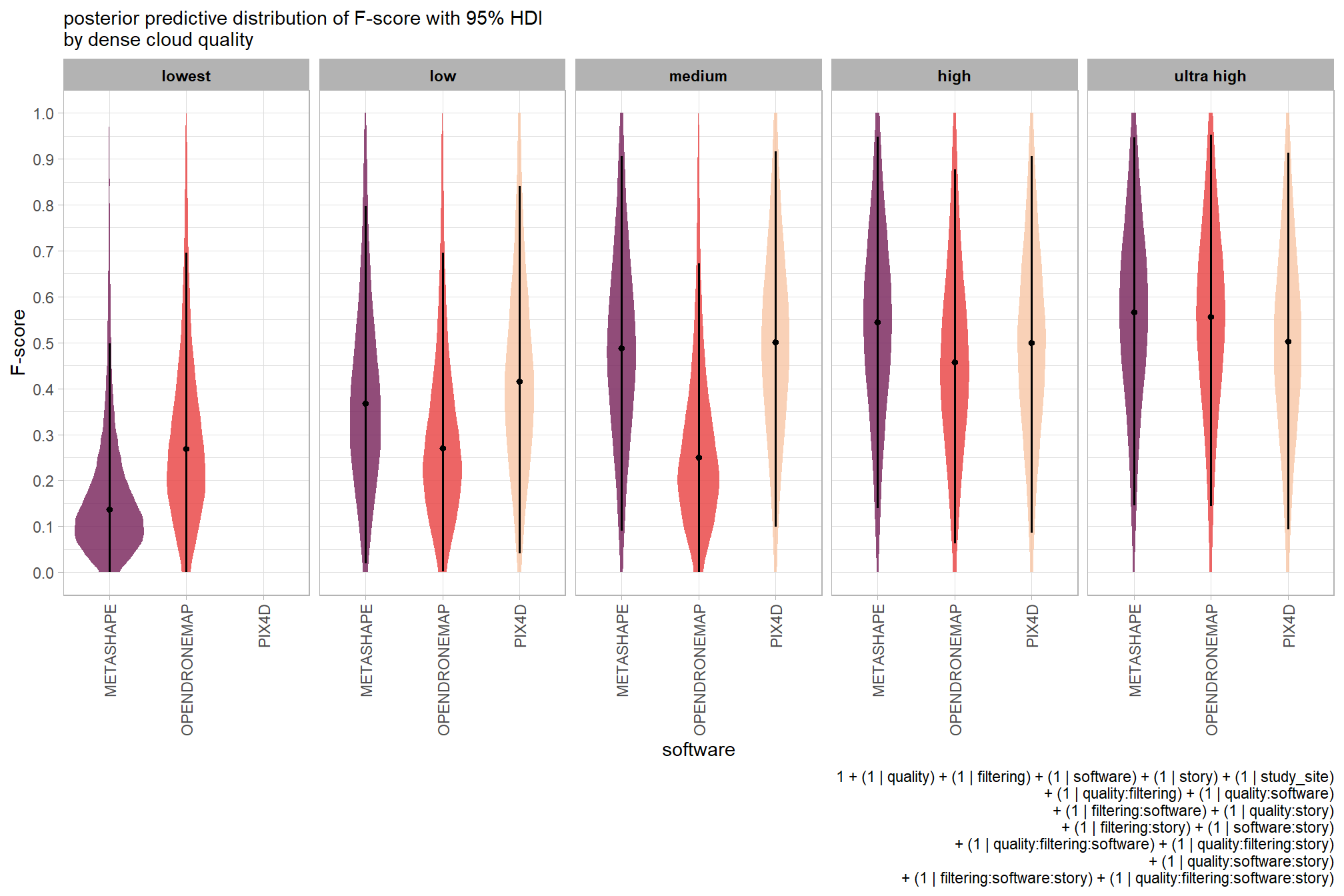

6.5 Three Nominal Predictors

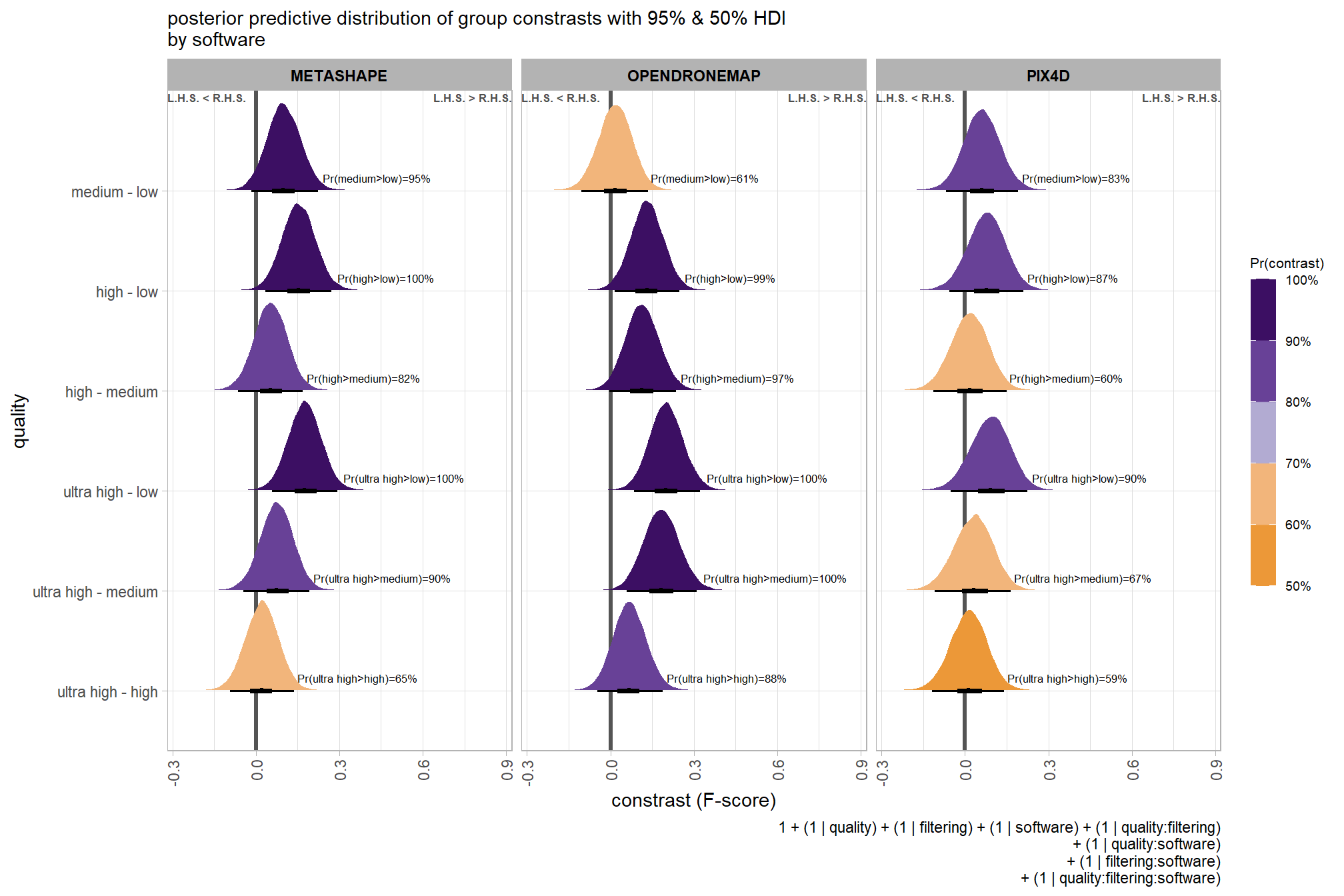

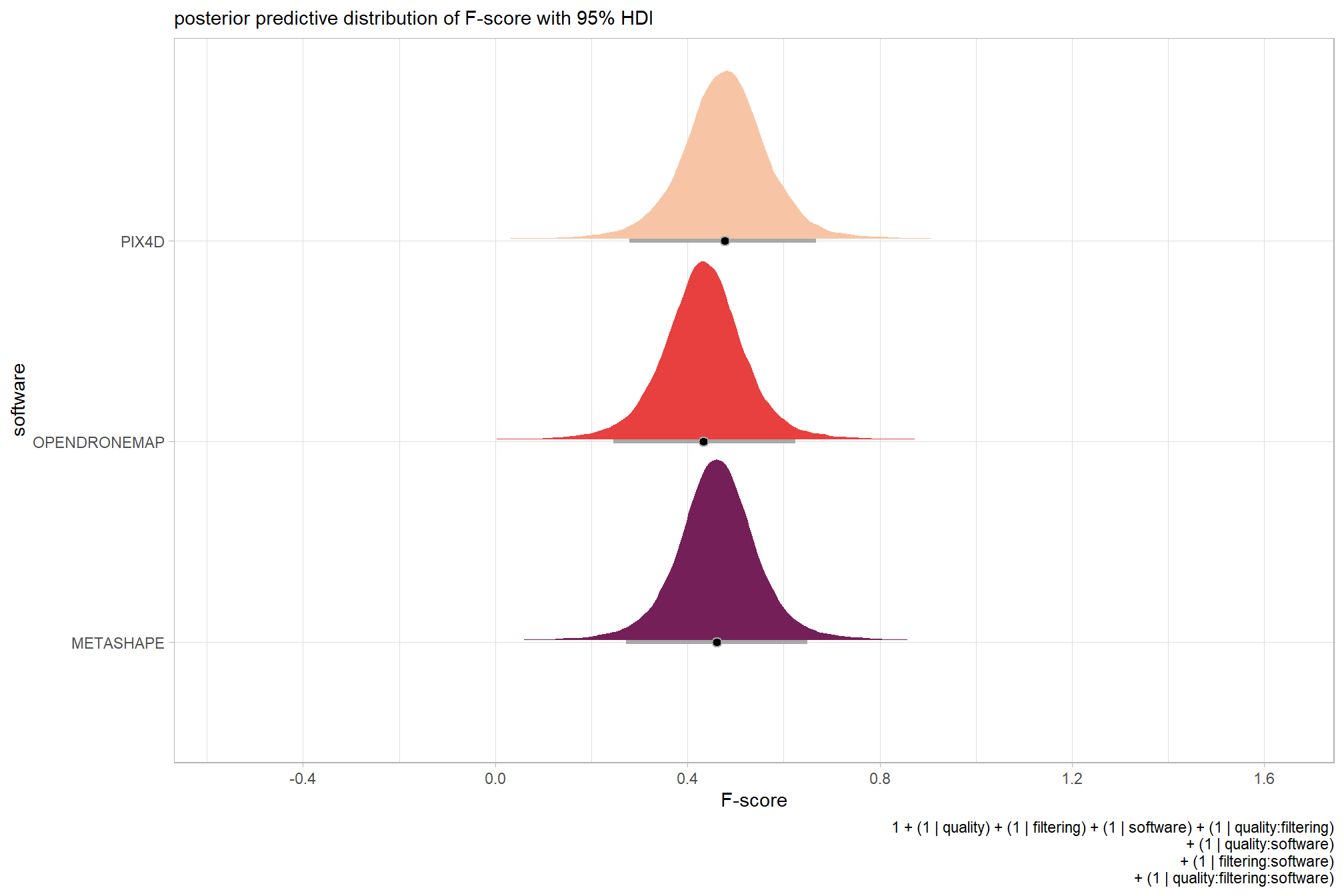

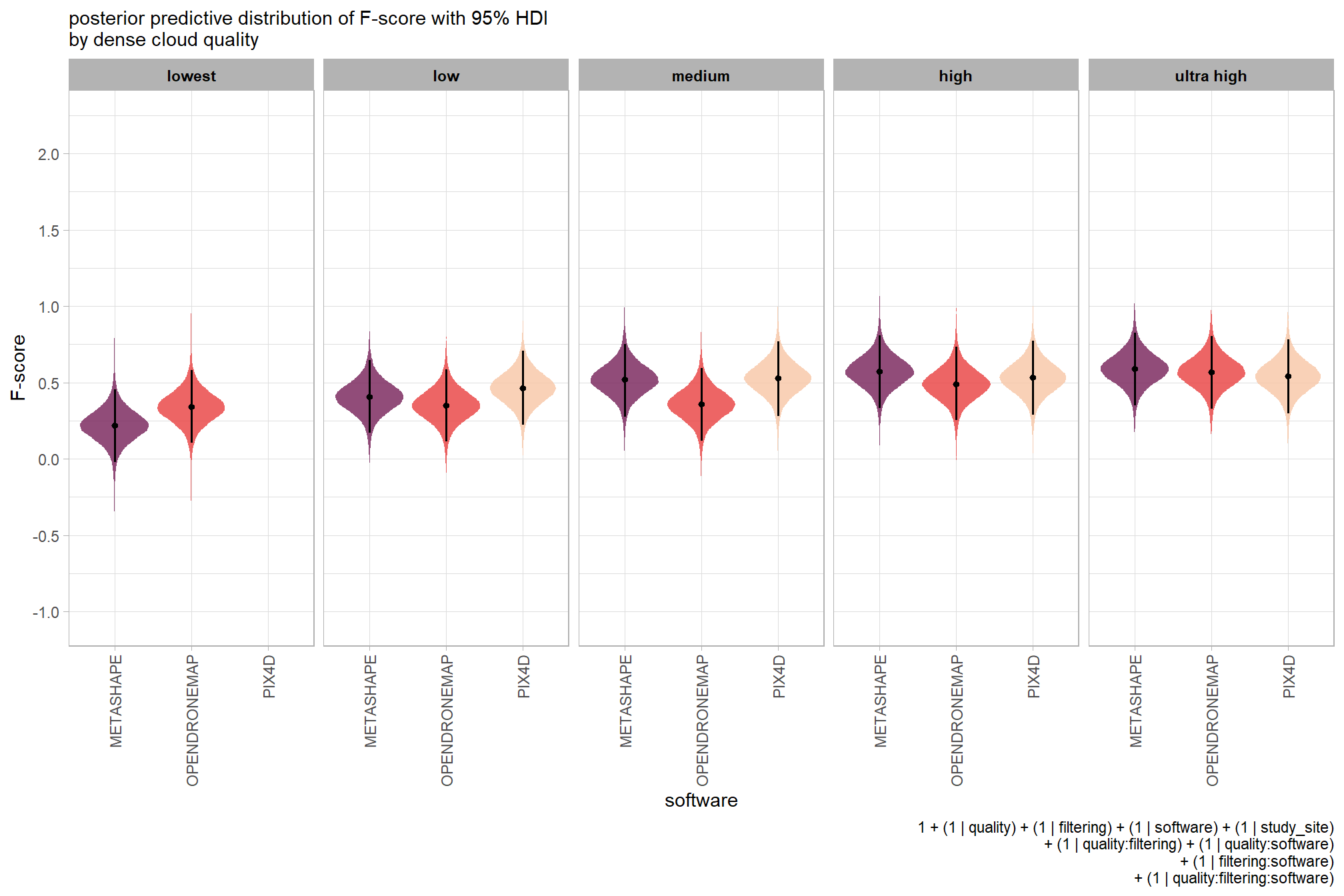

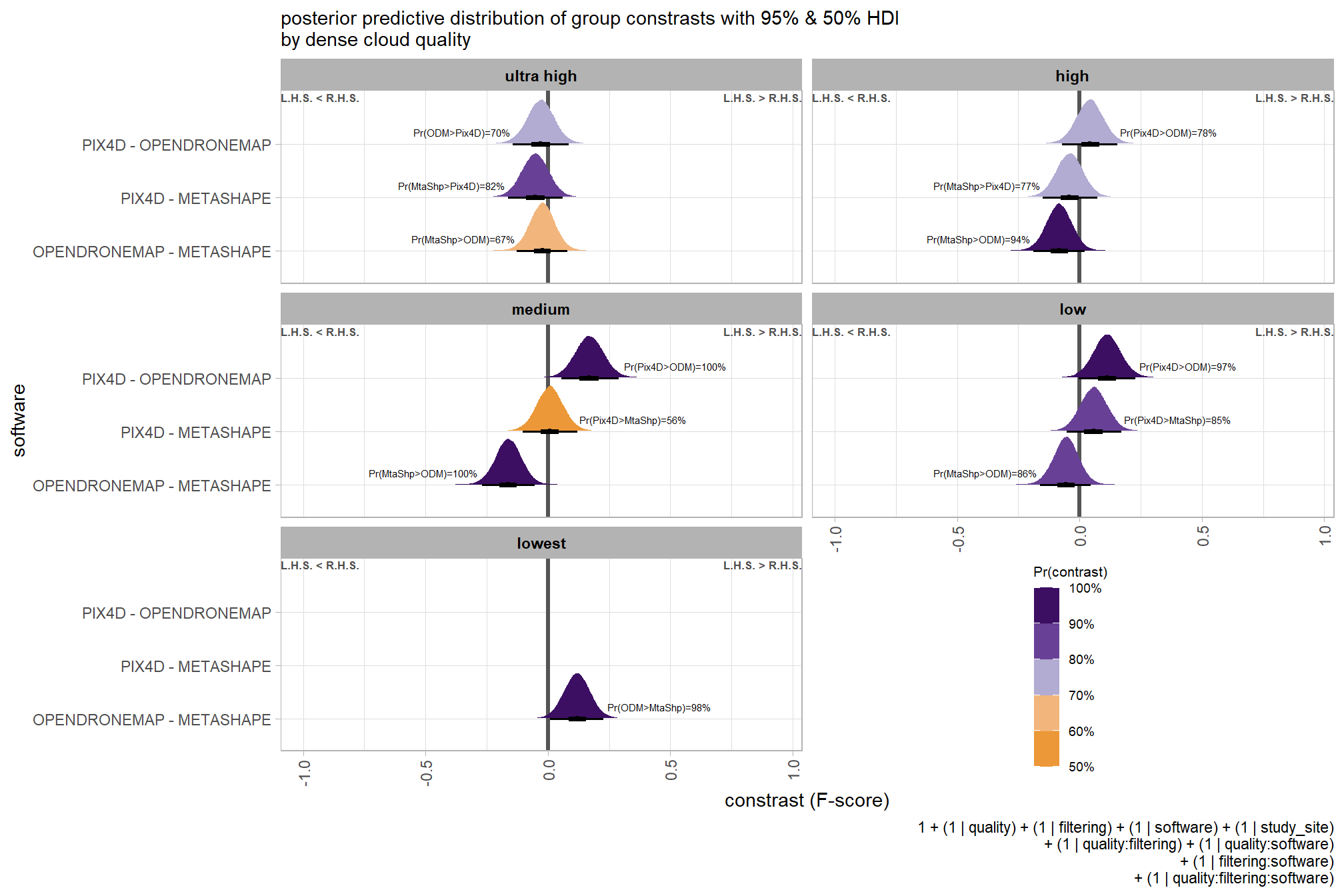

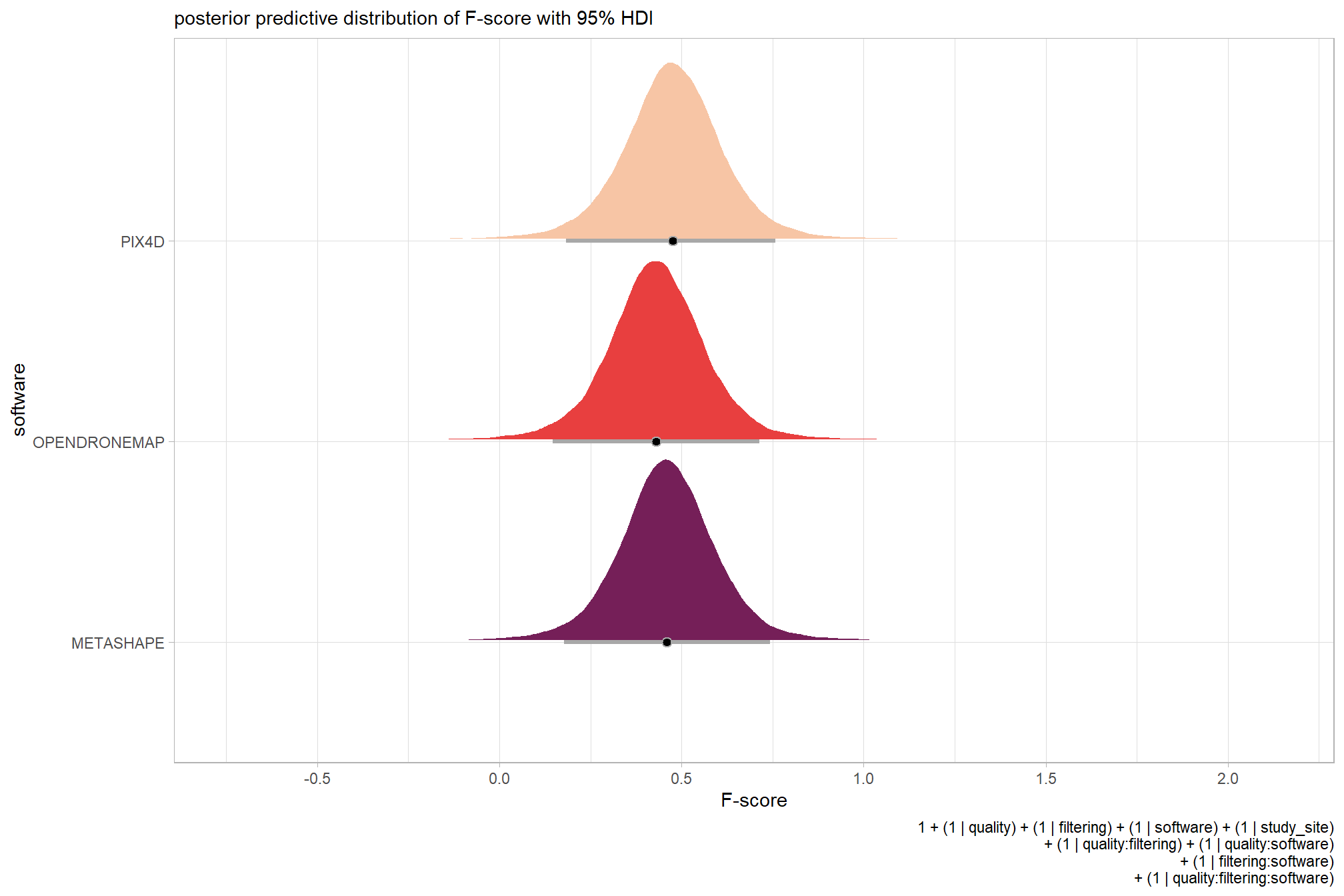

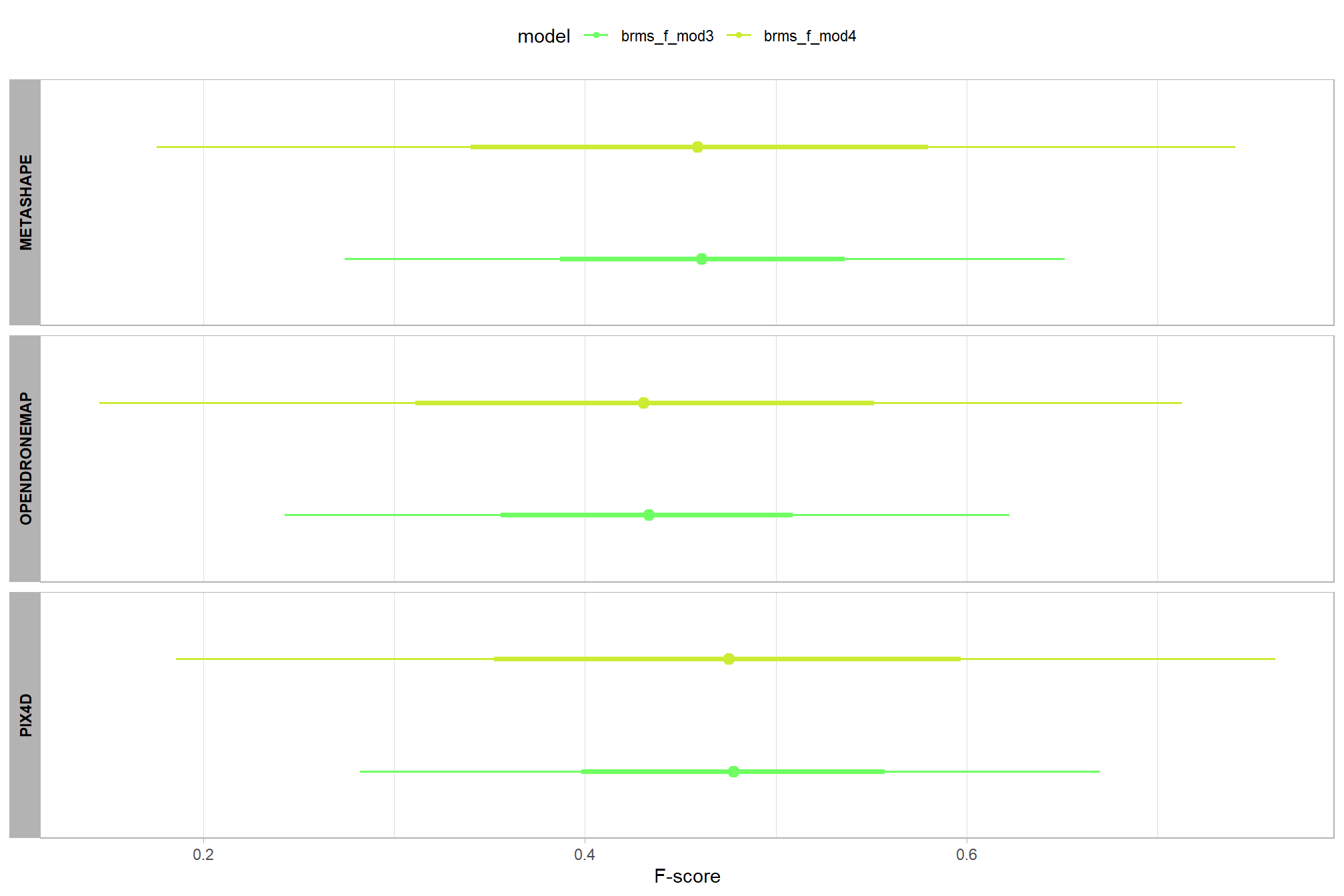

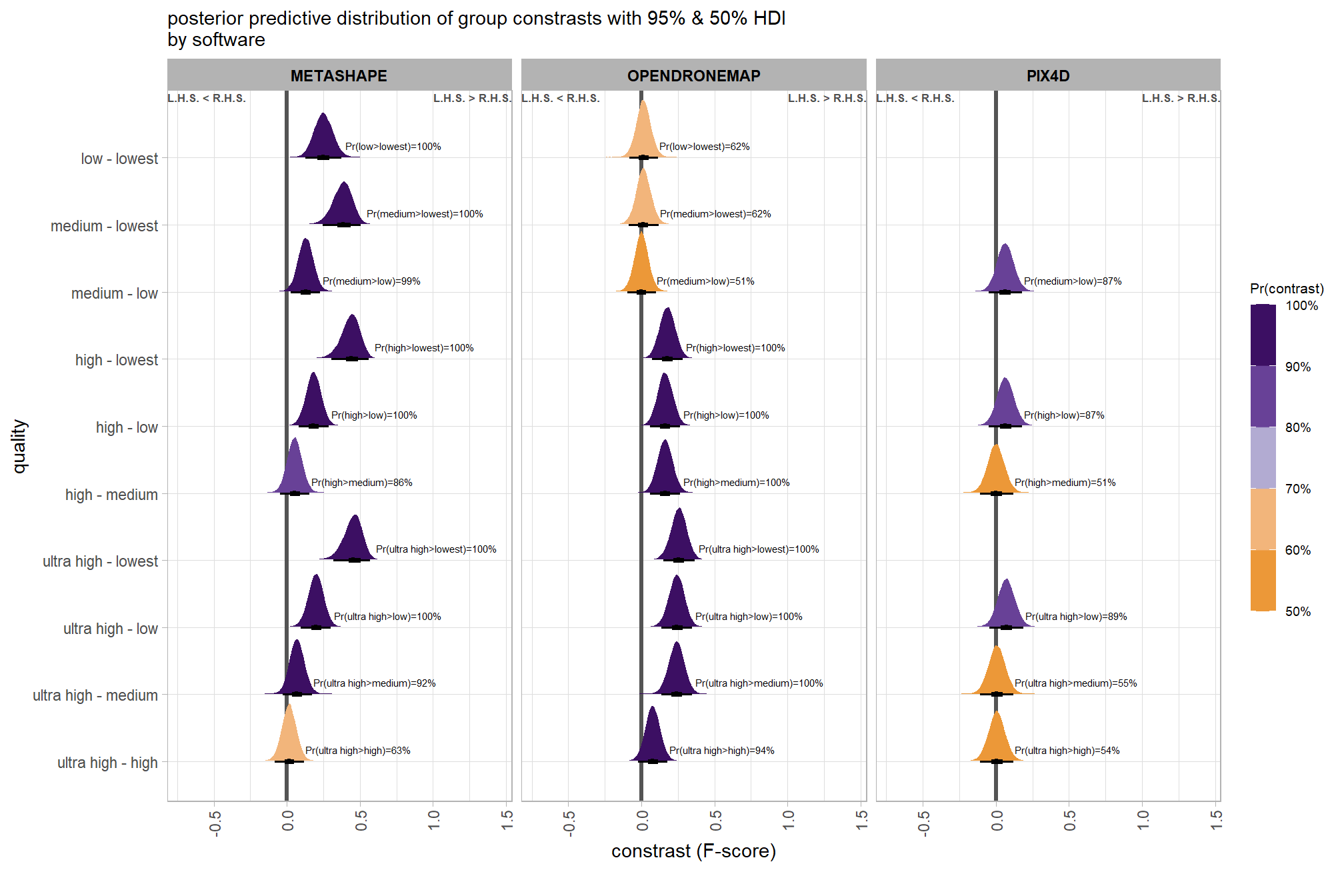

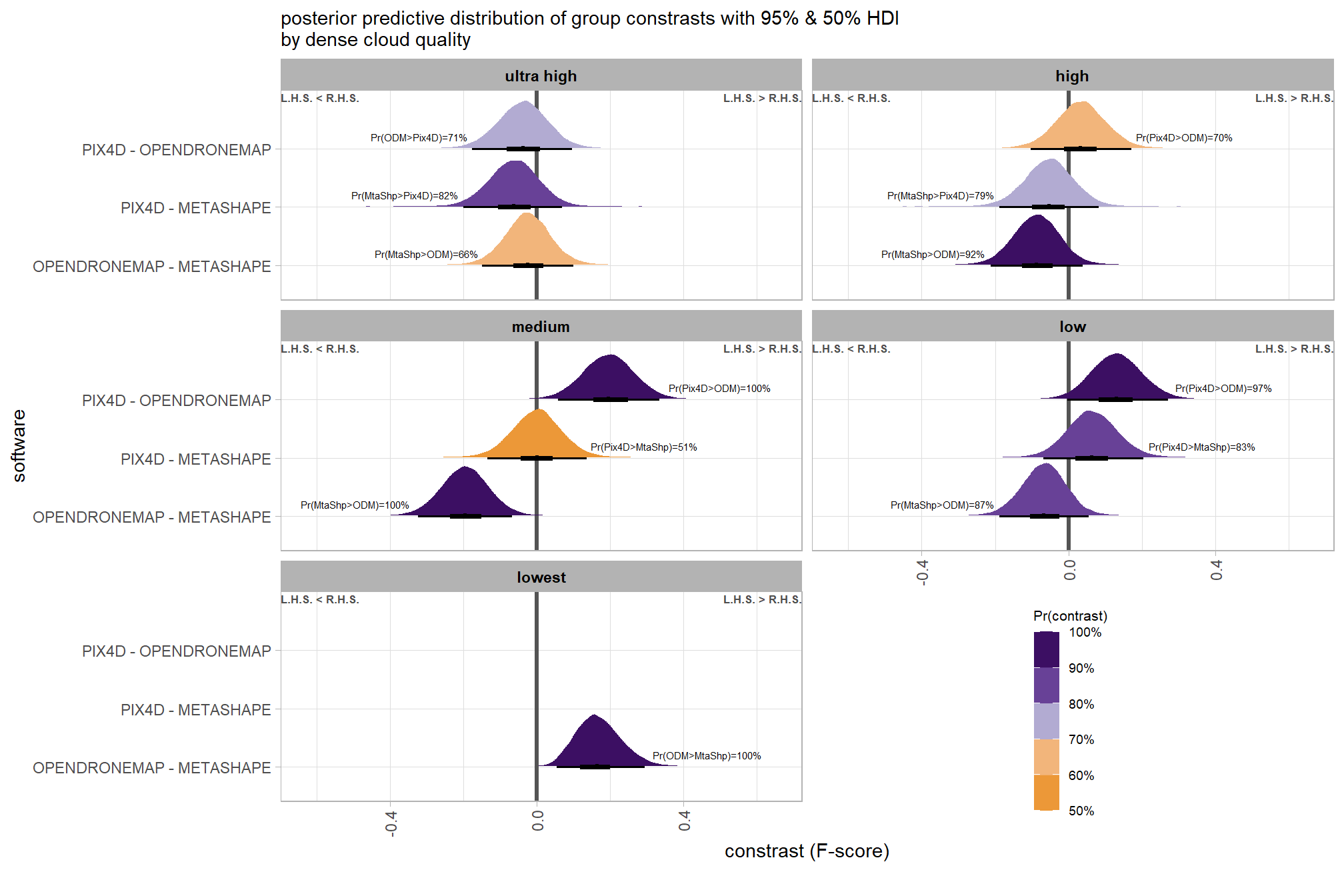

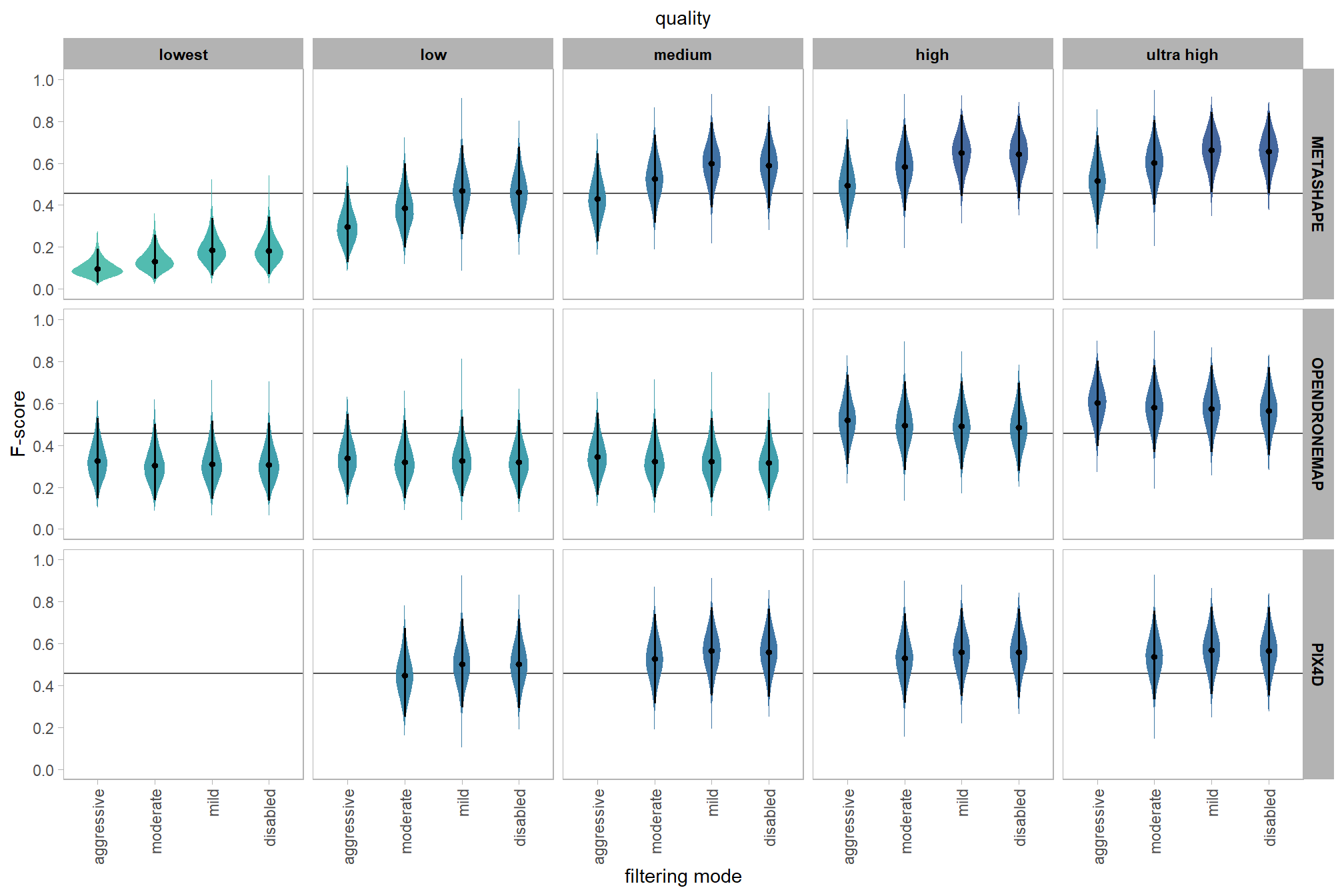

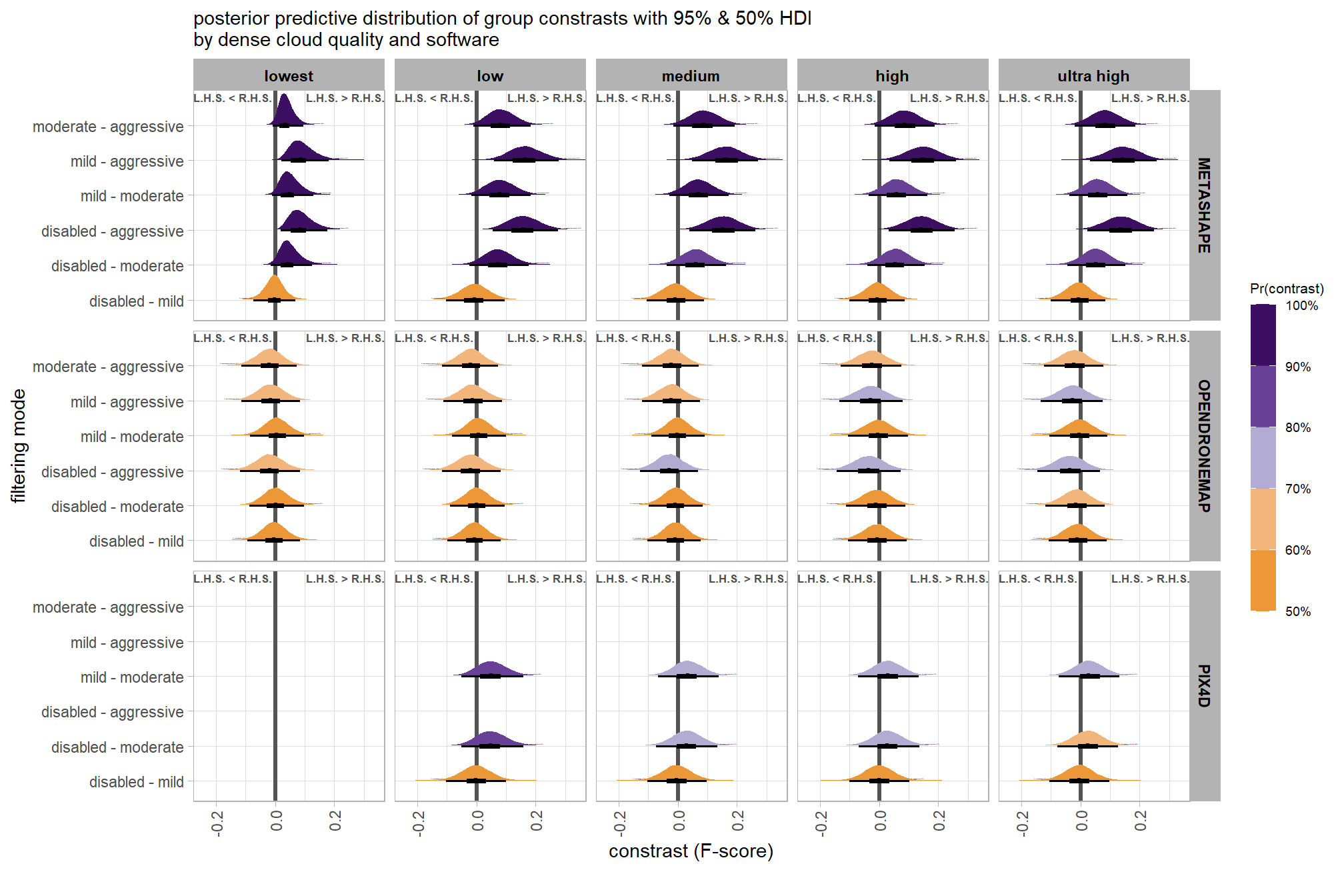

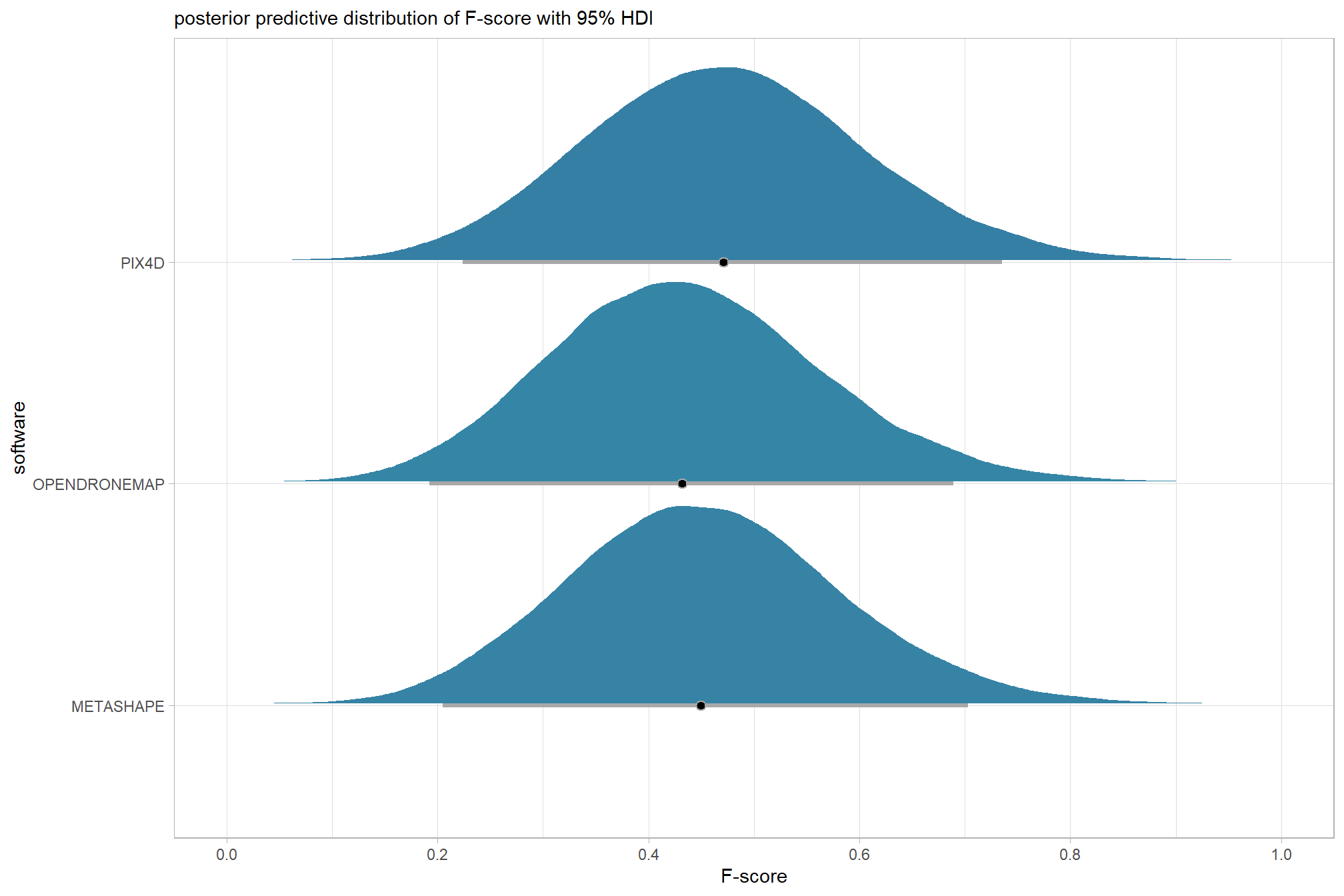

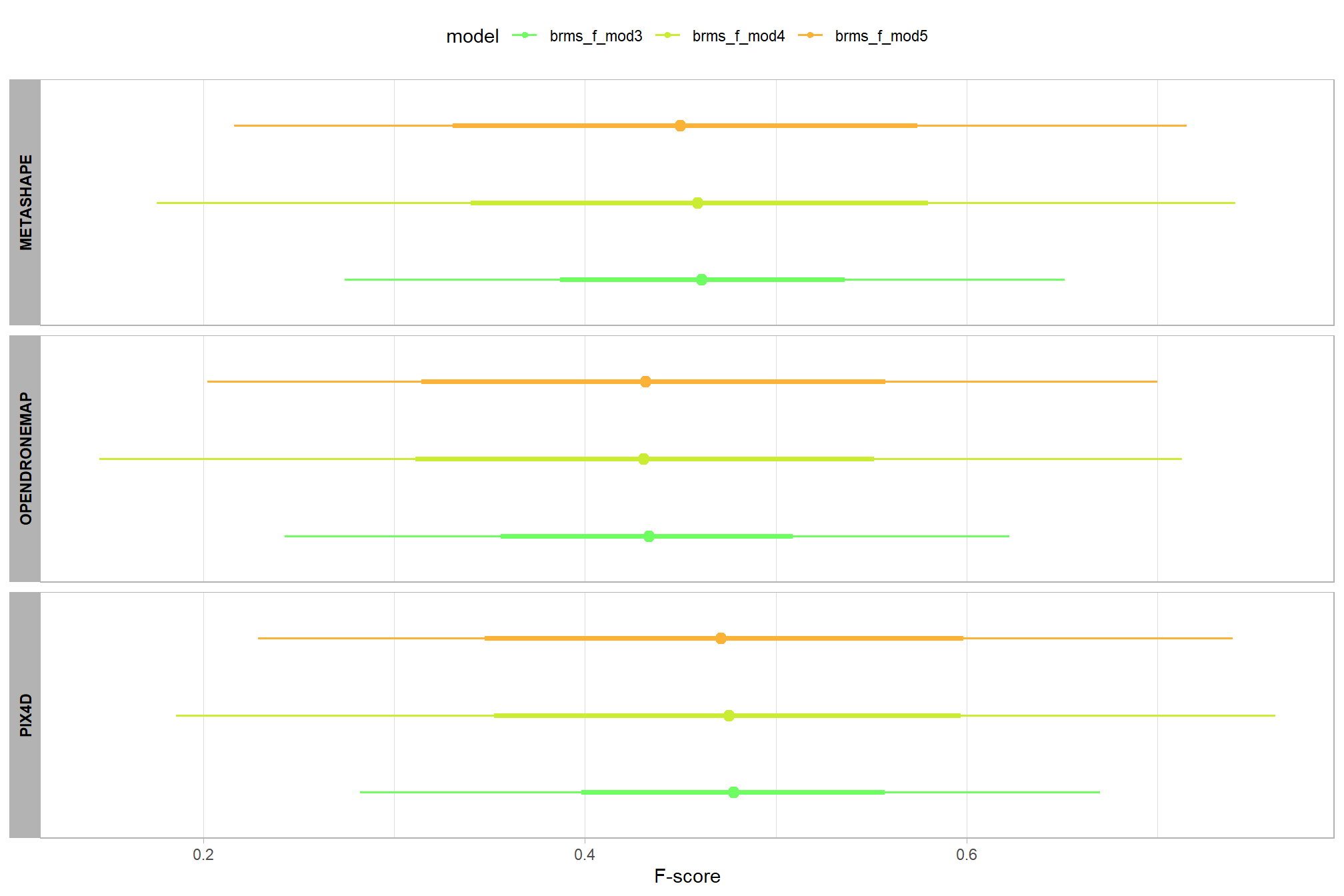

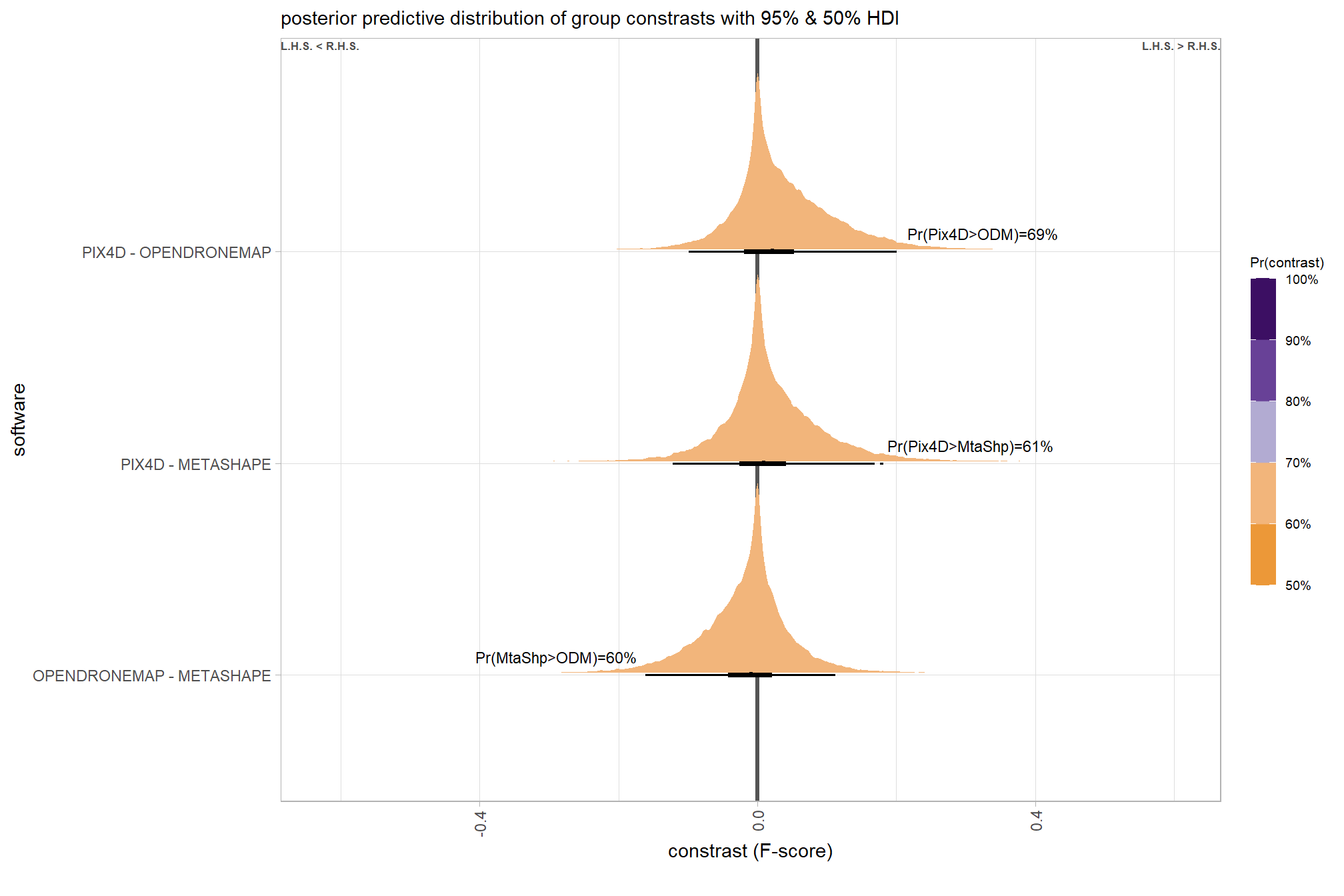

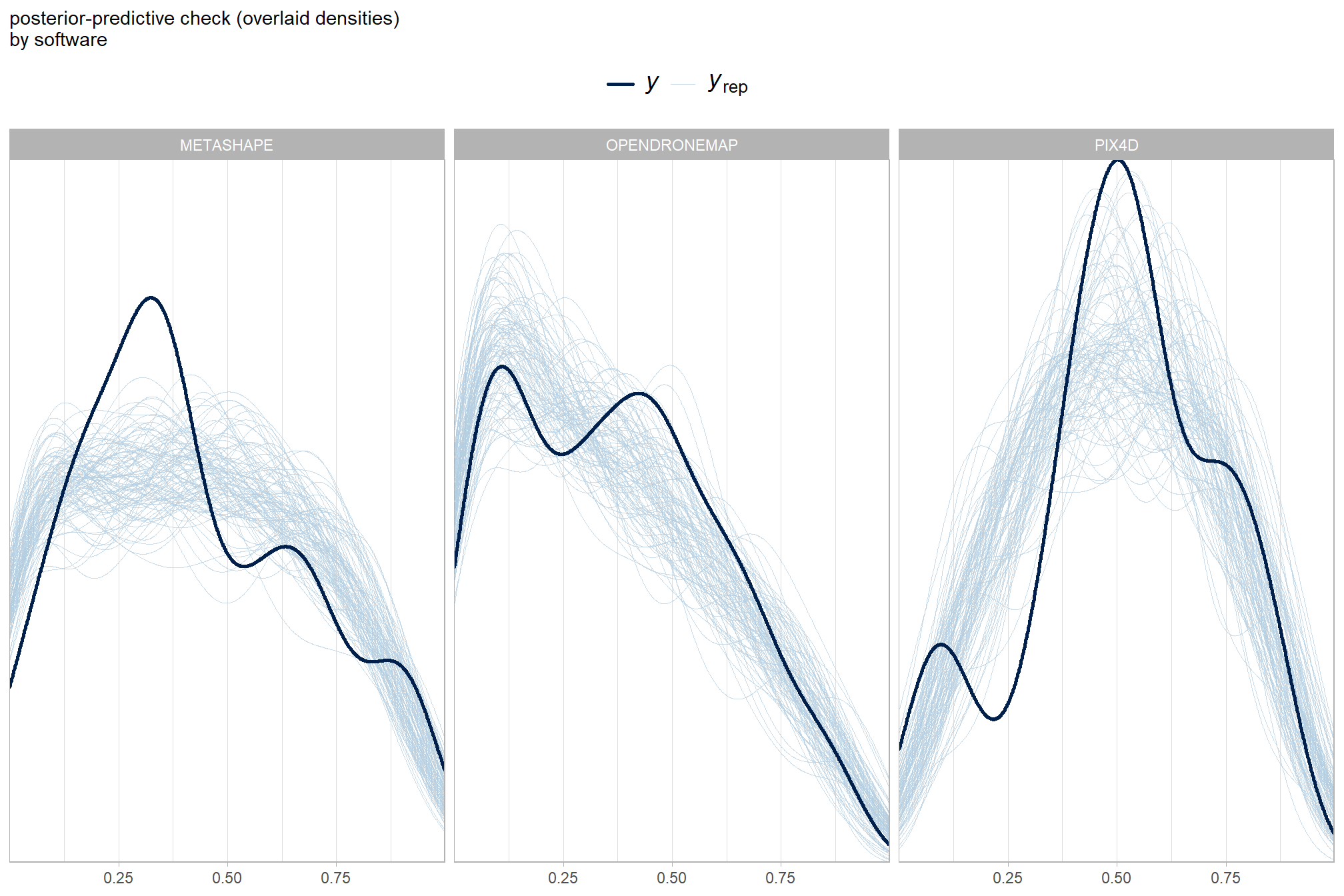

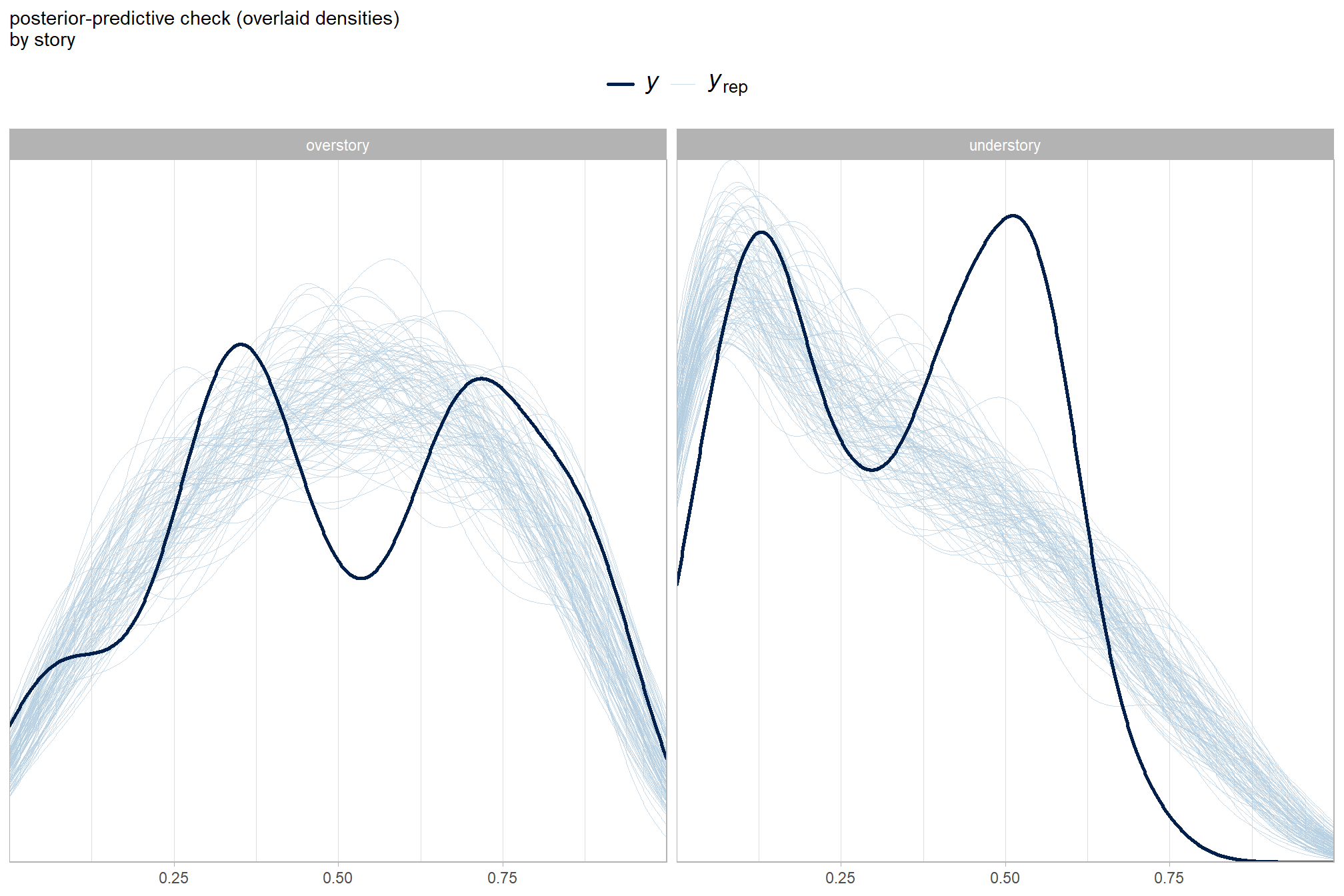

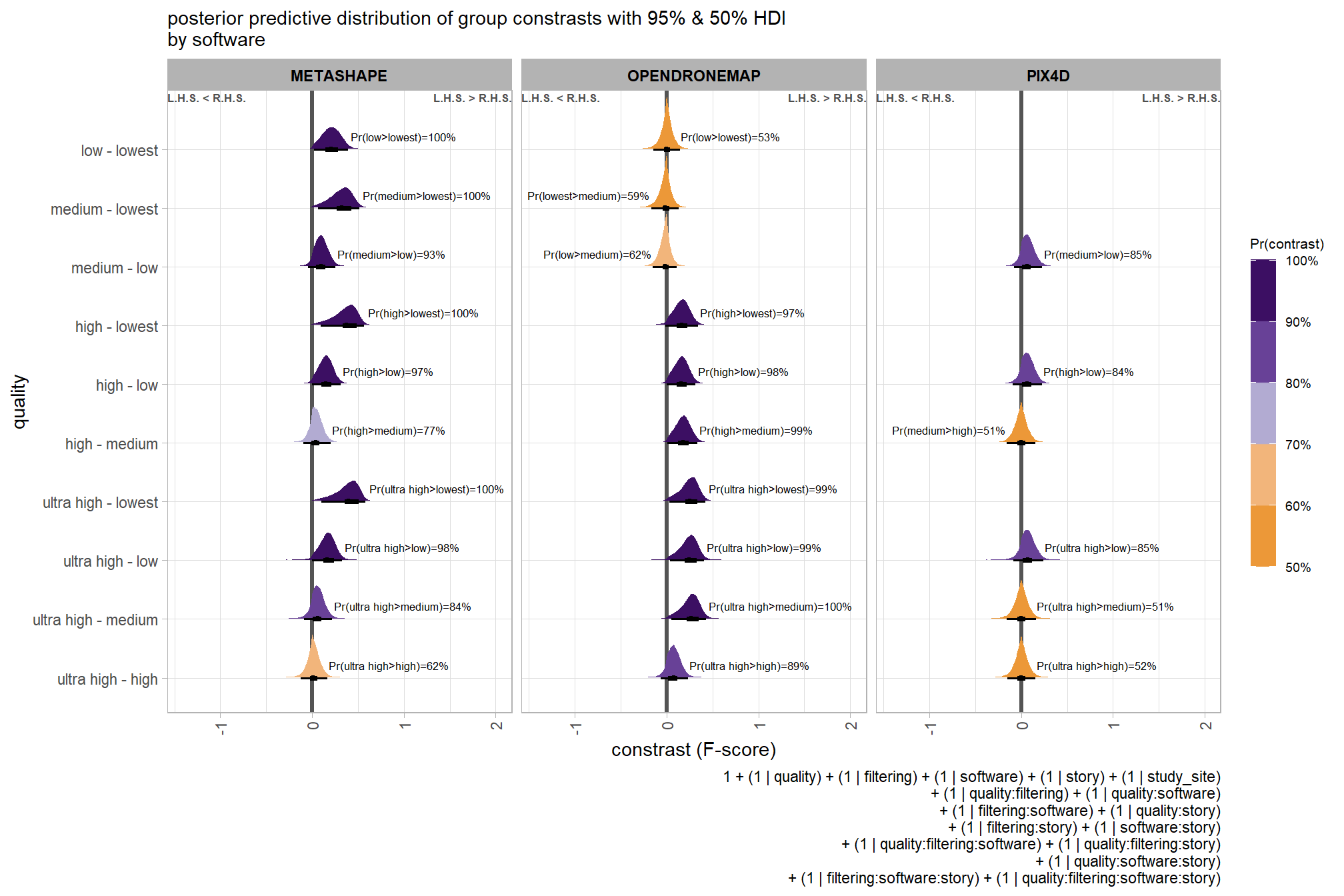

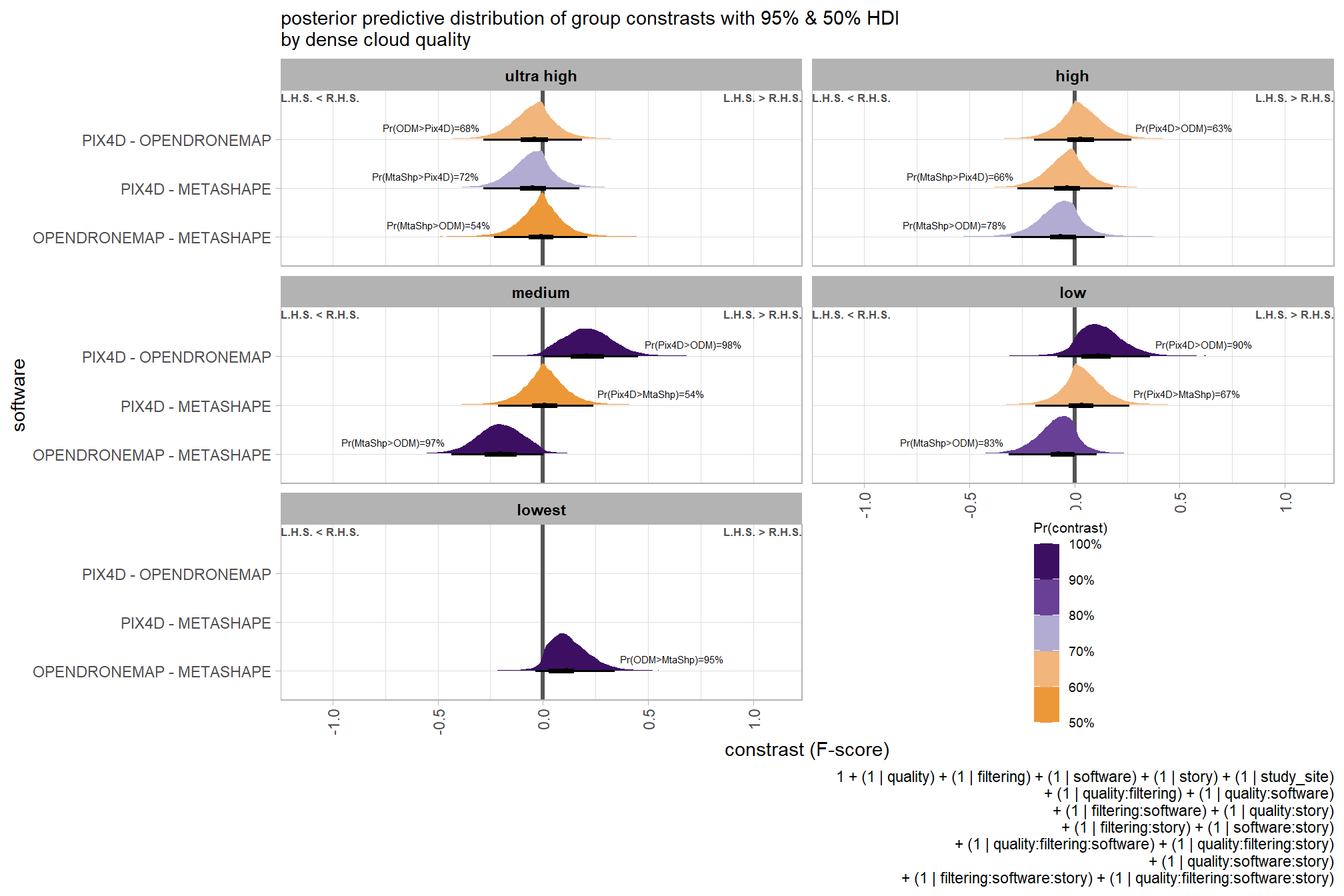

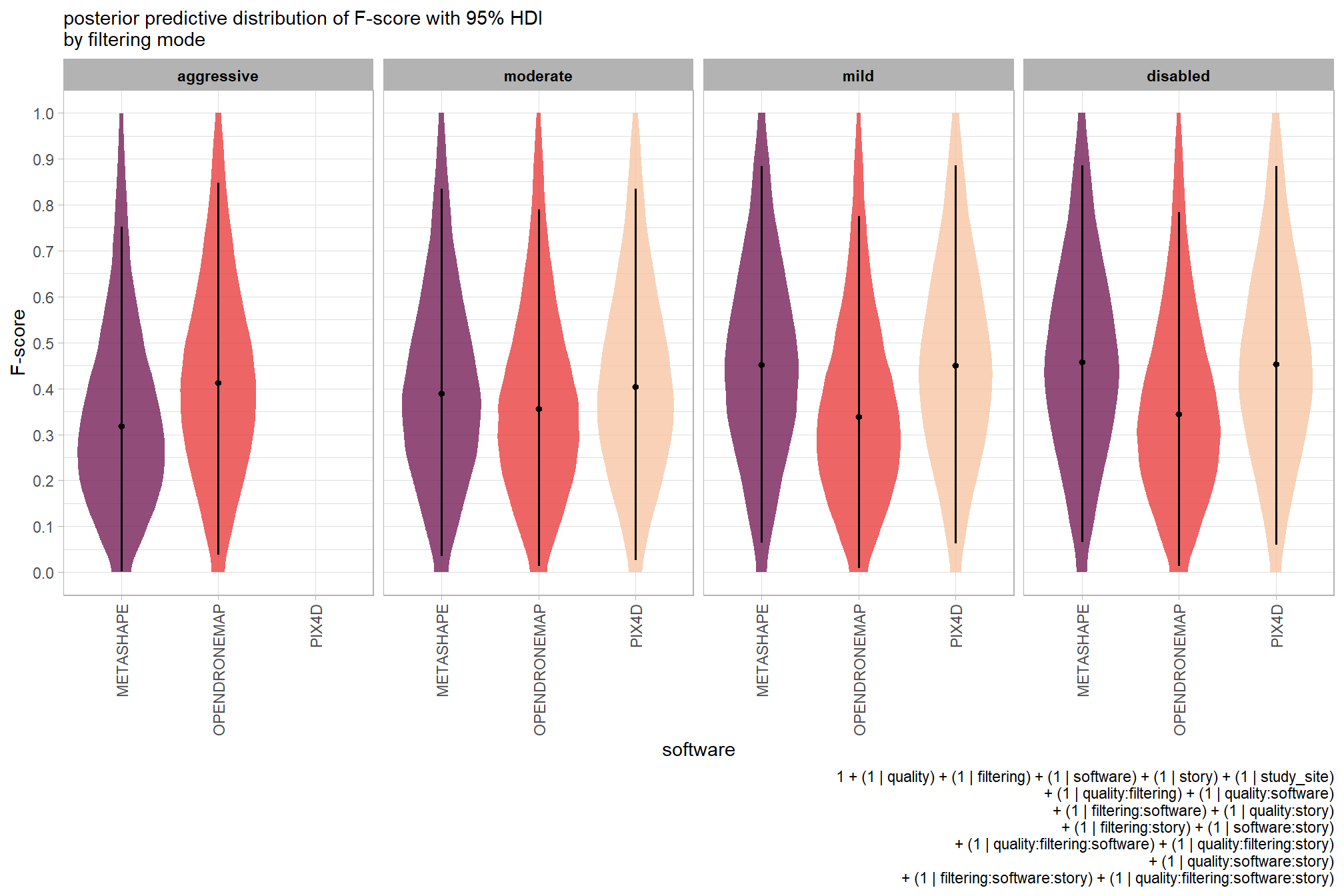

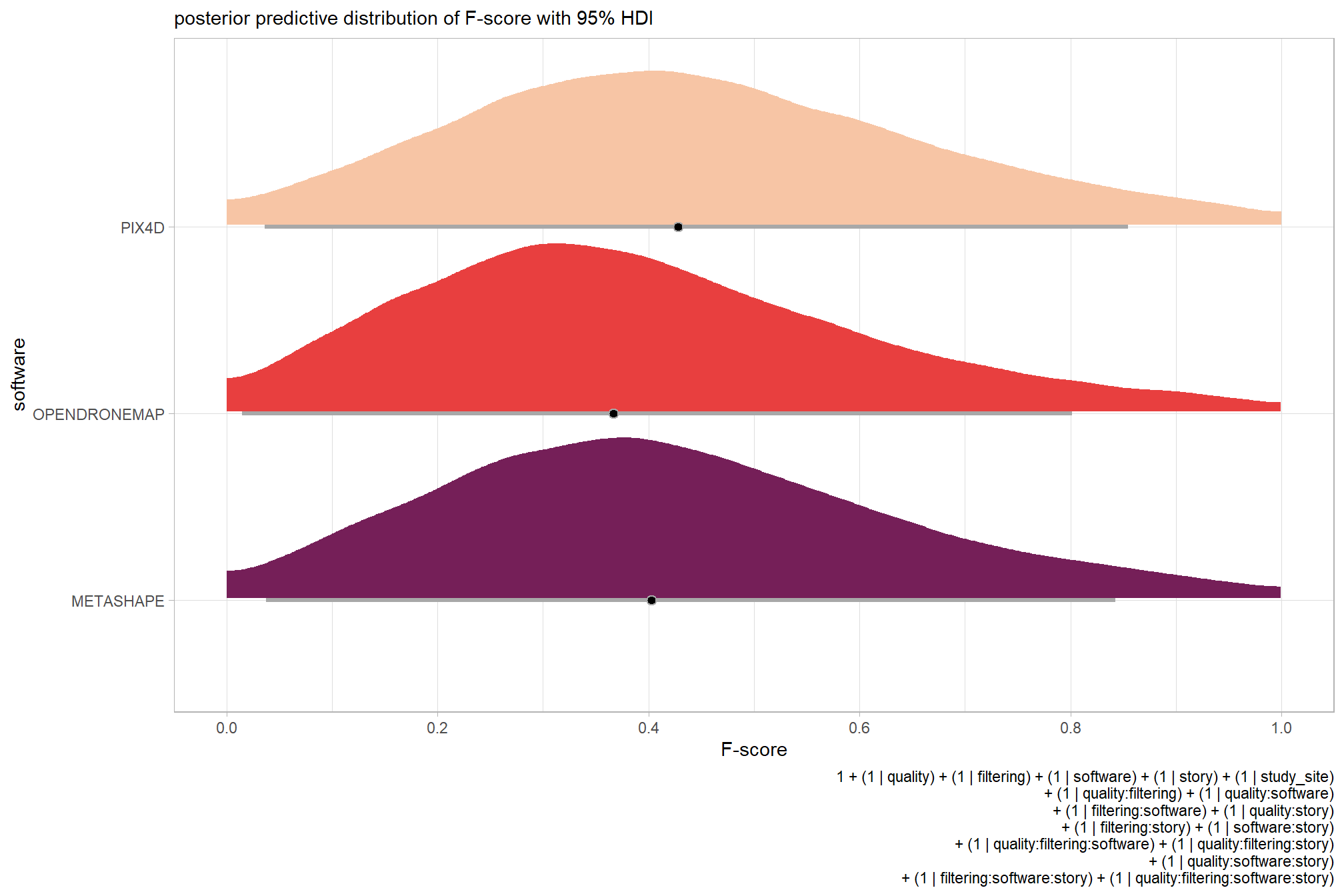

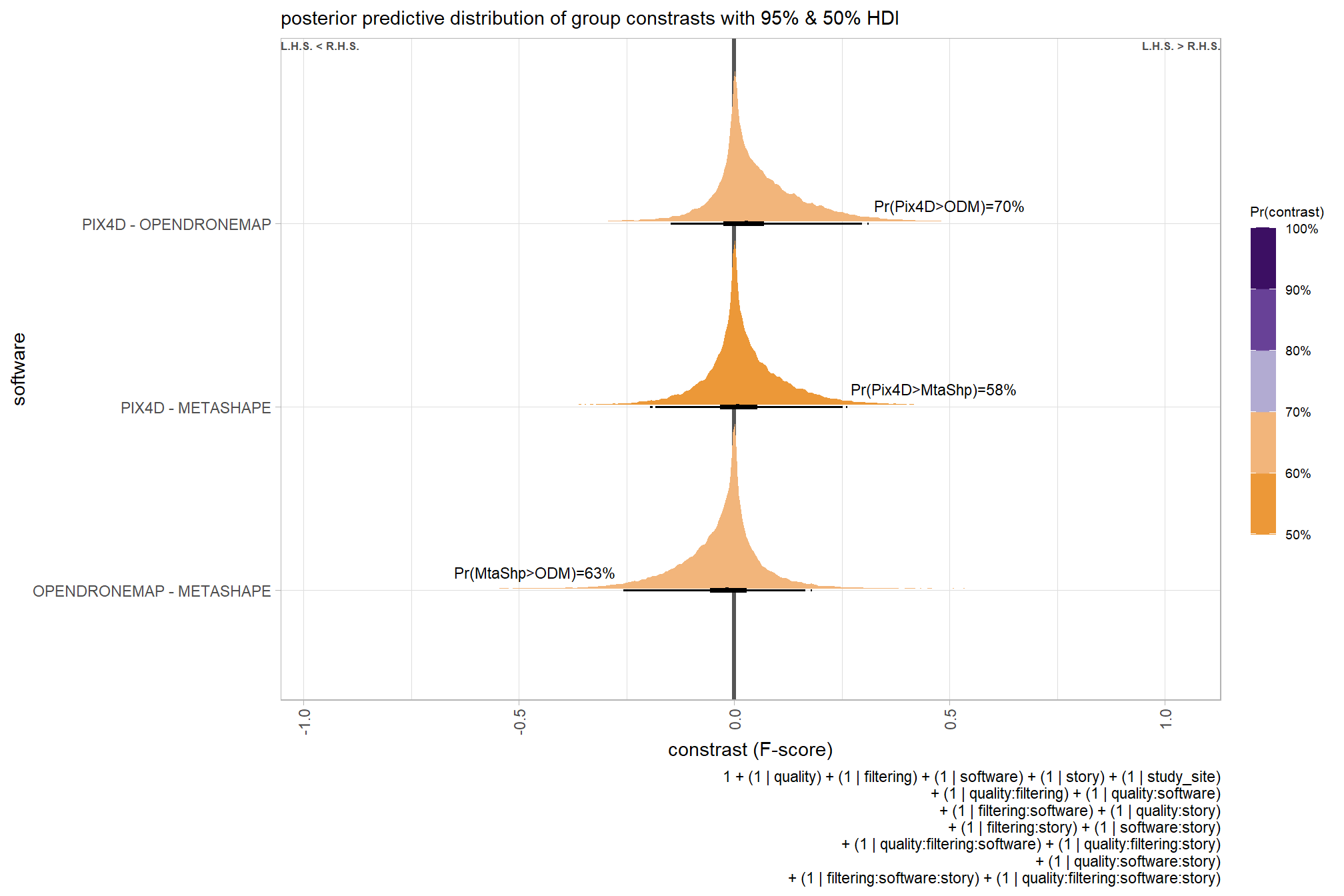

Now, we’ll add the SfM processing software to our model which includes the depth map generation quality and the depth map filtering parameters to quantify the SfM-derived tree detection performance based on the F-score.

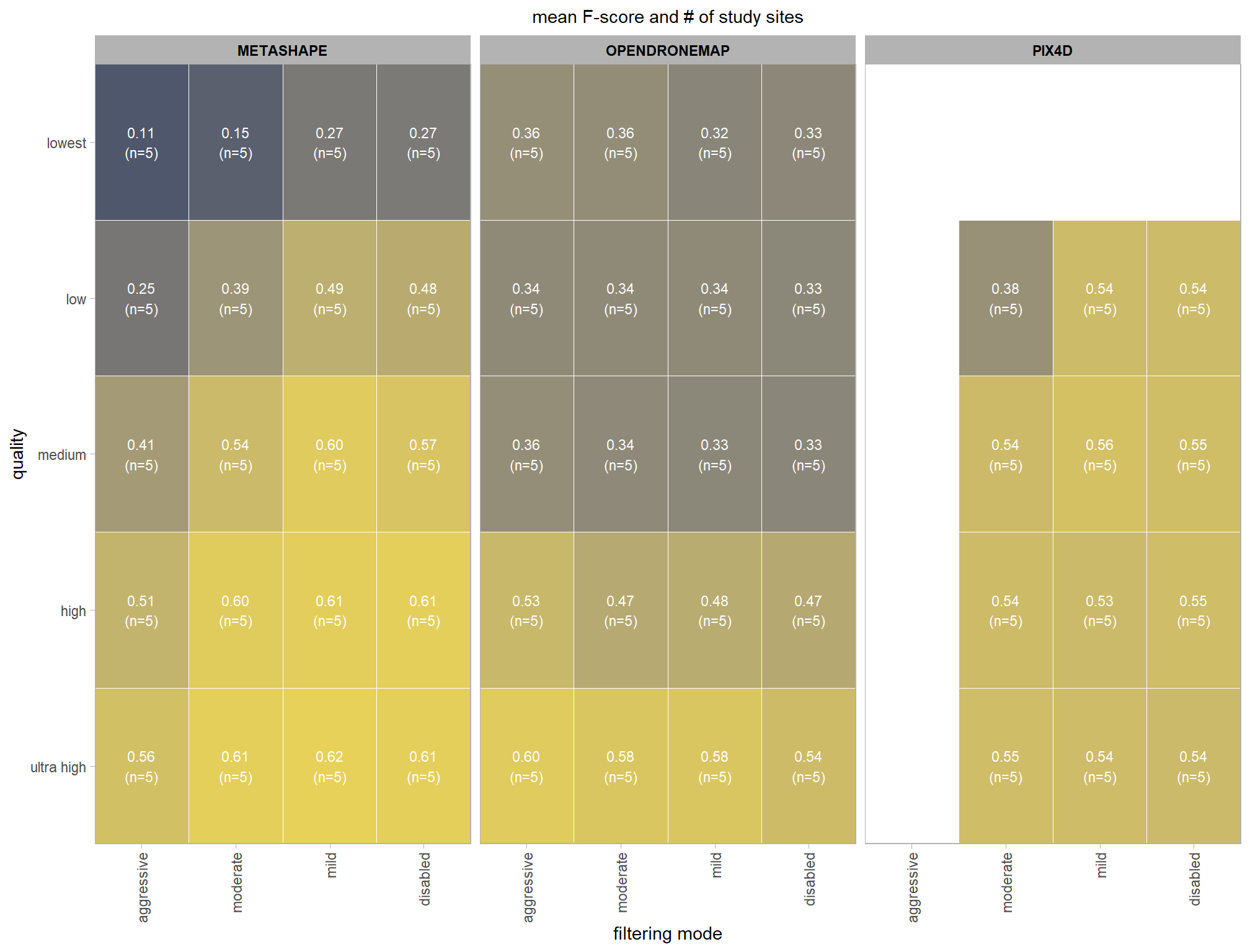

6.5.1 Summary Statistics

Summary statistics by group:

ptcld_validation_data %>%

dplyr::group_by(depth_maps_generation_quality, depth_maps_generation_filtering_mode, software) %>%

dplyr::summarise(

mean_f_score = mean(f_score, na.rm = T)

# , med_f_score = median(f_score, na.rm = T)

, sd_f_score = sd(f_score, na.rm = T)

, n = dplyr::n()

) %>%

kableExtra::kbl(

digits = 2

, caption = "summary statistics: F-score by dense cloud quality, filtering mode, and software"

, col.names = c(

"quality"

, "filtering mode"

, "software"

, "mean F-score"

, "sd"

, "n"

)

) %>%

kableExtra::kable_styling() %>%

kableExtra::scroll_box(height = "8in")| quality | filtering mode | software | mean F-score | sd | n |

|---|---|---|---|---|---|

| ultra high | aggressive | METASHAPE | 0.56 | 0.21 | 5 |

| ultra high | aggressive | OPENDRONEMAP | 0.60 | 0.21 | 5 |

| ultra high | moderate | METASHAPE | 0.61 | 0.23 | 5 |

| ultra high | moderate | OPENDRONEMAP | 0.58 | 0.20 | 5 |

| ultra high | moderate | PIX4D | 0.55 | 0.24 | 5 |

| ultra high | mild | METASHAPE | 0.62 | 0.23 | 5 |

| ultra high | mild | OPENDRONEMAP | 0.58 | 0.19 | 5 |

| ultra high | mild | PIX4D | 0.54 | 0.24 | 5 |

| ultra high | disabled | METASHAPE | 0.61 | 0.24 | 5 |

| ultra high | disabled | OPENDRONEMAP | 0.54 | 0.23 | 5 |

| ultra high | disabled | PIX4D | 0.54 | 0.25 | 5 |

| high | aggressive | METASHAPE | 0.51 | 0.19 | 5 |

| high | aggressive | OPENDRONEMAP | 0.53 | 0.17 | 5 |

| high | moderate | METASHAPE | 0.60 | 0.24 | 5 |

| high | moderate | OPENDRONEMAP | 0.47 | 0.21 | 5 |

| high | moderate | PIX4D | 0.54 | 0.24 | 5 |

| high | mild | METASHAPE | 0.61 | 0.24 | 5 |

| high | mild | OPENDRONEMAP | 0.48 | 0.21 | 5 |

| high | mild | PIX4D | 0.53 | 0.24 | 5 |

| high | disabled | METASHAPE | 0.61 | 0.24 | 5 |

| high | disabled | OPENDRONEMAP | 0.47 | 0.22 | 5 |

| high | disabled | PIX4D | 0.55 | 0.25 | 5 |

| medium | aggressive | METASHAPE | 0.41 | 0.18 | 5 |

| medium | aggressive | OPENDRONEMAP | 0.36 | 0.15 | 5 |

| medium | moderate | METASHAPE | 0.54 | 0.20 | 5 |

| medium | moderate | OPENDRONEMAP | 0.34 | 0.20 | 5 |

| medium | moderate | PIX4D | 0.54 | 0.23 | 5 |

| medium | mild | METASHAPE | 0.60 | 0.25 | 5 |

| medium | mild | OPENDRONEMAP | 0.33 | 0.19 | 5 |

| medium | mild | PIX4D | 0.56 | 0.25 | 5 |

| medium | disabled | METASHAPE | 0.57 | 0.25 | 5 |

| medium | disabled | OPENDRONEMAP | 0.33 | 0.22 | 5 |

| medium | disabled | PIX4D | 0.55 | 0.24 | 5 |

| low | aggressive | METASHAPE | 0.25 | 0.12 | 5 |

| low | aggressive | OPENDRONEMAP | 0.34 | 0.13 | 5 |

| low | moderate | METASHAPE | 0.39 | 0.20 | 5 |

| low | moderate | OPENDRONEMAP | 0.34 | 0.20 | 5 |

| low | moderate | PIX4D | 0.38 | 0.17 | 5 |

| low | mild | METASHAPE | 0.49 | 0.21 | 5 |

| low | mild | OPENDRONEMAP | 0.34 | 0.21 | 5 |

| low | mild | PIX4D | 0.54 | 0.24 | 5 |

| low | disabled | METASHAPE | 0.48 | 0.24 | 5 |

| low | disabled | OPENDRONEMAP | 0.33 | 0.22 | 5 |

| low | disabled | PIX4D | 0.54 | 0.24 | 5 |

| lowest | aggressive | METASHAPE | 0.11 | 0.10 | 5 |

| lowest | aggressive | OPENDRONEMAP | 0.36 | 0.16 | 5 |

| lowest | moderate | METASHAPE | 0.15 | 0.10 | 5 |

| lowest | moderate | OPENDRONEMAP | 0.36 | 0.22 | 5 |

| lowest | mild | METASHAPE | 0.27 | 0.19 | 5 |

| lowest | mild | OPENDRONEMAP | 0.32 | 0.19 | 5 |

| lowest | disabled | METASHAPE | 0.27 | 0.22 | 5 |

| lowest | disabled | OPENDRONEMAP | 0.33 | 0.22 | 5 |

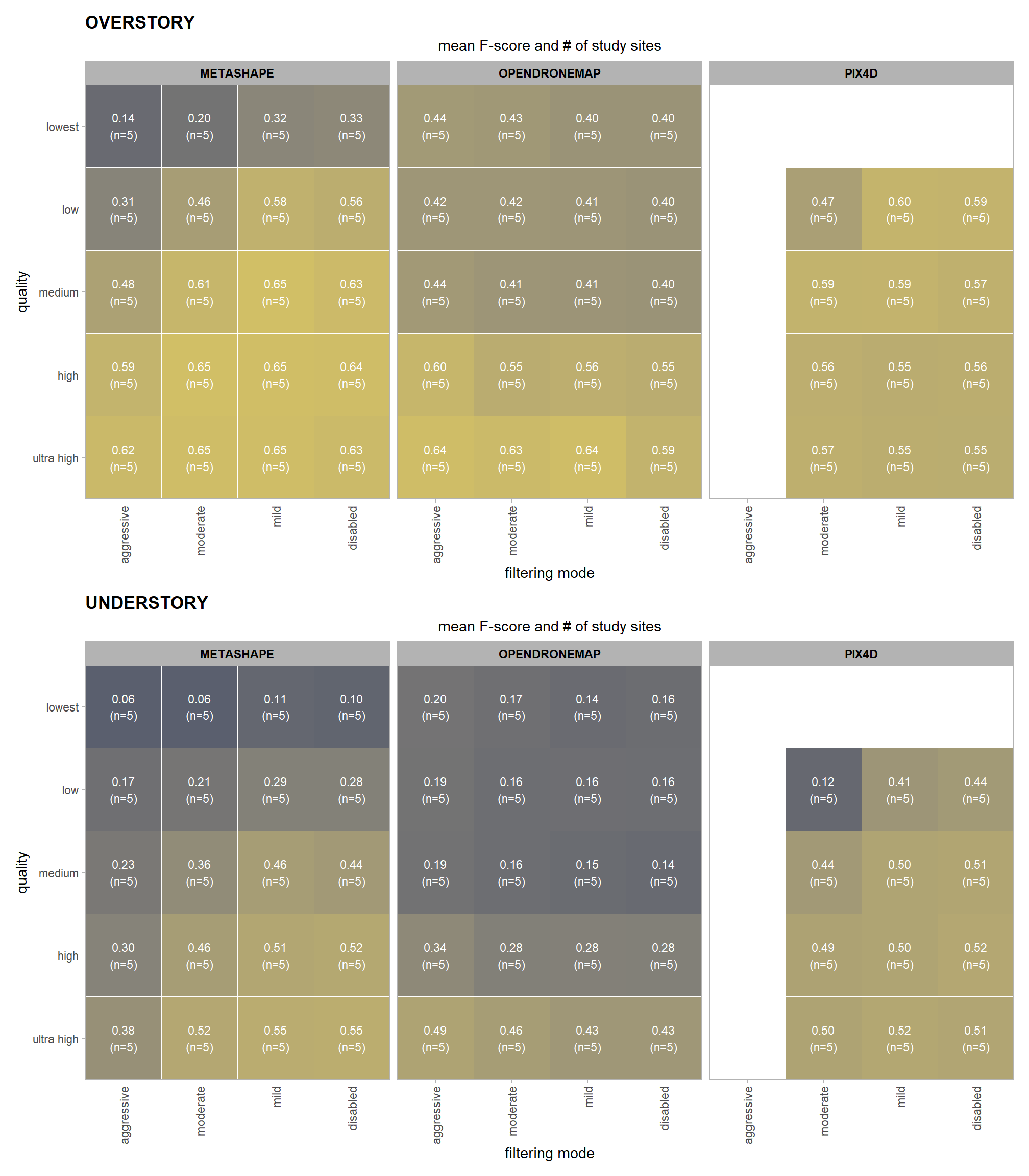

we can view this data by using ggplot2::geom_tile

ptcld_validation_data %>%

dplyr::group_by(software, depth_maps_generation_quality, depth_maps_generation_filtering_mode) %>%

# collapse across study site

dplyr::summarise(

mean_f_score = mean(f_score, na.rm = T)

# , med_f_score = median(f_score, na.rm = T)

, sd_f_score = sd(f_score, na.rm = T)

, n = dplyr::n()

) %>%

ggplot(mapping = aes(

y = depth_maps_generation_quality

, x = depth_maps_generation_filtering_mode

, fill = mean_f_score

, label = paste0(scales::comma(mean_f_score,accuracy = 0.01), "\n(n=", n,")")

)) +

geom_tile(color = "white") +

geom_text(color = "white", size = 3) +

facet_grid(cols = vars(software)) +

scale_x_discrete(expand = c(0, 0)) +

scale_y_discrete(expand = c(0, 0)) +

scale_fill_viridis_c(option = "cividis", begin = 0.3, end = 0.9) +

labs(

x = "filtering mode"

, y = "quality"

, fill = "F-score"

, subtitle = "mean F-score and # of study sites"

) +

theme_light() +

theme(

legend.position = "none"

, axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1)

, panel.background = element_blank()

, panel.grid = element_blank()

, plot.subtitle = element_text(hjust = 0.5)

, strip.text = element_text(color = "black", face = "bold")

)

6.5.2 Bayesian

Kruschke (2015) describes the Hierarchical Bayesian approach to extend our two nominal predictor model to include another nominal predictor (referred to as “subject” here but the methodology applies for other nominal variables):

When every subject contributes many measurements to every cell, then the model of the situation is a straight-forward extension of the models we have already considered. We merely add “subject” as another nominal predictor in the model, with each individual subject being a level of the predictor. If there is one predictor other than subject, the model becomes

\[ y = \beta_0 + \overrightarrow \beta_1 \overrightarrow x_1 + \overrightarrow \beta_S \overrightarrow x_S + \overrightarrow \beta_{1 \times S} \overrightarrow x_{1 \times S} \]

This is exactly the two-predictor model we have already considered, with the second predictor being subject. When there are two predictors other than subject, the model becomes

\[\begin{align*} y = & \; \beta_0 & \text{baseline} \\ & + \overrightarrow \beta_1 \overrightarrow x_1 + \overrightarrow \beta_2 \overrightarrow x_2 + \overrightarrow \beta_S \overrightarrow x_S & \text{main effects} \\ & + \overrightarrow \beta_{1 \times 2} \overrightarrow x_{1 \times 2} + \overrightarrow \beta_{1 \times S} \overrightarrow x_{1 \times S} + \overrightarrow \beta_{2 \times S} \overrightarrow x_{2 \times S} & \text{two-way interactions} \\ & + \overrightarrow \beta_{1 \times 2 \times S} \overrightarrow x_{1 \times 2 \times S} & \text{three-way interactions} \end{align*}\]

This model includes all the two-way interactions of the factors, plus the three-way interaction. (p. 607)

The metric predicted variable with three nominal predictor variables model has the form:

\[\begin{align*} y_{i} \sim & \operatorname{Normal} \bigl(\mu_{i}, \sigma_{y} \bigr) \\ \mu_{i} = & \beta_0 \\ & + \sum_{j} \beta_{1[j]} x_{1[j]} + \sum_{k} \beta_{2[k]} x_{2[k]} + \sum_{f} \beta_{3[f]} x_{3[f]} \\ & + \sum_{j,k} \beta_{1\times2[j,k]} x_{1\times2[j,k]} + \sum_{j,f} \beta_{1\times3[j,f]} x_{1\times3[j,f]} + \sum_{k,f} \beta_{2\times3[k,f]} x_{2\times3[k,f]} \\ & + \sum_{j,k,f} \beta_{1\times2\times3[j,k,f]} x_{1\times2\times3[j,k,f]} \\ \beta_{0} \sim & \operatorname{Normal}(0,100) \\ \beta_{1[j]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{1}}) \\ \beta_{2[k]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{2}}) \\ \beta_{3[f]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{3}}) \\ \beta_{1\times2[j,k]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{1\times2}}) \\ \beta_{1\times3[j,f]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{1\times3}}) \\ \beta_{2\times3[k,f]} \sim & \operatorname{Normal}(0,\sigma_{\beta_{2\times3}}) \\ \sigma_{\beta_{1}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{2}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{3}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{1\times2}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{1\times3}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{\beta_{2\times3}} \sim & \operatorname{Gamma}(1.28,0.005) \\ \sigma_{y} \sim & {\sf Cauchy} (0,109) \\ \end{align*}\]

, where \(j\) is the depth map generation quality setting corresponding to observation \(i\), \(k\) is the depth map filtering mode setting corresponding to observation \(i\), and \(f\) is the processing software corresponding to observation \(i\)

for this model, we’ll define the priors following Kurz who notes that:

The noise standard deviation \(\sigma_y\) is depicted in the prior statement including the argument

class = sigma…in order to be weakly informative, we will use the half-Cauchy. Recall that since the brms default is to set the lower bound for any variance parameter to 0, there’s no need to worry about doing so ourselves. So even though the syntax only indicatescauchy, it’s understood to mean Cauchy with a lower bound at zero; since the mean is usually 0, that makes this a half-Cauchy…The tails of the half-Cauchy are sufficiently fat that, in practice, I’ve found it doesn’t matter much what you set the \(SD\) of its prior to.

# from Kurz:

gamma_a_b_from_omega_sigma = function(mode, sd) {

if (mode <= 0) stop("mode must be > 0")

if (sd <= 0) stop("sd must be > 0")

rate = (mode + sqrt(mode^2 + 4 * sd^2)) / (2 * sd^2)

shape = 1 + mode * rate

return(list(shape = shape, rate = rate))

}

mean_y_temp = mean(ptcld_validation_data$f_score)

sd_y_temp = sd(ptcld_validation_data$f_score)

omega_temp = sd_y_temp / 2

sigma_temp = 2 * sd_y_temp

s_r_temp = gamma_a_b_from_omega_sigma(mode = omega_temp, sd = sigma_temp)

stanvars_temp =

brms::stanvar(mean_y_temp, name = "mean_y") +

brms::stanvar(sd_y_temp, name = "sd_y") +

brms::stanvar(s_r_temp$shape, name = "alpha") +

brms::stanvar(s_r_temp$rate, name = "beta")Now fit the model.

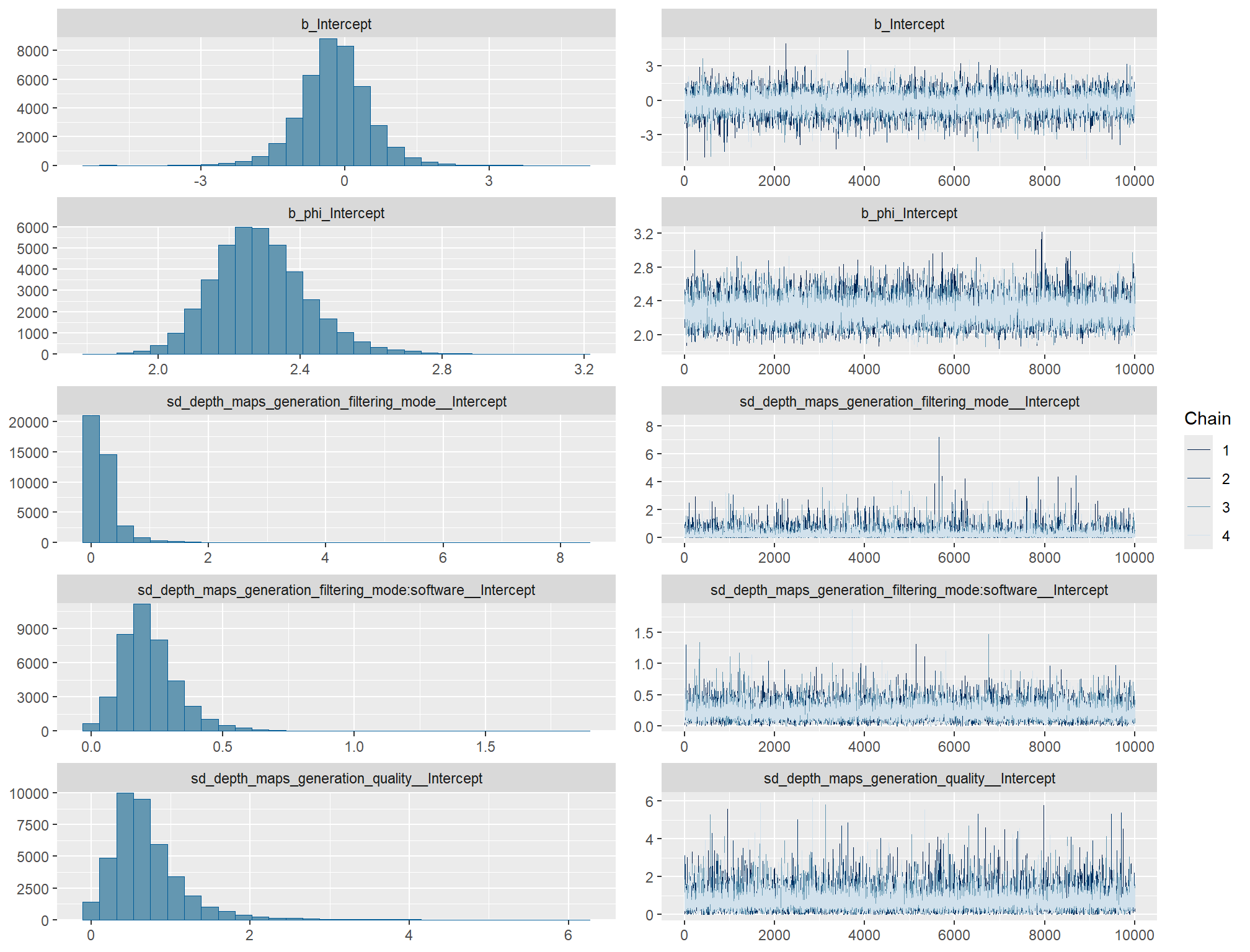

brms_f_mod3 = brms::brm(

formula = f_score ~

# baseline

1 +

# main effects

(1 | depth_maps_generation_quality) +

(1 | depth_maps_generation_filtering_mode) +

(1 | software) +

# two-way interactions

(1 | depth_maps_generation_quality:depth_maps_generation_filtering_mode) +

(1 | depth_maps_generation_quality:software) +

(1 | depth_maps_generation_filtering_mode:software) +

# three-way interactions

(1 | depth_maps_generation_quality:depth_maps_generation_filtering_mode:software)

, data = ptcld_validation_data

, family = brms::brmsfamily(family = "gaussian")

, iter = 20000, warmup = 10000, chains = 4

, control = list(adapt_delta = 0.999, max_treedepth = 13)

, cores = round(parallel::detectCores()/2)

, prior = c(

brms::prior(normal(mean_y, sd_y * 5), class = "Intercept")

, brms::prior(gamma(alpha, beta), class = "sd")

, brms::prior(cauchy(0, sd_y), class = "sigma")

)

, stanvars = stanvars_temp

, file = paste0(rootdir, "/fits/brms_f_mod3")

)

# https://mc-stan.org/misc/warnings.html#divergent-transitions-after-warmup

# https://mc-stan.org/misc/warnings.html#bulk-ess

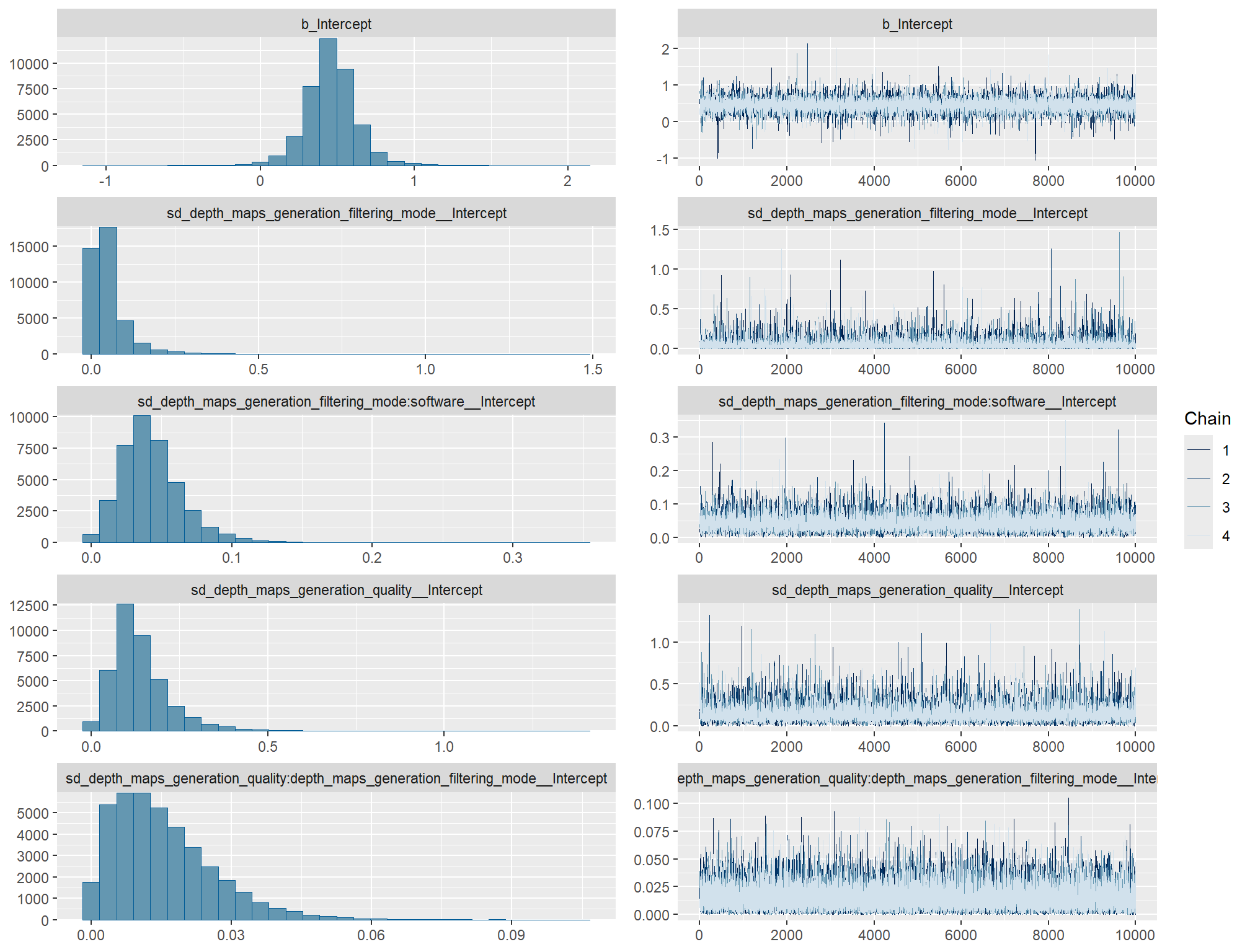

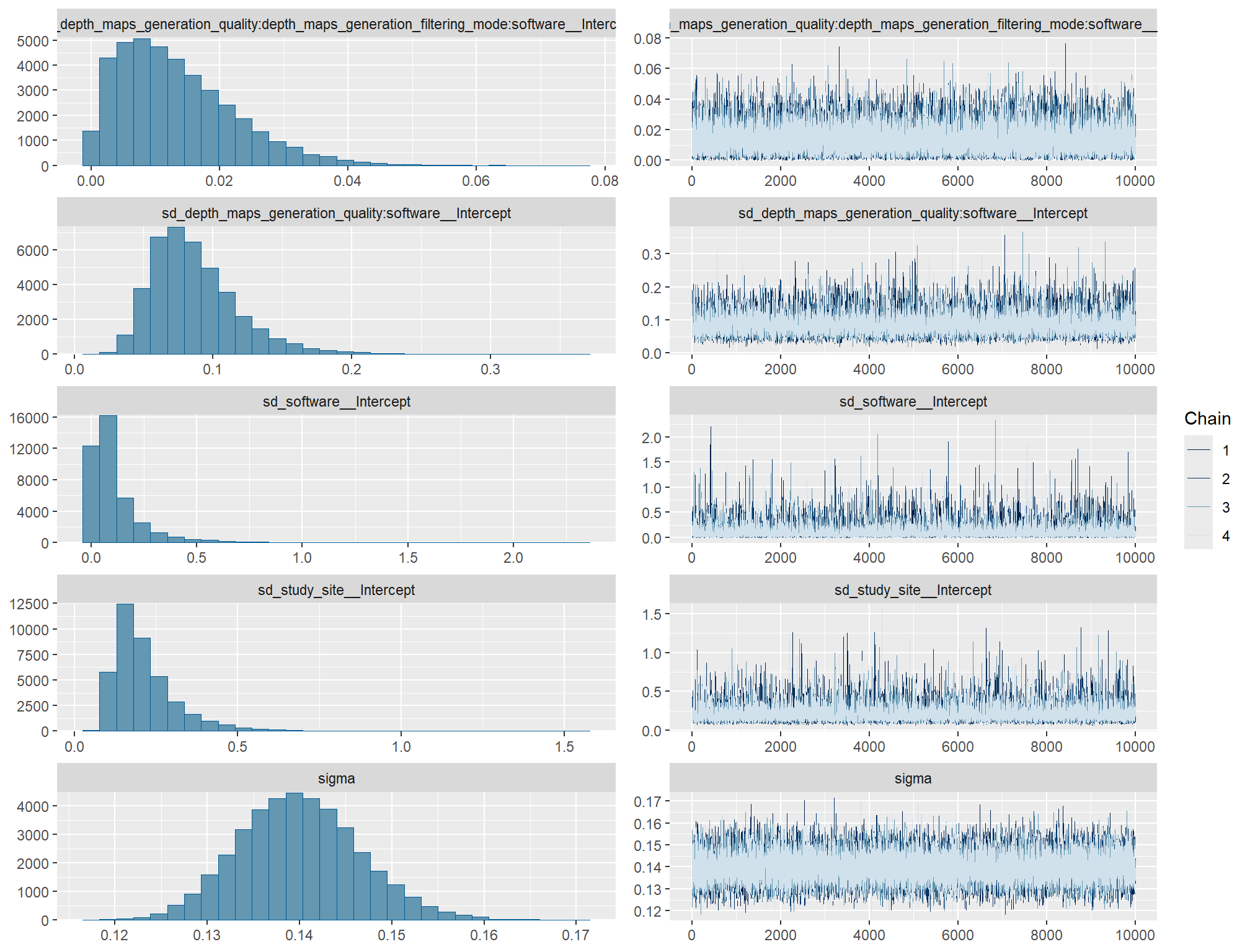

# https://mc-stan.org/misc/warnings.html#tail-ess check the trace plots for problems with convergence of the Markov chains

check the prior distributions

# check priors

brms::prior_summary(brms_f_mod3) %>%

kableExtra::kbl() %>%

kableExtra::kable_styling()| prior | class | coef | group | resp | dpar | nlpar | lb | ub | source |

|---|---|---|---|---|---|---|---|---|---|

| normal(mean_y, sd_y * 5) | Intercept | user | |||||||

| gamma(alpha, beta) | sd | 0 | user | ||||||

| sd | depth_maps_generation_filtering_mode | default | |||||||

| sd | Intercept | depth_maps_generation_filtering_mode | default | ||||||

| sd | depth_maps_generation_filtering_mode:software | default | |||||||

| sd | Intercept | depth_maps_generation_filtering_mode:software | default | ||||||

| sd | depth_maps_generation_quality | default | |||||||

| sd | Intercept | depth_maps_generation_quality | default | ||||||

| sd | depth_maps_generation_quality:depth_maps_generation_filtering_mode | default | |||||||

| sd | Intercept | depth_maps_generation_quality:depth_maps_generation_filtering_mode | default | ||||||

| sd | depth_maps_generation_quality:depth_maps_generation_filtering_mode:software | default | |||||||

| sd | Intercept | depth_maps_generation_quality:depth_maps_generation_filtering_mode:software | default | ||||||

| sd | depth_maps_generation_quality:software | default | |||||||

| sd | Intercept | depth_maps_generation_quality:software | default | ||||||

| sd | software | default | |||||||

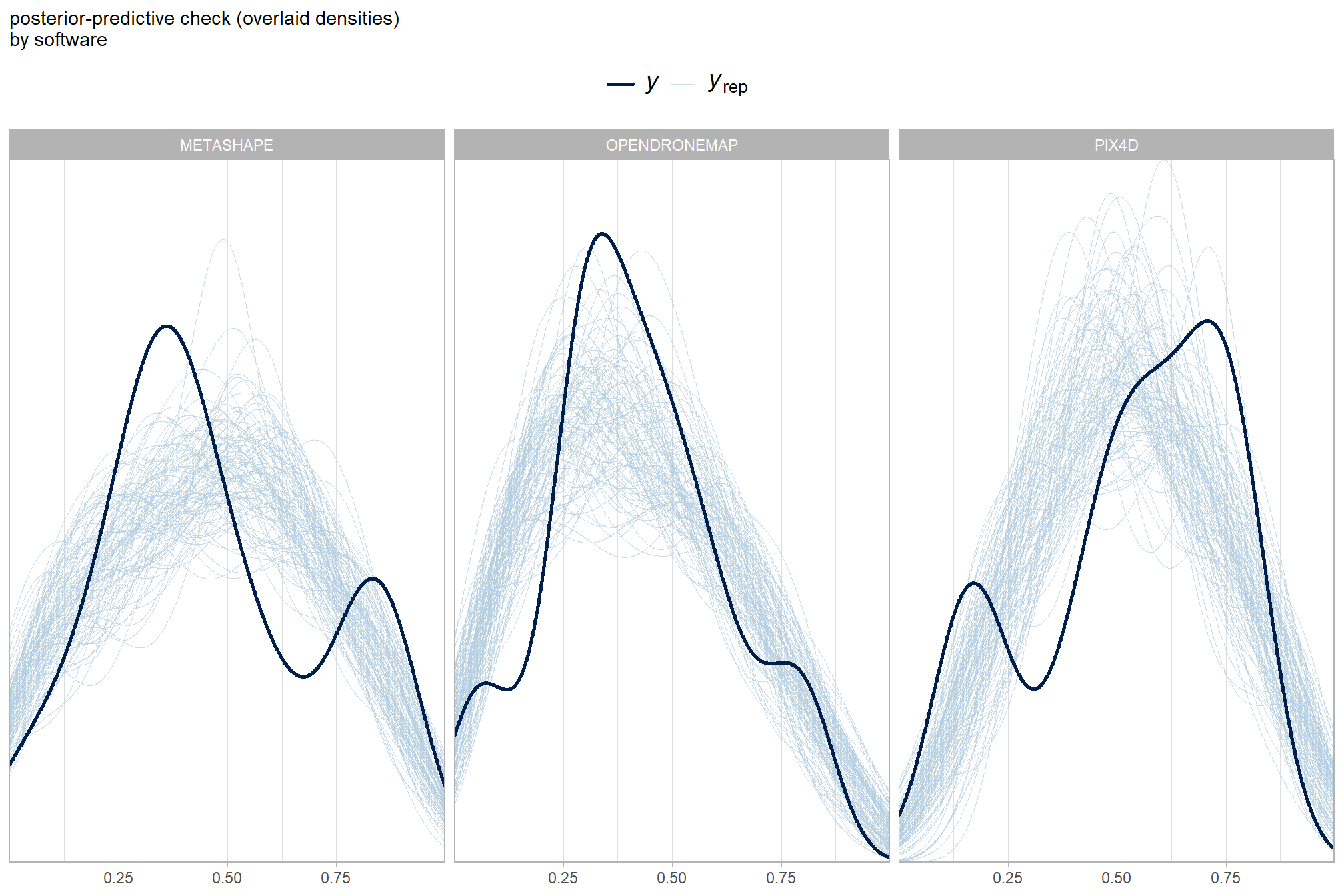

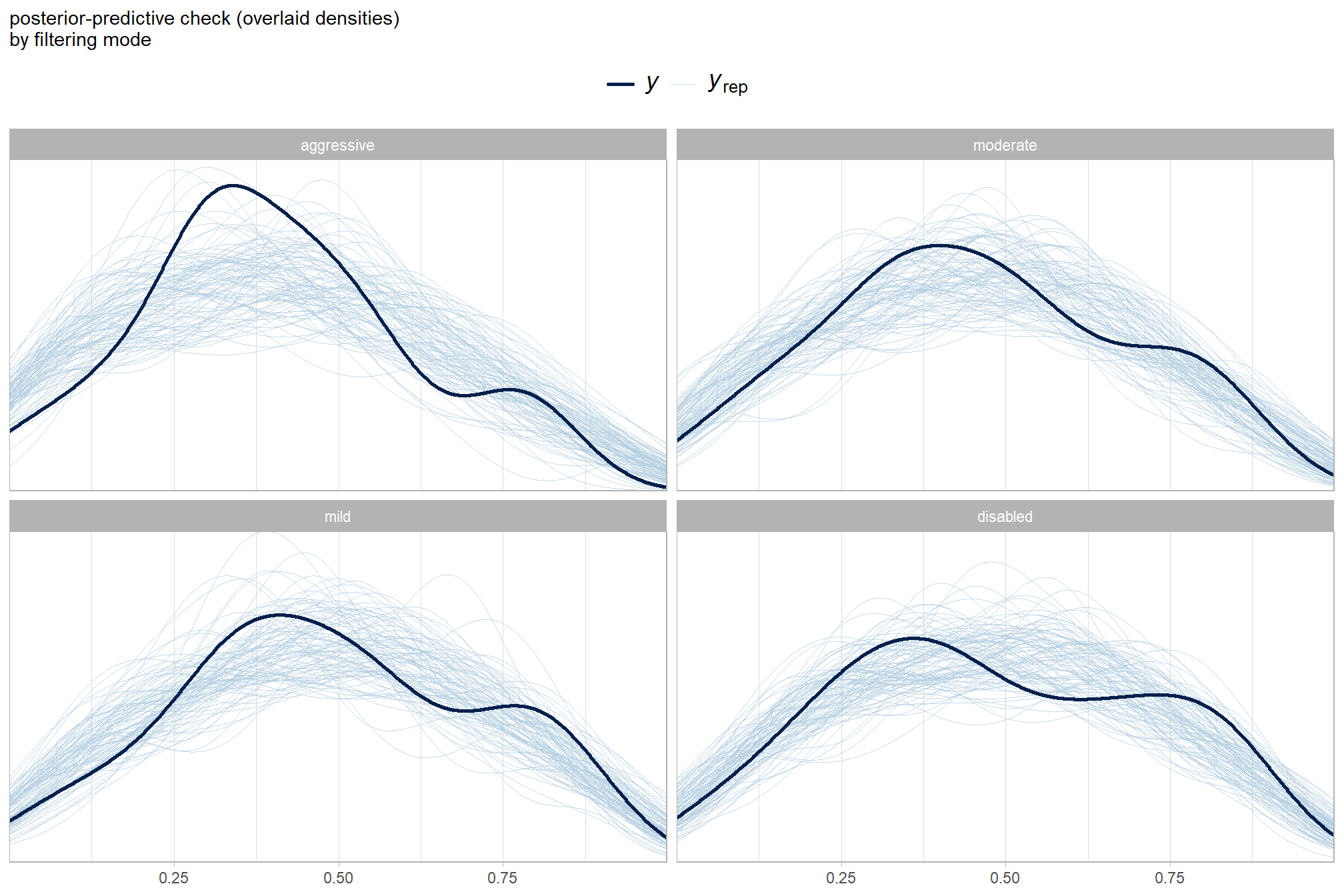

| sd | Intercept | software | default | ||||||